Do you have any idea how to operate a robot? If you're reading this blog, odds are that you in fact might. But that makes you a total weirdo, because most people have no idea how to operate a robot. And why should they? If roboticists do their jobs right, the idea is, no end user should have to learn how to program or how to use a teaching pendant or game controller or whatever. It should all be simple and intuitive and user-friendly.

A common approach has been to let users drag robots around to show them a task, which the robot can then remember and execute autonomously. And there's nothing wrong with that, except that it requires the robot to be human-safe while you're doing it, and it's harder to jump into the middle of a task to tweak something. Also, if the task requires adaptation (like trying to grab a randomly moving object), anything pre-programmed gets thrown out the window, and teleoperation is the only way to go.

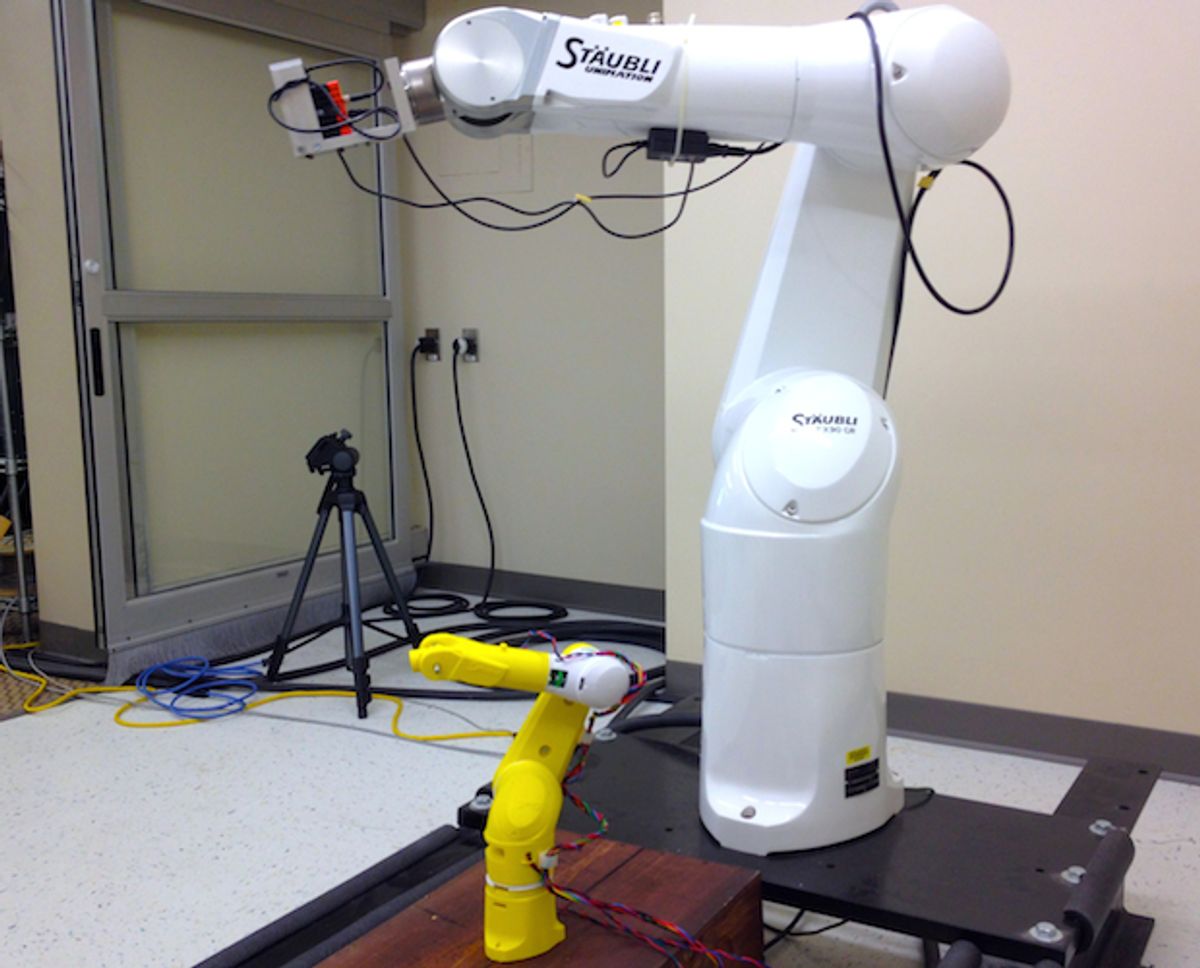

RoboPuppet is a way of remotely controlling a robot that makes so much sense, and is so obviously a good idea, that we're honestly not sure why it's taken this long to implement. It's simply an interface where you 3D print a little tiny version of the robot you want to control, add some encoders, and then use the model to puppet the full-size version, which just mimics whatever it is you do. It's adaptable, it's cheap, and it lets even inexperienced users do some remarkable things.

The total cost for this setup was US $85, the biggest chunk of which goes to an Arduino board. Total construction time was 34 hours, 29 of which were spent 3D printing parts based on a CAD file of the arm. The construction process itself is very simple: you just need to know how to use a 3D printer, how to solder, and some wiring basics to fit it all together. RoboPuppet is more than just a system for constructing hardware, though: it's an integrated method that also includes its own calibration tool along with a "real-time planning method for translating puppet movements into robot movements that are safe and respect the dynamic limits of the target robot."
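To make the calibration and "safe, limit-respecting" translation a little more concrete, here's a minimal sketch of the general idea. It is not the authors' planner, and it assumes a much simpler scheme than theirs. Each puppet encoder reading is converted to a joint angle with a per-joint offset and scale, clamped to the robot's joint limits, and the commanded angle is then moved toward that target no faster than a maximum joint speed. Every name here (`JointCalibration`, `raw_to_angle`, `step_toward`) and all of the numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class JointCalibration:
    """Hypothetical per-joint calibration; not taken from the paper."""
    raw_zero: int         # encoder reading when the puppet joint is at zero
    rad_per_count: float  # radians of joint motion per encoder count
    lower: float          # robot joint lower limit (rad)
    upper: float          # robot joint upper limit (rad)
    max_speed: float      # robot joint speed limit (rad/s)


def clamp(value: float, low: float, high: float) -> float:
    return min(max(value, low), high)


def raw_to_angle(raw: int, cal: JointCalibration) -> float:
    """Convert a raw puppet encoder reading to a joint angle within limits."""
    angle = (raw - cal.raw_zero) * cal.rad_per_count
    return clamp(angle, cal.lower, cal.upper)


def step_toward(current: float, target: float,
                cal: JointCalibration, dt: float) -> float:
    """Move the commanded angle toward the target without exceeding max_speed."""
    max_step = cal.max_speed * dt
    return current + clamp(target - current, -max_step, max_step)


if __name__ == "__main__":
    # Made-up calibration for a single joint read by a 10-bit encoder.
    cal = JointCalibration(raw_zero=512, rad_per_count=0.005,
                           lower=-1.0, upper=1.0, max_speed=0.5)
    commanded = 0.0
    target = raw_to_angle(612, cal)
    for _ in range(5):  # five control ticks at 10 Hz
        commanded = step_toward(commanded, target, cal, dt=0.1)
        print(round(commanded, 3))
```

The point of the rate limit is that a user can flick the puppet around as quickly as they like, and the full-size arm simply follows at whatever pace it can safely manage. A real system would also need to handle acceleration limits and collisions, which this sketch ignores.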

I don't want to belabor (again) why this is such a good idea, but I absolutely love what the RoboPuppet website has to say about why they're doing this:

> Robots are hard to drive. ROBOPuppet exists to make them easier to drive.
>
> One day while working in the lab, some researchers were driving a camera on the end of a robotic arm to capture 3D data. Part of the data to be collected was a shot of the underside of a table. The researchers could see how the robot should be positioned to capture that data but, no matter how hard they tried or how they moved the robot, they could not get the robot to safely enter that configuration. The movements necessary to get into that position required moving multiple joints at once in a very fluid and complex fashion. They could see how it could be done but the counter intuitive nature of the controller prevented them from achieving their goal. It was incredibly frustrating. It was at that point ROBOPuppet was first considered.

We should point out that the researchers behind RoboPuppet have not yet been able to quantify exactly how much easier their method is, although they do say that inexperienced children as young as 6 were able to perform reaching tasks in a trial without any instruction whatsoever. It's also true that this version of RoboPuppet has some limitations (encoders with rotation limits, no physical stops for joint limits, only models for a few joint types), but the researchers are aware of all this, and they're working to improve the system. Next up, they'll be building themselves a little puppet version of a Baxter.

A way to make this idea even better (since we like wildly speculating about stuff like this) might be to incorporate a surface (or surfaces) with QR codes around the model. Sensor data from the real robot (or from sensors around the real robot) could then be used in a virtual environment to give the user more information about what the real robot is dealing with as they use the puppet. And that's just one idea: RoboPuppet is ripe for creative adaptations, and being so inherently accessible and affordable makes it very appealing to do exactly that.

"ROBOPuppet: Low-Cost, 3D Printed Miniatures for Teleoperating Full-Size Robots," by Anna Eilering, Giulia Franchi and Kris Hauser from Indiana University, was presented last month at IROS 2014 in Chicago.

[ RoboPuppet ]

Evan Ackerman is a senior editor at IEEE Spectrum. Since 2007, he has written over 6,000 articles on robotics and technology. He has a degree in Martian geology and is excellent at playing bagpipes.