This week at the World Medical Innovation Forum in Boston, industry experts gathered to discuss the role of artificial intelligence (AI) in healthcare. While AI has made waves by diagnosing certain diseases more accurately than doctors, there’s another area where the technology is being applied that might eventually have an even greater impact on health.

Today, at least 18 pharmaceutical companies and more than 75 startups are applying machine learning to drug discovery—the complex, expensive process of identifying and testing new drug compounds. These companies are betting hundreds of millions of dollars that AI will reduce costs, shorten timelines, and lead to new and better drugs.

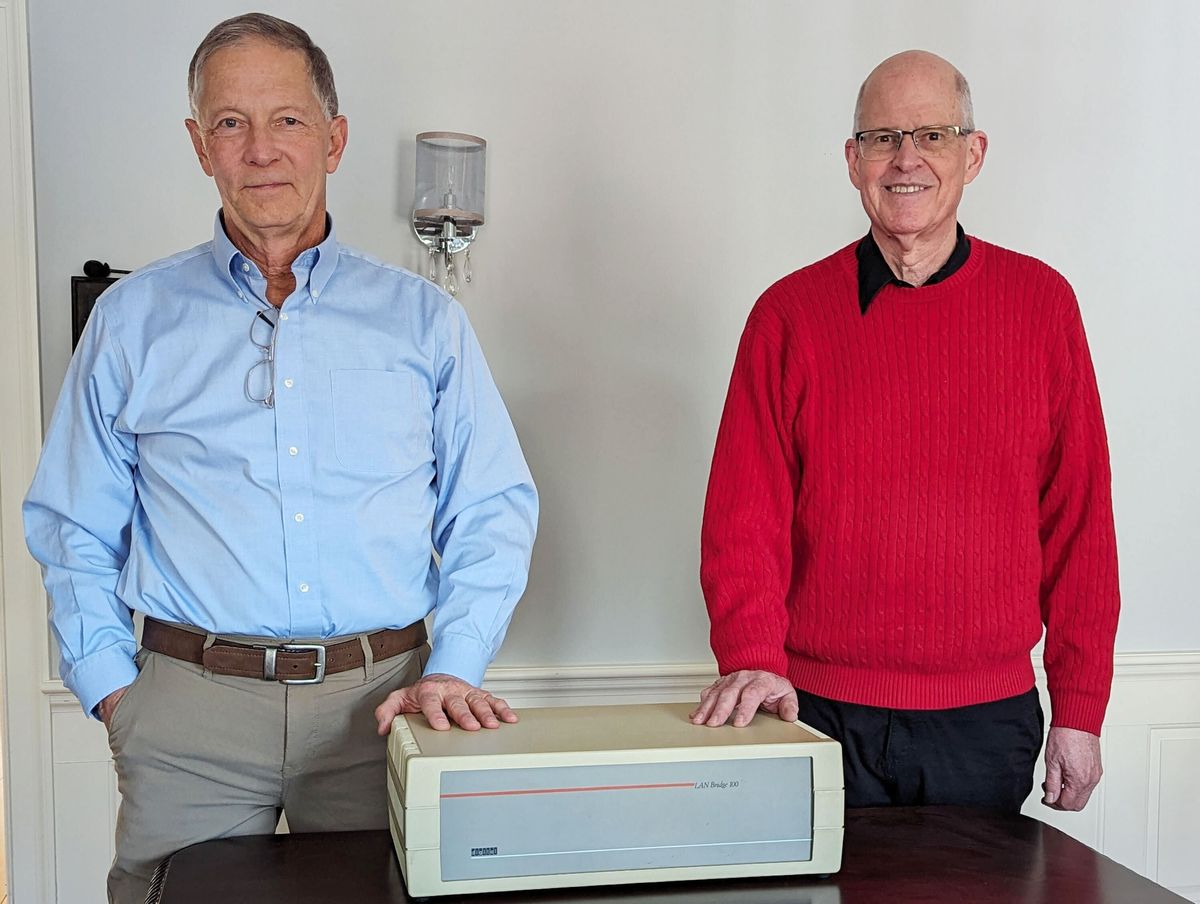

At the Forum on Monday, Exscientia founder and CEO Andrew Hopkins, formerly a professor at the University of Dundee in Scotland and a 10-year veteran of Pfizer, spoke about how AI can improve drug development. Exscientia, founded in 2012, uses AI-driven systems to automate drug design while still “mimicking human creativity,” says Hopkins. The company has recently been racking up awards and inking deals with major industry players, including GlaxoSmithKline.

Before he jetted back to his native United Kingdom, IEEE Spectrum caught up with Hopkins to get his thoughts on how AI handles messy biological data, whether there’s too much hype in the field, and that age-old question—will machines supplant humans? The interview has been edited for length and clarity.

IEEE Spectrum: What are the one or two key ways you think AI will improve or alter the process of drug discovery?

Andrew Hopkins: By having the AI make better designs and better decisions about what compounds to make and test, we are ultimately conducting fewer experiments. Fewer experiments means you’re saving time and money.

The other big advantage is helping us identify and select [drug] targets. We use a wide range of predictive models; in fact, we’re agnostic to model type. It depends on the datasets and the challenge you’re facing. Of all the methodologies we’ve investigated, we’re finding that evolutionary computing is the one that most closely mimics human creativity. One of the issues in computer-aided drug design over the past 20 years was that the compounds being generated were often quite unattractive for chemists to synthesize. The beautiful thing now is that the compounds developed by our systems really look and feel and smell like something a human chemist would design and want to synthesize.

Ultimately, we want AI to lead to better drugs. In the projects we’ve undertaken so far, we are noticing that the molecules we generate tend to be more efficient, with lower molecular weight and sometimes better biopharmaceutical properties, than other molecules in the same class for a given drug target. We think this is fundamentally a result of the algorithmic approach. Because [the molecules] are under a certain selection pressure, there has to be a reason for every atom to survive in a particular molecule: Is it adding to the potency, is it contributing to the required selectivity, is it adding drug metabolism benefits? So there are interesting properties coming out that are directly derived from the algorithms.
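Hopkins’ point about selection pressure can be illustrated with a toy genetic algorithm. This is not Exscientia’s system: the sketch assumes a “molecule” is just a set of hypothetical fragments, each with an invented potency contribution and mass, and scores a design as potency minus a per-dalton penalty. Under that pressure, fragments that add mass without adding potency tend to be selected out.

```python
import random

# Hypothetical fragment library: name -> (potency contribution, mass in daltons).
# All values are invented for illustration only.
FRAGMENTS = {
    "amide": (2.0, 43.0),
    "phenyl": (1.5, 77.0),
    "fluoro": (0.5, 19.0),
    "methyl": (0.0, 15.0),  # adds mass but no potency
    "ethyl": (0.0, 29.0),   # adds mass but no potency
}
MASS_PENALTY = 0.01  # assumed fitness cost per dalton


def fitness(molecule: frozenset) -> float:
    """Potency gained minus a penalty for every dalton the design carries."""
    potency = sum(FRAGMENTS[f][0] for f in molecule)
    mass = sum(FRAGMENTS[f][1] for f in molecule)
    return potency - MASS_PENALTY * mass


def mutate(molecule: frozenset, rng: random.Random) -> frozenset:
    """Toggle one randomly chosen fragment: add it if absent, drop it if present."""
    fragment = rng.choice(sorted(FRAGMENTS))
    return molecule ^ {fragment}


def evolve(generations: int = 100, pop_size: int = 20, elite: int = 5, seed: int = 0) -> frozenset:
    rng = random.Random(seed)
    # Start from random subsets of the fragment library.
    population = [
        frozenset(f for f in FRAGMENTS if rng.random() < 0.5) for _ in range(pop_size)
    ]
    for _ in range(generations):
        # Selection pressure: only the fittest designs survive to become parents.
        population.sort(key=fitness, reverse=True)
        parents = population[:elite]
        children = [mutate(rng.choice(parents), rng) for _ in range(pop_size - elite)]
        population = parents + children
    return max(population, key=fitness)


best = evolve()
print(sorted(best), round(fitness(best), 2))
```

With these invented numbers, the surviving design keeps the amide, phenyl and fluoro fragments and drops methyl and ethyl, which contribute only mass. A real design score would combine many predicted properties rather than one additive tally.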

IEEE Spectrum: Exscientia has many partnerships and collaborations with pharma and biotech companies. Have any compounds discovered by your technology entered into clinical trials? If not, how soon would you expect that to happen?

Hopkins: We are seeing the first molecules designed by algorithms heading into [clinical trials] now. We ourselves have delivered three candidates with a partner, and a decision on a fourth, one of our own molecules, is imminent.

IEEE Spectrum: Are there certain types of drug compounds for which this approach works best?

Hopkins: Our company specializes in small-molecule drugs, which are usually below 500 daltons. These are drugs that can be taken as a pill or given as an injection. We also tend to focus on targets that are ‘druggable,’ which means it is relatively easier to discover a small-molecule drug that acts on them. We haven’t yet developed the technology for biologics or the antibody space, but that’s certainly a possibility.
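For a sense of scale, a molecule’s weight in daltons is the sum of its atomic weights. The sketch below assumes simple flat formulas (no parentheses, hydrates, or charges) and uses rounded standard atomic weights; aspirin and ibuprofen fall well under 500 daltons, while the antibiotic vancomycin is far above it.

```python
import re

# Rounded standard atomic weights; g/mol is numerically equal to daltons per molecule.
ATOMIC_WEIGHT = {"H": 1.008, "C": 12.011, "N": 14.007, "O": 15.999,
                 "F": 18.998, "S": 32.06, "Cl": 35.45}


def molecular_weight(formula: str) -> float:
    """Sum atomic weights for a flat formula such as 'C9H8O4' (no parentheses)."""
    total = 0.0
    # Each match is (element symbol, optional count); a missing count means 1.
    for element, count in re.findall(r"([A-Z][a-z]?)(\d*)", formula):
        total += ATOMIC_WEIGHT[element] * (int(count) if count else 1)
    return total


for name, formula in [("aspirin", "C9H8O4"), ("ibuprofen", "C13H18O2"),
                      ("vancomycin", "C66H75Cl2N9O24")]:
    mw = molecular_weight(formula)
    print(f"{name}: {mw:.1f} Da, under 500 Da: {mw < 500}")
```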

IEEE Spectrum: In the 1980s, computer-aided drug design (CADD) made big waves, but in the end, it did not supplant traditional drug discovery. Today, AI appears to be reaching a similar level of hype. Do you think it will live up to those expectations or is the field over-promising?

Hopkins: If you go back to the 1980s, computer-aided drug design and the use of computers in structural biology actually did become ubiquitous in drug discovery. Many projects now use those techniques. The more interesting question is: Why haven’t we seen subsequent productivity increases over the past few years? Why haven’t many technologies—CADD, high-throughput screening, computational chemistry, genomics—yet made an impact on timelines?

Our thesis is that it’s because when you add on new technologies, you add on new complexity and more decision-making, and what hasn’t changed is how long human decision-making takes. AI is fundamentally different from other approaches because it changes how decisions are made. If you use AI as just an add-on technology, you won’t get the full benefits of it. The key to AI is figuring out how to redesign drug discovery processes to benefit from it.

There are companies that do hype and promise, but we’re not one of them. We’ve been very careful and circumspect in developing our technology over the past four years. It has been a cultural challenge, actually, to suggest to people that algorithms can do something as creative as design.

A lot of people think data has to be perfect before it is sent into an AI system. And people think because biology is messy and unpredictable, we can’t use AI techniques in the space. But it’s precisely because of the complexity of the decision-making that we should use AI. For example, Bayesian approaches are particularly applicable to messy data, where you can embrace uncertainty in the data. AI doesn’t require perfect data for perfect predictions. It’s actually about how you use it in these imperfect, messy, complicated situations to find a signal amid all the noise.
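One textbook way to “embrace uncertainty” in noisy measurements is a conjugate Gaussian update: a prior belief about a quantity is combined with each measurement, weighted by how reliable that measurement is assumed to be. The sketch below is a generic Bayesian calculation, not a description of Exscientia’s models; the pIC50 prior, the readings, and the assay noise levels are all hypothetical.

```python
import math


def gaussian_posterior(prior_mean, prior_sd, measurements):
    """Combine a Gaussian prior with noisy Gaussian measurements (known noise).

    measurements: list of (value, sd) pairs, where sd is that assay's assumed noise.
    Returns (posterior_mean, posterior_sd).
    """
    precision = 1.0 / prior_sd**2  # precision = 1 / variance
    weighted_sum = prior_mean * precision
    for value, sd in measurements:
        w = 1.0 / sd**2  # noisier measurements receive less weight
        precision += w
        weighted_sum += value * w
    return weighted_sum / precision, math.sqrt(1.0 / precision)


# Hypothetical example: a prior belief about a compound's pIC50 plus three
# replicate readings, the last one from a noisier assay.
mean, sd = gaussian_posterior(6.0, 1.0, [(7.1, 0.5), (6.4, 0.5), (7.6, 1.5)])
print(f"posterior pIC50 = {mean:.2f} +/- {sd:.2f}")
```

The noisier third reading pulls the estimate up only slightly, and the posterior standard deviation shrinks as evidence accumulates; this script prints `posterior pIC50 = 6.71 +/- 0.33`.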

IEEE Spectrum: If we re-design the process of drug discovery to include AI, will we still need bench scientists studying the basic biology of diseases and compounds?

Hopkins: Absolutely. One key thing we realized is that you will always need a close relationship between experimentalists and the AI information technologists. What we’re ultimately trying to do is to ask the question, “Which experiments will provide us with the best information to allow us to move the project forward as quickly as possible?”

IEEE Spectrum: Yet some say that AI requires so much input data that, by the time enough has been gathered, pharma scientists would already have all the information they need to design a good drug.

Hopkins: We did a human-versus-machine study with Sunovion, where we pitted the algorithms against 10 experienced medicinal chemists. Using data from a real candidate-drug optimization project, we created a simulation of the project to see how quickly humans and machines could learn about it: each started with just 10 data points, and we measured how quickly they could identify the best compounds in the dataset. The algorithm beat 9 out of 10 humans in that study. The latest version of the algorithm now outperforms all 10 people in the test.
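The kind of retrospective simulation described here can be sketched as a replay of a finished project: every compound’s potency is already known, but a strategy only “sees” a value after choosing to make that compound. The dataset, the one-dimensional descriptor, and both strategies below are hypothetical stand-ins, not the Sunovion study’s protocol or data.

```python
import random


def experiments_to_find_best(potency, pick_next, n_start=10, seed=0):
    """Replay a finished project: a compound's potency is revealed only once it is 'made'.

    potency: measured values indexed by compound id (hypothetical data).
    pick_next: strategy(tested, untested) -> id of the next compound to make.
    Returns how many compounds were made, after the starting set, before the best appeared.
    """
    rng = random.Random(seed)
    best_id = max(range(len(potency)), key=potency.__getitem__)
    tested = {i: potency[i] for i in rng.sample(range(len(potency)), n_start)}
    made = 0
    while best_id not in tested:
        untested = [i for i in range(len(potency)) if i not in tested]
        choice = pick_next(tested, untested)
        tested[choice] = potency[choice]  # one simulated experiment
        made += 1
    return made


def make_random_strategy(seed=1):
    """Baseline: make untested compounds in random order."""
    rng = random.Random(seed)
    return lambda tested, untested: rng.choice(untested)


def nearest_to_best(tested, untested):
    """Hypothetical model-guided strategy: make the analogue closest to the current best.

    Compound ids double as positions along a single made-up descriptor axis.
    """
    best = max(tested, key=tested.get)
    return min(untested, key=lambda i: (abs(i - best), i))


# Hypothetical project: 100 compounds, with potency peaking at compound 70.
potency = [-(x - 70) ** 2 for x in range(100)]
print("random:", experiments_to_find_best(potency, make_random_strategy()))
print("guided:", experiments_to_find_best(potency, nearest_to_best))
```

A single replay is anecdotal; comparing strategies fairly would mean averaging the counts over many random starting sets (different `seed` values).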

The problem isn’t necessarily the same as the one Google or Facebook would have: The issue isn’t big data, but the paucity of data on [new] projects. We’ve found active learning to be far more important than deep learning in this domain, and we’ve developed a whole set of algorithms around the concept. We’re interested in how you can learn more efficiently from a small amount of data, and which data you need to improve your models rapidly.
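Active learning can be illustrated with uncertainty sampling, a standard textbook strategy swapped in here for illustration: fit a model to the few measurements available, then measure next wherever the model is least certain. The sketch assumes a Bayesian linear model on one made-up descriptor, with assumed prior and noise precisions (`alpha`, `beta`); it is not one of Exscientia’s algorithms.

```python
def posterior_cov(xs, alpha=1.0, beta=25.0):
    """Posterior covariance of (intercept, slope) in Bayesian linear regression.

    alpha: prior precision on the weights; beta: noise precision. Both are assumptions.
    Returns the entries (s00, s01, s11) of the symmetric 2x2 matrix.
    """
    # Precision matrix A = alpha * I + beta * sum(phi(x) phi(x)^T), with phi(x) = (1, x).
    a00 = alpha + beta * len(xs)
    a01 = beta * sum(xs)
    a11 = alpha + beta * sum(x * x for x in xs)
    det = a00 * a11 - a01 * a01
    return a11 / det, -a01 / det, a00 / det  # closed-form inverse of a 2x2 matrix


def predictive_variance(x, cov, beta=25.0):
    """Noise variance plus the model's own uncertainty at descriptor value x."""
    s00, s01, s11 = cov
    return 1.0 / beta + s00 + 2 * s01 * x + s11 * x * x


def next_query(labelled_xs, pool, alpha=1.0, beta=25.0):
    """Uncertainty sampling: pick the candidate whose prediction is least certain."""
    cov = posterior_cov(labelled_xs, alpha, beta)
    return max(pool, key=lambda x: predictive_variance(x, cov, beta))


# Hypothetical setup: three measured compounds bunched mid-range on one descriptor.
labelled = [0.4, 0.5, 0.6]
pool = [i / 10 for i in (0, 1, 2, 3, 7, 8, 9, 10)]
picks = []
for _ in range(3):
    x = next_query(labelled, pool)
    picks.append(x)
    labelled.append(x)
    pool.remove(x)
print(picks)
```

Starting from three measurements bunched mid-range, the model asks for 1.0, then 0.0, then 0.9, the candidates farthest from the existing data. For this particular model the posterior covariance depends only on where measurements are taken, not on the values observed, so the query order could be planned in advance; models with learned noise or nonlinear features lose that property.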

Megan is an award-winning freelance journalist based in Boston, Massachusetts, specializing in the life sciences and biotechnology. She was previously a health columnist for the Boston Globe and has contributed to Newsweek, Scientific American, and Nature, among others. She is the co-author of a college biology textbook, “Biology Now,” published by W.W. Norton. Megan received an M.S. from the Graduate Program in Science Writing at the Massachusetts Institute of Technology, a B.A. at Boston College, and worked as an educator at the Museum of Science, Boston.