On Wednesday, in the former San Francisco church that now serves as the headquarters of the Internet Archive, pioneers of the Internet and the World Wide Web joined together to call for a new kind of Web—a decentralized Web. It was a call for change, a call for action, and a call to develop technology that would “lock the Web open.”

And in the audience were the developers and entrepreneurs and thinkers who are going to try to answer that call. These men and women (because the next Web will have mothers as well as fathers), many sporting dreadlocks or tattoos, grew up with the Internet and love the Web, but believe it can be better and are determined to make it so.

This meeting, the Decentralized Web Summit, was part of a three-day event organized by Brewster Kahle, founder of the Internet Archive, and sponsored by the Internet Archive, the Ford Foundation, Google, Mozilla, and others. It was as much a revival meeting as a tech conference, a feeling enhanced by the rows of pews that served as seating. There was a lot of fan-boying and fan-girling going on, as the tech leaders of tomorrow buzzed about how they might get this or that luminary to sign their laptops. (There were no printed programs; had there been, I'm guessing the rush for autographs would have been intense.)

Today’s Web has a number of problems, the attendees agreed. The most obvious are the kind of surveillance exposed by Edward Snowden’s revelations and the blocking of access by systems like China’s Great Firewall.

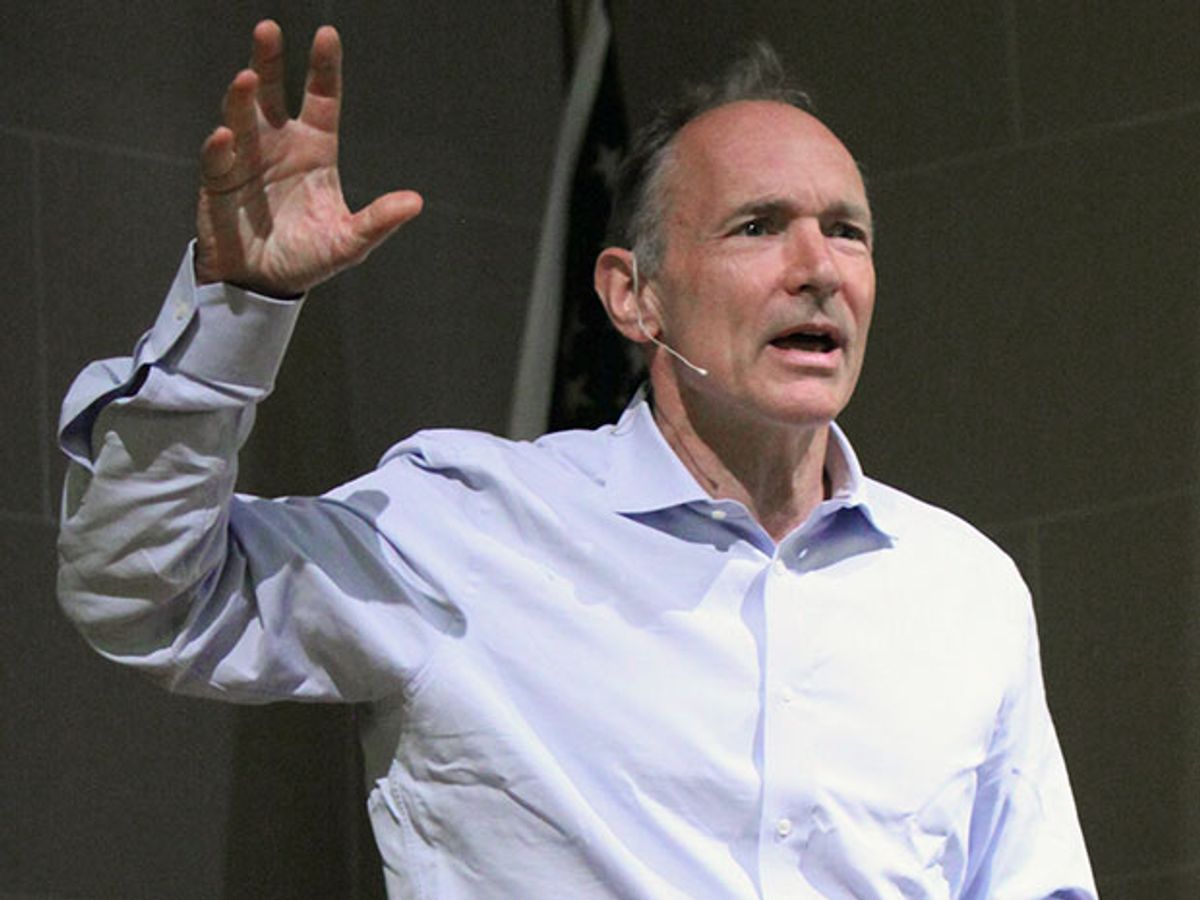

Tim Berners-Lee, who founded the Web and is now director of the World Wide Web Consortium, pointed out how far it has strayed from the original dreams for the technology. “That utopian leveling of society, the reinvention of the systems of debate and government—what happened to that?” he asked. “We hoped everyone would be making their own web sites—turns out people are afraid to.”

But even basic things people want to do aren’t possible, Berners-Lee said, because instead of being a true, interconnected web, the Web has become a collection of silos. “People have their friends on Facebook and some photos on Flickr and their colleagues on LinkedIn. All they want to do is share the photos with the colleagues and the friends, and they can’t. Which is really stupid. You either have to tell Flickr about your Facebook friends, or move your photos to Facebook and LinkedIn separately, or build and run a third application to build a bridge between the two.”

He also criticized the model in which users trade their privacy for free access to Internet services, and said it doesn’t have to be that way. “The deal the consumer makes is a myth,” he said. “It is a myth that it has to be, it is a myth that everybody is happy with it, it is a myth that it is optimal” for anybody, whether consumers or the marketing machine.

“I’m frustrated on behalf of everybody using the Web at the moment,” said Berners-Lee. “But excited that we can really decentralize the web, and that we have the group of people here” who can do it.

Berners-Lee and other speakers at the event pointed out that a key problem with today’s Web is its ephemeral nature, only partly compensated for by Kahle’s Wayback Machine, which Kahle himself called a kluge. Because Web pages “blink on and offline” when businesses close or sites move, breaking hyperlinks, the Web is not reliable.

Another major problem is sketchy privacy controls, which leave users unsure who or what is monitoring their activity and data.

Still, we’re all using the Web because, Kahle acknowledged, “It is fun. It’s a jungle out there, but it’s a fun jungle to go play in.”

It can be a lot better, however. “We can go for a trifecta, make it reliable, private, and still fun,” he said, adding, “Extra credit if we can make it that people can make money by publishing without going through a third party.”

This all must be “baked into the code,” he insisted.

“Code is law,” said Kahle. “The way we code the Web is how we live our lives online.” We can bake privacy in, he said; we can bake the First Amendment in; and we can bake openness in.

Cory Doctorow, author and special advisor at the Electronic Frontier Foundation, put it a different way: “When you go on a diet,” he said, “throw away all your Oreos.”

“The reason the web ceases to be decentralized,” Doctorow said, “is that there are a lot of short-term gains to centralizing things. The Web is centralized today because people like you make compromises.”

Most of the technology to fix the Web, the speakers agreed, already exists; it just needs to be identified and assembled into a workable, unified system.

Vint Cerf, known as one of the fathers of the Internet and now at Google, tossed out a rapid-fire list of suggestions about where to look for this technology. Among these, he asked the developers to:

- Consider how Google Docs propagates changes to multiple editors in real time.

- Think about some sort of publish/subscribe system, in which a Web-page creator can regularly hit a publish command that makes the page available for archiving, and various Web archives can subscribe to receive updates.

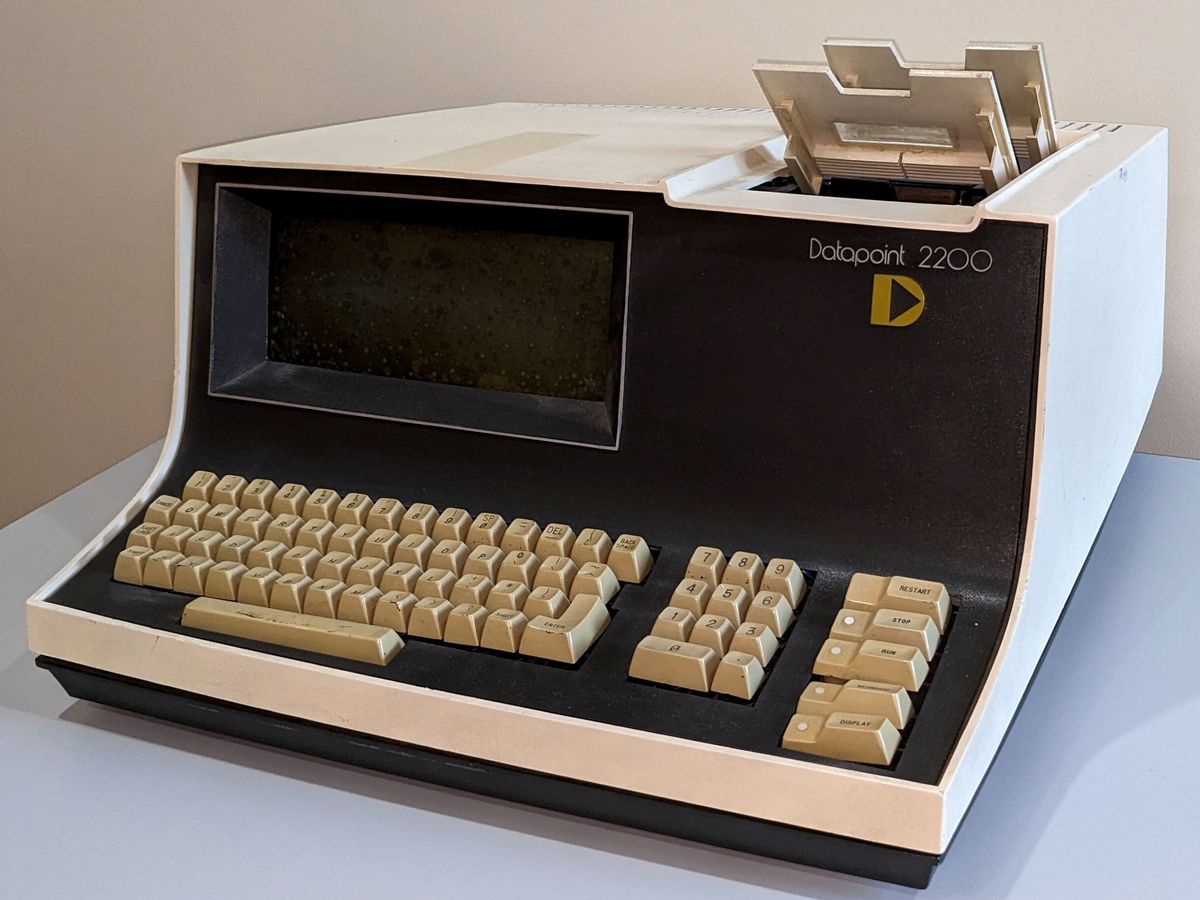

- Think about creating an archive of software as well, one that may have to include emulations of defunct hardware and operating systems to keep the Web backward compatible.

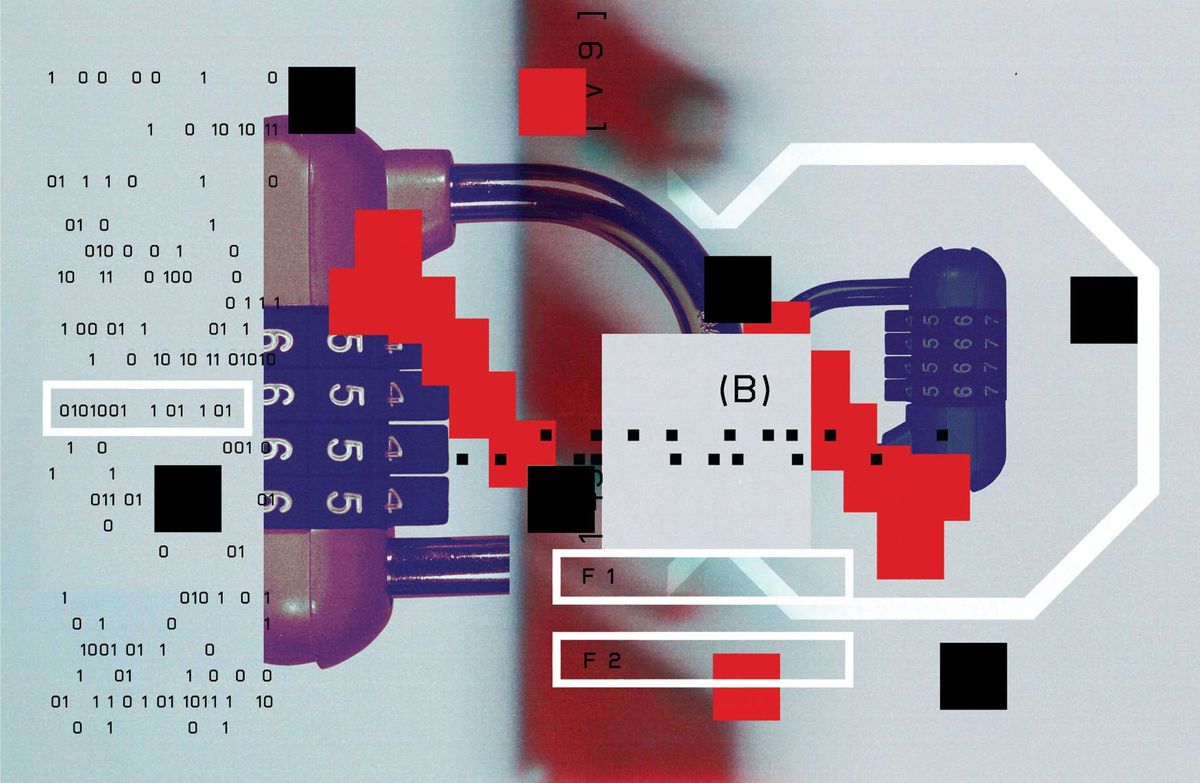

- Figure out a way to authenticate Web sites, so only true versions go down in history, and consider how powerful that tool would be for a society interested in verifying financial transactions or intellectual property.

- Figure out how to protect copyright for a time, but then automatically unlock those protections when copyright expires.

Berners-Lee also had some advice:

- Change the naming system, and stop thinking of the URL as a location. It’s a name, in a format he picked to look like a Unix file name simply because people were comfortable with that.

- Consider a dot-archive: you get a name there forever, and anything you put there stays, even after you die.

- Surface the data behind Web pages. Maybe each page needs two views: the plain page that you can view with a standard browser, and the data layer that you can explore with more powerful tools.

And Kahle added to the list:

- Consider the Amazon cloud. “It is a decentralized system that has some great features,” he said, albeit one under the control of a single owner.

- Look at JavaScript, which can run on a distributed computing platform and could be the operating system for the new Web.

- Think about blockchain and Bitcoin as key components of the next Web.

- Don’t forget about public-key encryption; it was illegal to distribute when Berners-Lee conceived the first Web, but today it has a role to play.

- Don’t discount WordPress, which has been embraced by large numbers of people; perhaps the new Web should have a decentralized, WordPress-like service.

EFF’s Doctorow said that, in addition to the right technology, the new Web must be based on some key moral principles that, like the U.S. Constitution, will prevent “our wise leaders of tomorrow” from being “pressured into making compromises.” He suggested two:

- Computers obey their owners. That is, when a computer receives conflicting instructions from its owner and a remote party, the owner always wins.

- True facts about security vulnerabilities are always legal to talk about. That is, though there can be codes of conduct about responsible disclosure of bugs, the state should never control that speech.

Was this the day the Web started to change? Will the Decentralized Web Summit be looked back on like the first Hackers’ conference or the Mother of All Demos? Only history will tell, but just in case, the organizers gathered the group together for a big photograph. And if the Web becomes decentralized and permanently archived, this picture will never disappear.

What comes next is unclear, but the attendees appeared determined to figure it out. Said Kahle, “Do we need VC funding? There are foundations that could get us over the hump, is that what is needed? Or would money hinder rather than help? Should we have a series of conferences? What about prizes? What do we do now?”

Those sounded like questions, but really they were a call to action, urging the charged-up attendees to go out and do it.

Concluded Kahle: “Let’s go build the decentralized web!”

Tekla S. Perry is a senior editor at IEEE Spectrum. Based in Palo Alto, Calif., she's been covering the people, companies, and technology that make Silicon Valley a special place for more than 40 years. An IEEE member, she holds a bachelor's degree in journalism from Michigan State University.