The evolution and wider use of artificial intelligence (AI) in our society is creating an ethical crisis in computer science like nothing the field has ever faced before.

“This crisis is in large part the product of our misplaced trust in AI in which we hope that whatever technology we denote by this term will solve the kinds of societal problems that an engineering artifact simply cannot solve,” says Julia Stoyanovich, an Assistant Professor in the Department of Computer Science and Engineering at the NYU Tandon School of Engineering, and at the Center for Data Science at New York University. “These problems require human discretion and judgement, and where a human must be held accountable for any mistakes.”

Stoyanovich believes that the strikingly good performance of machine learning (ML) algorithms on tasks ranging from game playing to perception to medical diagnosis, together with the fact that it is often hard to understand why these algorithms do so well and why they sometimes fail, is surely part of the issue. But she is also concerned that simple rule-based algorithms can have discriminatory results. One example is a score-based ranker that computes a score for each job applicant, sorts applicants by score, and then suggests interviewing the top-scoring three. “The devil is in the data,” says Stoyanovich.
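To make the idea of a score-based ranker concrete, here is a minimal Python sketch. The applicant fields, the weights, and the sample data are all hypothetical and chosen only for illustration; they do not come from Stoyanovich's work or from any real hiring system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Applicant:
    name: str
    years_experience: float  # hypothetical input feature
    test_score: float        # hypothetical input feature, 0 to 100


def score(applicant, w_experience=2.0, w_test=0.5):
    """Weighted sum of the features. The weights are illustrative assumptions."""
    return w_experience * applicant.years_experience + w_test * applicant.test_score


def top_k(applicants, k=3):
    """Sort applicants by score, highest first, and keep the top k."""
    return sorted(applicants, key=score, reverse=True)[:k]


applicants = [
    Applicant("Ana", 10, 70),   # score 55.0
    Applicant("Ben", 8, 80),    # score 56.0
    Applicant("Chen", 4, 95),   # score 55.5
    Applicant("Dee", 9, 60),    # score 48.0
    Applicant("Eli", 3, 90),    # score 51.0
]

shortlist = top_k(applicants)
print([a.name for a in shortlist])
```

The rule itself is only a weighted sum and a sort. Whatever patterns the input features carry, including any that reflect past inequities, pass straight through to the shortlist.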

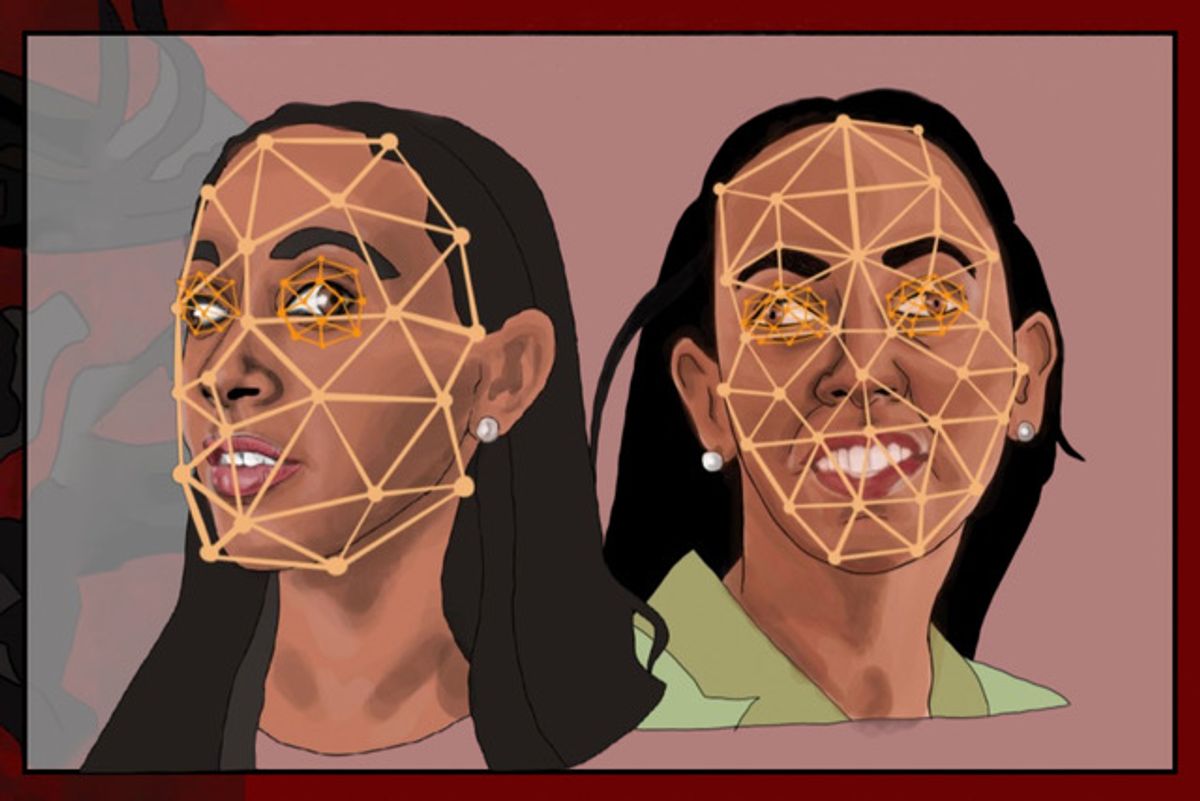

As an illustration of this point, a comic book that Stoyanovich produced with Falaah Arif Khan, entitled “Mirror, Mirror,” makes clear what happens when we ask AI to move beyond games like chess or Go, in which the rules are the same irrespective of a player’s gender, race, or disability status. When we look to it to perform tasks that allocate resources or predict social outcomes, such as deciding who gets a job or a loan, or which sidewalks in a city should be fixed first, we quickly discover that embedded in the data are social, political and cultural biases that distort results.

In addition to societal bias in the data, technical systems can introduce additional skew as a result of their design or operation. Stoyanovich explains that if, for example, a job application form has two options for sex, ‘male’ and ‘female,’ a female applicant may choose to leave this field blank for fear of discrimination. An applicant who identifies as non-binary will also probably leave the field blank. But if the system works under the assumption that sex is binary and post-processes the data, then the missing values will be filled in. The most common method for this is to set the field to the value that occurs most frequently in the data, which will likely be ‘male’. This introduces systematic skew in the data distribution, and will make errors more likely for these individuals.

This example illustrates that technical bias can arise from an incomplete or incorrect choice of data representation. “It’s been documented that data quality issues often disproportionately affect members of historically disadvantaged groups, and we risk compounding technical bias due to data representation with pre-existing societal bias for such groups,” adds Stoyanovich.
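The job-application scenario Stoyanovich describes can be sketched in a few lines of Python. The column values and counts below are made up for illustration, and `impute_mode` is a hypothetical helper rather than any particular library's API; it simply fills each missing entry with the most frequent observed value.

```python
from collections import Counter


def impute_mode(values):
    """Replace None entries with the most frequent observed value."""
    observed = [v for v in values if v is not None]
    mode = Counter(observed).most_common(1)[0][0]
    return [mode if v is None else v for v in values]


# Hypothetical 'sex' field: 6 'male', 3 'female', 3 left blank.
sex = ["male"] * 6 + ["female"] * 3 + [None] * 3

filled = impute_mode(sex)

share_before = sex.count("male") / sum(v is not None for v in sex)
share_after = filled.count("male") / len(filled)

print(Counter(filled))
print(f"'male' share among answered: {share_before:.2f}, after imputation: {share_after:.2f}")
```

In this toy data every applicant who left the field blank is recorded as 'male', so the apparent share of 'male' rises from two thirds to three quarters, and the records of exactly those applicants who declined to answer are the ones that become wrong.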

This raises a host of questions, according to Stoyanovich, such as: How do we identify ethical issues in our technical systems? What types of “bias bugs” can be resolved with the help of technology? And what are some cases where a technical solution simply won’t do? As challenging as these questions are, Stoyanovich maintains we must find a way to reflect them in how we teach computer science and data science to the next generation of practitioners.

“Virtually all of the departments or centers at Tandon do research and collaborations involving AI in some way, whether artificial neural networks, various other kinds of machine learning, computer vision and other sensors, data modeling, AI-driven hardware, etc.,” says Jelena Kovačević, Dean of the NYU Tandon School of Engineering. “As we rely more and more on AI in everyday life, our curricula are embracing not only the stunning possibilities in technology, but the serious responsibilities and social consequences of its applications.”

Looking at this issue as a pedagogical problem, Stoyanovich quickly realized that the professors teaching ethics courses for computer science students were not computer scientists themselves, but instead came from humanities backgrounds. There were also very few people with expertise in both computer science and the humanities, a situation exacerbated by the “publish or perish” culture that keeps professors siloed in their own areas of expertise.

“While it is important to incentivize technical students to do more writing and critical thinking, we should also keep in mind that computer scientists are engineers. We want to take conceptual ideas and build them into systems,” says Stoyanovich. “Thoughtfully, carefully, and responsibly, but build we must!”

But if computer scientists need to take on this educational responsibility, Stoyanovich believes that they will have to come to terms with the reality that computer science is in fact limited by the constraints of the real world, like any other engineering discipline.

“My generation of computer scientists was always led to think that we were only limited by the speed of light. Whatever we can imagine, we can create,” she explains. “These days we are coming to better understand how what we do impacts society and we have to impart that understanding to our students.”

Kovačević echoes this cultural shift in how we must start to approach the teaching of AI. She notes that computer science education at the collegiate level typically keeps the tiller set on skill development and exploration of the technological scope of computer science, along with an unspoken cultural norm in the field that since anything is possible, anything is acceptable. “While exploration is critical, awareness of consequences must be, as well,” she adds.

Once the first hurdle of understanding that computer science has constraints in the real world is cleared, Stoyanovich argues that we will next have to confront the specious idea that AI is the tool that will lead humanity into some kind of utopia.

“We need to better understand that whatever an AI program tells us is not true by default,” says Stoyanovich. “Companies claim they are fixing bias in the data they present into these AI programs, but it’s not that easy to fix thousands of years of injustice embedded in this data.”

In order to include these fundamentally different approaches to AI and how it is taught, Stoyanovich has created a new course at NYU entitled Responsible Data Science. This course has now become a requirement for students getting a BA degree in data science at NYU. Later, she would like to see the course become a requirement for graduate degrees as well. In the course, students are taught both “what we can do with data” and, at the same time, “what we shouldn’t do.”

Stoyanovich has also found it exciting to engage students in conversations surrounding AI regulation. “Right now, for computer science students there are a lot of opportunities to engage with policy makers on these issues and to get involved in some really interesting research,” says Stoyanovich. “It’s becoming clear that the pathway to seeing results in this area is not limited to engaging industry but also extends to working with policy makers, who will appreciate your input.”

In these efforts towards engagement, Stoyanovich and NYU are establishing the Center for Responsible AI, to which IEEE-USA offered its full support last year. One of the projects the Center for Responsible AI is currently engaged in concerns a new law in New York City to amend its administrative code in relation to the sale of automated employment decision tools.

“It is important to emphasize that the purpose of the Center for Responsible AI is to serve as more than a colloquium for critical analysis of AI and its interface with society, but as an active change agent,” says Kovačević. “What that means for pedagogy is that we teach students to think not just about their skill sets, but their roles in shaping how artificial intelligence amplifies human nature, and that may include bias.”

Stoyanovich notes: “I encourage the students taking Responsible Data Science to go to the hearings of the NYC Committee on Technology. This keeps the students more engaged with the material, and also gives them a chance to offer their technical expertise.”

Dexter Johnson is a contributing editor at IEEE Spectrum, with a focus on nanotechnology.