Deep learning has a DRAM problem. Systems designed to do difficult things in real time, such as telling a cat from a kid in a car’s backup camera video stream, are continuously shuttling the data that makes up the neural network’s guts from memory to the processor.

The problem, according to startup Flex Logix, isn’t a lack of storage for that data; it’s a lack of bandwidth between the processor and memory. Some systems need four or even eight DRAM chips to sling the hundreds of gigabits per second to the processor, which takes up a lot of space and consumes considerable power. Flex Logix says that the interconnect technology and tile-based architecture it developed for reconfigurable chips will lead to AI systems that need the bandwidth of only a single DRAM chip and consume one-tenth the power.

Mountain View–based Flex Logix had started to commercialize a new architecture for embedded field-programmable gate arrays (eFPGAs). But after some exploration, one of the founders, Cheng C. Wang, realized the technology could speed up neural networks.

A neural network is made up of connections and “weights” that denote how strong those connections are. A good AI chip needs two things, explains the other founder, Geoff Tate. One is a lot of circuits that do the critical “inferencing” computation, called multiply and accumulate. “But what’s even harder is that you have to be very good at bringing in all these weights, so that the multipliers always have the data they need in order to do the math that’s required. [Wang] realized that the technology that we have in the interconnect of our FPGA, he could adapt to make an architecture that was extremely good at loading weights rapidly and efficiently, giving high performance and low power.”
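To make the multiply-and-accumulate operation concrete, here is a minimal Python sketch of the weighted sum computed for a single neuron. The function name and the sample numbers are illustrative assumptions, not anything taken from Flex Logix's design.

```python
def multiply_accumulate(weights, inputs):
    """Return the sum of weight * input products (hypothetical helper)."""
    if len(weights) != len(inputs):
        raise ValueError("weights and inputs must have the same length")
    acc = 0
    for w, x in zip(weights, inputs):
        acc += w * x  # one multiply and one accumulate per weight
    return acc


# Hypothetical example: three connections feeding one neuron.
result = multiply_accumulate([2, -1, 3], [4, 5, 6])
print(result)  # 2*4 + (-1)*5 + 3*6 = 21
```

Each pass through the loop consumes one weight, which is why the multipliers stall unless weights keep arriving as fast as they are used.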

The need to load millions of weights into the network in rapid succession is why AI systems that operate in the range of trillions to tens of trillions of operations per second need so many DRAM chips. Each pin on a DRAM chip can deliver a maximum of about 4 gigabits per second, so it takes multiple chips to reach the required hundreds of gigabits per second.
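The arithmetic behind that chip count can be sketched in a few lines of Python. The figure of about 4 Gb/s per pin comes from the paragraph above; the 32 data pins per chip and the 400 Gb/s target are assumptions chosen only for illustration, and the function name is hypothetical.

```python
import math

GBPS_PER_PIN = 4         # from the article: about 4 Gb/s per DRAM pin
DATA_PINS_PER_CHIP = 32  # assumption: a DRAM device with 32 data pins
TARGET_GBPS = 400        # assumption: stands in for "hundreds of Gb/s"


def dram_chips_needed(target_gbps,
                      pins_per_chip=DATA_PINS_PER_CHIP,
                      gbps_per_pin=GBPS_PER_PIN):
    """Return how many DRAM chips it takes to reach target_gbps."""
    per_chip_gbps = pins_per_chip * gbps_per_pin
    return math.ceil(target_gbps / per_chip_gbps)


# 32 pins * 4 Gb/s = 128 Gb/s per chip, so 400 Gb/s takes 4 chips.
print(dram_chips_needed(TARGET_GBPS))
```

Under these assumptions a single chip tops out at 128 Gb/s, so any design that needs several hundred gigabits per second from DRAM has to add chips.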

In developing the original technology for FPGAs, Wang noted that these chips were about 80 percent interconnect by area, and so he sought an architecture that would cut that area down and allow for more logic. He and his colleagues at UCLA adapted a kind of telecommunications architecture called a folded-Beneš network to do the job. This allowed for an FPGA architecture that looks like a bunch of tiles of logic and SRAM.

Distributing the SRAM in this specialized interconnect scheme winds up having a big impact on deep learning’s DRAM bandwidth problem, says Tate. “We’re displacing DRAM bandwidth with SRAM on the chip,” he says.

The tiles for Flex Logix’s AI offering, called NMAX, take up less than 2 square millimeters using TSMC’s 16-nanometer technology. Each tile is made up of a set of cores that do the critical multiply and accumulate computation, programmable logic to control the processing and flow of data, and SRAM. Three different types of interconnect technology are involved. One links all the pieces on the tile together. Another connects the tile to additional SRAM located between the tiles and to external DRAM. And the third connects adjacent tiles together.

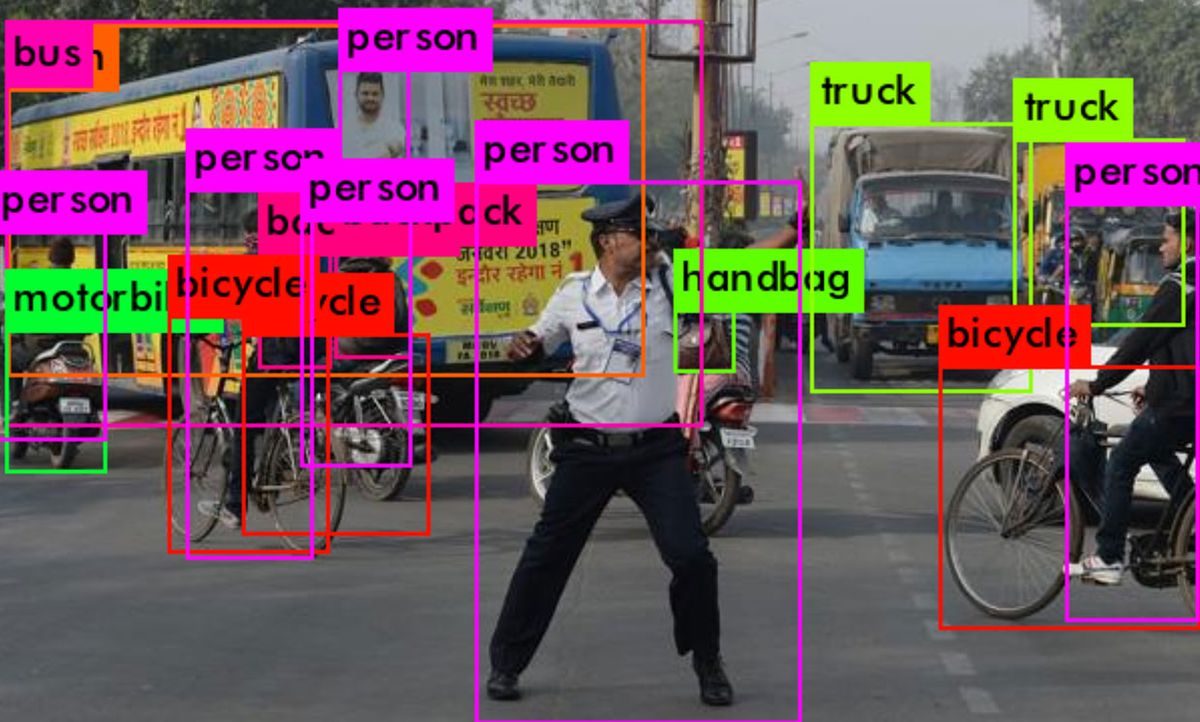

True apples-to-apples comparisons in deep learning are hard to come by. But Flex Logix’s analysis comparing a simulated 6-by-6-tile NMAX512 array with one DRAM chip against an Nvidia Tesla T4 with eight DRAMs showed the new architecture identifying 4,600 images per second versus Nvidia’s 3,920. The same-size NMAX array hit 22 trillion operations per second running YOLOv3, a neural network for real-time object detection in video, while using one-tenth the DRAM bandwidth of other systems.

The designs for the first NMAX chips will be sent to the foundry for manufacture in the second half of 2019, says Tate.

Flex Logix counts Boeing among its customers for its high-throughput embedded FPGA product. But embedded FPGA is a hard sell compared to neural networks, admits Tate. “Embedded FPGA is a good business, but inferencing will likely surpass it very quickly,” he says.

Samuel K. Moore is the senior editor at IEEE Spectrum in charge of semiconductors coverage. An IEEE member, he has a bachelor's degree in biomedical engineering from Brown University and a master's degree in journalism from New York University.