For decades, the trend was for more and more of a computer’s systems to be integrated onto a single chip. Today’s system-on-chips, which power smartphones and servers alike, are the result. But complexity and cost are starting to erode the idea that everything should be on a single slice of silicon.

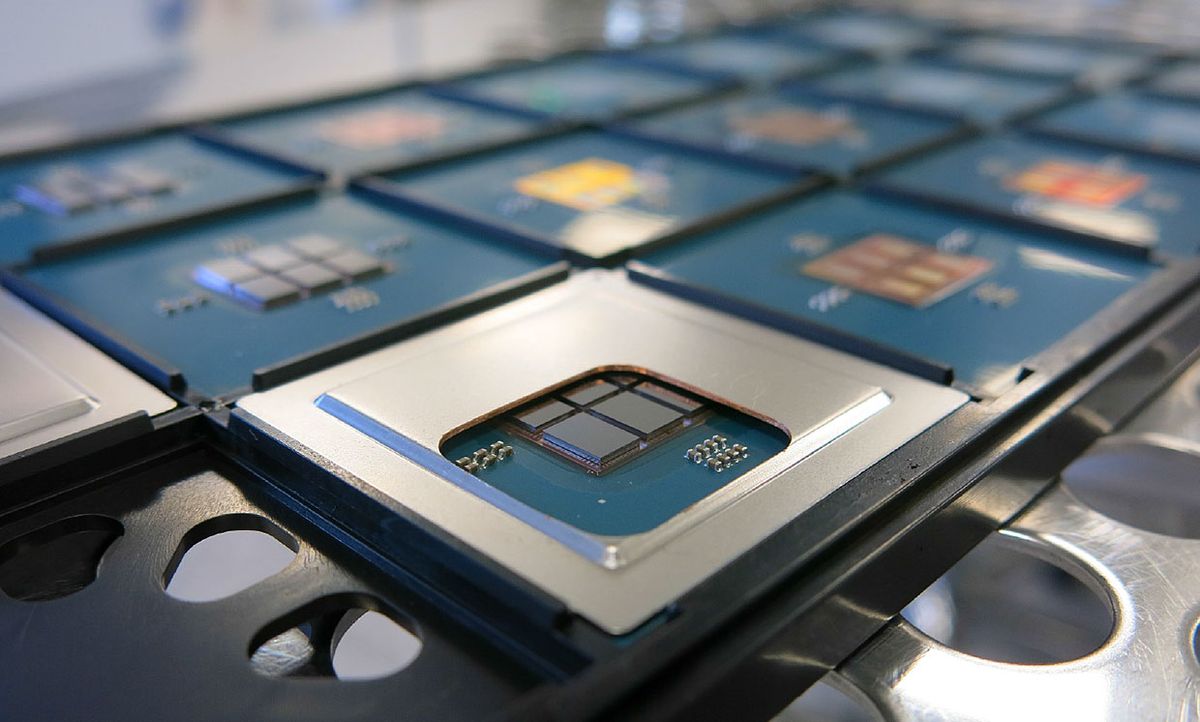

Already, some of the most advanced processors, such as AMD’s Zen 2 processor family, are actually a collection of chiplets bound together by high-bandwidth connections within a single package. This week at the IEEE Solid-State Circuits Conference (ISSCC) in San Francisco, French research organization CEA-Leti showed how far this scheme can go, creating a 96-core processor out of six chiplets.

The CEA-Leti chip, for want of a better word, stacks six 16-core chiplets on top of a thin sliver of silicon called an active interposer. The interposer contains both voltage regulation circuits and a network that links the various parts of the cores’ on-chip memories together. Active interposers are the best way forward for chiplet technology if it is ever to allow disparate technologies and chiplets from multiple vendors to be integrated into systems, according to Pascal Vivet, a scientific director at CEA-Leti.

“If you want to integrate chiplets from vendor A with chiplets from vendor B, and their interfaces are not compatible, you need a way to glue them together,” he says. “And the only way to glue them together is with active circuits in the interposer.”

The interposer features a network-on-chip that uses three different communication circuits to link the cores’ on-chip SRAM memory. The fastest-access memories, called the L1 and L2 caches, are linked directly, with no additional circuitry between them. The next-highest level of cache connections, L2 to L3, requires some network smarts built into the interposer, as does the link between the L3 cache and off-chip memory. All told, the system can sling 3 terabytes per second per square millimeter of silicon with a latency of just 0.6 nanoseconds per millimeter.
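To get a feel for what those per-millimeter figures imply, here is a minimal back-of-the-envelope sketch in Python. Only the two constants come from the figures quoted above. The function names, the sample distances and area, and the idea that latency grows linearly with link length and bandwidth with silicon area are illustrative assumptions, not details from CEA-Leti.

```python
# Figures quoted in the article for the CEA-Leti interposer network.
LATENCY_NS_PER_MM = 0.6        # nanoseconds of latency per millimeter of link
BANDWIDTH_TBPS_PER_MM2 = 3.0   # terabytes per second per square millimeter


def link_latency_ns(distance_mm: float) -> float:
    """Estimate link latency, ASSUMING it scales linearly with distance."""
    if distance_mm < 0:
        raise ValueError("distance_mm must be non-negative")
    return LATENCY_NS_PER_MM * distance_mm


def aggregate_bandwidth_tbps(area_mm2: float) -> float:
    """Estimate total bandwidth, ASSUMING it scales linearly with area."""
    if area_mm2 < 0:
        raise ValueError("area_mm2 must be non-negative")
    return BANDWIDTH_TBPS_PER_MM2 * area_mm2


if __name__ == "__main__":
    # Hypothetical link lengths, chosen only for illustration.
    for distance_mm in (1.0, 5.0, 10.0):
        latency = link_latency_ns(distance_mm)
        print(f"{distance_mm:4.1f} mm link -> about {latency:.1f} ns")

    # Hypothetical area of silicon devoted to interconnect.
    area_mm2 = 2.0
    bandwidth = aggregate_bandwidth_tbps(area_mm2)
    print(f"{area_mm2:.1f} mm^2 -> about {bandwidth:.1f} TB/s")
```

Under these assumptions, a signal crossing several millimeters of interposer would still arrive within a few nanoseconds, which is why a metric normalized per millimeter is a convenient way to compare interconnect schemes of different physical sizes.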

The interposer also houses voltage regulation systems usually found on the processor itself. Processors often use circuits called low-dropout regulators to adjust their voltage levels and save power. Vivet’s team chose more power-efficient circuits called switched-capacitor voltage regulators. The usual drawback to these circuits is that they require space-consuming off-chip capacitors. But the interposer had enough room to integrate the capacitors, explains Vivet. The regulators helped the chip achieve power consumption of 156 milliwatts per square millimeter.

Chiplet enthusiasts imagine a remaking of the system-on-chip industry so that chiplets from multiple vendors can all be integrated with little effort, thanks to standardized interfaces. The result would be cheaper, more flexible, mix-and-match systems.

But the industry is not there yet. Unlike CEA-Leti’s prototype, commercial systems that rely on chiplets use silicon interposers that have no active circuitry embedded in them. And many systems don’t even use silicon interposers, relying instead on organic circuit-board materials or on small pieces of silicon embedded in the organic board.

Rather than simple mix-and-match systems, these require a large amount of codesign between the chiplets and the package that integrates them. Nevertheless, the results can be worthwhile.

At ISSCC, AMD detailed how it codesigned the chiplets and package that make up its Zen 2 high-performance processors. The resulting system broke a record that night at ISSCC. When overclocked and cooled with a decanter of liquid nitrogen, the single AMD processor scored 39,744 on the Cinebench 3D rendering benchmark. The record a few weeks ago was about 32,000, set by a 128-core server system costing roughly US $20,000, according to AMD’s Jerry Ahrens.

Samuel K. Moore is the senior editor at IEEE Spectrum in charge of semiconductors coverage. An IEEE member, he has a bachelor's degree in biomedical engineering from Brown University and a master's degree in journalism from New York University.