IMAGE CREDIT: Wikimedia Commons

I'll be covering the 21st annual Hot Chips conference for the next couple of days.

Hot Chips is an industry nerd-off that brings together designers and architects of high-performance hardware and software every August, amid the Spanish colonial architecture and rarefied air of Stanford University. The logic-heavy PowerPoints are interspersed with a few keynotes to remind everyone what’s at stake in all these mind-numbing comparisons of SIMD vs. MIMD.

One of the big ideas this year appears to be the future of gaming. On Tuesday, Intel’s Pradeep Dubey will chair a keynote presented by Electronic Arts chief creative officer Rich Hilleman.

When I first saw the title of the keynote, “Let's Get Small: How Computers are Making a Big Difference in the Games Business,” I pinched my arm because for a second I thought I was experiencing some horrible Life on Mars-style delusion/time travel. Computers making a big difference in the games business? Oh, you think so, doctor!

But it turns out to be more complicated than the title indicates. As usual, it all goes back to Moore’s Law.

Moore’s law says that as transistors keep shrinking, more of them can be squeezed onto a given area of silicon, and as long as the price of silicon stays the same, those transistors will just get cheaper as they get smaller. That means the chips will get cheaper too. That’s why you can get so much processing power for an ever-decreasing outlay.

These days you can get the kind of processing power in your mother’s basement that just 10 years ago was reserved for the Crays and Blue Genes and other monstrosities available only to government research facilities.

However, the cost of developing a PC or console game also increases exponentially, in step with Moore's Law. David Kanter, my go-to guru at Real World Technologies, explained it thus:

Moore's law says transistors double in density roughly every 18 months. Because graphics is a perfectly parallel workload, graphics performance scales right along with Moore’s law, which means it, too, roughly doubles every 18 months.
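To put a number on "doubles every 18 months," here is a small back-of-the-envelope sketch. The helper name `growth_factor` is hypothetical, and it assumes a clean, constant 18-month doubling period, which real chips only approximate.

```python
def growth_factor(months: float, doubling_period_months: float = 18.0) -> float:
    """Multiplicative growth after `months`, assuming (hypothetically) a
    constant doubling every `doubling_period_months` (18 by default)."""
    return 2.0 ** (months / doubling_period_months)


# Ten years (120 months) of doubling every 18 months:
print(round(growth_factor(120), 1))  # 101.6
```

Under that assumption, a decade of doubling works out to roughly a hundredfold increase, which is why basement hardware can catch up with yesterday's supercomputers.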

And when graphics performance doubles, you need higher-resolution artwork to render in a game. At that point, frame rates above 60 FPS aren’t helpful; what you really want is more detail and new effects to wow your gamers.

And if you want that higher-resolution artwork, you need to hire more artists. That rule also tracks with Moore’s law—more and more artists are necessary for each generation of chip.

The upshot is that the cost of developing artwork scales with transistor counts for GPUs, which are themselves driven by Moore's Law. This means that the cost of big-name games—like Grand Theft Auto, Quake, Doom, and the like—increases exponentially. That's a big problem for developers whose pockets are shallower than EA’s.
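Here is a toy model of that argument, kept deliberately simple. It assumes (this is an illustration, not data from Kanter or EA) that the art budget is proportional to GPU transistor count and that transistor count doubles once per chip generation. The function name `relative_art_cost` is hypothetical, and costs are expressed relative to a baseline of 1.0 rather than in dollars.

```python
def relative_art_cost(generations: int, baseline: float = 1.0) -> list[float]:
    """Relative art budget for generations 0..`generations`, assuming art cost
    is proportional to transistor count and transistors double each generation."""
    return [baseline * 2 ** g for g in range(generations + 1)]


for gen, cost in enumerate(relative_art_cost(4)):
    print(f"generation {gen}: {cost:.0f}x the baseline art budget")
```

After four generations, the model's art budget is 16 times the starting point. A studio whose budget grows only linearly falls further behind with each chip generation.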

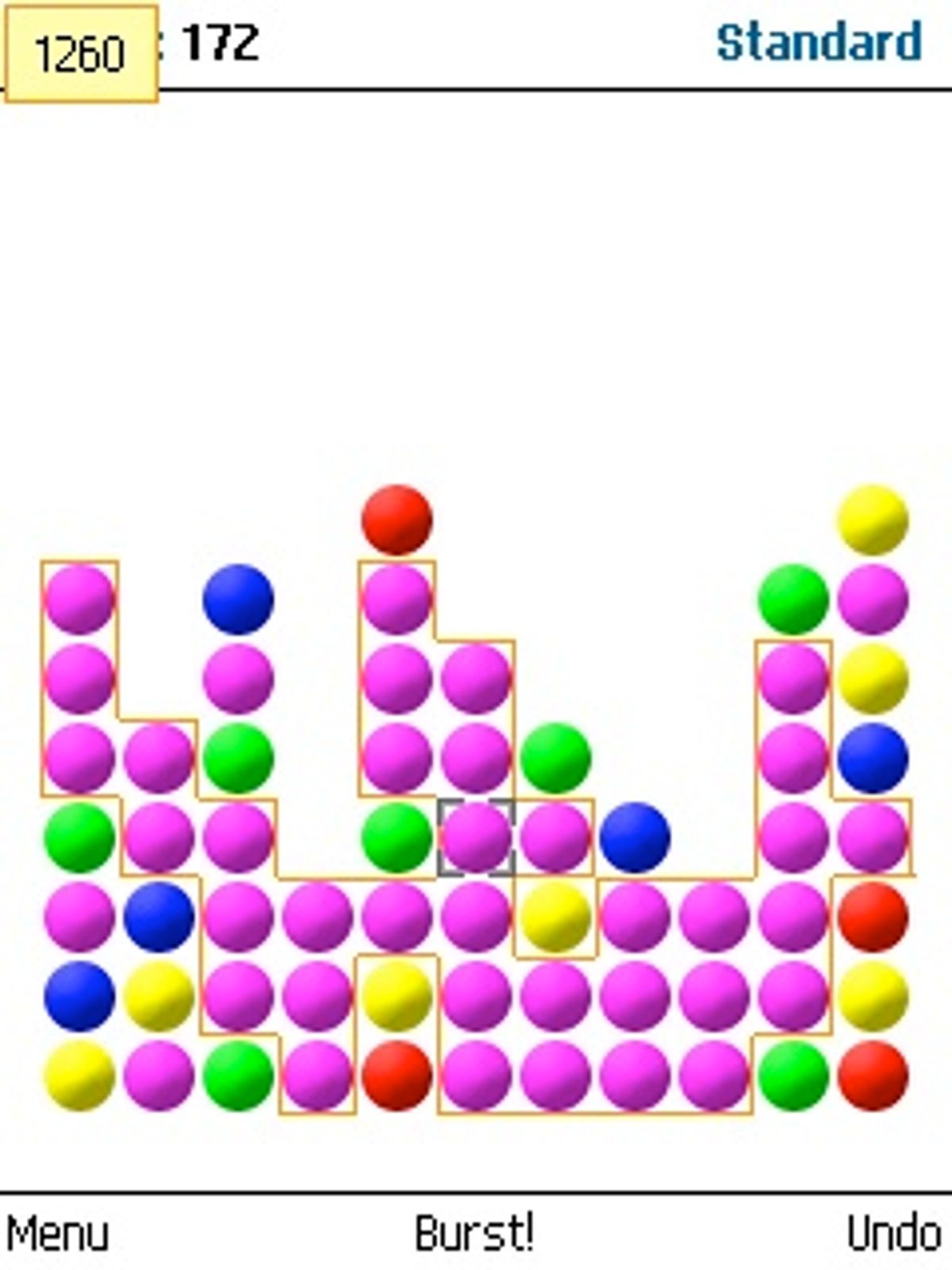

And that is one reason the games market for phones is exploding. For these little rinky-dink displays (iPhone = 480 x 320 pixels, my phone = 220 x 176), development costs are so much lower than for a PC or console that anyone can make a game (maybe even in their spare time).
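A quick pixel count helps show how small these screens are. This sketch uses the two phone resolutions above and compares them with an assumed 1280 x 720 (720p) frame, a common HD console target. The 720p entry and the `pixel_count` helper are illustrative assumptions, and pixel count is only a rough proxy for how much art detail a game needs.

```python
# Phone resolutions from the text, plus an assumed 720p console frame for comparison.
RESOLUTIONS = {
    "iPhone": (480, 320),
    "author's phone": (220, 176),
    "720p console (assumed)": (1280, 720),
}


def pixel_count(width: int, height: int) -> int:
    """Total number of pixels on a width x height display."""
    return width * height


console = pixel_count(*RESOLUTIONS["720p console (assumed)"])
for name, (w, h) in RESOLUTIONS.items():
    pixels = pixel_count(w, h)
    print(f"{name}: {pixels:,} pixels; 720p has {console / pixels:.1f}x as many")
```

By this measure, a 720p frame holds six times as many pixels as the iPhone screen and nearly 24 times as many as my phone's.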

Back in March, at the 2009 Game Developers Conference, ex-EA developer Neil Young (founder and CEO, ngmoco) delivered a keynote called “Why the iPhone just changed everything.”

He said that the iPhone, and the class of devices it represents, is a game changer on the order of the Atari 2600, Game Boy, PlayStation One, or Wii. He predicted that the iPhone will “emerge a gaming device as compelling as existing dedicated handheld game devices.”

Kanter suspects that at Tuesday's keynote, EA and Intel may discuss how ray tracing could make developing artwork easier and less expensive. Thoughts? Comments? Predictions? Do you buy the idea that gaming is splitting the world into an empire of AAA game development vs. a rebellion of mobile phone developers?

Sally Adee, formerly an associate editor at IEEE Spectrum, is now a technology features editor at New Scientist, in London. She says it was an honor to write her last feature for Spectrum about the European Space Agency’s Loredana Bessone, a woman she considers a role model. “One day, I’m going to hit her up at ESA to start training me as an astronaut,” she says with a wink. “Right after I get sick of playing roller derby in London.”