Creating a virtual experience that’s as close to real life as possible is one of virtual reality’s (VR’s) ultimate goals, as companies and researchers find ways to accurately mimic a person’s facial expressions and body gestures. But if the lighting and shadows on faces—notoriously tricky to simulate with accuracy—are all wrong, expressions and gestures don’t matter. It just looks wrong. So how to simulate light, shadows, and reflections on the virtual human face?

This is the motivating question behind a recently developed process for creating relightable 3D portraits of the human head and part of the upper body, courtesy of researchers at Russia’s Skolkovo Institute of Science and Technology (Skoltech).

“Oftentimes, when you take a photo of someone and add lighting to it, the image looks unnatural because the lighting on the person and the lighting on the environment don’t match,” says Victor Lempitsky, associate professor and head of the computer vision group at Skoltech. “This mismatch in lighting becomes a problem. It affects realism, suspending the impression of looking at a real person. That’s why we decided to investigate how to make those portraits relightable.”

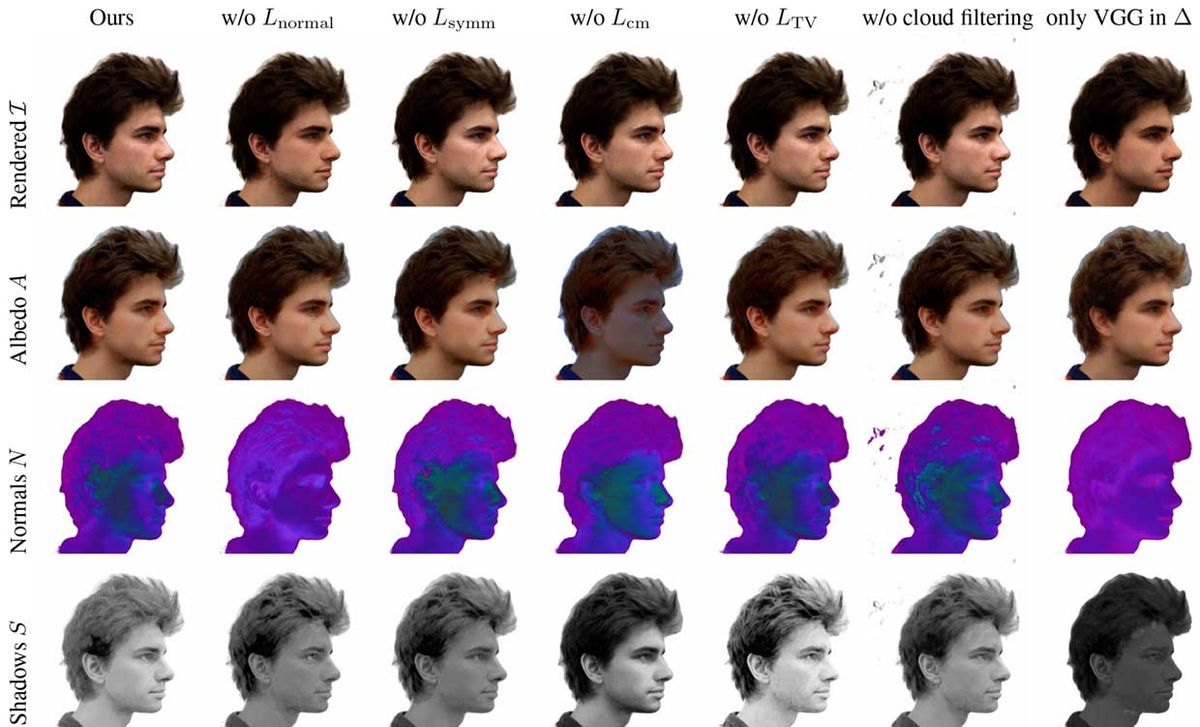

The team’s process starts with a video recorded while orbiting a person who is standing still, with the flash going off at periodic intervals. A point cloud is then generated from the footage, and an algorithm called neural point-based graphics takes care of the 3D reconstruction. A deep neural network processes the images and predicts maps of lighting-related properties, such as albedo and shadows, based on the room lighting. These maps then help to relight images from different viewpoints and under various lighting conditions. For example, using this technique, a 3D face might be lit and shadowed by a point light source, by an ambient source with light arriving from all directions, or by a directional source such as a large window.
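To make the three kinds of light source concrete, here is a minimal sketch of how a single surface point could be relit once its albedo and surface normal are known. It uses plain Lambertian (diffuse) shading, a textbook model swapped in for illustration and not the Skoltech team’s neural method. The function names and the inverse-square falloff for the point light are assumptions of this sketch.

```python
import math

def normalize(v):
    """Scale a 3D vector to unit length (assumes the vector is non-zero)."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def shade_ambient(albedo, intensity):
    # Ambient light arrives equally from all directions,
    # so the surface normal plays no role.
    return albedo * intensity

def shade_directional(albedo, normal, light_dir, intensity):
    # light_dir points from the surface toward the light (e.g. a large window).
    # Surfaces facing away from the light receive nothing.
    n = normalize(normal)
    l = normalize(light_dir)
    return albedo * intensity * max(0.0, dot(n, l))

def shade_point(albedo, normal, position, light_pos, intensity):
    # A point light has a position, so both the direction to the light and
    # the distance matter. Assumes the light is not located at the surface point.
    to_light = tuple(lp - p for lp, p in zip(light_pos, position))
    dist_sq = dot(to_light, to_light)
    return shade_directional(albedo, normal, to_light, intensity) / dist_sq

# A skin patch with albedo 0.5 facing straight up the z-axis.
patch_albedo, patch_normal = 0.5, (0.0, 0.0, 1.0)
print(shade_ambient(patch_albedo, 0.2))
print(shade_directional(patch_albedo, patch_normal, (0.0, 0.0, 1.0), 1.0))
print(shade_point(patch_albedo, patch_normal, (0.0, 0.0, 0.0), (0.0, 0.0, 2.0), 1.0))
```

In this simplified picture, albedo is the lighting-independent color of the surface, which is why recovering it from the flash and no-flash frames makes it possible to swap in a different light afterward.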

“The main appeal of neural point-based graphics is its robustness and suitability to a wide variety of geometries,” says Lempitsky. Polygonal meshes are still the most common geometric representation of 3D objects in traditional computer graphics; they are well supported and typically allow for fast rendering. But mesh-based approaches often fail on thin objects or those with a small diameter, such as strands of hair, fingers, or small sheets of cloth. Point-based graphics have a similar problem: The light and shadows they generate on a face can still have small holes in them, because the point cloud is not dense enough.

“Combining point-based graphics with neural rendering helps overcome these challenges, with the neural network deciding how to connect points locally during rendering,” Lempitsky says.
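The hole problem is easy to reproduce in a toy setting. The sketch below projects a sparse set of points onto a small pixel grid, counts the pixels no point landed on, and then fills each hole with the average of its filled neighbors. That averaging step is a hand-written stand-in, assumed here purely for illustration; in neural point-based graphics a trained network decides how to connect points, and all function names below are hypothetical.

```python
def splat(points, width, height):
    """Write each (x, y, value) point into its nearest pixel.
    Pixels that no point lands on stay None (these are the holes)."""
    image = [[None] * width for _ in range(height)]
    for x, y, value in points:
        col, row = int(round(x)), int(round(y))
        if 0 <= col < width and 0 <= row < height:
            image[row][col] = value
    return image

def count_holes(image):
    return sum(v is None for row in image for v in row)

def fill_holes(image):
    """Fill each empty pixel with the mean of its non-empty 3x3 neighbors.
    A fixed rule standing in for what a neural renderer would learn."""
    height, width = len(image), len(image[0])
    filled = [row[:] for row in image]
    for r in range(height):
        for c in range(width):
            if image[r][c] is not None:
                continue
            neighbors = [
                image[rr][cc]
                for rr in range(max(0, r - 1), min(height, r + 2))
                for cc in range(max(0, c - 1), min(width, c + 2))
                if image[rr][cc] is not None
            ]
            if neighbors:
                filled[r][c] = sum(neighbors) / len(neighbors)
    return filled

# Four points at the corners of a 3x3 image leave five pixels uncovered.
sparse_points = [(0, 0, 1.0), (2, 0, 1.0), (0, 2, 1.0), (2, 2, 1.0)]
raw = splat(sparse_points, 3, 3)
print(count_holes(raw))
print(count_holes(fill_holes(raw)))
```

A fixed averaging rule like this blurs detail and cannot tell a real gap (the space between two strands of hair) from a sampling gap, which is the kind of local decision the quote attributes to the neural network.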

Unlike other approaches that focus on a single view, require specific lighting, or call for sophisticated equipment such as lidar instruments or light stages, the process devised by Skoltech needs only a smartphone. “Not everyone has access to high-end setups in studios or has knowledge of complex techniques like photogrammetry,” says Artem Sevastopolsky, a Ph.D. student at Skoltech and primary author of the research. “One of our motivations was to make data acquisition simpler.”

These photorealistic portraits veer into uncanny valley territory while potentially sowing the seeds for future deepfake mischief. “Our work is in line with the uncanny valley concept, but we quite accurately simulate the face. In this way, it’s hard to frighten anyone with the 3D portraits we create,” Sevastopolsky says. “This field of synthesizing facial images does have a connection to deepfakes, but I don’t think our process can be used for that because it doesn’t simulate lip motions or facial expressions.”

To further advance their research, Sevastopolsky and the computer vision group at Skoltech are looking into applying their process to full body relighting. And aside from creating more realistic VR experiences, the team’s process can also be used for 3D reconstruction of objects or environments.

“This is important for cultural heritage preservation, for example,” says Evgeny Burnaev, associate professor and head of the advanced data analytics in science and engineering group at Skoltech. “We can use simpler equipment instead of costly and complicated scanners to quickly capture things. The corresponding 3D model can then be put on a website to attract more people to a certain historical place, or allow people with limited abilities or who can’t otherwise visit that place to view and experience it virtually.”

Rina Diane Caballar is a journalist and former software engineer based in Wellington, New Zealand.