Ever since our first experience with a prototype of the Oculus Rift, we’ve been getting more and more excited about high-quality consumer virtual reality hardware. The first production version of the Rift is almost here, and when it arrives (probably in early 2016), you might even be able to justify its rumored $1,500 cost.

Good as the Rift is (and it’s very, very good), it’s taken this long for Oculus to get the hardware ready because fooling your eyes and brain to the extent that the Rift (or any other piece of VR hardware) does is a very tricky thing to pull off. The vast majority of us have an entire lifetime of experience of looking at the world in 3-D, and we notice immediately when things aren’t quite right. This can lead to headaches, nausea, and a general desire never to try VR ever again. A big part of what makes VR so difficult is that it’s continually trying to convince your eyes that they should be focusing on a scene in the distance, when really, they’re looking at a screen just a few inches away.

The current generation of VR displays uses a few different techniques to artificially generate images that appear to have depth despite being displayed on flat screens. But there’s one that they're missing out on, one that could make VR displays much more comfortable to use. The same sort of 4-D light field technology that allows the Lytro camera to work its magic could solve this problem for VR as well.

Conventional VR displays like the Oculus Rift artificially create images that seem to have depth using visual depth cues including:

Binocular Disparity (Stereopsis) – Your left and right eyes each see a slightly different scene. When your brain processes these two scenes, the disparities between the two scenes give the illusion of depth.

Motion Parallax – As you move your head from side to side, things that are closer to your eyes appear to move faster laterally than things that are farther from your eyes. Displays take advantage of that to make your brain think some objects in the scene are farther away than they actually are.

Binocular Occlusions – Objects that are in the foreground of a scene, and are therefore in front of other objects, are nearer. This results in a simple relative ranking of distance. But if the amount of occlusion is different for each eye, the brain registers it as depth.

Vergence – When your eyes look at something, the closer that thing is to you, the more your eyes have to rotate inwards (converge) to keep it in the center of your field of view. If something is farther away, your eyes have to rotate outwards (diverge) until, at an infinite distance, the lines of sight of your two eyes are parallel. The amount of rotation gives your brain the data it needs (two angles and the base of a triangle) to calculate the distance to the thing.
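The triangle behind the vergence cue is easy to work through numerically. The sketch below is only an illustration, not anything taken from the Rift or from the research discussed here: it assumes the point being looked at is straight ahead, so the triangle is symmetric, and it uses a hypothetical eye separation of 0.063 meters. The function names `vergence_angle_deg` and `vergence_distance_m` are made up for this example.

```python
import math

# Hypothetical eye separation (the base of the triangle), in meters.
ASSUMED_IPD_M = 0.063


def vergence_angle_deg(distance_m: float, ipd_m: float = ASSUMED_IPD_M) -> float:
    """Angle between the two lines of sight when fixating a point straight ahead."""
    if math.isinf(distance_m):
        return 0.0  # lines of sight are parallel at infinite distance
    return math.degrees(2.0 * math.atan((ipd_m / 2.0) / distance_m))


def vergence_distance_m(angle_deg: float, ipd_m: float = ASSUMED_IPD_M) -> float:
    """Invert the triangle: recover distance from the vergence angle."""
    if angle_deg <= 0.0:
        return math.inf  # parallel (or diverging) gaze: no finite fixation point
    half_angle = math.radians(angle_deg) / 2.0
    return (ipd_m / 2.0) / math.tan(half_angle)


if __name__ == "__main__":
    for d in (0.25, 0.5, 2.0, 10.0):
        print(f"{d:5.2f} m -> {vergence_angle_deg(d):.2f} degrees")
```

Under these assumptions the angle shrinks quickly with distance, which is one way to see why vergence is mostly informative for nearby objects.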

To take advantage of all of these depth cues, most VR displays show a separate image to each eye. If those images are properly synced up with each other and with the motion of your head, the result is a tolerably convincing illusion of depth.

However, your brain can still tell that something’s not quite right, because vergence is coupled with another depth cue: focus. When something close to you is in focus, your eyes are converged a bit, and as you focus on things that are farther away, your eyes diverge. Most head-mounted virtual reality displays (HMDs) don’t present focus cues at all. The entire image is always in focus, because it’s on a flat screen that stays at the same distance from your eyes. But your eyes are still converging and diverging depending on which part of a changing stereoscopic image your attention is drawn to.

Your brain doesn't like this at all, and this “vergence-accommodation conflict” can result in “visual discomfort and fatigue, eyestrain, diplopic vision, headaches, nausea, compromised image quality, and it may even lead to pathologies in the developing visual system of children.” So say the Stanford University researchers who will be presenting a paper at SIGGRAPH 2015. They, of course, have a way of fixing this problem: 4-D light field displays.

To understand what a 4-D light field display is and how it works, it helps to start from the other side of the process, with a 4-D light field camera. You can buy one of these; they're funny looking and made by a company called Lytro. We’ve got a fantastic explainer about how Lytro’s light field camera works, but essentially, it’s like putting a whole bunch of tiny little cameras together to capture light from multiple perspectives of a scene simultaneously. By recording the direction that each light ray comes from, you can do a bunch of fancy math to reconstruct how that scene will look at any point of focus. The 4-D, incidentally, refers to the dimensional information contained in each pixel: x location, y location, and θ and φ for the angle of the incoming light ray.
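To give a feel for the "fancy math," here is a minimal sketch of shift-and-add refocusing, a classic textbook way to refocus a light field after capture. It is not Lytro's actual processing pipeline. To stay short it makes two loud assumptions: the light field is "flatland" (one spatial axis and one angular axis instead of two of each), and the angle of each ray is represented by a viewpoint index `u` rather than by θ and φ. The helper `point_light_field` and all names are hypothetical.

```python
def refocus(views, shift):
    """Shift-and-add refocus of a flatland light field.

    views[u][x] is the intensity seen at pixel x from viewpoint u.
    shift is the per-viewpoint disparity (in pixels) of the depth
    that should end up in focus.
    """
    n_views = len(views)
    width = len(views[0])
    center = (n_views - 1) / 2
    image = [0.0] * width
    for u, view in enumerate(views):
        offset = round(shift * (u - center))
        for x in range(width):
            src = x + offset
            if 0 <= src < width:  # rays that fall off the sensor are ignored
                image[x] += view[src] / n_views
    return image


def point_light_field(n_views, width, x0, disparity):
    """Synthetic test scene: one bright point that moves `disparity` pixels per viewpoint."""
    center = (n_views - 1) / 2
    views = []
    for u in range(n_views):
        row = [0.0] * width
        pos = x0 + round(disparity * (u - center))
        if 0 <= pos < width:
            row[pos] = 1.0
        views.append(row)
    return views


if __name__ == "__main__":
    lf = point_light_field(n_views=5, width=21, x0=10, disparity=2)
    in_focus = refocus(lf, shift=2)    # matches the point's disparity
    out_of_focus = refocus(lf, shift=0)  # focused at a different depth
    print(max(in_focus), max(out_of_focus))
```

When `shift` matches the point's disparity, every viewpoint lines up and the point comes out sharp; any other value smears it across several pixels, which is the blur you would expect from focusing at the wrong depth.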

So now you’ve got a camera that can capture images viewable from any perspective. Cramming those images into a display would be pretty awesome, if for no other reason than because it would solve the family of VR-induced problems that includes vergence-accommodation conflict, visual discomfort and fatigue, eyestrain, diplopic vision, headaches, nausea, compromised image quality, and pathologies in the developing visual system of children.

And this is what the Stanford researchers have managed to put together: a high resolution light field display with refresh rates that make it practical for VR.

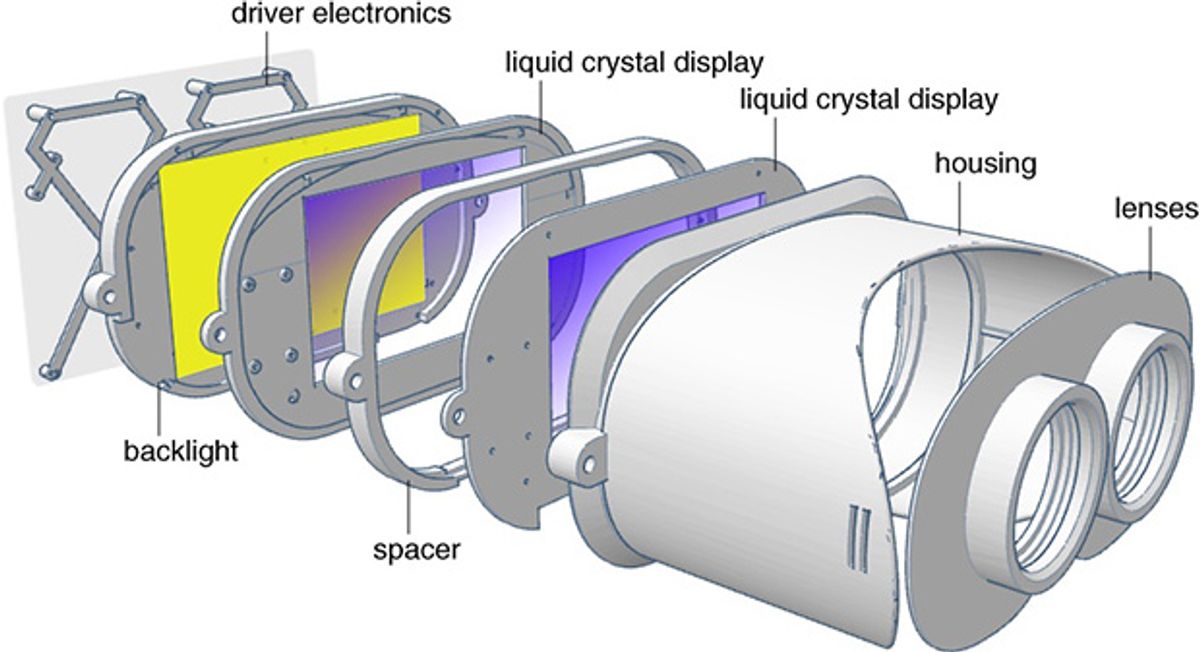

The video skips quickly over how the display actually works, but it’s shockingly simple. Two semi-transparent LCD screens are placed in series over a backlight, which is just one more than you’d usually have. The data in a light field image is then factored into two separate 2-D images, such that when those images are displayed, one on top of the other, the light multiplicatively recreates the entire light field. For all the mathematical details on how that actually works, you can read the paper, but the upshot is that it results in a full light field being available to the eye so that the viewer can focus naturally at any point. The rest of the image blurs just like the real world does when you focus on something. And because the entire light field is there in the background, the display doesn’t need any kind of fancy eye tracking to make this all work.
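The factoring step can be sketched in a few lines. What follows is not the researchers' implementation; it is a toy rank-1 factorization by alternating least squares, under the assumptions that the light field is flatland, that every ray is identified by the front-layer pixel `i` and rear-layer pixel `j` it passes through, and that every such pair is sampled. The function name `factor_two_layers` is made up, and the sketch does not clamp values to the 0-to-1 transmittance range that a real LCD layer would need.

```python
def factor_two_layers(light_field, iterations=50):
    """Find non-negative front[i] and rear[j] so that front[i] * rear[j]
    approximates light_field[i][j] (two stacked layers multiply the light)."""
    n_front = len(light_field)
    n_rear = len(light_field[0])
    front = [1.0] * n_front
    rear = [1.0] * n_rear
    eps = 1e-12  # guards against division by zero for an all-black input
    for _ in range(iterations):
        # Best front layer for the current rear layer (least squares).
        rear_energy = sum(r * r for r in rear) + eps
        front = [
            sum(light_field[i][j] * rear[j] for j in range(n_rear)) / rear_energy
            for i in range(n_front)
        ]
        # Best rear layer for the updated front layer.
        front_energy = sum(f * f for f in front) + eps
        rear = [
            sum(light_field[i][j] * front[i] for i in range(n_front)) / front_energy
            for j in range(n_rear)
        ]
    return front, rear


if __name__ == "__main__":
    # A target that two layers can reproduce exactly (hypothetical values).
    true_front = [0.2, 0.5, 0.9]
    true_rear = [0.3, 0.6, 1.0, 0.4]
    target = [[f * r for r in true_rear] for f in true_front]
    front, rear = factor_two_layers(target)
    worst = max(
        abs(front[i] * rear[j] - target[i][j])
        for i in range(len(front))
        for j in range(len(rear))
    )
    print(f"largest per-ray error: {worst:.2e}")
```

A light field that is not exactly a product of two layers would leave some residual error with this approach; the sketch only shows the multiplicative idea, so read the paper for how the actual display handles that.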

Getting all of this to work in real time at frame rates that don’t totally suck would require only a modest amount of graphical computing power using 1280-by-800-pixel displays. With an Intel i7 CPU and an NVIDIA Quadro K6000 graphics card, simple images could be rendered at about 35 frames per second. Stereo images demand nearly twice the performance; we’re not quite at the minimum of 60 frames per second that it takes to perceive convincingly smooth motion, but computing power is one of those things that can just be waited out until it gets to the point where you need it to be.

Because this is a research paper, it’s unlikely that light field displays will make it into the forthcoming generation of consumer VR displays. But since the hardware requirements are “inexpensive and simple,” it may not be that far off. There’s still plenty of research to be done, like seeing whether eye tracking could reduce computing requirements (and increase frame rates) enough to be worthwhile. But in any case, we’re delighted to hear that the future of virtual reality might be one in which visual discomfort and fatigue, eyestrain, diplopic vision, headaches, and nausea have been eliminated. Phew.

Evan Ackerman is a senior editor at IEEE Spectrum. Since 2007, he has written over 6,000 articles on robotics and technology. He has a degree in Martian geology and is excellent at playing bagpipes.