Magically seeing around corners to spot moving people or objects may not rank first in most people's superhero daydreams. But MIT researchers have shown how they could someday bestow that superpower upon anyone with a smartphone.

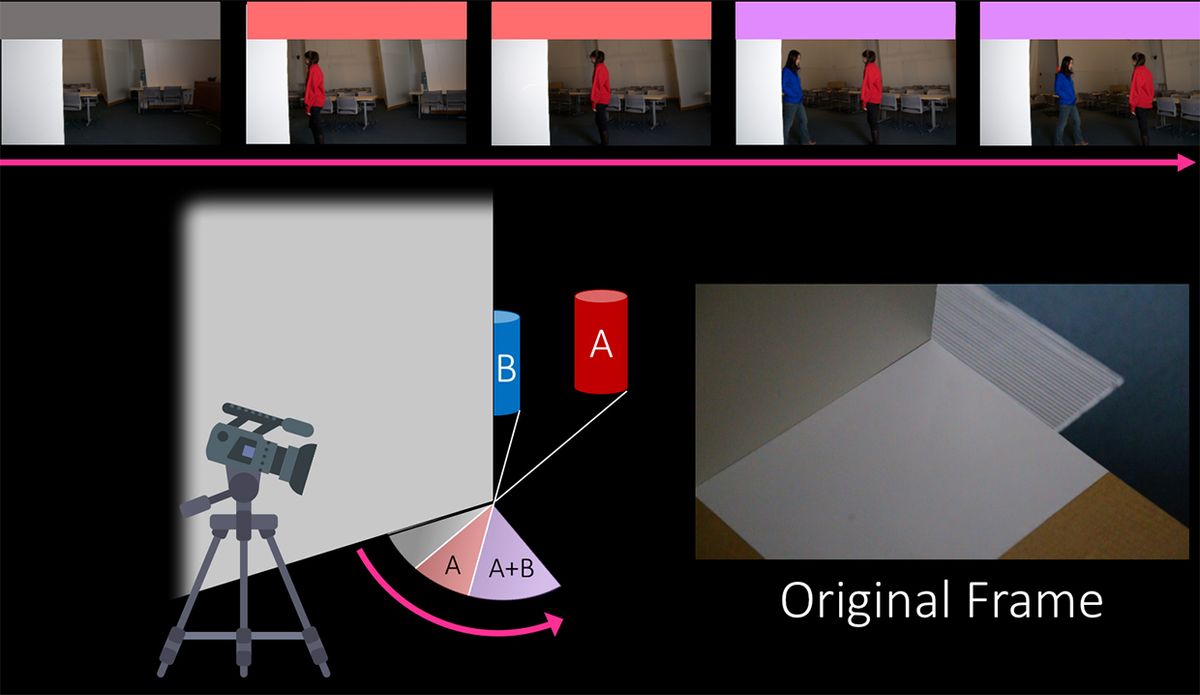

Their secret to peeking around corners is detecting slight differences in light patterns reflected from moving objects or people. Those reflected light patterns form subtle variations in the shadowy area near the base of each corner. Researchers at MIT's Computer Science and Artificial Intelligence Laboratory (CSAIL) created simple software that detects these fuzzy variations in the pixels of a 2-D video, which can be taken by a basic consumer camera or even a smartphone camera. The software then reconstructs the speed and trajectory of moving objects by stitching together multiple, distinct 1-D images.
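To make the idea of turning a 2-D video into a stack of 1-D images more concrete, here is a minimal sketch in Python. It is not the CSAIL team's algorithm, only a simplified illustration under stated assumptions: each frame is a plain grid of brightness values covering the floor near the corner, the corner's pixel position is known, and averaging brightness over angular wedges around the corner stands in for the real estimation step. The names `angular_profile` and `space_time_image` are hypothetical.

```python
import math

def angular_profile(frame, corner, num_bins=8):
    """Average brightness in angular wedges fanning out from `corner`.

    `frame` is a list of rows of brightness values; `corner` is the
    (row, col) pixel at the base of the corner. Only the quarter-plane
    beyond the corner is used (an assumption made for simplicity).
    """
    cy, cx = corner
    sums = [0.0] * num_bins
    counts = [0] * num_bins
    for y, row in enumerate(frame):
        for x, value in enumerate(row):
            dy, dx = y - cy, x - cx
            if dy < 0 or dx < 0 or (dy == 0 and dx == 0):
                continue  # skip pixels outside the wedge region
            angle = math.atan2(dy, dx)  # between 0 and pi/2
            b = min(int(angle / (math.pi / 2) * num_bins), num_bins - 1)
            sums[b] += value
            counts[b] += 1
    return [s / c if c else 0.0 for s, c in zip(sums, counts)]

def space_time_image(frames, corner, num_bins=8):
    """Stack one 1-D angular profile per frame, minus the average profile.

    Subtracting the per-wedge average over time removes the static
    scene, so only changes caused by hidden motion remain.
    """
    profiles = [angular_profile(f, corner, num_bins) for f in frames]
    n = len(profiles)
    background = [sum(p[b] for p in profiles) / n for b in range(num_bins)]
    return [[p[b] - background[b] for b in range(num_bins)] for p in profiles]
```

Each row of the result is one 1-D image. A hidden person who slightly dims one wedge of the floor shows up as a negative value in that wedge's column, and the column shifts from row to row as the person moves.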

"Each point on the ground is reflecting light from a partial view of the hidden scene," says Katie Bouman, an electrical engineer who worked on the new study as part of her Ph.D. at MIT CSAIL in Cambridge. "Because different slices of the hidden scene are being reflected from the ground, you can recover and interpret how light is changing in the hidden scene over time."

MIT's "CornerCameras" system can reveal the number of moving people or objects as individual lines on a graph that tracks angular velocity over time. Thicker lines mean objects are closer, while thinner lines mean the objects are farther away. If researchers can observe the reflected light patterns at the base of two adjacent corners—as in the case of a doorway—their software algorithm can even triangulate the approximate location of the moving objects in the hidden scene.
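The two-corner triangulation can be illustrated with basic geometry. The following Python sketch assumes that each corner yields a bearing angle toward the hidden object, measured in a shared floor-plan coordinate system, and intersects the two lines of sight. The function name `triangulate` and the coordinate conventions are assumptions made for illustration, not details taken from the MIT software.

```python
import math

def triangulate(corner_a, angle_a, corner_b, angle_b):
    """Intersect two lines of sight and return the (x, y) meeting point.

    Each corner is an (x, y) position; each angle is the bearing in
    radians from that corner toward the hidden object. Returns None
    when the two lines are nearly parallel and no fix is possible.
    """
    ax, ay = corner_a
    bx, by = corner_b
    dax, day = math.cos(angle_a), math.sin(angle_a)
    dbx, dby = math.cos(angle_b), math.sin(angle_b)
    denom = dax * dby - day * dbx  # 2-D cross product of the directions
    if abs(denom) < 1e-9:
        return None
    # Distance along line A at which it crosses line B.
    t = ((bx - ax) * dby - (by - ay) * dbx) / denom
    return (ax + t * dax, ay + t * day)

# Doorway edges 2 units apart, object seen at 45 and 135 degrees.
position = triangulate((0.0, 0.0), math.pi / 4, (2.0, 0.0), 3 * math.pi / 4)
```

Small errors in either bearing move the intersection point, and the effect grows as the two lines of sight approach parallel, which is consistent with the article describing the recovered location as approximate.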

Such technology could potentially allow self-driving cars to spot a child running out from a corner or behind another vehicle. The U.S. military also has a keen interest in such technology, and the MIT project received funding through the REVEAL program of the U.S. Defense Advanced Research Projects Agency (DARPA).

Other researchers previously developed a system that pinpoints the location of hidden objects by firing millions of laser pulses at the ground and measuring the reflected light. That active laser system can detect even stationary objects with fairly high precision, whereas the new MIT CornerCameras system can only detect moving objects.

But such laser systems work best with no ambient light, rain, or dust to confuse the system. By comparison, the passive MIT system can make use of environmental lighting conditions as long as it's not completely dark. It also seemed to work on a variety of surfaces such as concrete, carpet, brick, and linoleum.

"Even though there are a lot of good ideas for looking around corners, they often require complex algorithms, specialized hardware, or are computationally expensive and impractical to use in real-time scenarios," Bouman says.

Outdoor tests suggest that the MIT system may also function well in the rain. "When we first got it to work on outdoor scenes, that was a really pleasant surprise," says William Freeman, professor of electrical engineering and computer science at MIT.

The MIT CornerCameras system is fairly simple and needs nothing more than a basic webcam or iPhone 5s smartphone camera, along with a laptop to run the software algorithm. That's a big advantage in someday making the system work for a wide range of commercial applications. The active laser system relies on a more extensive set of high-quality electronics to perform its laser-based tracking.

Despite the relative simplicity of MIT's approach, getting this far was no cakewalk. The team began by experimenting with people wearing bright white clothing and walking just out of sight around the corner of a wall or doorway, Freeman explained. Over time, they started to push the system's capability to detect people wearing different-colored clothing at greater distances.

A big next step for the MIT team will be to see if the CornerCameras system works on a moving platform, a necessary feature if it is ever to become part of future collision-avoidance systems in cars. Vickie Ye, a computer vision researcher at MIT and coauthor on the paper, has been working with CSAIL robotics graduate student Felix Naser to test the system's stability while it is mounted on a moving wheelchair. It's a prelude to trying the system out on a moving vehicle.

The team also hopes to begin using machine learning algorithms to automatically interpret the patterns and determine how many objects are moving and what they're doing, Freeman says. The early MIT testing still required the human researchers to eyeball the 1-D videos and interpret what was going on with the moving lines.

It's unlikely that we will all be using our smartphones to peek around corners within the next few years. But in a world filled with uncertainty and surprises, a refined version of the MIT approach could eventually help both cars and humans get a glimpse of what's coming up just ahead.

Jeremy Hsu has been working as a science and technology journalist in New York City since 2008. He has written on subjects as diverse as supercomputing and wearable electronics for IEEE Spectrum. When he’s not trying to wrap his head around the latest quantum computing news for Spectrum, he also contributes to a variety of publications such as Scientific American, Discover, Popular Science, and others. He is a graduate of New York University’s Science, Health & Environmental Reporting Program.