Using artificial intelligence, scientists can now rapidly generate photorealistic color 3D holograms, even on a smartphone. According to a new study, the technology could find use in virtual reality (VR) and augmented reality (AR) headsets and other applications.

A hologram is an image that essentially resembles a 2D window looking onto a 3D scene. The pixels of each hologram scatter light waves falling onto them, making these waves interact with each other in ways that generate an illusion of depth.

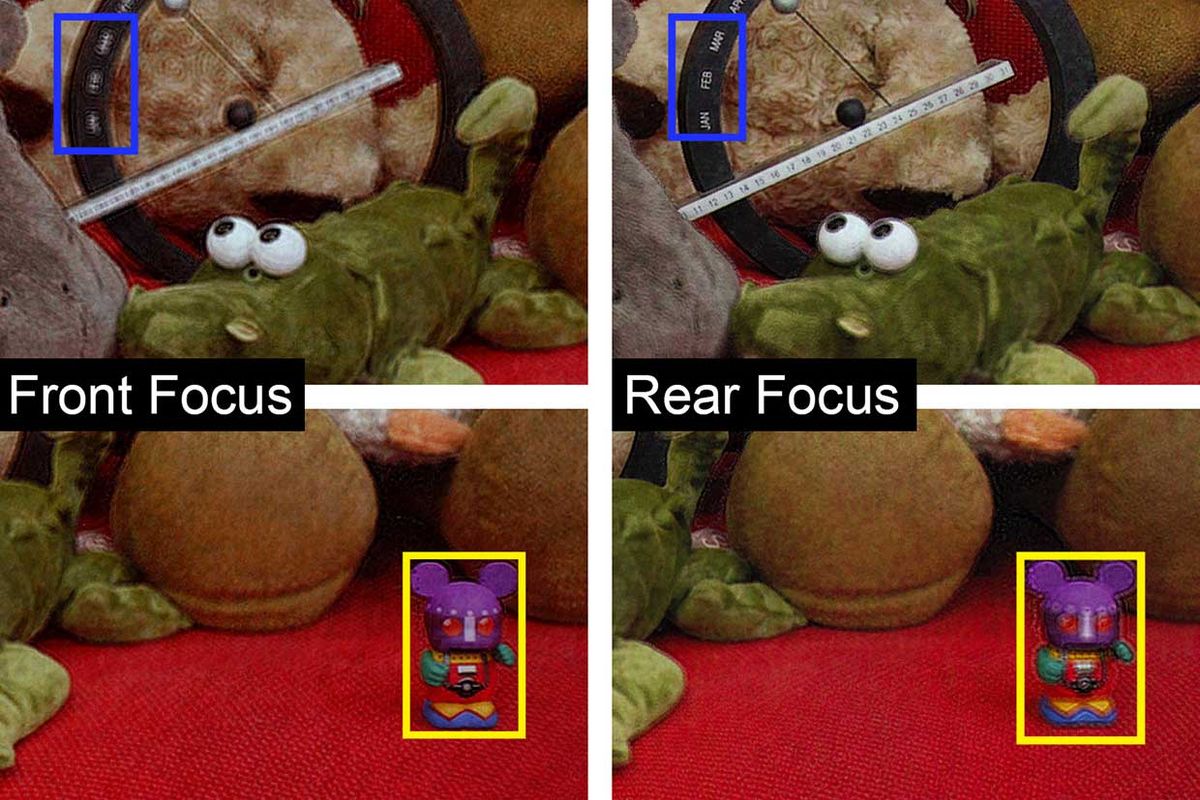

Holographic video displays create 3D images that people can view without feeling eye strain, unlike conventional 3D displays that produce the illusion of depth using 2D images. However, although companies such as Samsung have recently made strides toward developing hardware that can display holographic video, actually generating the holographic data for such devices to display remains a major challenge.

Each hologram encodes an extraordinary amount of data in order to create the illusion of depth throughout an image. As such, generating holographic video has often required a supercomputer’s worth of computing power.

In order to bring holographic video to the masses, scientists have tried a number of different strategies to cut down the amount of computation needed — for example, replacing complex physics simulations with simple lookup tables. However, these often come at the cost of image quality.

Now researchers at MIT have developed a new way to produce holograms nearly instantly: a deep-learning-based method so efficient that it can generate holograms on a laptop in the blink of an eye. They detailed their findings, from research funded in part by Sony, online this week in the journal Nature.

“Everything worked out magically, which really exceeded all of our expectations,” says study lead author Liang Shi, a computer scientist at MIT.

Using physics simulations for computer-generated holography involves calculating the appearance of many chunks of a hologram and then combining them to get the final hologram, Shi notes. Using lookup tables is like memorizing a set of frequently used chunks of hologram, but this sacrifices accuracy and still requires the combination step, he says.
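To make the cost of the physics-based approach concrete, here is a toy sketch of one common way to simulate a hologram, in which every scene point contributes a spherical wave to every hologram pixel. It is not the researchers' code: the function names, the wavelength, and the pixel pitch are all illustrative assumptions.

```python
import cmath
import math

# All numeric values here are illustrative assumptions, not figures from the study.
WAVELENGTH = 532e-9   # green light, in metres (assumed)
PIXEL_PITCH = 8e-6    # spacing between hologram pixels, in metres (assumed)


def point_source_hologram(points, width, height):
    """Sum a spherical wave from every scene point at every hologram pixel.

    points: list of (x, y, z, amplitude) tuples in metres, with z > 0.
    Returns a height-by-width grid (list of lists) of complex field values.
    """
    k = 2 * math.pi / WAVELENGTH  # wavenumber
    field = [[0j] * width for _ in range(height)]
    for row in range(height):
        py = (row - height / 2) * PIXEL_PITCH
        for col in range(width):
            px = (col - width / 2) * PIXEL_PITCH
            total = 0j
            # The innermost loop is the "combination step": one term per scene point.
            for x, y, z, amplitude in points:
                r = math.sqrt((px - x) ** 2 + (py - y) ** 2 + z ** 2)
                total += amplitude / r * cmath.exp(1j * k * r)
            field[row][col] = total
    return field


def operation_count(num_points, width, height):
    """Number of point-to-pixel contributions the brute-force sum evaluates."""
    return num_points * width * height


# A hypothetical two-point scene rendered onto a tiny 16 x 16 hologram.
scene = [(0.0, 0.0, 0.10, 1.0), (1e-4, -1e-4, 0.12, 0.5)]
hologram = point_source_hologram(scene, width=16, height=16)
```

The work grows with the number of scene points times the number of hologram pixels. If a scene supplied one point per pixel at 1,920 by 1,080 resolution (an assumption made only for scale), `operation_count` would come to roughly 4.3 trillion contributions per hologram.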

In a way, computer-generated holography is a bit like figuring out how to cut a cake, Shi says. Using physics simulations to calculate the appearance of each point in space is a time-consuming process that resembles using eight precise cuts to produce eight slices of cake. Using lookup tables for computer-generated holography is like marking the boundary of each slice before cutting. Although this saves a bit of time by eliminating the step of calculating where to cut, carrying out all eight cuts still takes up a lot of time.

In contrast, the new technique uses deep learning to essentially figure out how to cut a cake into eight slices using just three cuts, Shi says. The convolutional neural network—a system that roughly mimics how the human brain processes visual data—learns shortcuts to generate a complete hologram without needing to separately calculate how each chunk of it appears, “which will reduce total operations by orders of magnitude,” he says.

The researchers first built a custom database of 4,000 computer-generated images, each of which included color and depth information for every pixel. The database also included a 3D hologram corresponding to each image.

Using this data, the convolutional neural network learned how best to generate holograms from the images. It could then produce new holograms from images with depth information, which comes with typical computer-generated images and can be calculated from a multi-camera setup or from lidar sensors, both of which are standard on some new iPhones.

The new system requires less than 620 kilobytes of memory, and can generate 60 color 3D holograms per second with a resolution of 1,920 by 1,080 pixels on a single consumer-grade GPU. The researchers could run it on an iPhone 11 Pro at a rate of 1.1 holograms per second and on a Google Edge TPU at a rate of 2 holograms per second, suggesting it could one day generate holograms in real time on future VR and AR mobile headsets.
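A quick back-of-the-envelope calculation shows how far the mobile results are from the desktop rate. The sketch below uses only the figures reported above; treating 60 holograms per second as the real-time target is an assumption made for illustration, and the variable and function names are hypothetical.

```python
# Figures reported in the article.
WIDTH, HEIGHT = 1920, 1080
GPU_RATE = 60.0        # holograms per second on a consumer-grade GPU
IPHONE_RATE = 1.1      # holograms per second on an iPhone 11 Pro
EDGE_TPU_RATE = 2.0    # holograms per second on a Google Edge TPU


def ms_per_hologram(rate):
    """Time spent on one hologram, in milliseconds, at a given rate."""
    return 1000.0 / rate


def speedup_needed(rate, target=GPU_RATE):
    """How many times faster a device must get to reach the target rate.

    Using the GPU rate as the target is an assumption, not a claim from the study.
    """
    return target / rate


pixels_per_hologram = WIDTH * HEIGHT
pixels_per_second_on_gpu = pixels_per_hologram * GPU_RATE

for name, rate in [("GPU", GPU_RATE),
                   ("iPhone 11 Pro", IPHONE_RATE),
                   ("Edge TPU", EDGE_TPU_RATE)]:
    print(f"{name}: {ms_per_hologram(rate):.1f} ms per hologram, "
          f"{speedup_needed(rate):.1f}x speedup needed for {GPU_RATE:.0f} per second")
```

On these numbers, the GPU has about 16.7 milliseconds per hologram, while the iPhone takes roughly 909 milliseconds and would need to be about 55 times faster to match it; the Edge TPU would need to be 30 times faster.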

Real-time 3D holography might also help enhance so-called volumetric 3D printing techniques, which create 3D objects by projecting images onto vats of liquid and can generate complex hollow structures. The scientists note their technique could also find use in optical and acoustic tweezers useful for manipulating matter on a microscopic level, as well as holographic microscopes that can analyze cells and conventional static holograms for use in art, security, data storage and other applications.

Future research might add eye-tracking technology to speed up the system by creating holograms that are high-resolution only where the eyes are looking, Shi says. Another direction is to generate holograms with a person's visual acuity in mind, so users with eyeglasses don’t need special VR headsets matching their eye prescription, he adds.

Charles Q. Choi is a science reporter who contributes regularly to IEEE Spectrum. He has written for Scientific American, The New York Times, Wired, and Science, among others.