Q&A: This Autocompletion Tool Aims to Supercharge Your Coding

Deep TabNine uses natural-language processing to auto-generate code in 22 programming languages

Machine learning can already help you write emails faster by suggesting quick responses or finishing your sentences. But what if the concept behind Google’s Smart Compose could also help software developers be more productive?

That’s what Jacob Jackson aims to do with Deep TabNine, a code autocompletion tool. Jackson, a computer science undergraduate student at the University of Waterloo, in Canada, and a former intern at AI research company OpenAI, first launched TabNine as a code completion plug-in in November 2018, and then added deep-learning capabilities to create what’s now known as Deep TabNine.

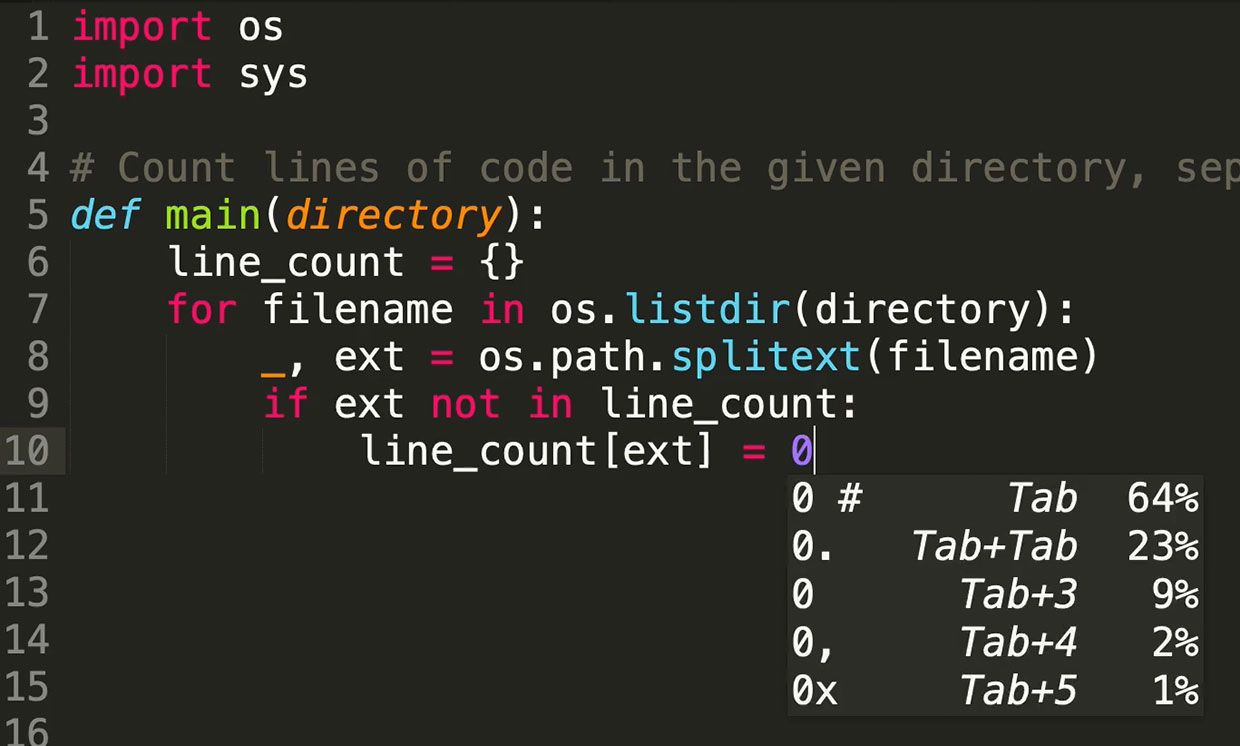

Deep TabNine uses GPT-2, a natural-language-processing model designed by OpenAI, to generate relevant coding suggestions and predict the elements in each line of code. Similar to how OpenAI trained GPT-2 on a data set of 8 million web pages to “predict the next word given all of the previous words within some text,” Jackson trained the GPT-2-based Deep TabNine on an estimated 2 million files from GitHub, the source-code-hosting and version-control platform, to “predict each token given the tokens that come before it.”

Deep TabNine works with existing code editors and integrated development environments (IDEs) and currently supports 22 programming languages, including C++, CSS, HTML, Java, JavaScript, PHP, Python, and SQL.

Jackson spoke to IEEE Spectrum about Deep TabNine and how it could help software developers focus on solving problems, rather than on navigating the intricacies of a programming language.

This interview has been edited and condensed for clarity.

IEEE Spectrum: What gave you the idea for Deep TabNine?

Jacob Jackson: I was working at a software company and there were two kinds of autocompletion tools available: one that understood the language quite well but was slow, and one that was fast but wasn’t good. I wanted [something] in between, where it was really fast but smarter than the other tools out there. That evolved into developing features like using a deep-learning model to rank the completions and trying to figure out common patterns [in code]. That was the founding goal, and then GPT-2 was released, and I saw that this deep learning technology was heading to a point where it was really good. Given that TabNine was an autocompleter, I thought it was a natural fit.

IEEE Spectrum: What more can you tell us about how Deep TabNine works?

Jackson: It’s based on GPT-2, and the way GPT-2 works is that you feed it a sequence of tokens. You can think of one token as one word, and if you have a sequence of words, then it will give you a distribution of the words you’re going to see next. So to get the completion suggestion, you run this model many times and ask it what token it thinks will come next, and then you run it again [on actual code] and that’s when you get the [list of suggested] tokens. Although it does have some understanding of documentation and syntax, all that is learned naturally [through] giving it code and using it to predict what comes next.
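The loop Jackson describes, in which a model returns a distribution over the next token and is run repeatedly to build a suggestion, can be illustrated with a toy sketch. This is not Deep TabNine's code and it does not use GPT-2: a simple count of which token follows which stands in for the neural network, and it looks only at the single preceding token rather than the whole sequence. The function names, the tokenizer, and the three-line corpus are all hypothetical, chosen only for illustration.

```python
import re
from collections import Counter, defaultdict


def tokenize(code):
    # Hypothetical tokenizer: identifiers, numbers, or single symbols.
    return re.findall(r"[A-Za-z_]\w*|\d+|\S", code)


def train(files):
    # "Training" here is just counting which token follows which.
    # A real model such as GPT-2 learns from the full preceding sequence.
    counts = defaultdict(Counter)
    for text in files:
        tokens = tokenize(text)
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    return counts


def next_token_distribution(counts, context):
    # Probability of each candidate next token, given the last token only.
    followers = counts.get(context[-1], Counter()) if context else Counter()
    total = sum(followers.values())
    return {tok: n / total for tok, n in followers.items()} if total else {}


def complete(counts, context, max_tokens=4):
    # Greedy decoding: ask for the next-token distribution, append the
    # most likely token, and repeat on the extended sequence.
    sequence = list(context)
    suggestion = []
    for _ in range(max_tokens):
        dist = next_token_distribution(counts, sequence)
        if not dist:
            break
        best = max(dist, key=dist.get)
        suggestion.append(best)
        sequence.append(best)
    return suggestion


# Made-up training data standing in for source files.
corpus = [
    "for i in range(n): total += i",
    "for j in range(m): print(j)",
    "for k in range(10): pass",
]

counts = train(corpus)
print(next_token_distribution(counts, tokenize("for x in")))  # {'range': 1.0}
print(complete(counts, tokenize("for x in"), max_tokens=2))   # ['range', '(']
```

Nothing in the sketch encodes Python syntax; the suggestion `range (` falls out of the examples alone, which mirrors Jackson's point that the model's grasp of syntax is learned from code rather than programmed in.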

IEEE Spectrum: What was hard about developing Deep TabNine?

Jackson: The biggest challenge in applying deep learning is that these models are computationally intensive, and because we require a high-performing and highly responsive system, that’s a problem. We started offering Deep TabNine as a cloud service, so even if your computer isn’t that powerful, you can still use it. We also recently released TabNine Local, which lets you run Deep TabNine on your own computer.

IEEE Spectrum: Does deep learning also make it easier to port the implementation to different languages?

Jackson: Yes. The advantage of this language-agnostic approach is that all its knowledge is obtained from examples of code in [a particular programming] language, so it’s easy to add support for new languages.

IEEE Spectrum: How does Deep TabNine differ from other autocompletion tools or similar features that IDEs and code editors have?

Jackson: A lot of the tools out there only work for one language or a few languages. Also, to my knowledge, this is the only autocompleter that uses deep learning, and I think that improves the suggestion quality.

IEEE Spectrum: What can developers expect from Deep TabNine?

Jackson: The goal is to make [coding] easier. Let’s say you have an idea and you want to convert that to code, but in order to write code, you have to type it into a keyboard, look at the editor, and make sure you don’t make a mistake. I think the value of a tool that reduces that friction is, it lets you focus more on the high-level stuff. You’ll spend less time typing, and it can complete the simple things for you. You don’t have to waste time thinking about that.

IEEE Spectrum: What’s next for Deep TabNine?

Jackson: In machine learning, you can always work on improving the model, improving the data, [creating] better suggestions. Documentation is one area we’re thinking about—having local, easier-to-access documentation.

With Deep TabNine, the analogy I use sometimes is that we want it to be like using a keyboard as opposed to a [smartphone] keyboard. It’s not like the keyboard is writing your code for you, [but] it makes it a little bit easier, and you have to think about it a little bit less. You would be a lot more productive with your keyboard than a [smartphone] keyboard, and that’s essentially what we’re going for.

—

Deep TabNine is currently available in beta, and anyone can sign up for free access.

Rina Diane Caballar is a journalist and former software engineer based in Wellington, New Zealand.