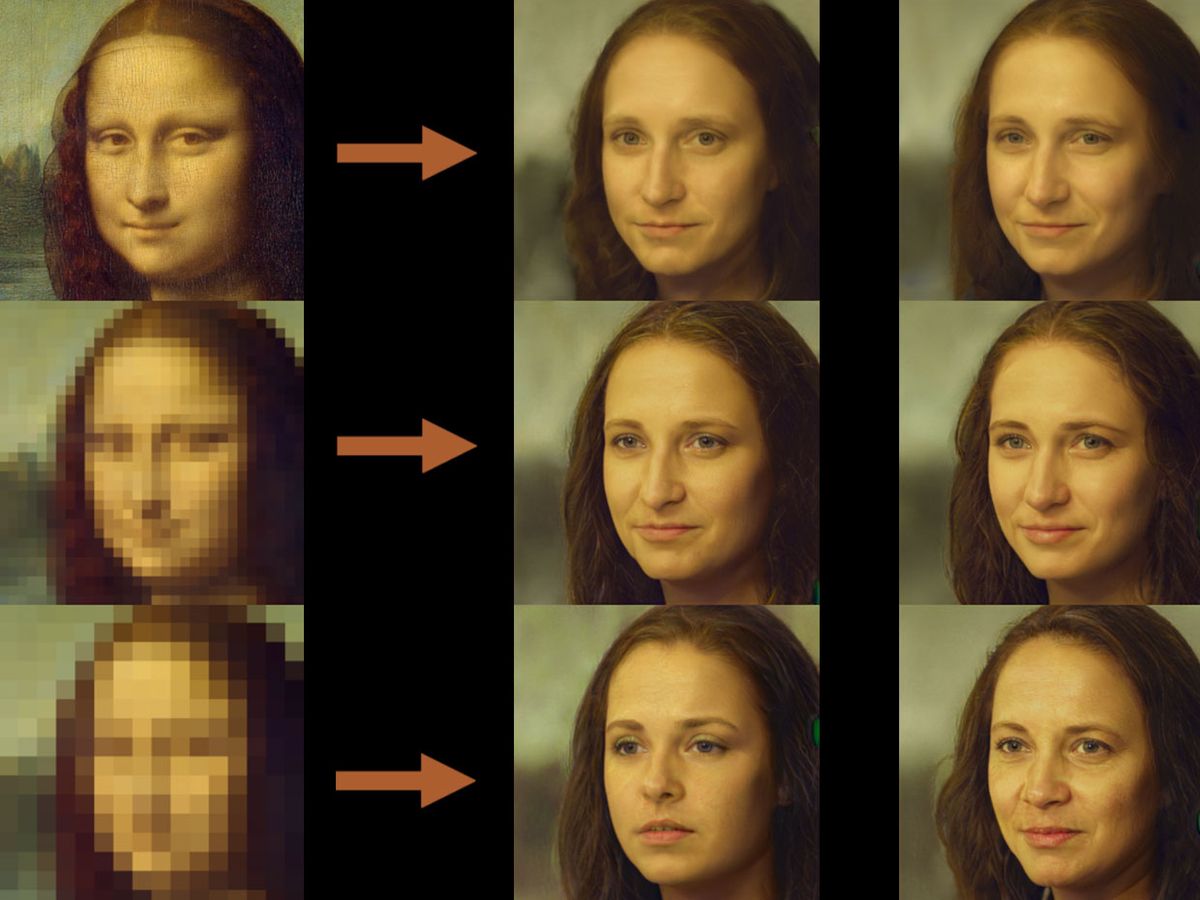

One more trope of Hollywood spy movies has now taken at least a partial turn toward science-fact. You’ve seen it: To save the world, the secret agent occasionally needs a high-res picture of a human face recovered from a very blurry, grainy, or pixelated image.

Now, artificial intelligence has delivered a partial (though probably ultimately unhelpful) basket of goods for that fictional spy. It’s only partial because Claude Shannon’s Theory of Information Entropy always gets the last laugh. As a new algorithm now demonstrates for anyone to see, an AI-generated photorealistic face “upsampled” from the low-res original probably looks very little like the person our hero is racing the clock to track down. There may even be no resemblance at all.

Sorry, Mr. Bond and Ms. Faust. Those handful of pixels in the source image contain only so much information. Because, however convincingly the AI renders that imaginary face—and computer-generated faces can be quite uncanny these days—there’s no dodging the fact that the original image was, in fact, very information-sparse. No one, not even someone with a license to kill, gets to extract free information out of nowhere.

But that’s not the end of the story, says Cynthia Rudin, professor of computer science at Duke University in Durham, N.C. There may be other kinds of value to be extracted from the AI algorithm she and her colleagues have developed.

For starters, Rudin said, “We kind of proved that you can’t do facial recognition from blurry images because there are so many possibilities. So zoom and enhance, beyond a certain threshold level, cannot possibly exist.”

However, Rudin added that “PULSE,” the Python module her group developed, could have wide-ranging applications beyond just the possibly problematic “upsampling” of pixelated images of human faces. (Though it’d only be problematic if misused for facial recognition purposes. Rudin said there are no doubt any number of unexplored artistic and creative possibilities for PULSE, too.)

Rudin and four collaborators at Duke developed their Photo Upsampling via Latent Space Exploration algorithm (accepted for presentation at the 2020 Conference on Computer Vision and Pattern Recognition conference earlier this month) in response to a challenge.

“A lot of algorithms in the past have tried to recover the high-resolution image from the low-res/high-res pair,” Rudin said. But according to her, that’s probably the wrong approach. Most real-world applications of this upsampling problem would involve having access to only the low-res original image. That would be the starting point from which one would try to recreate the high-resolution equivalent of that low-res original.

“When we finally abandoned trying to come up with the ground truth, we then were able to take the low-res [picture] and try to construct many very good high-res images,” Rudin said.

So while PULSE looks beyond the failure point of facial recognition applications, she said, it may still find applications in fields that grapple with their own blurry images—among them, astronomy, medicine, microscopy, and satellite imagery.

Rudin cautions: So long as anyone using PULSE understands that it generates a broad range of possible images, any one of which could be the progenitor of the blurry image that’s available, PULSE has potential to give researchers a better understanding of a given imaginative space.

Say, for instance, an astronomer has a blurry image of a black hole. Coupled with an AI imaging tool that generates astronomical images, PULSE could render many possible astrophysical scenarios that might have yielded that low-res photograph.

At the moment, PULSE is optimized for human faces, because NVIDIA already developed an AI “generative adversarial network” (GAN) that creates photorealistic human faces. So the applications the PULSE team explored built atop NVIDIA’s StyleGAN algorithm.

In other words, PULSE, provides the sorting and exploring tools that sit atop the GAN that, on its own, mindlessly sprays out endless supplies of images of whatever it has been trained to make.

Rudin also sees a possible PULSE application in the field of architecture and design.

“There’s not a StyleGAN for that many other things right now,” she said. “It’d be nice to be able to generate rooms. If you had just a few pixels, it’d be nice to develop a full picture of a room. That would be cool. And that’s probably coming.

“Anytime you have that kind of generative modeling, you can use PULSE to search through that space,” she said.

And so long as searching through that space doesn’t involve a ticking timebomb set to detonate when it hits “00:00,” this PULSE may still ultimately open more doors than it blows off its hinges.

Margo Anderson is senior associate editor and telecommunications editor at IEEE Spectrum. She has a bachelor’s degree in physics and a master’s degree in astrophysics.