Back in my days as an undergraduate student, campus police relied on their “judgment” to decide who might pose a threat to the campus community. The fact that they would regularly pass by white students, under the presumption that they belonged there, in order to interrogate one of the few black students on campus was a strong indicator that officers’ judgment—individual and collective—was based on flawed application of a limited data set. Worse, it was an issue that never seemed to respond to the “officer training” that was promised in the wake of such incidents.

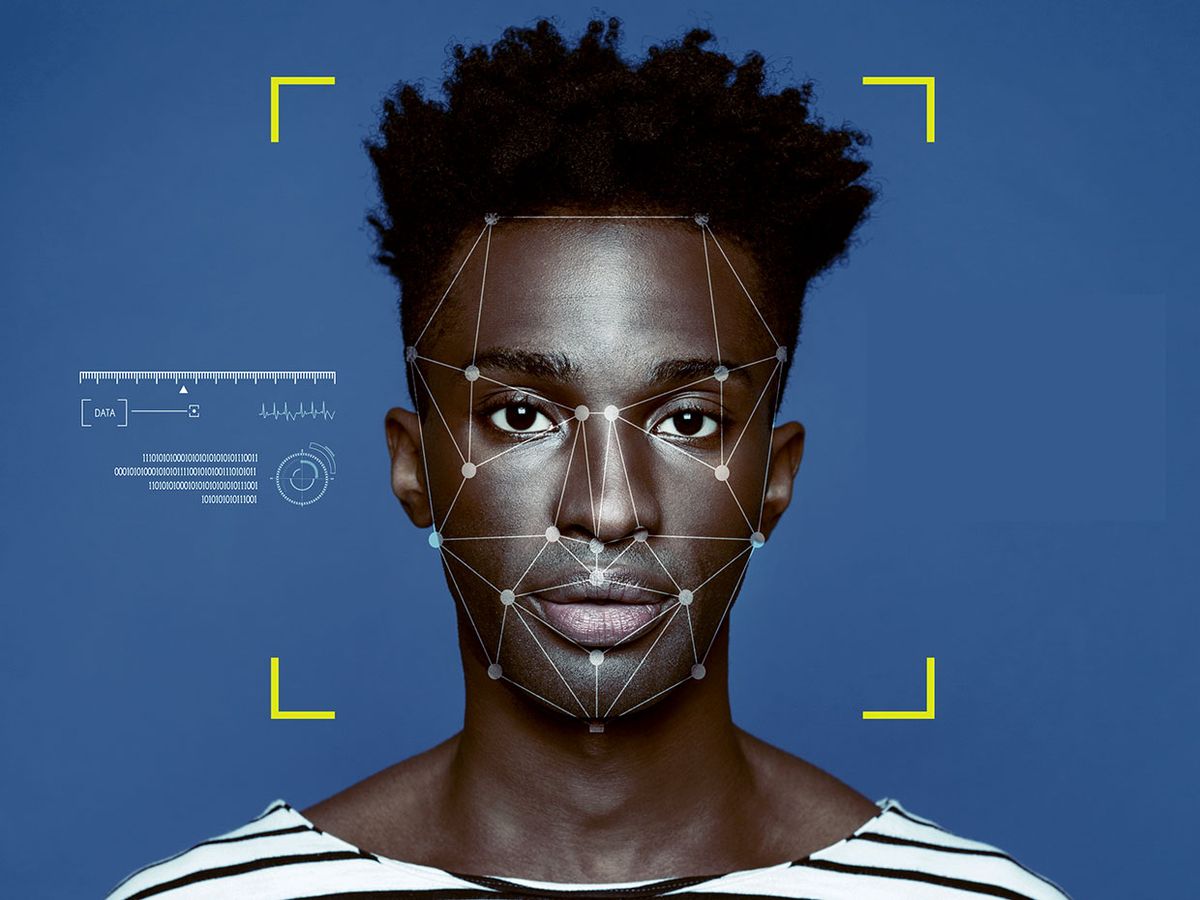

Nearly 30 years later, some colleges are looking to avoid accusations of racial profiling by letting artificial intelligence exercise its judgment about who belongs on their campuses. But facial recognition systems offer no escape from bias. Why? Like campus police, their results are too often based on flawed application of a limited data set.

One of the first schools to openly acknowledge its intention to automate its vetting of people stepping onto its grounds was UCLA. School administrators decided to employ facial recognition in an attempt to identify every person captured by its campus-wide network of cameras. But last week, the university’s administration reversed course when it got word that a Boston-based digital rights nonprofit called Fight for the Future had followed UCLA’s plan to its logical conclusion with chilling results.

Fight for the Future says it used Rekognition, Amazon’s commercially available facial recognition software, to compare publicly available photos of just over 400 members of the UCLA campus community—including faculty members and players on the varsity basketball and football teams—with images in a mugshot database.

The system returned 58 false positive matches linking students and faculty to actual criminals. Bad as that was, the pattern of errors was worse: It showed that algorithms are no less biased than humans. According to a Fight for the Future press release, “The vast majority of incorrect matches were of people of color. In many cases, the software matched two individuals who had almost nothing in common beyond their race, and claimed they were the same person with ‘100% confidence.’”

“This was an effective way of illustrating the potential danger of these racially-biased systems,” says Evan Greer, deputy director of Fight for the Future. “Imagine a basketball player walking across UCLA’s campus being mislabeled by this system and swarmed by police who are led to believe that he is wanted for a serious crime. How might that end?”

Greer says that the rise of facial recognition has put Fight for the Future in unfamiliar territory: “We’re usually out there fighting against government limitations on the use of technology. But [facial recognition] is such a uniquely dangerous form of surveillance that we’re dead set against it.” Greer insists that “Facial recognition has no place on college campuses,” telling IEEE Spectrum that, “It exacerbates a preexisting problem—which is campus police disproportionately stopping, searching, and arresting black and Latino people.”

It’s not news that one of the obvious problems with facial recognition systems is the tendency of the algorithms to exhibit the same prejudices and misperceptions held by their human programmers. Still, producers of these systems continue to peddle them with little concern for the consequences, despite multiple reminders that facial recognition is not ready for prime time.

“The technology has been continuously accused of being biased against certain groups of people,” Marie-Jose Montpetit, chair of the IEEE P7013 working group focused on developing application standards for automated facial analysis technology, told The Institute last September. “I think it’s important for us to define mechanisms to make sure that if the technology is going to be used, it’s used in a fair and accurate way.”

Back in 2016, mathematician and data scientist Cathy O’Neil released the book Weapons of Math Destruction: How Big Data Increases Inequality and Threatens Democracy. It pointed out that big data—which includes facial recognition databases—continues to be attractive because of the assumption that it’s eliminating human subjectivity and bias. But O’Neil told IEEE Spectrum that predictive models and algorithms are really just “opinions embedded in math.”

It should be clear by now what those opinions are. In 2018, the American Civil Liberties Union revealed that it had used Amazon’s Rekognition system to compare the photos of members of the U.S. Congress against a database of 25,000 publicly available mug shots. The result: The system wrongly indicated that 28 members of Congress had previously been arrested. As in this year’s test using UCLA students and faculty, a disproportionate share of the false positive results linked black and Latino legislators with criminality.

That same year, MIT researcher Joy Buolamwini ran the photo of herself that accompanied her TED talk bio through several facial recognition systems. One did not detect her face at all; one indicated that the face in her picture belonged to a male; another simply misidentified her. This triggered a systematic investigation in which Buolamwini and colleagues at the MIT Media Lab analyzed how these systems responded to 1,270 unique faces. The investigation, known as the Gender Shades study, revealed that severe gender and skin-type bias in facial recognition algorithms is far more than anecdotal.

In the researchers’ evaluation of three classifier algorithms whose task was to indicate whether the person in an image was male or female, the systems were most accurate when the image was of a male with pale skin (an error rate of 0.3 percent at most). They did a comparatively poor job labeling women in general, and performed even worse the darker a person’s skin. In the worst case, a system misclassified as male 34.7 percent of the images of dark-skinned women it was shown.

With such glaring racial and gender discrepancies yet to be fixed, it stands to reason that in the recent test, noted UCLA law professor Kimberlé Crenshaw—the black woman who coined the term intersectionality to refer to the way that, say, racism and sexism combine and accumulate to heighten their effect on marginalized people—was wrongly flagged as a person with a criminal background.

So, why, at this point, would a highly regarded school like UCLA even consider using such a system? Fight for the Future’s Greer blamed the companies that produce these systems, saying that UCLA was likely the victim of the firms’ aggressive marketing and assurances that AI would help improve campus security. But UCLA and other institutions have a duty to do their homework. Fortunately, UCLA administrators responded when they heard criticism from members of the campus community and experts in the fields of security, civil rights, and racial justice.

Bottom line: Facial recognition needs additional training using a much broader and more representative array of faces before it’s used in situations that could worsen existing societal bias—up to and including putting marginalized people’s lives in danger.

“Let this be a warning to other schools,” says Greer. “If you think you can get away with experimenting on your students and employees with this invasive technology, you’re wrong. We won’t stop organizing until facial recognition is banned on every campus.”

Willie Jones is an associate editor at IEEE Spectrum. In addition to editing and planning daily coverage, he manages several of Spectrum's newsletters and contributes regularly to the monthly Big Picture section that appears in the print edition.