AI Can Edit Photos With Zero Experience

A new technique called Double-DIP deploys deep learning to polish images without prior training

Imagine showing a photo taken through a storefront window to someone who has never opened her eyes before, and asking her to point to what’s in the reflection and what’s in the store. To her, everything in the photo would just be a big jumble. Computers can perform image separations, but to do it well, they typically require handcrafted rules or many, many explicit demonstrations: here’s an image, and here are its component parts.

New research finds that a machine-learning algorithm given just one image can discover patterns that allow it to separate the parts you want from the parts you don’t. The multi-purpose method might someday benefit any area where computer vision is used, including forensics, wildlife observation, and artistic photo enhancement.

Many tasks in machine learning require massive amounts of training data, which is not always available. A team of Israeli researchers is exploring what they call “deep internal learning,” where software figures out the internal structure of a single image from scratch. Their new work builds on a recent advance from another group, a method called Deep Image Prior, or DIP. (Spoiler: The new method is called Double-DIP.)

Deep Image Prior uses deep learning, a technique involving multi-layer neural networks. Broadly, a DIP is trained to reproduce a specific given image. First, you feed the network a random input and it outputs a mishmash of pixels. It compares its output with the given image and adjusts its internal parameters to produce something closer to that image the next time. This process repeats hundreds of times for the same target image.
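The fitting loop described above can be sketched in a few lines. This is a toy illustration only, not the DIP authors' code: a single linear layer stands in for the deep convolutional network, a short list of numbers stands in for the image, and the names `fit_single_image`, `code_size`, and `steps` are made up for this example. It shows the mechanics (fixed random input, compare with the target, adjust, repeat) but none of the image prior that makes DIP useful.

```python
import random


def fit_single_image(target, code_size=8, steps=300, seed=0):
    """Fit a toy one-layer 'network' to a single target by gradient descent."""
    rng = random.Random(seed)
    # Fixed random input: drawn once and never changed during fitting.
    z = [rng.gauss(0.0, 1.0) for _ in range(code_size)]
    # Trainable parameters, randomly initialized, so the first output is a mishmash.
    weights = [[rng.gauss(0.0, 0.1) for _ in range(code_size)] for _ in target]
    # Step size scaled by the input's squared norm so every update shrinks the error.
    lr = 0.1 / sum(v * v for v in z)

    def forward():
        return [sum(w * v for w, v in zip(row, z)) for row in weights]

    losses = []
    for _ in range(steps):
        errors = [o - t for o, t in zip(forward(), target)]
        losses.append(sum(e * e for e in errors))
        # Gradient of the squared error w.r.t. weights[i][j] is 2 * errors[i] * z[j].
        for row, e in zip(weights, errors):
            for j, v in enumerate(z):
                row[j] -= lr * 2.0 * e * v
    return forward(), losses


# A hypothetical six-sample "image".
target = [0.0, 0.2, 0.9, 1.0, 0.4, 0.1]
output, losses = fit_single_image(target)
```

After a few hundred updates, `output` closely matches `target`, and `losses` records how the mismatch shrinks along the way. In the real method, the interesting behavior comes from the convolutional architecture, which this sketch deliberately leaves out.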

Critically, the DIP uses a kind of neural network that mirrors the way the brain processes visual information, seeking hierarchies of repeating features, from edges and corners up to, say, limbs and animals. This structure acts as a kind of prior expectation that the world will have patterns on multiple scales, which it does. So, if there is something wrong with the given image—such as the presence of dust or blank spots—the network imposes its own expectations, overriding the target’s flaws and, under the right conditions, producing something even more realistic. You end up with a better-looking version of the image you started with, with specks removed, and blank spots filled.

The Double-DIP combines two DIPs in parallel. They each turn a random input into an image, and the two images are superimposed. The combined image is compared with a target image, and the DIPs independently tweak their parameters so their outputs add up to something closer. What ends up happening is that each DIP focuses on a set of visual features or patches that are internally similar, and which also complement those of the other DIP. You get two images that are cohesive, though different from one another, and which combine to form the target.
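The coupling between the two networks can be sketched the same way. Again, this is a toy under stated assumptions rather than the researchers' implementation: two linear layers replace the two deep networks, the function name `fit_two_generators` is hypothetical, and the only thing demonstrated is that both generators are updated from one shared reconstruction error on their sum.

```python
import random


def fit_two_generators(target, code_size=8, steps=300, seed=0):
    """Fit two toy generators whose outputs, added together, match one target."""
    rng = random.Random(seed)
    # Each generator gets its own fixed random input.
    codes = [[rng.gauss(0.0, 1.0) for _ in range(code_size)] for _ in range(2)]
    # Each generator gets its own trainable weights.
    weights = [
        [[rng.gauss(0.0, 0.1) for _ in range(code_size)] for _ in target]
        for _ in range(2)
    ]
    # Step size scaled by the total squared norm of both inputs for stable updates.
    lr = 0.1 / sum(v * v for z in codes for v in z)

    def forward(w, z):
        return [sum(a * b for a, b in zip(row, z)) for row in w]

    for _ in range(steps):
        parts = [forward(w, z) for w, z in zip(weights, codes)]
        # One shared error: the superimposed output versus the target.
        errors = [a + b - t for a, b, t in zip(parts[0], parts[1], target)]
        # Each generator adjusts only its own weights, driven by that shared error.
        for w, z in zip(weights, codes):
            for row, e in zip(w, errors):
                for j, v in enumerate(z):
                    row[j] -= lr * 2.0 * e * v
    return [forward(w, z) for w, z in zip(weights, codes)]


# A hypothetical six-sample "image".
target = [0.0, 0.2, 0.9, 1.0, 0.4, 0.1]
layer_a, layer_b = fit_two_generators(target)
combined = [a + b for a, b in zip(layer_a, layer_b)]
```

Here `combined` reproduces `target`, but the split between `layer_a` and `layer_b` is arbitrary. The meaningful separation described in the article depends on each deep network preferring internally similar patches, which a linear toy cannot capture.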

“I was surprised by the easiness with which two networks split the patches among themselves,” says Michal Irani, a computer scientist at the Weizmann Institute of Science, in Israel, and the paper’s senior author. “It’s like Occam’s razor,” she says, referring to the principle in philosophy that the simpler of two explanations is more likely to be true. “The networks learn the simplest possible explanation.”

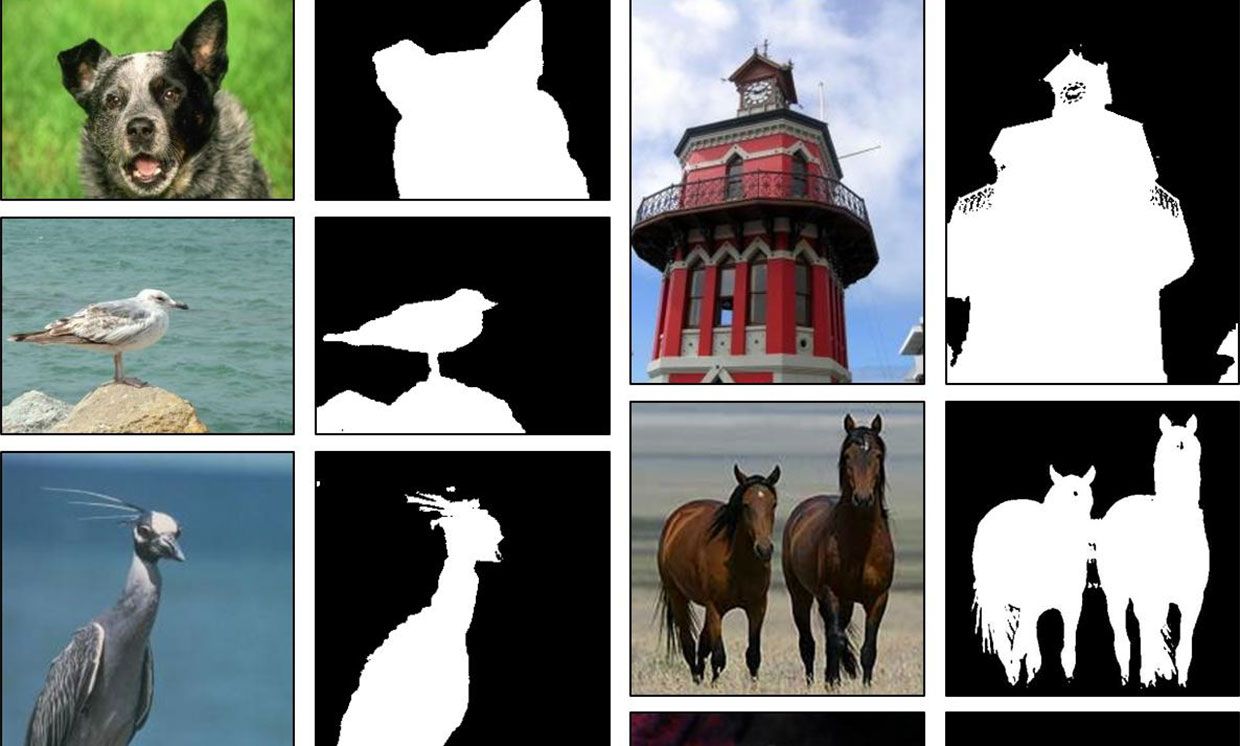

There are some knobs on the algorithm that guide exactly how it splits up images. It can, for instance, separate foreground from background: Shown a zebra on grass, it produces a lone zebra and, separately, an empty field. Under a different setting, it splits images taken through glass into reflections and what’s behind the glass.

Turn another knob, and it takes a hazy image and separates the haze from the city skyline. It dehazes as well as state-of-the-art systems trained on many images. It can also remove watermarks from photos—again, without previous training. The researchers reported their work last month at the Computer Vision and Pattern Recognition conference in Long Beach, California.
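The article does not spell out how haze and scene combine. A common assumption in dehazing work is that each hazy pixel is a blend of the clear scene and a uniform "airlight" color, weighted by how much light gets through: hazy = t × scene + (1 − t) × airlight. The sketch below applies and inverts that blend on a handful of brightness values. The function names and numbers are hypothetical, and the transmission and airlight are simply given here, whereas a method like Double-DIP would have to estimate them from the image.

```python
def add_haze(scene, transmission, airlight):
    """Blend each scene value with the airlight according to its transmission."""
    return [t * j + (1.0 - t) * airlight for j, t in zip(scene, transmission)]


def remove_haze(hazy, transmission, airlight, t_min=0.1):
    """Invert the blend; transmission is clamped to avoid dividing by near-zero."""
    clear = []
    for i, t in zip(hazy, transmission):
        t = max(t, t_min)
        clear.append((i - (1.0 - t) * airlight) / t)
    return clear


# Hypothetical skyline brightness values; haze thickens from left to right.
scene = [0.10, 0.35, 0.60, 0.80]
transmission = [0.9, 0.7, 0.5, 0.3]
airlight = 0.95

hazy = add_haze(scene, transmission, airlight)
recovered = remove_haze(hazy, transmission, airlight)
```

With the true transmission and airlight in hand, `recovered` matches `scene` up to rounding. The hard part, which this sketch skips, is working out those two quantities from a single hazy photo.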

Dmitry Ulyanov, a computer scientist at the Skolkovo Institute of Science and Technology, in Moscow, and the primary author of the original DIP paper, says he and his collaborators designed DIP to study the importance of network architecture (versus data), not to create practical applications. But “in Double-DIP, they proposed four or five more applications,” he adds, “and the experiments are also amazing that they are working, so it’s a quite nice extension.”

Irani says her team is now applying the idea to the cocktail-party problem, by using Double-DIP to separate multiple mixed voices into two or more recordings. She sees “zero-shot” and “few-shot” learning—figuring out a task with zero or few previous training examples—as an important building block in AI.

Matthew Hutson is a freelance writer who covers science and technology, with specialties in psychology and AI. He’s written for Science, Nature, Wired, The Atlantic, The New Yorker, and The Wall Street Journal. He’s a former editor at Psychology Today and is the author of The 7 Laws of Magical Thinking. Follow him on Twitter at @SilverJacket.