At the Optical Networking and Communication Conference in San Francisco, which wrapped up last Thursday, a team of researchers from Intel described a possible solution to a computing problem that keeps data server engineers awake at night: how networks can keep up with our growing demand for data.

The amount of data used globally is growing exponentially. Reports from last year suggest that something like 2.5 quintillion bytes of data are produced each day. All that data has to be routed from its origins—in consumer hard drives, mobile phones, IoT devices, and other processors—through multiple servers as it finds its way to other machines.

“The challenge is to get data in and out of the chip,” without losing information or slowing down processing, said Robert Blum, the Director of Marketing and New Business at Intel. Optical systems, like fiber-optic cables, have been in widespread use as an alternative computing medium for decades, but loss still occurs at the inevitable boundaries between materials in a hybrid optoelectronic system.

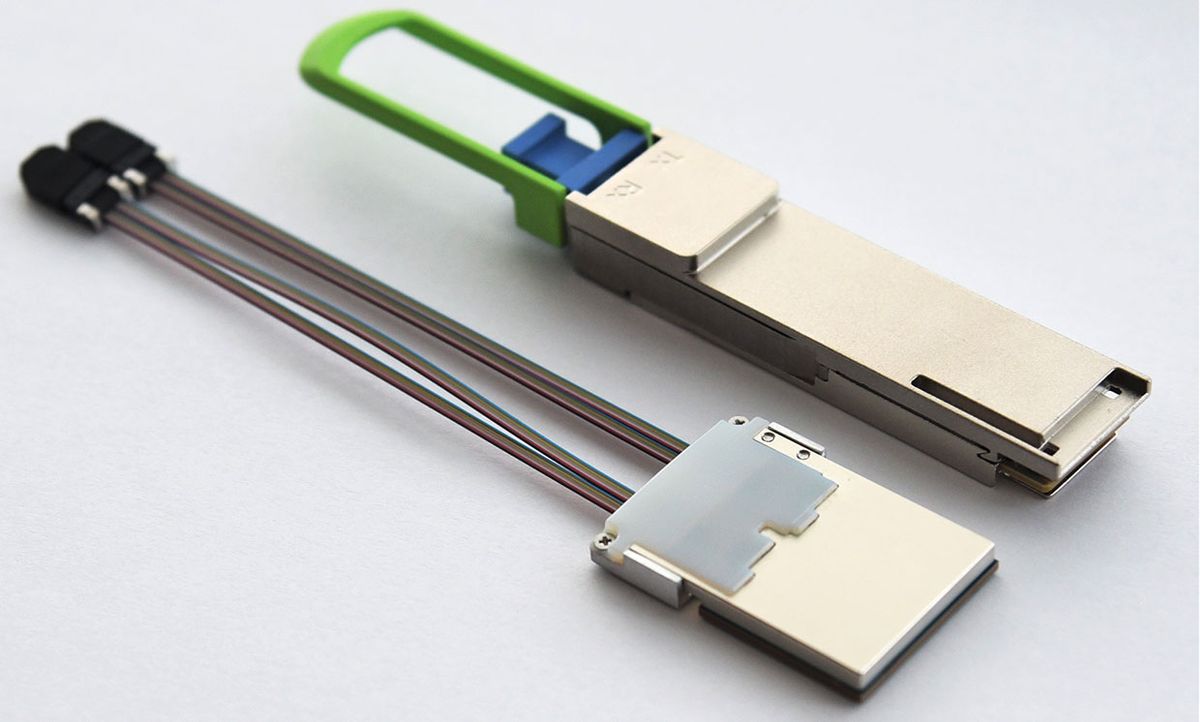

The team at Intel has developed a photonic engine with the equivalent bandwidth of sixteen 100-Gbps transceivers, or four of the latest 12.8-Tbps generation. The standout feature of the new chip is its co-packaging, a method of close physical integration of the necessary electrical components with faster, lossless optical ones.

The close integration of the optical components allows Intel’s engine to “break the wall” of the maximum density of pluggable port transceivers on a switch ASIC, according to Blum. More ports on a switch (the specialized processor that routes data traffic) mean higher processing power, but only so many connectors can fit together before overheating becomes a threat.

The photonic engine brings the optical elements right up to the switch. Optical fibers require less space to connect and improve airflow throughout the server without adding to its waste heat. “With this [co-packaging] innovation, higher levels of bit rates are possible because you are no longer limited by electrical data transfer,” said Blum. “Once you get to optical computing, distance is free: 2 meters, 200 meters, it doesn’t matter.”

Driving huge amounts of high-speed data over the foot-long copper traces, as is necessary in standard server architectures, is also expensive, especially in terms of energy consumption. “With electrical [computation], as speed goes higher, you need more power; with optical, it is literally lossless at any speed,” said lead device integration engineer Saeed Fathololoumi.

“Power is really the currency on which data centers operate,” added Blum. “They are limited by the amount of power you can supply to them, and you want to use as much of that power as possible to compute.”

The co-packaged photonic engine currently exists as a functional demo back at Intel’s lab. The demonstration at the conference used a P4-programmable Barefoot Tofino 2 switch ASIC capable of speeds reaching 12.8 terabits per second, in combination with Intel’s 1.6-Tbps silicon photonics engines. “The optical interface is already the standard industry interface, but in the lab we’re using a switch that can talk to any other switch using optical protocols,” said Blum.
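For readers who want to check how those figures relate, here is a rough back-of-the-envelope sketch in Python. It reads the engine as equivalent to sixteen transceivers at 100 gigabits per second each, and it assumes, purely for illustration, that a switch's full bandwidth would be served by identical engines. That second assumption, along with the variable names, is ours and not something Intel described.

```python
# Figures quoted in the article, expressed in gigabits per second.
TRANSCEIVER_GBPS = 100          # one pluggable transceiver
TRANSCEIVERS_PER_ENGINE = 16    # transceiver equivalents per photonic engine
SWITCH_GBPS = 12_800            # 12.8 Tbps switch ASIC

# Aggregate bandwidth of one photonic engine.
engine_gbps = TRANSCEIVER_GBPS * TRANSCEIVERS_PER_ENGINE

# Illustrative assumption (not from Intel): the whole switch is fed by
# identical engines, so round up to a whole number of engines.
engines_needed = -(-SWITCH_GBPS // engine_gbps)  # ceiling division

print(f"One engine: {engine_gbps / 1000} Tbps")
print(f"Engines to cover the switch: {engines_needed}")
```

Under those assumptions, one engine works out to the 1.6 Tbps quoted above, and eight such engines would match the switch's 12.8 Tbps.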

It’s the first step toward an all-optical input-output scheme, which may offer future data centers a way to cope with the rapidly expanding data demands of the Internet-connected public. For the Intel team, that means working with the rest of the computing industry to define the initial deployments of the new engines. “We’ve proven out the main technical building blocks, the technical hurdles,” said Fathololoumi. “The risk is low now to develop this into a product.”