A new ultrafast machine-vision device can process images thousands of times faster than conventional techniques by using an image sensor that is itself an artificial neural network.

Machine vision technology often uses artificial neural networks to analyze images. In artificial neural networks, components dubbed "neurons" are fed data and cooperate to solve a problem, such as recognizing images. The neural net repeatedly adjusts the strength of the connections or "synapses" between its neurons and sees if the resulting patterns of behavior are better at solving the problem. Over time, the network discovers which patterns are best at computing solutions. It then adopts these as defaults, mimicking the process of learning in the human brain.
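The adjust-and-check loop described above can be sketched in a few lines of Python. This is only a toy illustration, not the method used by the researchers: the single thresholded neuron, the OR-gate training data, the Gaussian step size, and the names `predict`, `count_errors`, and `train` are all assumptions made for the example. Practical networks are usually trained with gradient-based methods rather than random trial and error.

```python
import random

def predict(weights, inputs):
    """One toy 'neuron': weighted sum of inputs plus a bias, thresholded at zero."""
    total = weights[-1] + sum(w * x for w, x in zip(weights, inputs))
    return 1 if total > 0 else 0

def count_errors(weights, examples):
    """Number of training examples the neuron currently gets wrong."""
    return sum(predict(weights, x) != y for x, y in examples)

def train(examples, n_inputs, steps=2000, seed=0):
    """Randomly nudge the 'synapse' strengths and keep nudges that do not hurt."""
    rng = random.Random(seed)
    weights = [0.0] * (n_inputs + 1)  # last entry is the bias
    best = count_errors(weights, examples)
    for _ in range(steps):
        if best == 0:
            break  # every example is already classified correctly
        candidate = [w + rng.gauss(0, 0.5) for w in weights]
        errors = count_errors(candidate, examples)
        if errors <= best:  # adopt the new strengths if they are no worse
            weights, best = candidate, errors
    return weights, best

# Hypothetical training set: the logical OR of two inputs.
OR_EXAMPLES = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]
weights, errors = train(OR_EXAMPLES, n_inputs=2)
print("remaining errors:", errors)
```

Because a nudge is kept only when it does not increase the error count, the number of mistakes can never go up from one step to the next.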

Machine vision is often slowed by the way cameras must scan pixels row by row, convert video frames to digital signals, and transmit that data to computers for analysis. Lukas Mennel, an electrical engineer at TU Wien, and his colleagues sought to speed up machine vision by cutting out the middleman: they created an image sensor that itself constitutes an artificial neural network and can simultaneously acquire and analyze images.

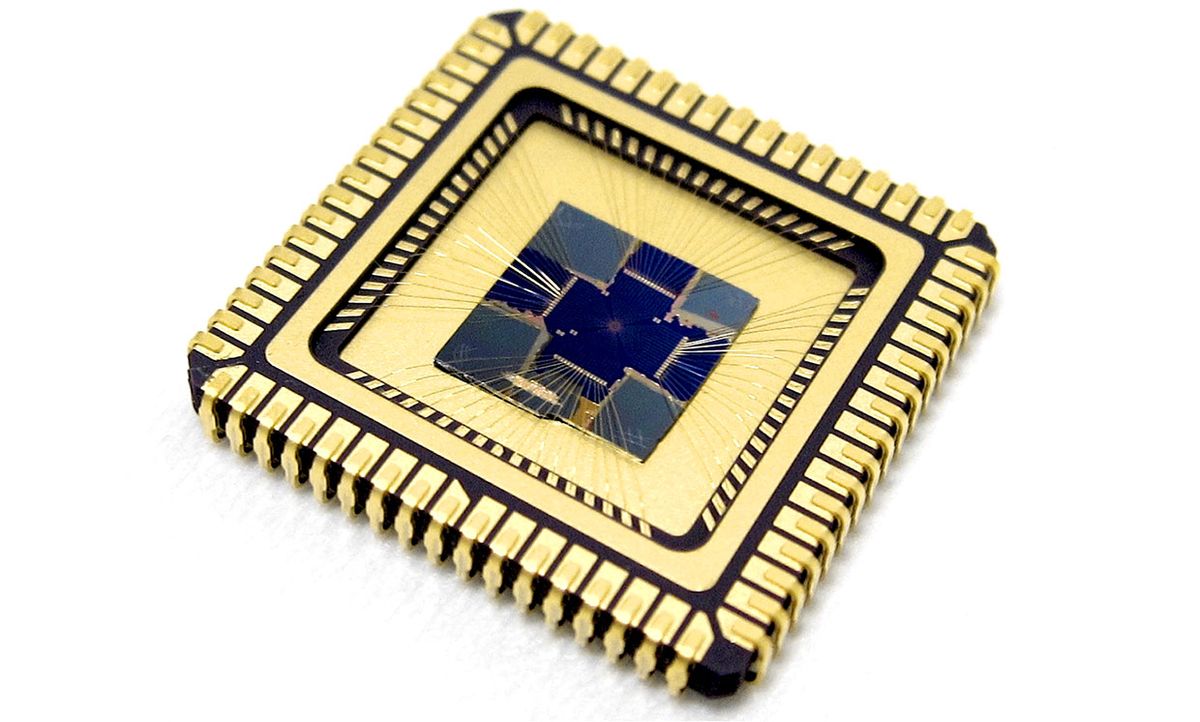

The sensor consists of an array of pixels, each of which represents a neuron. Each pixel in turn consists of a number of subpixels, each of which represents a synapse. Each subpixel is a photodiode based on a layer of tungsten diselenide, a two-dimensional semiconductor with a tunable response to light. This tunable photoresponsivity allowed each photodiode to remember and respond to light in a programmable way. The scientists then created a neural network based on links between these photodiodes that they could train to, for instance, classify images as the letters "n," "v," or "z."
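One way to picture how an array of programmable photodiodes can act as a classifier is as a set of weighted sums. The Python sketch below is a rough illustration under several assumptions the article does not spell out: that each subpixel's photocurrent is its responsivity multiplied by the light falling on its pixel, that responsivities can be positive or negative, and that the k-th subpixels of all pixels feed one shared output line. The 3-by-3 letter patterns, the hand-set responsivities (no training is performed here), and the function names are hypothetical.

```python
# Hypothetical 3x3 light patterns (1 = lit pixel), flattened row by row.
LETTERS = {
    "n": [1, 1, 1,
          1, 0, 1,
          1, 0, 1],
    "v": [1, 0, 1,
          1, 0, 1,
          0, 1, 0],
    "z": [1, 1, 0,
          0, 1, 0,
          0, 1, 1],
}
CLASSES = list(LETTERS)  # one subpixel per class in every pixel

def make_responsivities(templates):
    """responsivity[pixel][subpixel]: +1 where that class's pattern is lit, else -1.

    These values are set by hand for illustration, in arbitrary units.
    """
    classes = list(templates)
    n_pixels = len(templates[classes[0]])
    return [[1.0 if templates[c][p] else -1.0 for c in classes]
            for p in range(n_pixels)]

def sensor_outputs(light, responsivity):
    """Sum the photocurrents of the k-th subpixels over all pixels (assumed wiring)."""
    n_outputs = len(responsivity[0])
    return [sum(responsivity[p][k] * light[p] for p in range(len(light)))
            for k in range(n_outputs)]

def classify(light, responsivity, classes):
    """Pick the class whose output line carries the largest current."""
    currents = sensor_outputs(light, responsivity)
    return classes[currents.index(max(currents))]

R = make_responsivities(LETTERS)
for letter, pattern in LETTERS.items():
    print(letter, "->", classify(pattern, R, CLASSES))
```

In this picture the weighted sums are produced by the photocurrents as the light arrives, so no separate readout, conversion, and computation steps are needed for this stage.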

"Our image sensor does not consume any electrical power when it is operating," Mennel says. "The sensed photons themselves provide the energy for the electric current."

In experiments, the researchers used lasers to project the letters "v" and "n" onto the neural network image sensor. Conventional machine vision technology is usually capable of processing up to 100 frames per second, with some faster systems capable of working up to 1,000 frames per second, Mennel says. In comparison, "our system works with an equivalent of 20 million frames per second," he says.

Mennel notes that the speed at which the system operates is limited only by the speed of the electrons in the circuits. In principle, this strategy could work on the order of picoseconds, or trillionths of a second, which is three to four orders of magnitude faster than currently demonstrated, he says.
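The figures quoted above can be checked with some quick arithmetic. The short Python sketch below uses only the numbers given in the article; the variable and function names are illustrative.

```python
def frame_time_seconds(frames_per_second):
    """Time available per frame at a given frame rate."""
    return 1.0 / frames_per_second

SENSOR_FPS = 20_000_000   # "equivalent of 20 million frames per second"
TYPICAL_FPS = 100         # usual conventional machine vision
FAST_FPS = 1_000          # faster conventional systems

speedup_vs_fast = SENSOR_FPS // FAST_FPS        # 20,000 times
speedup_vs_typical = SENSOR_FPS // TYPICAL_FPS  # 200,000 times
sensor_frame_ns = frame_time_seconds(SENSOR_FPS) * 1e9  # about 50 nanoseconds

# Three to four orders of magnitude faster than the demonstrated frame time.
projected_ps = [sensor_frame_ns * 1e3 / factor for factor in (1_000, 10_000)]

print(f"speedup over 1,000 fps systems: {speedup_vs_fast:,}x")
print(f"speedup over 100 fps systems: {speedup_vs_typical:,}x")
print(f"time per frame: {sensor_frame_ns:.0f} ns")
print(f"projected time per frame: {projected_ps[1]:.0f} to {projected_ps[0]:.0f} ps")
```

At 20 million frames per second each frame takes about 50 nanoseconds, so a further speedup of three to four orders of magnitude would bring the time per frame down to roughly 5 to 50 picoseconds, consistent with the picosecond scale Mennel describes.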

In addition, the scientists note that in principle, they could use computer simulations to train a neural network and transfer that neural network to the device.

What might such a sensor be used for? "At the moment, the applications are mainly rooted in specific scientific applications—for example, fluid dynamics, combustion processes, or mechanical breakdown processes could profit from faster visual data acquisition," Mennel says. "For more complex tasks like machine vision in autonomous driving, much more complexity is needed."

The scientists describe their findings on 4 March in the journal Nature.

Charles Q. Choi is a science reporter who contributes regularly to IEEE Spectrum. He has written for Scientific American, The New York Times, Wired, and Science, among others.