Researchers at IBM reported yesterday that they have created two prototype computer chips whose operation is silicon’s best answer yet to the hyperefficient functioning of animal brains. Both were fabricated using 45-nanometer silicon-on-insulator technology and are designed to mimic the arrangement and behavior of neurons and synapses.

But there is one important difference between the chips. Both have 256 neurons, but one of them has 65 536 synapses that make on-chip learning possible. According to an IBM spokesperson, every bit of data the chip is exposed to causes it to “find new neural connections.” In other words, it learns from its experiences and rewires itself in response.

The other design incorporates 262 144 programmable synapses that are more amenable to off-chip learning. After the chip spends a day processing and storing information, the researchers examine it. The team then “teaches” the chip to handle information more quickly and efficiently by strengthening or weakening the connections between neurons. “In a sense, they’re telling the chip, ‘You should have handled that problem like this,’” says the IBM spokesperson.
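Dividing each chip’s synapse count by its 256 neurons gives a rough sense of how much more densely the second design is wired. This is simple arithmetic on the published totals and assumes the synapses are spread evenly across the neurons, which the figures alone do not establish:

$$
\frac{65\,536\ \text{synapses}}{256\ \text{neurons}} = 256\ \text{per neuron},
\qquad
\frac{262\,144\ \text{synapses}}{256\ \text{neurons}} = 1\,024\ \text{per neuron}
$$

On that reading, the off-chip-learning design carries four times as many synapses per neuron as the on-chip-learning one.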

Both learning modes on display in the new chips seem to push computing beyond the strictly left-brain tasks at which computers excel. Cognitive computers based on such biomimetic processors have demonstrated the ability to steer a car through traffic or to recognize the same person even after he or she has changed clothes and hairstyle.

Unlike today’s computers, whose architecture requires millions of transistors (and tons of electric power) to crunch numbers quickly, the brain is a paragon of efficiency. IBM’s goal, says a spokesperson, “isn’t to build a brain, but to build a computer that takes inspiration from the elegant principles that underlie the brain’s operation.” That disclaimer has probably become the standard opening statement for IBM’s cognitive computing group. It stands to reason, given the contretemps that played out nearly two years ago, when a neuroscientist who heads a brain-simulation group at École Polytechnique Fédérale de Lausanne (EPFL) accused the head of IBM’s brain simulation effort of perpetrating a fraud.

In November 2009, Dharmendra Modha and his colleagues at IBM won a coveted computing award after presenting a paper reporting that they had conducted a simulation that approached the scale of a cat’s brain in terms of the number of neurons and synapses. Henry Markram, of EPFL, cried foul, saying in an open letter to IBM’s chief technical officer and to the media that the Modha team’s simulated brain was “trivial,” “a joke,” and “a PR stunt” and that Modha himself should be strung up by his toes.

IBM has insisted, and continues to insist, that Markram, whose research focus is completely different from Modha’s, missed the point. As IEEE Spectrum’s exhaustive earlier reporting on this tumult explained, Modha’s work is about addressing three major roadblocks on the way to brainlike computing: speed, scaling, and parallelism.

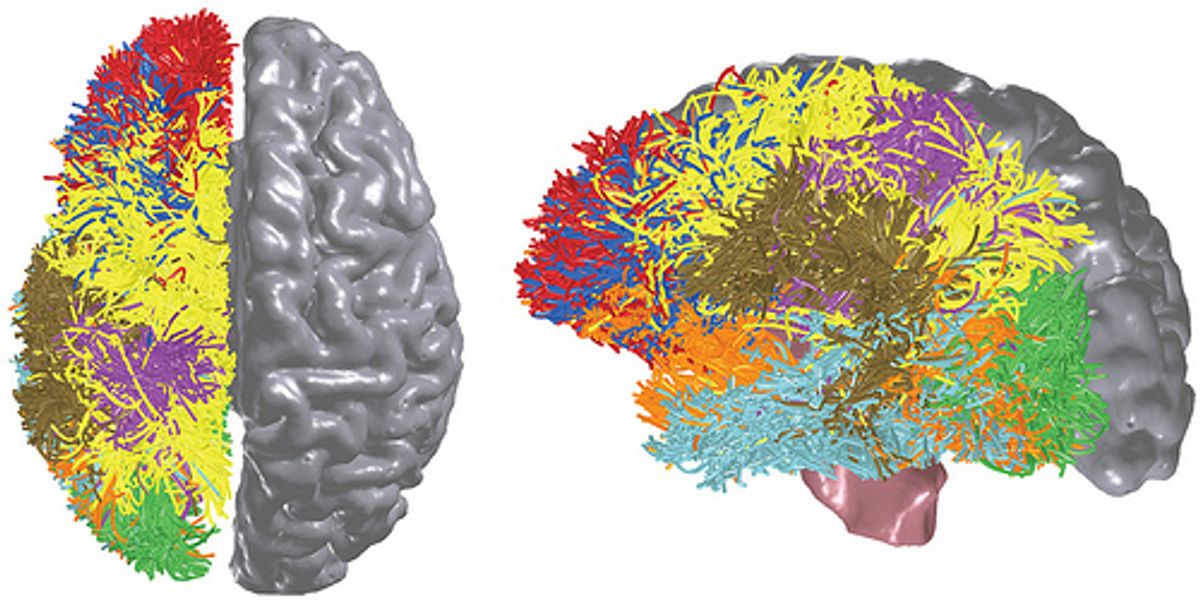

The human brain has about 100 billion neurons connected by more than a quadrillion synapses. The gray matter, which occupies about 1 liter, gets by on a power budget of 20 watts. IBM says its goal is to build a chip with a mere 10 billion neurons connected by 100 trillion synapses. The circuit-based brain would fit inside a 2-liter container and draw about a kilowatt of power. Still, it would represent a dramatic improvement over today’s machines. Roadrunner, the supercomputer that was the first to break the petaflop barrier, weighs 227 tons and draws roughly 3 megawatts.
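The power figures above can be compared with a few lines of arithmetic. The sketch below is a back-of-envelope calculation, not an IBM figure: it takes the article’s round numbers at face value and treats “more than a quadrillion” synapses as exactly 10^15, which makes the brain’s per-synapse power an upper bound. The dictionary and function names are illustrative only.

```python
"""Back-of-envelope power comparison using the round figures quoted in the article.

Assumption: "more than a quadrillion" synapses is treated as exactly 1e15,
so the brain's per-synapse power computed here is an upper bound.
"""

# Figures as quoted in the text (rounded); the names are illustrative only.
BRAIN = {"neurons": 100e9, "synapses": 1e15, "watts": 20.0}
IBM_TARGET = {"neurons": 10e9, "synapses": 100e12, "watts": 1_000.0}
ROADRUNNER_WATTS = 3e6  # "roughly 3 megawatts"


def watts_per(system: dict, unit: str) -> float:
    """Average power per neuron or per synapse, in watts."""
    return system["watts"] / system[unit]


def summarize() -> dict:
    """Return per-neuron power (in nanowatts) and the key ratios."""
    return {
        "brain_nw_per_neuron": watts_per(BRAIN, "neurons") * 1e9,
        "ibm_nw_per_neuron": watts_per(IBM_TARGET, "neurons") * 1e9,
        # How many times more power per neuron the target draws than the brain.
        "per_neuron_gap": watts_per(IBM_TARGET, "neurons") / watts_per(BRAIN, "neurons"),
        # A lower bound on the gap, because the brain has more than 1e15 synapses.
        "per_synapse_gap": watts_per(IBM_TARGET, "synapses") / watts_per(BRAIN, "synapses"),
        # How many kilowatt-class target systems Roadrunner's power draw would feed.
        "roadrunner_vs_target": ROADRUNNER_WATTS / IBM_TARGET["watts"],
    }


if __name__ == "__main__":
    for name, value in summarize().items():
        print(f"{name}: {value:,.1f}")
```

With these inputs, the brain works out to about 0.2 nanowatt per neuron versus 100 nanowatts for IBM’s target, a 500-fold gap per neuron (and at least 500-fold per synapse), while the target’s kilowatt is about 1/3000 of Roadrunner’s draw.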

“This is a major initiative to move beyond the von Neumann paradigm that has been ruling computer architecture for more than half a century,” said Modha in a press release. “These chips are another significant step in the evolution of computers from calculators to learning systems,” he said.

Willie Jones is an associate editor at IEEE Spectrum. In addition to editing and planning daily coverage, he manages several of Spectrum's newsletters and contributes regularly to the monthly Big Picture section that appears in the print edition.