The Challenger Disaster: A Case of Subjective Engineering

From the archives: NASA’s resistance to probabilistic risk analysis contributed to the Challenger disaster

Editor’s Note: Today is the 30th anniversary of the loss of the space shuttle Challenger, which was destroyed 73 seconds into its flight, killing all onboard. To mark the anniversary, IEEE Spectrum is republishing this seminal article, which first appeared in June 1989 as part of a special report on risk. The article has been widely cited both in histories of the space program and in analyses of engineering risk management.

“Statistics don’t count for anything,” declared Will Willoughby, the National Aeronautics and Space Administration’s former head of reliability and safety during the Apollo moon landing program. “They have no place in engineering anywhere.” Now director of reliability management and quality assurance for the U.S. Navy, Washington, D.C., he still holds that risk is minimized not by statistical test programs, but by “attention taken in design, where it belongs.” His design-oriented view prevailed in NASA in the 1970s, when the space shuttle was designed and built by many of the engineers who had worked on the Apollo program.

“The real value of probabilistic risk analysis is in understanding the system and its vulnerabilities,” said Benjamin Buchbinder, manager of NASA’s two-year-old risk management program. He maintains that probabilistic risk analysis can go beyond design-oriented qualitative techniques in looking at the interactions of subsystems, ascertaining the effects of human activity and environmental conditions, and detecting common-cause failures.

NASA started experimenting with this program in response to the Jan. 28, 1986, Challenger accident that killed seven astronauts. The program’s goals are to establish a policy on risk management and to conduct risk assessments independent of normal engineering analyses. But success is slow because of past official policy that favored “engineering judgment” over “probability numbers,” resulting in NASA’s failure to collect the type of statistical test and flight data useful for quantitative risk assessment.

This Catch-22 (the agency lacks appropriate statistical data because it did not believe in the technique requiring the data, so it did not gather the relevant data) is one example of how an organization’s underlying culture and explicit policy can affect the overall reliability of the projects it undertakes.

External forces such as politics further shape an organization’s response. Whereas the Apollo program was widely supported by the President and the U.S. Congress and had all the money it needed, the shuttle program was strongly criticized and underbudgeted from the beginning. Political pressures, coupled with the lack of hard numerical data, led to differences of more than three orders of magnitude in the few quantitative estimates of a shuttle launch failure that NASA was required by law to conduct.

Some observers still worry that, despite NASA’s late adoption of quantitative risk assessment, its internal culture and its fear of political opposition may be pushing it to repeat dangerous errors of the shuttle program in the new space station program.

Basic Facts

System: National Space Transportation System (NSTS)—the space shuttle

Risk assessments conducted during design and operation: preliminary hazards analysis; failure modes and effects analysis with critical items list; various safety assessments, all qualitative at the system level, but with quantitative analyses conducted for specific subsystems.

Worst failure: In the January 1986 Challenger accident, primary and secondary O-rings in the field joint of the right solid-fuel rocket booster were burnt through by hot gases.

Consequences: loss of $3 billion vehicle and crew.

Predictability: long history of erosion in O-rings, not envisaged in the original design.

Causes: inadequate original design (booster joint rotated farther open than intended); faulty judgment (managers decided to launch despite record low temperatures and ice on launch pad); possible unanticipated external events (severe wind shear may have been a contributing factor).

Lessons learned: in design, to use probabilistic risk assessment more in evaluating and assigning priorities to risks; in operation, to establish certain launch commit criteria that cannot be waived by anyone.

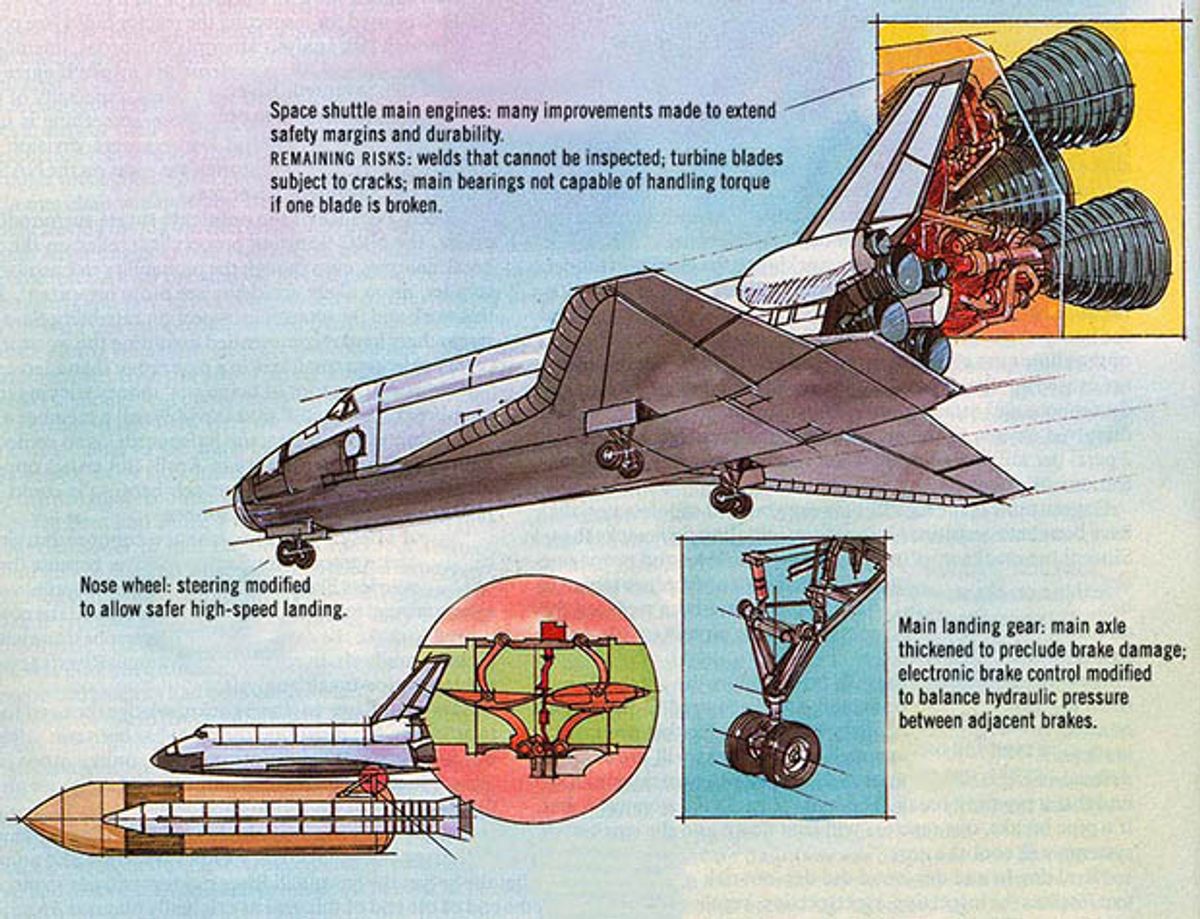

Other outcomes: redesign of booster joint and other shuttle subsystems that also had a high level of risk or unanticipated failures; reassessment of critical items.

NASA’s preference for a design approach to reliability to the exclusion of quantitative risk analysis was strengthened by a negative early brush with the field. According to Haggai Cohen, who during the Apollo days was NASA’s deputy chief engineer, NASA contracted with General Electric Co. in Daytona Beach, Fla., to do a “full numerical PRA [probabilistic risk assessment]” to assess the likelihood of success in landing a man on the moon and returning him safely to earth. The GE study indicated the chance of success was “less than 5 percent.” When the NASA Administrator was presented with the results, he felt that if made public, “the numbers could do irreparable harm, and he disbanded the effort,” Cohen said. “We studiously stayed away from [numerical risk assessment] as a result.”

“That’s when we threw all that garbage out and got down to work,” Willoughby agreed. The study’s proponents, he said, contended “‘you build up confidence by statistical test programs.’ We said, ‘No, go fly a kite, we’ll build up confidence by design.’ Testing gives you only a snapshot under particular conditions. Reality may not give you the same set of circumstances, and you can be lulled into a false sense of security or insecurity.”

As a result, NASA adopted qualitative failure modes and effects analysis (FMEA) as its principal means of identifying design features whose worst-case failure could lead to a catastrophe. The worst cases were ranked as Criticality 1 if they threatened the life of the crew members or the existence of the vehicle; Criticality 2 if they threatened the mission; and Criticality 3 for anything less. An R designated a redundant system [see “How NASA Determined Shuttle Risk”]. Quantitative techniques were limited to calculating the probability of the occurrence of an individual failure mode “if we had to present a rationale on how to live with a single failure point,” Cohen explained.
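The ranking rule described above can be sketched as a small classifier. This is only an illustration of the three-level scheme as the article states it: the `FailureMode` record, its field names, and the choice to append the R suffix at every level are assumptions made for the example, not NASA's actual worksheet format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FailureMode:
    """Hypothetical record for one worst-case failure mode."""
    name: str
    threatens_crew_or_vehicle: bool
    threatens_mission: bool
    redundant: bool = False  # True if a redundant system backs up the item


def criticality(mode: FailureMode) -> str:
    """Rank a failure mode using the three levels described in the text."""
    if mode.threatens_crew_or_vehicle:
        rank = "1"
    elif mode.threatens_mission:
        rank = "2"
    else:
        rank = "3"
    # Assumption: the R suffix is simply appended whenever redundancy exists.
    return rank + ("R" if mode.redundant else "")


if __name__ == "__main__":
    # Placeholder items, not entries from any real critical items list.
    examples = [
        FailureMode("item A", threatens_crew_or_vehicle=True, threatens_mission=True),
        FailureMode("item B", threatens_crew_or_vehicle=True, threatens_mission=True, redundant=True),
        FailureMode("item C", threatens_crew_or_vehicle=False, threatens_mission=True),
        FailureMode("item D", threatens_crew_or_vehicle=False, threatens_mission=False),
    ]
    for mode in examples:
        print(mode.name, "-> Criticality", criticality(mode))
```

Note that the ranking depends only on the severity of the worst case. Nothing in it expresses how likely the failure is, which is the gap the rest of the article discusses.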

The politics of risk

By the late 1960s and early 1970s the space shuttle was being portrayed as a reusable airliner capable of carrying 15-ton payloads into orbit and 5-ton payloads back to earth. Shuttle astronauts would wear shirtsleeves during takeoff and landing instead of the bulky spacesuits of the Gemini and Apollo days. And eventually the shuttle would carry just plain folks: non-astronaut scientists, politicians, schoolteachers, and journalists.

NASA documents show that the airline vision also applied to risk. For example, in the 1969 NASA Space Shuttle Task Group Report, the authors wrote: “It is desirable that the vehicle configuration provide for crew/passenger safety in a manner and to the degree as provided in present day commercial jet aircraft.”

Statistically an airliner is the least risky form of transportation, which implies high reliability. And in the early 1970s, when President Richard M. Nixon, Congress, and the Office of Management and Budget (OMB) were all skeptical of the shuttle, proving high reliability was crucial to the program’s continued funding.

OMB even directed NASA to hire an outside contractor to do an economic analysis of how the shuttle compared with other launch systems for cost-effectiveness, observed John M. Logsdon, director of the graduate program in science, technology, and public policy at George Washington University in Washington, D.C. “No previous space programme had been subject to independent professional economic evaluation,” Logsdon wrote in the journal Space Policy in May 1986. “It forced NASA into a belief that it had to propose a Shuttle that could launch all foreseeable payloads ... [and] would be less expensive than alternative launch systems” and that, indeed, would supplant all expendable rockets. It also was politically necessary to show that the shuttle would be cheap and routine, rather than large and risky, with respect to both technology and cost, Logsdon pointed out.

Amid such political unpopularity, which threatened the program’s very existence, “some NASA people began to confuse desire with reality,” said Adelbert Tischler, retired NASA director of launch vehicles and propulsion. “One result was to assess risk in terms of what was thought acceptable without regard for verifying the assessment.” He added: “Note that under such circumstances real risk management is shut out.”

‘Disregarding data’

By the early 1980s many figures were being quoted for the overall risk to the shuttle, with estimates of a catastrophic failure ranging from less than 1 chance in 100 to 1 chance in 100 000. “The higher figures [1 in 100] come from working engineers, and the very low figures [1 in 100 000] from management,” wrote physicist Richard P. Feynman in his appendix “Personal Observations on Reliability of Shuttle” to the 1986 Report of the Presidential Commission on the Space Shuttle Challenger Accident.

The probabilities originated in a series of quantitative risk assessments NASA was required to conduct by the Interagency Nuclear Safety Review Panel (INSRP), in anticipation of the launch of the Galileo spacecraft on its voyage to Jupiter, originally scheduled for the early 1980s. Galileo was powered by a plutonium-fueled radioisotope thermoelectric generator, and Presidential Directive/NSC-25 ruled that either the U.S. President or the director of the office of science and technology policy must examine the safety of any launch of nuclear material before approving it. The INSRP (which consisted of representatives of NASA as the launching agency, the Department of Energy, which manages nuclear devices, and the Department of Defense, whose Air Force manages range safety at launch) was charged with ascertaining the quantitative risks of a catastrophic launch dispersing the radioactive poison into the atmosphere. There were a number of studies because the upper stage for boosting Galileo into interplanetary space was reconfigured several times.

The first study was conducted by the J. H. Wiggins Co. of Redondo Beach, Calif., and published in three volumes between 1979 and 1982. It put the overall risk of losing a shuttle with its spacecraft payload during launch at between 1 chance in 1000 and 1 in 10 000. The greatest risk was posed by the solid-fuel rocket boosters (SRBs). The Wiggins author noted that the history of other solid-fuel rockets showed them as undergoing catastrophic launches somewhere between 1 time in 59 and 1 time in 34, but that the study’s contract overseers, the Space Shuttle Range Safety Ad Hoc Committee, made an “engineering judgment” and “decided that a reduction in the failure probability estimate was warranted for the Space Shuttle SRBs” because “the historical data includes motors developed 10 to 20 years ago.” The Ad Hoc Committee therefore “decided to assume a failure probability of 1 × 10⁻³ for each SRB.” In addition, the Wiggins author pointed out, “it was decided by the Ad-Hoc Committee that a second probability should be considered… which is one order of magnitude less” or 1 in 10 000, “justified due to unique improvements made in the design and manufacturing process used for these motors to achieve man rating.”

In 1983 a second study was conducted by Teledyne Energy Systems Inc., Timonium, Md., for the Air Force Weapons Laboratory at Kirtland Air Force Base, N.M. It described the Wiggins analysis as consisting of “an interesting presentation of launch data from several Navy, Air Force, and NASA missile programs and the disregarding of that data and arbitrary assignment of risk levels apparently per sponsor direction” with “no quantitative justification at all.” After reanalyzing the data, the Teledyne authors concluded that the boosters’ track record “suggest[s] a failure rate of around one-in-a-hundred.”
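To see how far apart these booster figures are, the sketch below converts each one into a per-launch probability that at least one of the shuttle's two solid-fuel boosters fails. It assumes every quoted figure is a per-booster rate and that the two boosters fail independently; neither assumption comes from the studies themselves, and the labels are just shorthand for the numbers quoted above.

```python
def launch_failure_probability(per_booster: float, boosters: int = 2) -> float:
    """Chance that at least one booster fails, assuming independent failures."""
    return 1.0 - (1.0 - per_booster) ** boosters


# Per-booster figures quoted in the text (treated as per-booster by assumption).
estimates = {
    "Historical record, worst case": 1 / 34,
    "Historical record, best case": 1 / 59,
    "Teledyne reanalysis": 1 / 100,
    "Ad Hoc Committee, first value": 1e-3,
    "Ad Hoc Committee, second value": 1e-4,
}

if __name__ == "__main__":
    for label, p in estimates.items():
        per_launch = launch_failure_probability(p)
        print(f"{label:<32} 1 in {1 / p:>6.0f} per booster -> {per_launch:.5f} per launch")

    spread = max(estimates.values()) / min(estimates.values())
    print(f"Ratio of highest to lowest per-booster figure: about {spread:.0f}")
```

The point of the comparison is the spread, not any single value: the committee's assumed figures sit one to two orders of magnitude below the historical record they were derived from.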

When risk analysis isn’t

NASA conducted its own internal safety analysis for Galileo, which was published in 1985 by the Johnson Space Center. The Johnson authors went through failure mode worksheets assigning probability levels. A fracture in the solid-rocket motor case or case joints (similar to the accident that destroyed Challenger) was assigned a probability level of 2, which a separate table defined as corresponding to a chance of 1 in 100 000 and described as “remote,” or “so unlikely, it can be assumed that this hazard will not be experienced.”

The Johnson authors’ value of 1 in 100 000 implied, as Feynman spelled out, that “one could put a Shuttle up each day for 300 years expecting to lose only one.” Yet even after the Challenger accident, NASA’s chief engineer Milton Silveira, in a hearing on the Galileo thermoelectric generator held March 4, 1986, before the U.S. House of Representatives Committee on Science and Technology, said: “We think that using a number like 10 to the minus 3, as suggested, is probably a little pessimistic.” In his view, the actual risk “would be 10 to the minus 5, and that is our design objective.” When asked how the number was deduced, Silveira replied, “We came to those probabilities based on engineering judgment in review of the design rather than taking a statistical data base, because we didn’t feel we had that.”
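Feynman's remark is easy to check with a few lines of arithmetic. The sketch below assumes one launch per day, 365 days per year, and a constant, independent failure probability on every flight; the function names are illustrative only.

```python
DAYS_PER_YEAR = 365  # leap days ignored for this rough check


def flights_flown(years: int, launches_per_day: int = 1) -> int:
    """Number of flights in a daily-launch campaign of the given length."""
    return years * DAYS_PER_YEAR * launches_per_day


def expected_losses(p_loss: float, flights: int) -> float:
    """Expected number of vehicles lost over the campaign."""
    return p_loss * flights


def prob_at_least_one_loss(p_loss: float, flights: int) -> float:
    """Chance of losing at least one vehicle, assuming independent flights."""
    return 1.0 - (1.0 - p_loss) ** flights


if __name__ == "__main__":
    n = flights_flown(300)
    for label, p in [("management figure, 1 in 100 000", 1e-5),
                     ("engineers' figure, 1 in 100", 1e-2)]:
        print(f"{label}: {n} flights, "
              f"expected losses {expected_losses(p, n):.1f}, "
              f"P(at least one loss) {prob_at_least_one_loss(p, n):.3f}")
```

At 1 in 100 000 the expected number of losses over 300 years of daily flights comes out close to one, as Feynman said. At the working engineers' figure of 1 in 100, the same campaign would be expected to lose more than a thousand vehicles.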

After the Challenger accident, the 1986 presidential commission learned the O-rings in the field joints of the shuttle’s solid-fuel rocket boosters had a history of damage correlated with low air temperature at launch. So the commission repeatedly asked the witnesses it called to hearings why systematic temperature-correlation data had been unavailable before launch.

NASA’s “management methodology” for collection of data and determination of risk was laid out in NASA’s 1985 safety analysis for Galileo. The Johnson Space Center authors explained: “Early in the program it was decided not to use reliability (or probability) numbers in the design of the Shuttle” because the magnitude of testing required to statistically verify the numerical predictions “is not considered practical.” Furthermore, they noted, “experience has shown that with the safety, reliability, and quality assurance requirements imposed on manned spaceflight contractors, standard failure rate data are pessimistic.”

“In lieu of using probability numbers, the NSTS [National Space Transportation System] relies on engineering judgment using rigid and well-documented design, configuration, safety, reliability, and quality assurance controls,” the Johnson authors continued. This outlook determined the data NASA managers required engineers to collect. For example, no “lapsed-time indicators” were kept on shuttle components, subsystems, and systems, although “a fairly accurate estimate of time and/or cycles could be derived,” the Johnson authors added.

One reason was economic. According to George Rodney, NASA’s associate administrator of safety, reliability, maintainability and quality assurance, it is not hard to get time and cycle data, “but it’s expensive and a big bookkeeping problem.”

Another reason was NASA’s “normal program development: you don’t continue to take data; you certify the components and get on with it,” said Rodney’s deputy, James Ehl. “People think that since we’ve flown 28 times, then we have 28 times as much data, but we don’t. We have maybe three or four tests from the first development flights.”

In addition, Rodney noted, “For everyone in NASA that’s a big PRA [probabilistic risk assessment] seller, I can find you 10 that are equally convinced that PRA is oversold… [They] are so dubious of its importance that they won’t convince themselves that the end product is worthwhile.”

Risk and the organizational culture

One reason NASA has so strongly resisted probabilistic risk analysis may be the fact that “PRA runs against all traditions of engineering, where you handle reliability by safety factors,” said Elisabeth Paté-Cornell, associate professor in the department of industrial engineering and engineering management at Stanford University in California, who is now studying organizational factors and risk assessment in NASA. In addition, with NASA’s strong pride in design, PRA may be “perceived as an insult to their capabilities, that the system they’ve designed is not 100 percent perfect and absolutely safe,” she added. Thus, the character of an organization influences the reliability and failure of the systems it builds because its structure, policy, and culture determine the priorities, incentives, and communication paths for the engineers and managers doing the work, she said.

“Part of the problem is getting the engineers to understand that they are using subjective methods for determining risk, because they don’t like to admit that,” said Ray A. Williamson, senior associate at the U.S. Congress Office of Technology Assessment in Washington, D.C. “Yet they talk in terms of sounding objective and fool themselves into thinking they are being objective.”

“It’s not that simple,” Buchbinder said. “A probabilistic way of thinking is not something that most people are attuned to. We don’t know what will happen precisely each time. We can only say what is likely to happen a certain percentage of the time.” Unless engineers and managers become familiar with probability theory, they don’t know what to make of “large uncertainties that represent the state of current knowledge,” he said. “And that is no comfort to the poor decision-maker who wants a simple answer to the question, ‘Is this system safe enough?’”

As an example of how the “mindset” in the agency is now changing in favor of “a willingness to explore other things,” Buchbinder cited the new risk management program, the workshops it has been holding to train engineers and others in quantitative risk assessment techniques, and a new management instruction policy that requires NASA to “provide disciplined and documented management of risks throughout program life cycles.”

Hidden risks to the space station

NASA is now at work on its big project for the 1990s: a space station, projected to cost $30 billion and to be assembled in orbit, 220 nautical miles above the earth, from modules carried aloft in some two dozen shuttle launches. A National Research Council committee evaluated the space station program and concluded in a study in September 1987: “If the probability of damaging an Orbiter beyond repair on any single Shuttle flight is 1 percent (the demonstrated rate is now one loss in 25 launches, or 4 percent), the probability of losing an Orbiter before [the space station’s first phase] is complete is about 60 percent.”
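The committee's reasoning rests on how a small per-flight risk compounds over many flights. The sketch below shows that compounding, assuming a constant loss probability and independent flights. The passage quoted here does not say how many flights the committee counted before the first phase is complete, so the code inverts the formula to show what flight count a given cumulative risk would imply rather than guessing that number.

```python
import math


def prob_at_least_one_loss(p_loss: float, flights: int) -> float:
    """Cumulative chance of losing an orbiter over a series of flights."""
    return 1.0 - (1.0 - p_loss) ** flights


def flights_for_cumulative_risk(p_loss: float, target: float) -> int:
    """Smallest number of flights whose cumulative risk reaches the target."""
    return math.ceil(math.log(1.0 - target) / math.log(1.0 - p_loss))


if __name__ == "__main__":
    for p in (0.01, 0.04):  # the two per-flight rates named in the quotation
        print(f"per-flight risk {p:.0%}:")
        print(f"  over two dozen flights: {prob_at_least_one_loss(p, 24):.1%}")
        print(f"  flights needed to reach 60%: {flights_for_cumulative_risk(p, 0.60)}")
```

Under these assumptions a 1 percent per-flight risk reaches a 60 percent cumulative risk only after roughly 90 flights, far more than the two dozen assembly launches alone, while a 4 percent rate gets there in about two dozen.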

The probability is within the right order of magnitude, to judge by the latest INSRP-mandated study completed in December for Buchbinder’s group in NASA by Planning Research Corp., McLean, Va. The study, which reevaluates the risk of the long-delayed launch of the Galileo spacecraft on its voyage to Jupiter, now scheduled for later this year, estimates the chance of losing a shuttle from launch through payload deployment at 1 in 78, or between 1 and 2 percent, with an uncertainty of a factor of 2.
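As a rough illustration of what “1 in 78 with an uncertainty of a factor of 2” means in practice, the sketch below reads the factor of 2 as a multiplicative band on either side of the central estimate (an assumption; the study's own definition is not given here) and applies each value to the two dozen station-assembly launches mentioned earlier, again assuming independent flights.

```python
def risk_band(one_in: float, factor: float) -> tuple[float, float, float]:
    """Return (low, central, high) per-flight probabilities for a '1 in N' estimate."""
    central = 1.0 / one_in
    return central / factor, central, central * factor


def prob_at_least_one_loss(p_loss: float, flights: int) -> float:
    """Cumulative chance of at least one loss, assuming independent flights."""
    return 1.0 - (1.0 - p_loss) ** flights


if __name__ == "__main__":
    low, central, high = risk_band(78, 2)
    for label, p in (("low", low), ("central", central), ("high", high)):
        print(f"{label:>7}: 1 in {1 / p:.0f} per flight "
              f"({p:.2%}), {prob_at_least_one_loss(p, 24):.0%} over 24 flights")
```

Even the optimistic end of the band, 1 in 156, is hundreds of times higher than the 1 in 100 000 figure that management had been quoting.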

Those figures frighten some observers because of the dire consequences of losing part of the space station. “The space station has no redundancy, no backup parts,” said Jerry Grey, director of science and technology policy for the American Institute of Aeronautics and Astronautics in Washington, D.C.

The worst case would be loss of the shuttle carrying the logistics module, which is needed for reboost, Grey pointed out. The space station’s orbit will subject it to atmospheric drag such that, if not periodically boosted higher, it will drift downward and within eight months plunge back to earth and be destroyed, as was the Skylab space station in July 1979. “If you lost the shuttle with the logistics module, you don’t have a spare, and you can’t build one in eight months,” Grey said, “so you may lose not only that one payload, but also whatever was put up there earlier.”

Why are there no backup parts? “Politically the space station is under fire [from the U.S. Congress] all the time because NASA hasn’t done an adequate job of justifying it,” said Grey. “NASA is apprehensive that Congress might cancel the entire program” and so is trying to trim costs as much as possible.

Grey estimated that spares of the crucial modules might add another 10 percent to the space station’s cost. “But NASA is not willing to go to bat for that extra because they’re unwilling to take the political risk,” he said. He fears a replay of NASA’s response to the political negativism over the shuttle in the 1970s.

The NRC space station committee warned: “It is dangerous and misleading to assume there will be no losses and thus fail to plan for such events.”

“Let’s face it, space is a risky business,” commented former Apollo safety officer Cohen. “I always considered every launch a barely controlled explosion.”

“The real problem is: whatever the numbers are, acceptance of that risk and planning for it is what needs to be done,” Grey said. He fears that “NASA doesn’t do that yet.”

In addition to the sources named in the text, the authors would like to acknowledge the information and insights afforded by the following: E. William Colglazier (director of the Energy, Environment and Resources Center at the University of Tennessee, Knoxville) and Robert K. Weatherwax (president of Sierra Energy & Risk Assessment Inc., Roseville, Calif.), the two authors of the 1983 Teledyne/Air Force Weapons Laboratory study; Larry Crawford, director of reliability and trends analysis at NASA headquarters in Washington, D.C.; Joseph R. Fragola, vice president, Science Applications International Corp., New York City; Byron Peter Leonard, president, L Systems Inc., El Segundo, Calif.; George E. Mueller, former NASA associate administrator for manned spaceflight; and Marcia Smith, specialist in aerospace policy, Congressional Research Service, Washington, D.C.

This article first appeared in print in June 1989 as part of the special report “Managing Risk In Large Complex Systems” under the title “The space shuttle: a case of subjective engineering.”

How NASA Determined Shuttle Risk

At the start of the space shuttle’s design, the National Aeronautics and Space Administration defined risk as “the chance (qualitative) of loss of personnel capability, loss of system, or damage to or loss of equipment or property.” NASA accordingly relied on several techniques for determining reliability and potential design problems, concluded the U.S. National Research Council’s Committee on Shuttle Criticality Review and Hazard Analysis Audit in its January 1988 report Post-Challenger Evaluation of Space Shuttle Risk Assessment and Management. But, the report noted, the analyses did “not address the relative probabilities of a particular hazardous condition arising from failure modes, human errors, or external situations,” so did not measure risk.

A failure modes and effects analysis (FMEA) was the heart of NASA’s effort to ensure reliability, the NRC report noted. An FMEA, carried out by the contractor building each shuttle element or subsystem, was performed on all flight hardware and on ground support equipment that interfaced with flight hardware. Its chief purpose was to identify hardware critical to the performance and safety of the mission.

Items that did not meet certain design, reliability, and safety requirements specified by NASA’s top management and whose failure could threaten the loss of crew, vehicle, or mission made up a critical items list (CIL).

Although the FMEA/CIL was first viewed as a design tool, NASA now uses it during operations and management as well, to analyze problems, assess whether corrective actions are effective, identify where and when inspection and maintenance are needed, and reveal trends in failures.

Second, NASA conducted hazards analyses, performed jointly by shuttle engineers and by NASA’s safety and operations organizations. They made use of the FMEA/CIL, various design reviews, safety analyses, and other studies. They considered not only the failure modes identified in the FMEA, but also other threats posed by the mission activities, crew-machine interfaces, and the environment. After hazards and their causes were identified, NASA engineers and managers had to make one of three decisions: to eliminate the cause of each hazard, to control the cause if it could not be eliminated, or to accept the hazards that could not be controlled.

NASA also conducted an element interface functional analysis (EIFA) to look at the shuttle more nearly as a complete system. Both the FMEA and the hazards analyses concentrated only on individual elements of the shuttle: the space shuttle’s main engines in the orbiter, the rest of the orbiter, the external tank, and the solid-fuel rocket boosters. The EIFA assessed hazards at the mating of the elements.

Also to examine the shuttle as a system, NASA conducted a one-time critical functions assessment in 1978, which searched for multiple and cascading failures. The information from all these studies fed one way into an overall mission safety assessment.

The NRC committee had several criticisms. In practice, the FMEA was the sole basis for some engineering change decisions and all engineering waivers and rationales for retaining certain high-risk design features. However, the NRC report noted, hazard analyses for some important, high-risk subsystems “were not updated for years at a time even though design changes had occurred or dangerous failures were experienced.” On one procedural flow chart, the report noted, “the ‘Hazard Analysis As Required’ is a dead-end box with inputs but no output with respect to waiver approval decisions.”

The NRC committee concluded that “the isolation of the hazard analysis within NASA’s risk assessment and management process to date can be seen as reflecting the past weakness of the entire safety organization.” (T.E.B. and K.E.)