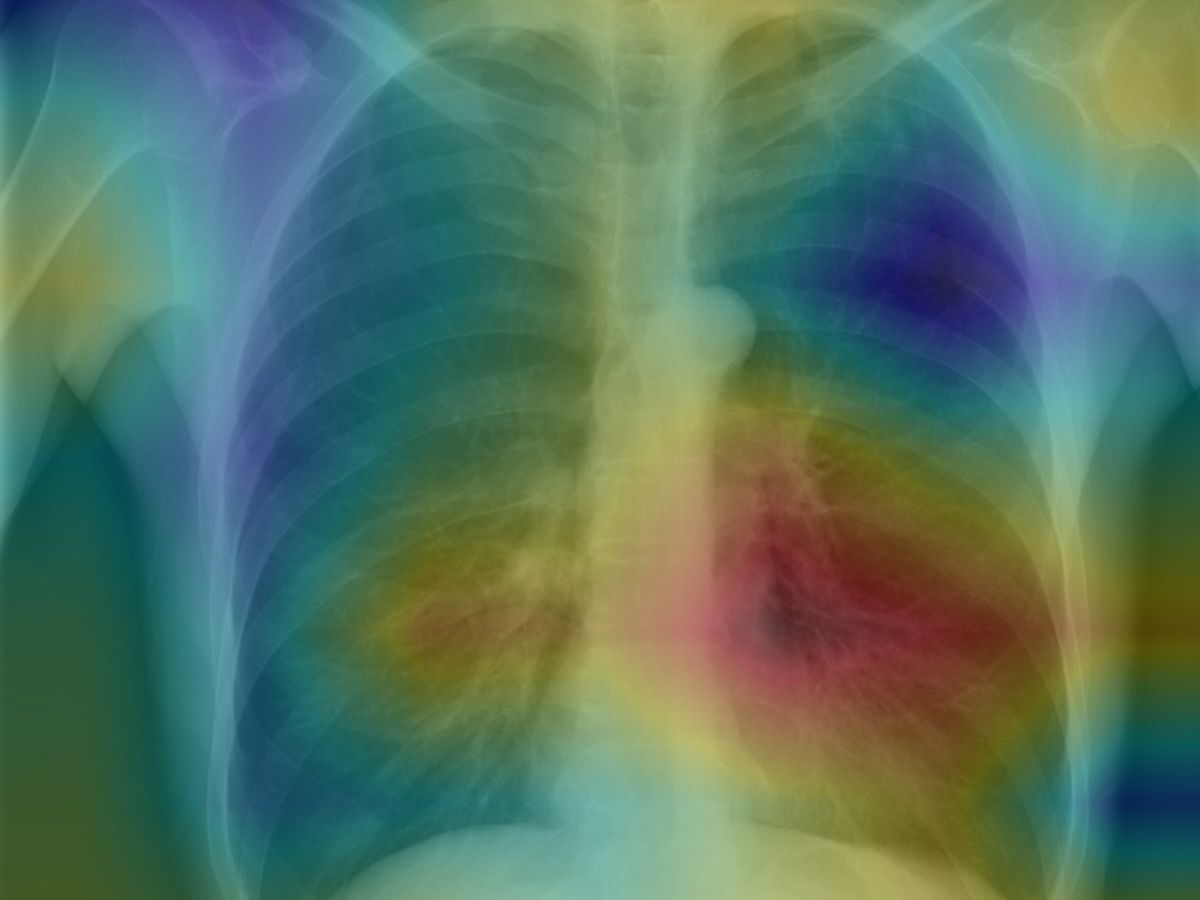

Stanford researchers have developed a machine-learning algorithm that can diagnose pneumonia from a chest X-ray better than a human radiologist can. And it learned how to do so in just about a month.

The Machine Learning Group, led by Stanford adjunct professor Andrew Ng, was inspired by a data set released by the National Institutes of Health on 26 September. The data set contains 112,120 chest X-ray images labeled with 14 different possible diagnoses, and the NIH released it along with some preliminary algorithms. The researchers asked four Stanford radiologists to annotate 420 of the images for possible indications of pneumonia. They selected that disease because, according to a press release, it is particularly hard to spot on X-rays and brings 1 million people to U.S. hospitals each year.

Within a week, the Stanford team had developed an algorithm, called CheXnet, capable of spotting 10 of the 14 pathologies in the original data set more accurately than previous algorithms. After about a month of training, it outperformed them on all 14, the group reported in a paper released this week through the Cornell University Library. And CheXnet consistently did better than the four Stanford radiologists at diagnosing pneumonia.

The researchers looked at CheXnet’s performance in terms of sensitivity (whether it correctly identified existing cases of pneumonia) and how well it avoided false positives. While some of the four human radiologists were better than others, CheXnet was better than all of them [See graph below].
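Sensitivity and false-positive avoidance pull in different directions, so it helps to see how they are computed. The sketch below is illustrative only: the function names and the toy labels are hypothetical, and the article does not say which summary statistic produced the graph. It derives sensitivity, specificity (the usual measure of avoiding false positives), precision, and the F1 score, which combines precision and sensitivity, from yes/no pneumonia calls.

```python
from dataclasses import dataclass


@dataclass
class Confusion:
    """Counts from comparing binary pneumonia calls against ground truth."""
    tp: int  # pneumonia present, flagged
    fn: int  # pneumonia present, missed
    fp: int  # pneumonia absent, flagged anyway (false positive)
    tn: int  # pneumonia absent, correctly cleared


def confusion(truth: list[bool], predicted: list[bool]) -> Confusion:
    """Tally the four outcomes for paired ground-truth and predicted labels."""
    if len(truth) != len(predicted):
        raise ValueError("truth and predicted must have the same length")
    pairs = list(zip(truth, predicted))
    return Confusion(
        tp=sum(t and p for t, p in pairs),
        fn=sum(t and not p for t, p in pairs),
        fp=sum(p and not t for t, p in pairs),
        tn=sum(not t and not p for t, p in pairs),
    )


def _ratio(num: int, den: int) -> float:
    # Treat an empty denominator as 0.0 rather than raising ZeroDivisionError.
    return num / den if den else 0.0


def sensitivity(c: Confusion) -> float:
    """Share of real pneumonia cases that were caught (also called recall)."""
    return _ratio(c.tp, c.tp + c.fn)


def specificity(c: Confusion) -> float:
    """Share of pneumonia-free images correctly left unflagged."""
    return _ratio(c.tn, c.tn + c.fp)


def precision(c: Confusion) -> float:
    """Share of flagged images that really showed pneumonia."""
    return _ratio(c.tp, c.tp + c.fp)


def f1(c: Confusion) -> float:
    """Harmonic mean of precision and sensitivity."""
    p, r = precision(c), sensitivity(c)
    return 2 * p * r / (p + r) if (p + r) else 0.0


# Hypothetical reader: three of eight images truly show pneumonia.
truth = [True, True, True, False, False, False, False, False]
calls = [True, True, False, True, False, False, False, False]
c = confusion(truth, calls)
print(c, sensitivity(c), specificity(c), f1(c))
```

Here the reader catches two of three pneumonia cases (sensitivity 2/3) and wrongly flags one of five healthy images (specificity 0.8). A reader can raise sensitivity simply by flagging more images, which is why a fair comparison also has to penalize false positives.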

The Stanford approach also creates a heat map of each chest X-ray, with colors indicating the areas of the image most likely to represent pneumonia; the researchers believe this tool could greatly assist human radiologists.
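The article does not explain how the heat map is produced. One widely used technique for this kind of map is class activation mapping (CAM), which weights a network's final convolutional feature maps by the classifier weights for one class (here, pneumonia) and sums them. The pure-Python sketch below assumes that approach; the function name and the tiny 2 x 2 maps are hypothetical stand-ins for a real network's outputs.

```python
def class_activation_map(
    feature_maps: list[list[list[float]]],
    class_weights: list[float],
) -> list[list[float]]:
    """Weighted sum of K feature maps (each H x W), rescaled to [0, 1].

    Assumes a CAM-style model: feature_maps[k] is the k-th channel of the
    last convolutional layer, class_weights[k] its weight for one class.
    """
    if len(feature_maps) != len(class_weights):
        raise ValueError("need one weight per feature map")
    height, width = len(feature_maps[0]), len(feature_maps[0][0])
    cam = [[0.0] * width for _ in range(height)]
    # Accumulate each channel's contribution, scaled by its class weight.
    for fmap, weight in zip(feature_maps, class_weights):
        for i in range(height):
            for j in range(width):
                cam[i][j] += weight * fmap[i][j]
    # Min-max normalize so the hottest region is 1.0 and the coolest 0.0.
    flat = [v for row in cam for v in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:
        return [[0.0] * width for _ in range(height)]
    return [[(v - lo) / (hi - lo) for v in row] for row in cam]


# Two hypothetical channels: one fires top-left, the other bottom-right.
maps = [[[1.0, 0.0], [0.0, 0.0]], [[0.0, 0.0], [0.0, 1.0]]]
heat = class_activation_map(maps, [2.0, 0.5])
print(heat)  # [[1.0, 0.0], [0.0, 0.25]]
```

In a real system the map is much coarser than the X-ray, so it would be upsampled to the image's resolution and overlaid in color; that step is omitted here.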

I couldn’t be more thrilled—and hopeful that all the radiologists at Stanford will embrace this technology immediately, because I know firsthand how beneficial it could be.

Last December, my then-18-year-old son went to the Stanford emergency room with an extremely high fever and cough. He had a chest X-ray for suspected pneumonia; it was read as negative, so he was given an I.V. for dehydration and medication for his fever, and was sent home.

A week later, he was back in the ER in the middle of the night, this time disoriented, with an even higher fever that wasn’t responding to medication. Again, a chest X-ray was read as negative, and he was tested for every disease one could imagine. But all he was given were fluids, and eventually he was released with no diagnosis.

Two days after that, we got a call from radiology: a routine review of X-rays from the weekend had changed the medical opinion to pneumonia, a diagnosis that had been missed twice. Antibiotics started bringing his fever down within 24 hours.

Next time I bring a kid to the Stanford ER, I’m asking for a CheXnet consult.

Tekla S. Perry is a senior editor at IEEE Spectrum. Based in Palo Alto, Calif., she's been covering the people, companies, and technology that make Silicon Valley a special place for more than 40 years. An IEEE member, she holds a bachelor's degree in journalism from Michigan State University.