A new process for managing the fast-access memory inside a CPU has led to as much as a twofold speedup and to energy-use reductions of up to 72 percent. According to its designers, realizing such stunning gains requires a big shift in what part of the computer controls this crucial memory: Right now that control is hard-wired into the CPU’s circuitry, but the substantial speedup came when the designers let the operating system handle things instead.

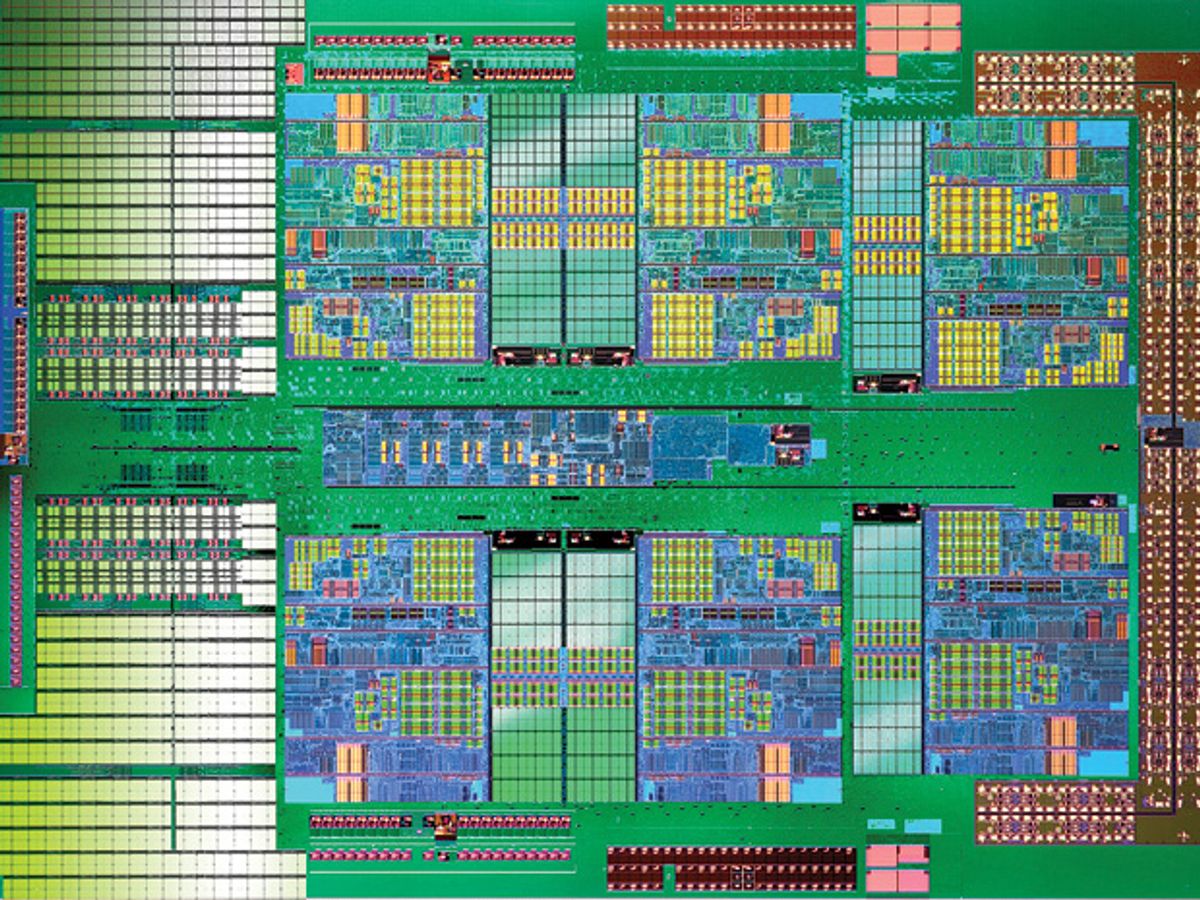

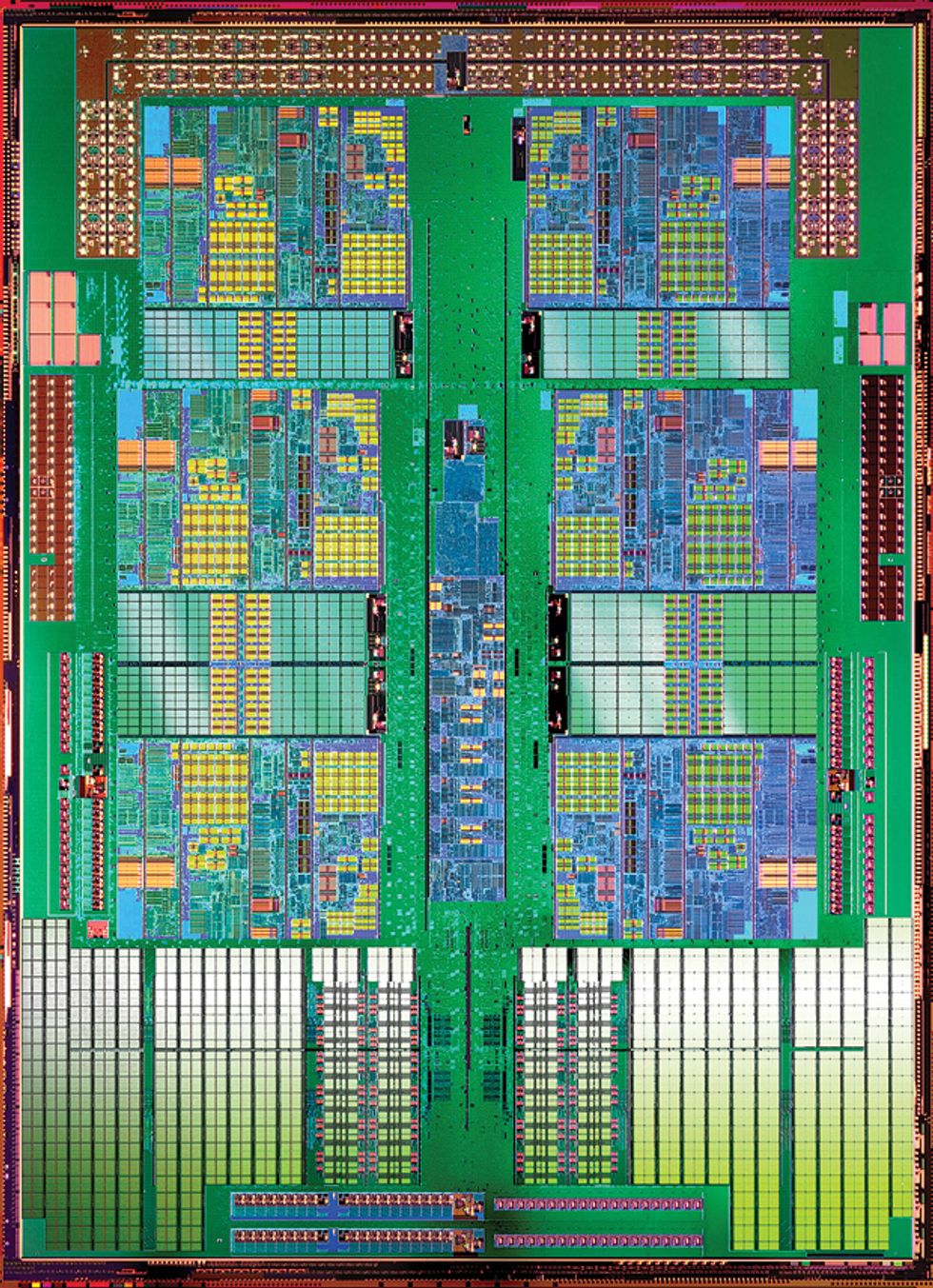

The CPU uses high-speed internal memory caches as a kind of digital staging area. Caches are a CPU’s workbench, whether they’re holding onto instructions a CPU may need soon or data it may need to crunch. And from smartphones to servers, nearly every CPU today manages the flow of bits in and out of its caches using algorithms built into its own circuits.

But, say two MIT researchers, as computers and portable devices accumulate more and more memory and CPU cores, it makes less and less sense to leave cache management entirely up to the CPU. Instead, they say, it might be better to let the operating system share the burden.

In itself, this idea is not completely new. Some of IBM’s Cell processors, as well as Sony’s PlayStation 3—which runs on Cell technology—allow their applications and OS kernels to fiddle with low-level CPU memory management. What’s new about the MIT technology, called Jigsaw, is its middle-ground approach, which enables software to configure some on-chip memory caches but without requiring so much control that programming becomes a memory-management nightmare.

“If you go back six or seven years, you’ll see that everybody was complaining that they launched the PlayStation 3 and nobody could program it well,” says Daniel Sanchez, an assistant professor at MIT’s Computer Science and Artificial Intelligence Laboratory and one of the inventors of Jigsaw.

Today, CPU hardware typically controls all the on-chip caches. So those caches must be designed to handle every conceivable job, from pure floating-point number crunching (which places a small burden on caches) to intensive searches and queries of a computer’s memory banks (which can stretch their limits). Moreover, CPUs have no higher-level knowledge of the kinds of jobs they’re doing. This means a self-contained numerical simulation with complex equations but little need for memory access would run with exactly the same cache resources as would a graph search, a memory-hogging hunt for relationships between stored data.

So Sanchez and his graduate student Nathan Beckmann thought, Why not let the OS trim the cache size for pure computation and swell its ranks for graph search?

The first step, they say, would be to give perhaps 1 percent of the CPU’s footprint to a simple piece of hardware that could monitor in real time the cache activity in each core. Hardware cache monitors would give Jigsaw the independent oversight it would need to play air traffic controller with the CPU’s caches.
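To make the monitoring idea concrete, here is a minimal software sketch of the kind of statistic such a monitor could collect: a "miss curve" that records how many misses a job would suffer at each possible cache size. It assumes a fully associative cache with least-recently-used (LRU) replacement and uses the classic stack-distance technique. The function name `miss_curve` and the toy traces are hypothetical, and nothing here is taken from the Jigsaw hardware design.

```python
def miss_curve(trace, max_lines):
    """Return a list where entry s is the miss count of an LRU cache with s lines.

    Illustrative assumption: a fully associative LRU cache, modeled in software.
    """
    stack = []                       # LRU stack, most recently used address first
    hits_at_depth = [0] * max_lines  # hits_at_depth[d]: hits found at stack depth d
    for addr in trace:
        if addr in stack:
            depth = stack.index(addr)
            # A cache with more than `depth` lines would have hit on this access.
            if depth < max_lines:
                hits_at_depth[depth] += 1
            stack.remove(addr)
        stack.insert(0, addr)

    misses = [len(trace)]            # with zero lines, every access misses
    for d in range(max_lines):
        misses.append(misses[-1] - hits_at_depth[d])
    return misses


# A trace that reuses a small working set benefits from more cache...
print(miss_curve([1, 2, 1, 2, 3, 1], 3))   # [6, 6, 4, 3]
# ...while a streaming trace that never reuses data does not.
print(miss_curve(list(range(8)), 3))       # [8, 8, 8, 8]
```

The second curve is flat, which is the signal an operating system would need to conclude that extra cache is wasted on that job.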

Second, Sanchez and Beckmann say, the OS’s kernel needs at most a few thousand more lines of code. That’s not much of an addition, considering that Linux’s kernel in 2012 weighed in with 15 million lines and Apple’s and Microsoft’s kernels unofficially contained tens of millions more than that.

One of Jigsaw’s more prominent features is a software module, to be folded in with the OS, that the researchers call Peekahead. This module was adapted from the Lookahead Cache, developed more than a decade ago by Beijing computer scientists. Peekahead computes the best configuration of CPU caches based on the jobs it expects the cores to do in the coming clock cycles.
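The flavor of this kind of computation can be sketched with a simple lookahead-style greedy partitioner. This is an illustration only, not the Peekahead implementation: it assumes each job comes with a miss curve (misses as a function of cache units granted), and it repeatedly gives cache to whichever job saves the most misses per unit, looking ahead over several units at once so that it is not fooled by flat stretches in a curve. The name `lookahead_partition` and the sample curves are hypothetical.

```python
def lookahead_partition(miss_curves, total_units):
    """Split total_units of cache among jobs using a lookahead-style greedy rule.

    miss_curves[j][s] is the (assumed known) miss count of job j with s units.
    Returns the number of units granted to each job.
    """
    alloc = [0] * len(miss_curves)
    remaining = total_units
    while remaining > 0:
        best = None  # (misses saved per unit, job index, extra units)
        for job, curve in enumerate(miss_curves):
            max_extra = min(remaining, len(curve) - 1 - alloc[job])
            for extra in range(1, max_extra + 1):
                saved = curve[alloc[job]] - curve[alloc[job] + extra]
                utility = saved / extra
                if best is None or utility > best[0]:
                    best = (utility, job, extra)
        # Stop early if no job would benefit from any more cache.
        if best is None or best[0] <= 0:
            break
        _, job, extra = best
        alloc[job] += extra
        remaining -= extra
    return alloc


# Job 0 is compute-bound (flat curve); job 1 is memory-hungry.
curves = [[10, 10, 10, 10, 10], [100, 90, 50, 20, 10]]
print(lookahead_partition(curves, 4))                        # [0, 4]

# Job 0 gains nothing from one unit but a lot from two; lookahead still finds it.
print(lookahead_partition([[6, 6, 4, 3], [8, 8, 8, 8]], 3))  # [3, 0]
```

The nested search makes this sketch expensive for large caches and many jobs, which is the kind of overhead that a production-quality allocator would need to avoid.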

“When you let software be in charge, you have to be careful of your overhead,” Sanchez says. A poorly designed cache management system, he says, might recompute the optimum cache size and trim the cache to match every fraction of a second. But doing so taxes the CPU. And what’s the point of a CPU efficiency algorithm that requires extraordinary amounts of CPU time? “The exact solution is really expensive. So we have to come up with a quick way of getting the job done so that the overhead doesn’t negate the gains you get,” he says.

Linley Gwennap of the Linley Group, a semiconductor consulting firm based in Mountain View, Calif., says he’s impressed with Jigsaw but cautions that it’s not quite ready for chip-fab prime time. “The problem is generally that a scheme that’s effective on one processor may not be effective on another processor with a different hardware design,” he says. “Every time the processor changes, you have to redo your software, which customers generally don’t like.”

Sanchez counters that software applications and utilities would remain unaffected by Jigsaw. “Only the operating system code needs to be aware of that intimate knowledge of the hardware, like the topology of the different portions of the cache,” he says.

Jason Mars, an assistant professor of computer science at the University of Michigan, says Jigsaw works well as a proof of concept, which he says chipmakers might adapt as they see fit.

“The crisp novelty in this work has to do with the codesign between hardware and software,” Mars says. “Much of the prior work was biased in one direction. More was expected to be done in hardware, and there was a little bit less flexibility. Jigsaw really...builds a holistic system that spans both the hardware and the software.”

This article originally appeared in print as "The Cache Machines."