Despite the modern world’s fixation on touchscreen smartphones and tablets, most homes and businesses remain cluttered with objects that lack any digital interface. Now, those ordinary objects could get an upgrade thanks to new smart tags that harness reflected Wi-Fi signals to add touch-based controls to any surface.

The idea of an inexpensive tag capable of transforming any object into a smart device is not necessarily new. But most cheap smart tags that lack batteries or complicated electronics can only perform simple functions, such as passively storing and sharing identifying information about an object.

By comparison, new LiveTag technology allows for interactive controls or keypads that can stick onto objects, walls, or even clothing, and let people remotely operate music players or receive hydration reminders based on the amount of liquid remaining in a water bottle.

“These tags can sense the status of everyday objects and humans, and also sense human interactions with plain everyday objects,” says Xinyu Zhang, assistant professor in electrical and computer engineering at the University of California, San Diego.

Zhang and his colleagues at the University of Wisconsin–Madison developed the LiveTag technology after brainstorming about ways to easily incorporate ordinary objects into the Internet of Things without adding costly hardware and batteries. Their LiveTag designs and early prototypes are detailed in a paper posted on the University of Wisconsin website.

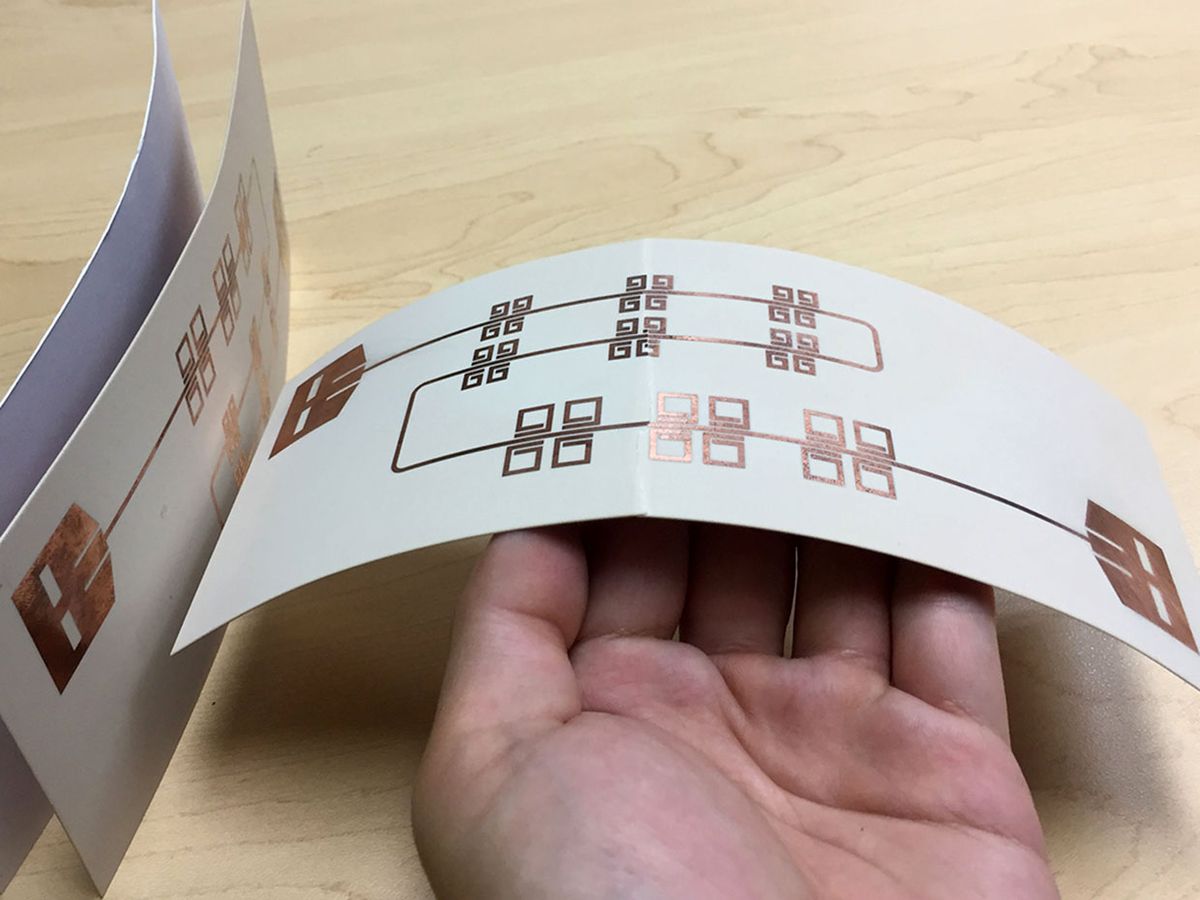

The basic LiveTag technology is deceptively simple: copper foil printed onto lightweight paper-like materials without any batteries or discrete electronic components. The key lies in the geometric copper foil patterns, which are designed to absorb Wi-Fi signals of specific frequencies even as the overall tag generally reflects 2.4/5 GHz signals from nearby Wi-Fi device transmitters.

Each pattern’s selective signal absorption in the midst of the overall reflection creates a “notch” at a specific frequency on the spectrum that can be detected by Wi-Fi device receivers. Together, all of those notches for each specific tag create a unique spectrum signature that makes individual LiveTags stand apart from one another.
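To make the idea of a notch-based signature concrete, here is a minimal Python sketch. It is not the researchers' detection code: the function name `find_notches`, the 6 dB depth threshold, and the sample spectrum values are all assumptions chosen for illustration.

```python
from statistics import median

def find_notches(freqs_mhz, magnitudes_db, depth_db=6.0):
    """Return frequencies of local minima at least depth_db below the median level.

    Hypothetical helper: the threshold rule is an illustrative assumption,
    not the method described in the LiveTag paper.
    """
    floor_level = median(magnitudes_db) - depth_db
    notches = []
    for i in range(1, len(magnitudes_db) - 1):
        m = magnitudes_db[i]
        is_local_min = m < magnitudes_db[i - 1] and m <= magnitudes_db[i + 1]
        if m <= floor_level and is_local_min:
            notches.append(freqs_mhz[i])
    return notches

# Made-up reflected spectrum sampled every 5 MHz across the 2.4 GHz band.
freqs = [2400 + 5 * i for i in range(17)]
mags = [-40.0] * 17
mags[2], mags[3], mags[4] = -44.0, -52.0, -44.0  # notch centered at 2415 MHz
mags[10] = -50.0                                 # notch centered at 2450 MHz

# The ordered set of notch frequencies acts as the tag's signature.
signature = tuple(find_notches(freqs, mags))
```

In this toy model, two tags with different copper patterns would simply produce different `signature` tuples.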

Once those notches have been established for each LiveTag, a person’s touch on various points of the tag will change the overall Wi-Fi spectrum signature of the individual tag. Such touch-based change can be detected and interpreted as specific button presses or control inputs—such as hitting the start/pause button on a music controller LiveTag or using a sliding bar for volume control—by customized software or apps running on nearby Wi-Fi devices.
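One way software on a nearby Wi-Fi device might turn a changed signature into a button press is sketched below. The sketch assumes that touching a point makes its notch vanish from its usual frequency; the article only says a touch changes the signature, so that mechanism, the `button_map` frequencies, and the 2 MHz tolerance are all hypothetical.

```python
def detect_touches(baseline_notches, current_notches, button_map, tolerance_mhz=2.0):
    """Return the controls whose baseline notch is missing from the current spectrum.

    Assumption (not from the source): a touch suppresses or shifts the notch
    tied to that touch point, so it no longer appears near its baseline frequency.
    """
    touched = []
    for freq in baseline_notches:
        still_present = any(abs(freq - c) <= tolerance_mhz for c in current_notches)
        if not still_present and freq in button_map:
            touched.append(button_map[freq])
    return touched

# Hypothetical music-controller tag: one notch per control.
baseline = [2415, 2450, 2470]
button_map = {2415: "play_pause", 2450: "volume_up", 2470: "volume_down"}

# Made-up measurement: the 2450 MHz notch has disappeared, others drifted slightly.
current = [2415.5, 2470]
pressed = detect_touches(baseline, current, button_map)
```

A slider such as the volume bar would need a finer-grained model than this on/off check, which the sketch does not attempt.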

Figuring out the right design for the printed copper foil patterns was no easy task. Zhang and his colleagues had to experiment with many different combinations of shapes, sizes, and materials for the LiveTags and run all those combinations through computer simulations.

Furthermore, the researchers had to create customized multi-antenna beamforming algorithms that work with Wi-Fi devices to detect the notches in the reflected Wi-Fi spectrum. This was necessary because it can be tricky to distinguish the reflected signal from the LiveTag from all the other Wi-Fi signal reflections coming from walls, objects, and even people walking around in a room.

To filter out those other signal reflections, the algorithms use a Wi-Fi device’s transmitter antennas to project beams in many different directions. As a result, most objects end up having many different reflection signatures that can be screened out by the algorithm as background noise, whereas the LiveTag notches remain clear and consistent in the spectrum detected by the Wi-Fi device receivers.
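The filtering idea can be illustrated with a toy voting scheme: keep only the notches that show up under most beam directions. This is a deliberately simplified stand-in, not the researchers' multi-antenna beamforming algorithm, and the function name, the 80 percent threshold, and the sample data are assumptions.

```python
from collections import Counter

def consistent_notches(notches_per_beam, min_fraction=0.8, bin_mhz=1.0):
    """Keep notch frequencies seen in at least min_fraction of the beam directions.

    Toy filter for illustration only; frequencies are grouped into bins of
    bin_mhz so small measurement jitter still counts as the same notch.
    """
    counts = Counter()
    for notches in notches_per_beam:
        # Use a set so each beam votes at most once per frequency bin.
        counts.update({round(f / bin_mhz) for f in notches})
    needed = min_fraction * len(notches_per_beam)
    return sorted(b * bin_mhz for b, c in counts.items() if c >= needed)

# Made-up notch lists from five beam directions. The tag's notches (2415, 2450)
# recur, while dips caused by walls or people change from beam to beam.
beams = [
    [2415, 2450, 2431],
    [2415, 2450],
    [2415, 2450, 2462],
    [2415, 2450, 2407],
    [2415, 2444],
]
tag_notches = consistent_notches(beams)
```

Here 2450 MHz survives even though one beam missed it, while the one-off dips are discarded as background.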

To show off the possibilities of LiveTag, Zhang and his colleagues demonstrated how touching the buttons and volume bar on a music controller tag could remotely trigger a Wi-Fi receiver—a demo that could presumably be replicated with an actual smart speaker or music-playing device. They also stuck a customized LiveTag onto an ordinary plastic bottle and showed how the tag could detect varying water levels inside the bottle through the same touch-responsive interaction. That technique could potentially be paired with a smartphone app to provide runners or cyclists with periodic reminders to drink water and avoid dehydration.

Earlier smart tags have typically relied on radio-frequency identification (RFID) chips that can be printed as flexible paper-like tags. Such RFID tags can provide identification and location-based information when attached to objects such as clothing in retail stores. Researchers have even shown how RFID tags embedded in clothing or bedsheets could act as health monitors for patients in hospitals. But when it comes to human touch, researchers have only been able to show when a person is or is not touching an RFID tag, Zhang explains. Furthermore, RFID tags require an external RFID reader, which can limit their use in many situations.

Companies such as Norway-based Thinfilm have also created smart tags or labels based on near-field communication (NFC) chips that can be read by many smartphones. But Thinfilm smart labels are focused on storing and sharing product information, and do not seem to offer any sort of complicated touch-responsive interaction.

Zhang and his colleagues had briefly considered turning to computer vision technology based on deep learning algorithms that could automatically identify when people are interacting with certain objects. They soon gave up on this idea because of privacy concerns about having video cameras watching people in their homes or businesses.

Despite its early promise, the LiveTag concept has some serious limitations. For one thing, the smart tags only work within a three-foot range of Wi-Fi devices, a highly restrictive range that will need to expand for LiveTag to be widely used. Zhang believes the team can boost LiveTag’s range with more transmitter antennas or by adding more redundant patterns on the tags to improve notch visibility in the Wi-Fi spectrum.

Another challenge is that the 2.4 GHz Wi-Fi spectrum generally has a limited bandwidth that can only accommodate so many notches, because each notch takes up a certain amount of bandwidth within the spectrum range. That means the current LiveTag prototypes can only have between 5 and 9 notches that reflect the different “touch points” for controls. The researchers may try expanding upon frequency-specific notches with touch points that are associated with selective changes in the Wi-Fi radio signal phases.
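The bandwidth constraint comes down to simple arithmetic, sketched here in Python. The 2.4 GHz band spans roughly 2400 to 2483.5 MHz; the per-notch widths passed in below are hypothetical values picked for illustration, since the article does not state how wide each notch is.

```python
BAND_MHZ = 2483.5 - 2400.0  # approximate width of the 2.4 GHz band: 83.5 MHz

def max_notches(band_mhz, notch_width_mhz):
    """How many non-overlapping notches of a given width fit in the band."""
    return int(band_mhz // notch_width_mhz)

def per_notch_budget(band_mhz, notch_count):
    """Average bandwidth available to each notch if they share the band evenly."""
    return band_mhz / notch_count

# Hypothetical notch widths (assumptions, not figures from the paper).
wide = max_notches(BAND_MHZ, 15.0)    # 5 notches
narrow = max_notches(BAND_MHZ, 10.0)  # 8 notches

# Working backward from the reported 5 to 9 notches, assuming an even split.
budget_for_5 = per_notch_budget(BAND_MHZ, 5)  # 16.7 MHz each
budget_for_9 = per_notch_budget(BAND_MHZ, 9)  # about 9.3 MHz each
```

Under that even-split assumption, narrowing each notch or using a wider band is the only way to add touch points, which is consistent with the researchers' interest in phase-based touch points.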

Still, even the early version of LiveTag has attracted interest from potential industry and research partners who are all proposing different applications, Zhang says. People in the healthcare field have reached out to his group with ideas about using such smart tags to monitor the daily activities and interactions of elderly people based on doors they open or objects they pick up. “This looks to them like quite an attractive solution, because it is non-intrusive and doesn’t require battery maintenance and doesn’t infringe on privacy,” Zhang says.

Jeremy Hsu has been working as a science and technology journalist in New York City since 2008. He has written on subjects as diverse as supercomputing and wearable electronics for IEEE Spectrum. When he’s not trying to wrap his head around the latest quantum computing news for Spectrum, he also contributes to a variety of publications such as Scientific American, Discover, Popular Science, and others. He is a graduate of New York University’s Science, Health & Environmental Reporting Program.