Smart Antennas Could Open Up New Spectrum For 5G

Future cellular networks could exploit the huge bandwidths available in millimeter-wave spectrum

People in real estate joke that there are only three things that matter in the business of buying and selling property: location, location, location. The same could be said for radio spectrum. The frequencies used for cellular communications have acquired the status of waterfront lots: highly coveted and woefully scarce. And like beach-home buyers in a bidding war, mobile operators must constantly jockey for these prime parcels, sometimes shelling out tens of billions of dollars for just a small sliver of the electromagnetic pie.

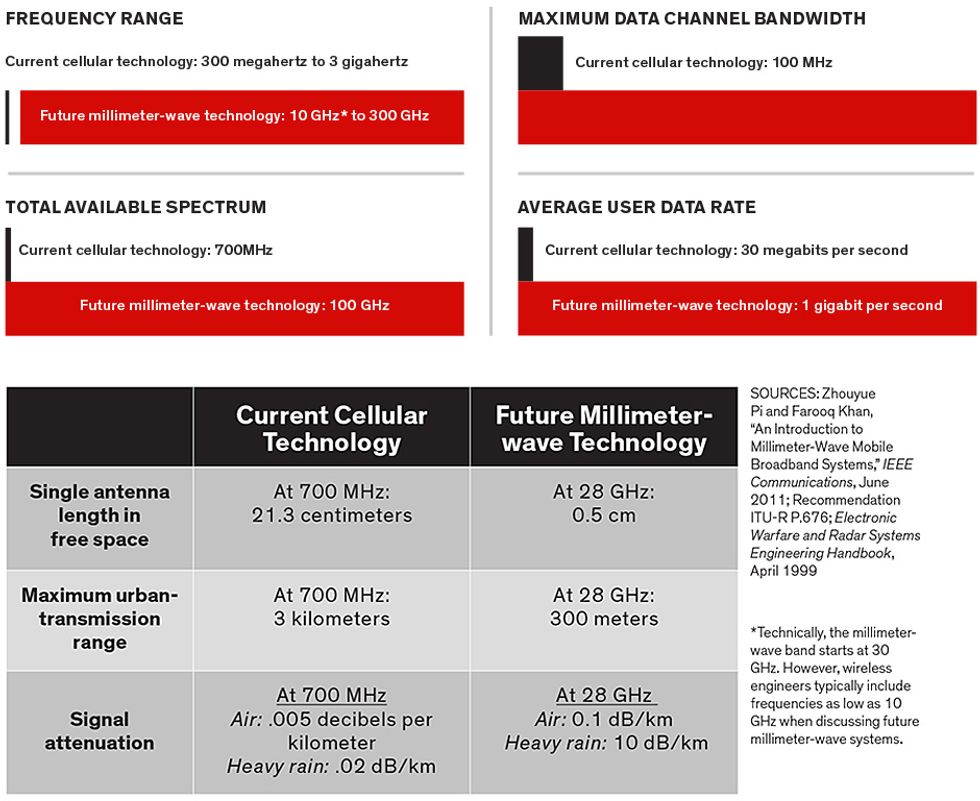

That’s because the cellular industry, throughout its four-decade existence, has relied exclusively on a strip of spectrum known as the ultrahigh frequency band, which comprises only about 1 percent of all regulated spectrum. Wireless engineers have long considered this frequency range—between 300 megahertz and 3 gigahertz—to be the “sweet spot” for mobile networking. Wavelengths here are short enough to allow for small antennas that can fit in handsets but still long enough to bend around or penetrate obstacles, such as buildings and foliage. Transmitted even at low power, these waves can travel reliably for up to several kilometers in just about any radio environment—be it in the heart of Tokyo or the farmlands of Iowa.

The trouble is, no matter how much operators are willing to pay for this spectrum, they can no longer get enough of it. The use of smartphones and tablets is soaring, and as people browse the Web, stream videos, and share photos on the go, they are moving more data over the airwaves than ever before. Mobile traffic worldwide is about doubling each year, according to reports from Cisco and Ericsson, and that exponential growth will likely continue for the foreseeable future. By 2020, the average mobile user could be downloading a whopping 1 terabyte of data annually—enough to access more than 1,000 feature-length films.

Wireless standards groups have devised all sorts of clever fixes to expand the capacity of today’s fourth-generation (4G) LTE cellular networks, including ones involving multiple antennas, smaller cells, and smarter coordination between devices. But none of these solutions will sustain the oncoming traffic surge for more than the next four to six years. Industry experts agree that fifth-generation (5G) cellular technology will need to arrive by the end of this decade. And to roll out these new networks, operators will undoubtedly need new spectrum. But where to find it?

Fortunately, it just so happens that there’s an enormous expanse above 3 GHz that, until now, has been largely overlooked. We’re talking about the millimeter waves.

By the ITU definition, the millimeter-wave band, also called the extremely high frequency band, spans from 30 to 300 GHz. In our use of the term, however, we also include most of the neighboring superhigh frequencies, from about 10 to 30 GHz, because these waves propagate similarly to millimeter waves. We estimate that government regulators could make as much as 100 GHz of this spectrum available for mobile communications—more than 100 times as much bandwidth as cellular networks access today. By tapping it, operators could offer hundreds of times the data capacity of 4G LTE systems, enabling download rates of up to tens of gigabits per second while keeping consumer prices relatively low.

If you think that scenario sounds too good to be true, you’ve got plenty of company. In fact, until very recently, most wireless experts would have said just as much. Historically, operators rejected millimeter-wave spectrum because the necessary radio components were expensive and because they believed those frequencies would propagate poorly between traditional towers and handsets. They also worried that millimeter waves would be excessively absorbed or scattered by the atmosphere, rain, and vegetation and wouldn’t penetrate indoors.

But those beliefs are now rapidly fading. Recent research is convincing the cellular industry to take a second look at this vast and underutilized spectrum.

Although new to mobile communications, millimeter-wave technology has a surprisingly long and storied history. In 1895, a year before Italian radio pioneer Guglielmo Marconi awed the public with his wireless telegraph, an Indian polymath named Jagadish Chandra Bose showed off the world’s first millimeter-wave signaling apparatus in Kolkata’s town hall. Using a spark-gap transmitter, he reportedly sent a 60-GHz signal through three walls and the body of the region’s lieutenant governor to a funnel-shaped horn antenna and detector 23 meters away. As proof of its journey, the message triggered a simple contraption that rang a bell, fired a gun, and exploded a small mine.

More than half a century passed, however, before Bose’s inventions left the laboratory. Soldiers and radio astronomers were the first to use these millimeter-wave components, which they adapted for radar and radio telescopes. Automobile makers followed suit several decades later, tapping millimeter-wave frequencies for cruise control and collision-warning systems.

The telecommunications community initially took notice of this new spectrum frontier during the dot-com boom of the late 1990s. Cash-flush start-ups figured that the abundant bandwidth still up for grabs in some millimeter-wave bands would be ideal for local broadband networks, such as those in homes and businesses, and for delivering “last-mile” Internet services to places where laying cable was too difficult or too expensive. With great fanfare, government regulators around the world, including ones in Europe, South Korea, Canada, and the United States, set aside or auctioned off huge allotments of millimeter-wave spectrum for these purposes.

But consumer products were slow to come. Companies quickly realized that millimeter-wave RF circuits and antenna systems were quite expensive. The semiconductor industry simply didn’t have the technical capability or market demand to manufacture commercial-grade devices fast enough to operate at millimeter-wave frequencies. So for nearly two decades, this enormous swath of bandwidth lay all but vacant.

That, however, is changing. Thanks in part to Moore’s Law and the growing popularity of automatic parking and other radar-based luxuries in cars, it’s now possible to package an entire millimeter-wave radio on a single CMOS or silicon-germanium chip. So millimeter-wave products are finally hitting the mass market. Many high-end smartphones, televisions, and gaming laptops, for example, now include wireless chip sets based on two competing millimeter-wave standards: Wireless High Definition (WirelessHD) and Wireless Gigabit (WiGig).

These technologies aren’t meant for communication between, say, a smartphone and a cell tower. Rather, they’re used to transfer large amounts of data, such as uncompressed video, over short distances between machines without cumbersome Ethernet or HDMI cables. Both WirelessHD and WiGig systems operate at around 60 GHz in a frequency band typically about 5 to 7 GHz wide, give or take a couple of gigahertz depending on the country. Such bands contain far more spectrum than even the fastest Wi-Fi network can access, enabling rates up to about 7 gigabits per second.

Equipment makers for cellular networks are likewise beginning to take advantage of the ultrawide bands available in millimeter-wave spectrum. Several suppliers, including Ericsson, Huawei, Nokia, and the start-up BridgeWave, in Santa Clara, Calif., are now using millimeter waves to provide high-speed line-of-sight connections between base stations and backbone networks, eliminating the need for costly fiber links.

Yet even as millimeter waves are enabling new indoor and fixed wireless services, many experts have remained skeptical that these frequencies could support cellular links, such as to a tablet in a taxi zipping through Times Square. A major concern is that millimeter-wave mobile networks won’t be able to provide coverage everywhere, particularly in cluttered outdoor environments such as cities, because they can’t always guarantee a line-of-sight connection from a base station to a handset. If, for instance, a smartphone user were to suddenly pass behind a tree or duck into a covered entryway, a millimeter-wave transmission probably couldn’t penetrate these obstacles.

But whether the signal would actually drop out in such a situation is another story. And, as it turns out, it’s a pretty interesting one.

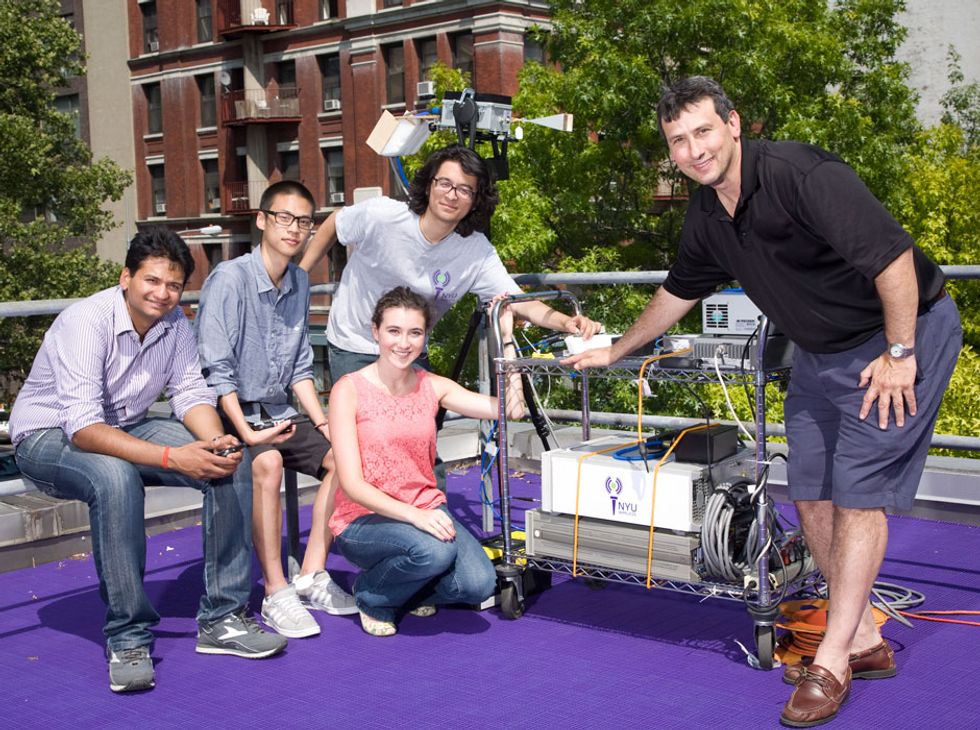

In August 2011, one of the authors, Rappaport—then at the University of Texas at Austin—began working with students on an extensive study of millimeter-wave behavior in the urban jungle. We built a wideband signaling system known as a channel sounder, which allowed us to analyze how a millimeter-wave transmission scatters and reflects off objects in its path—and how quickly these signals lose energy. Then we placed four transmitters on university rooftops and distributed several dozen receivers around campus.

The type of antenna we chose for these experiments is known as a horn antenna, an evolution of the one Bose originally constructed more than 100 years ago. Like a megaphone, it directs electromagnetic energy in a concentrated beam, thereby increasing gain without requiring more transmitting or receiving power. By mounting our antennas on rotatable robotic platforms, we could point the beams in any direction.

Such beam steering will be a key component of future millimeter-wave mobile systems, on both the base station and handset ends of the network. In the real world, as opposed to our experimental setup, mobile equipment such as smartphones and tablets will require electrically steerable antenna arrays that are much smaller and a lot more sophisticated than the ones we used for our tests. More on that later.

In total, we sampled over 700 different combinations of transmitter-receiver positions using frequencies around 38 GHz. This spectrum band is a good candidate for cellular systems because it has already been designated for commercial use in many parts of the world but so far is only lightly occupied.

To the great surprise of our mobile-industry colleagues, we found that this millimeter-wave spectrum can provide remarkably good coverage. Our measurements showed, for instance, that a handset doesn’t need a line-of-sight path to link with a base station. The highly reflective nature of these waves turns out to be an advantage rather than a weakness. As they bounce off solid materials such as buildings, signs, and people, the waves disperse throughout the environment, increasing the chance that a receiver will pick up a signal—provided it and the transmitter are pointed in the proper directions.

Of course, as with any wireless system, the likelihood of losing a connection increases as the receiver moves away from the transmitter. We have observed that for millimeter-wave signals transmitted at low power, outages start occurring at around 200 meters. This limited range would have been a problem for earlier generations of cellular systems, in which a typical cell radius extended up to several kilometers. But in the past decade or so, operators have had to significantly shrink cell sizes in order to expand capacity. In especially dense urban centers, such as downtown Seoul, South Korea, they have begun deploying small cells (compact base stations that fit on lampposts or bus-station kiosks) with ranges no larger than about 100 meters.

And there’s another reason small cells may be ideal for millimeter-wave communications. It’s well known that rain and air can attenuate millimeter waves over large distances, causing them to lose energy more quickly than the longer-wavelength ultrahigh frequencies used today. But previous research has shown that over relatively short ranges of a few hundred meters, these natural elements have little effect on most millimeter-wave frequencies, although there are a few exceptions.
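For a sense of scale at these short ranges, the spreading loss alone can be estimated with the standard Friis free-space formula. The sketch below is a textbook calculation, not the model fitted to the measurements described in this article: it ignores rain, atmospheric absorption, reflections, and antenna gain, and the 2-GHz comparison frequency is an illustrative assumption.

```python
import math

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def free_space_path_loss_db(distance_m: float, frequency_hz: float) -> float:
    """Friis free-space path loss between two isotropic antennas, in dB."""
    ratio = 4.0 * math.pi * distance_m * frequency_hz / SPEED_OF_LIGHT_M_PER_S
    return 20.0 * math.log10(ratio)


if __name__ == "__main__":
    # 2 GHz stands in for today's UHF cellular bands (assumed for comparison);
    # 28, 38, and 73 GHz are the bands measured in Austin and New York City.
    for label, freq_hz in (("2 GHz", 2e9), ("28 GHz", 28e9),
                           ("38 GHz", 38e9), ("73 GHz", 73e9)):
        loss = free_space_path_loss_db(200.0, freq_hz)
        print(f"{label:>7}: {loss:6.1f} dB at 200 m")
```

At 200 meters this gives roughly 107 dB at 28 GHz, about 23 dB more than at 2 GHz. That gap is what directional antenna gain has to help close.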

To bolster our measurement data, we took our channel-sounding system to New York City, one of the most challenging radio environments in the world. There, in 2012 and 2013, we studied signal propagation at 28 and 73 GHz, two other commercially viable bands, and the results were nearly identical to our findings in Austin. Even on Manhattan’s congested streets, our receivers could link with a transmitter 200 meters away about 85 percent of the time. By combining energy from multiple signal paths, more advanced antennas could extend the coverage range beyond 300 meters.

A New Spectrum Frontier

Future 5G mobile networks could tap vast spectrum reserves with millimeter-wave devices. Here’s how this emerging technology stacks up against today’s cellular systems

We also tested how well these frequencies penetrate common building materials and found that although they pass through drywall and clear glass without losing much energy, they’re almost completely blocked by brick, concrete, and heavily tinted glass. So while users might get some reception between rooms or through transparent windows, operators will typically need to install repeaters or wireless access points to bring signals indoors.

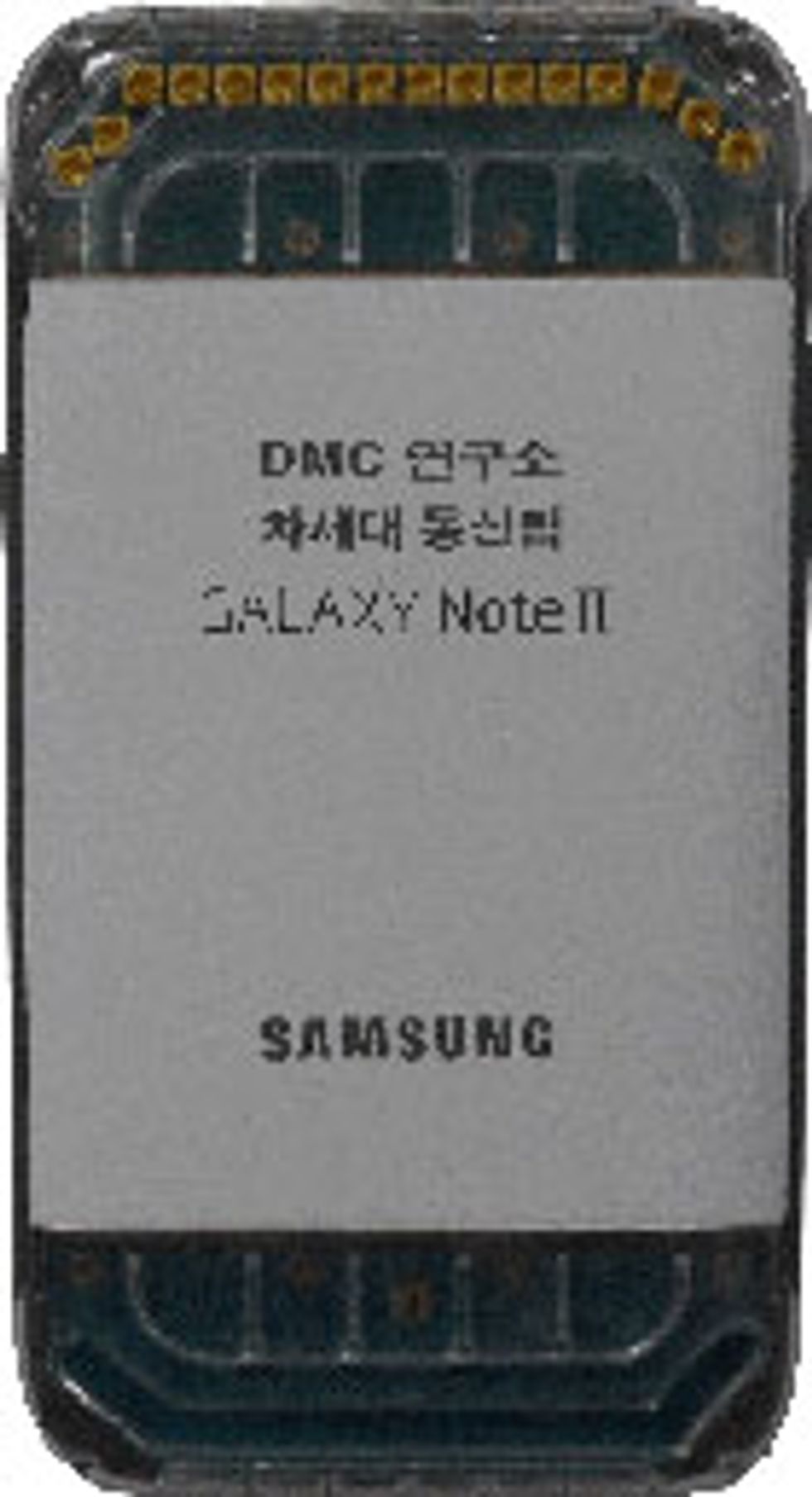

Encouraged by early measurements of millimeter-wave behavior in Austin, the other two of us (Roh and Cheun) and our colleagues at Samsung Electronics Co., in Suwon, South Korea, began building a prototype communication system for a commercial cellular network. In place of bulky, motorized horn antennas, we used arrays of rectangular metal plates called patch antennas. A big benefit of these antennas is their size, which as a rule of thumb must be at least half the signal’s wavelength. Because we designed our prototype to work at 28 GHz (a wavelength of about 1 centimeter), each patch antenna could be very small: just 5 millimeters across, not quite the diameter of an aspirin tablet.
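The antenna sizing can be checked with the free-space relation between wavelength and frequency, λ = c/f. The short sketch below uses hypothetical helper names and ignores the effect of the antenna's substrate material, so it is only a rough check of the figures quoted above.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def wavelength_mm(frequency_hz: float) -> float:
    """Free-space wavelength in millimeters for a given frequency in hertz."""
    return SPEED_OF_LIGHT_M_PER_S / frequency_hz * 1000.0


def half_wavelength_mm(frequency_hz: float) -> float:
    """Half the free-space wavelength, the rule-of-thumb patch dimension."""
    return wavelength_mm(frequency_hz) / 2.0


if __name__ == "__main__":
    f = 28e9  # prototype carrier frequency from the article
    print(f"wavelength at 28 GHz:      {wavelength_mm(f):.2f} mm")
    print(f"half wavelength at 28 GHz: {half_wavelength_mm(f):.2f} mm")
```

At 28 GHz this gives a wavelength of about 10.7 mm and a half wavelength of about 5.4 mm, in line with the 5-millimeter patches described above.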

A single 28-GHz patch antenna wouldn’t be of much use for cellular transmissions, because gain decreases as antenna size shrinks. But by arranging tens of these tiny panels in a grid pattern, we can magnify their collective energy without increasing transmission power. Such antenna arrays have long been used for radar and space communications, and many chipmakers, including Intel, Qualcomm, and Samsung, are now incorporating them into WiGig chip sets. Like a horn antenna or satellite dish, an array increases gain by focusing radio waves in a directional beam. But because the array creates this beam electronically, it can steer the beam quickly, allowing it to find and maintain a mobile connection.
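How much an array helps can be estimated with a common idealization: if N identical elements combine perfectly in phase, the gain over a single element is 10·log10(N) decibels. The sketch below assumes exactly that (no losses, no coupling between elements), so real arrays will fall somewhat short of these numbers.

```python
import math


def ideal_array_gain_db(num_elements: int) -> float:
    """Gain of an ideal N-element array relative to one element, in dB.

    Assumes identical elements and perfectly coherent combining.
    """
    if num_elements < 1:
        raise ValueError("an array needs at least one element")
    return 10.0 * math.log10(num_elements)


if __name__ == "__main__":
    for n in (1, 16, 32, 64):
        print(f"{n:3d} elements: {ideal_array_gain_db(n):5.2f} dB")
```

Under this idealization, each doubling of the element count adds about 3 dB, so a 32-element array gains about 15 dB and a 64-element array about 18 dB.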

An array that locks its beam on a moving target is called an adaptive, or smart, antenna array. It works like this: As each patch antenna in the array transmits (or receives) a signal, the waves interfere constructively to increase gain in one direction while canceling one another out in other directions. The larger the array, the narrower the beam. To steer this beam, the array varies the amplitude or phase (or both) of the signal at each patch antenna. In a mobile network, a transmitter and receiver would connect with each other by sweeping their beams rapidly, like a searchlight, until they found the path with the strongest signal. They would then sustain the link by evaluating the signal’s characteristics, such as its direction of arrival, and redirecting their beams accordingly.
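The steering and searchlight-style sweep described above can be sketched in a few lines. This is a simplified illustration, not the prototype's algorithm: it assumes a one-dimensional (linear) array with half-wavelength spacing, phase-only steering, and a single ideal signal path, and all function names are hypothetical.

```python
import cmath
import math


def steering_phases(num_elements, steer_deg, spacing_wavelengths=0.5):
    """Per-element phase shifts (radians) that point a linear array at steer_deg."""
    k = 2.0 * math.pi * spacing_wavelengths
    s = math.sin(math.radians(steer_deg))
    return [-k * n * s for n in range(num_elements)]


def array_response(phases, path_deg, spacing_wavelengths=0.5):
    """Magnitude of the combined signal for a plane wave along path_deg."""
    k = 2.0 * math.pi * spacing_wavelengths
    s = math.sin(math.radians(path_deg))
    total = sum(cmath.exp(1j * (k * n * s + phase))
                for n, phase in enumerate(phases))
    return abs(total)


def sweep_for_best_beam(num_elements, path_deg, candidates_deg):
    """Try each candidate beam direction and keep the one with the strongest signal."""
    return max(candidates_deg,
               key=lambda c: array_response(steering_phases(num_elements, c), path_deg))


if __name__ == "__main__":
    best = sweep_for_best_beam(16, path_deg=20.0, candidates_deg=range(-60, 61, 5))
    print("best beam direction:", best, "degrees")
    # A larger array is more selective: 5 degrees off target, the normalized
    # response of 32 elements is much lower than that of 8 elements.
    for n in (8, 32):
        off_target = array_response(steering_phases(n, 0.0), 5.0) / n
        print(f"{n:2d} elements, 5 degrees off target: {off_target:.2f} of peak")
```

When the beam points straight at the signal path, all element contributions add in phase and the response equals the number of elements; the off-target comparison illustrates why a larger array produces a narrower beam.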

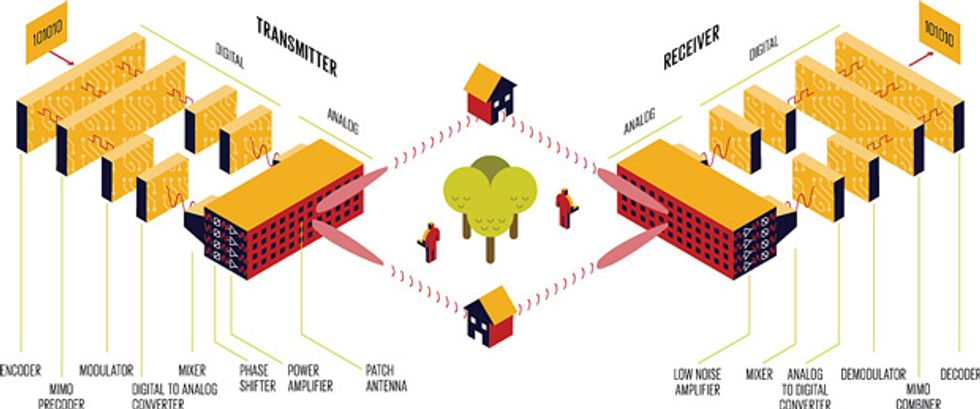

This beam forming and steering can be done in a couple of different ways. It can be done in the analog stage with electronic phase shifters or amplifiers, just before a signal is transmitted (or just after it’s received). Or it can be done digitally, before the signal is converted into analog (or after it’s digitized). There are pros and cons to both approaches. While digital beam forming offers better precision, it’s also more complex—and hence more costly—because it requires separate computational modules and power-hungry digital-to-analog (or analog-to-digital) converters for each patch antenna. Analog beam forming, on the other hand, is simpler and cheaper, but because it uses fixed hardware, it is less flexible.

To get the best of both worlds, we’ve designed a hybrid architecture. We use phase shifters on the analog front end to form sharp, directional beams, which increase our antenna’s communication range. And we use digital processing on the back end to separately control different subsections of the array. The digital input lets us do more advanced tricks, such as aim separate beams at several handsets simultaneously or send multiple data streams to a single device, thereby increasing its download rate. Such spatial multiplexing techniques are known as multiple-input, multiple-output, or MIMO.
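The division of labor in a hybrid design can be illustrated with a toy model. The sketch below is an assumption-laden simplification, not Samsung's implementation: each digital chain drives one linear subarray with half-wavelength spacing, the analog stage applies phase shifts only, and the function names, beam angles, and symbol values are hypothetical.

```python
import cmath
import math


def subarray_weights(num_elements, steer_deg, spacing_wavelengths=0.5):
    """Analog stage: unit-magnitude phase-shifter weights for one subarray."""
    k = 2.0 * math.pi * spacing_wavelengths
    s = math.sin(math.radians(steer_deg))
    return [cmath.exp(-1j * k * n * s) for n in range(num_elements)]


def hybrid_transmit(symbols, digital_matrix, analog_weights):
    """Return the signal fed to every antenna, grouped by subarray.

    symbols        -- one complex data symbol per stream
    digital_matrix -- digital_matrix[chain][stream], the digital mixing stage
    analog_weights -- analog_weights[chain], phase-shifter weights per antenna
    """
    # Digital stage: decide which mix of data streams each chain carries.
    chain_signals = [sum(coeff * sym for coeff, sym in zip(row, symbols))
                     for row in digital_matrix]
    # Analog stage: phase-shift each chain's signal across its subarray.
    return [[w * chain_signals[c] for w in weights]
            for c, weights in enumerate(analog_weights)]


if __name__ == "__main__":
    # Two 32-element subarrays aimed at two handsets (angles are made up).
    beams = [subarray_weights(32, -20.0), subarray_weights(32, 25.0)]
    one_stream_per_beam = [[1, 0], [0, 1]]
    antenna_signals = hybrid_transmit([1 + 0j, -1 + 0j], one_stream_per_beam, beams)
    print(len(antenna_signals), "subarrays,", len(antenna_signals[0]), "antennas each")
```

Changing only the small digital matrix switches between serving two handsets with one stream each and sending both streams toward a single device, while the phase shifters keep doing the beam pointing.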

For example, in our 28-GHz prototype system, which Samsung announced in May 2013, we equipped each transmitter and receiver with a 64-antenna array about the size of a Post-it note. However, we divided this array digitally into two 32-antenna MIMO channels. Each channel used 500 MHz of spectrum and was capable of forming a 10-degree-wide beam. In a laboratory test, we used these independent beams to transmit nearly error-free data at more than 500 megabits per second to two mobile stations at once. In another test, we used both channels to connect with just one station, achieving a data rate of more than 1 Gb/s. For comparison, the data rate of a 4G LTE connection in New York City averages around 10 Mb/s and in theory can be as high as 50 Mb/s.
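These figures can be put in perspective with two simple ratios: bits per second per hertz of spectrum, and speedup over the quoted LTE average. The sketch below treats the reported "more than" values as exact lower bounds, so the results are floors rather than measurements; the function names are hypothetical.

```python
def spectral_efficiency_bps_per_hz(rate_bps: float, bandwidth_hz: float) -> float:
    """Data rate delivered per hertz of spectrum."""
    return rate_bps / bandwidth_hz


def speedup(new_rate_bps: float, old_rate_bps: float) -> float:
    """How many times faster the new rate is than the old one."""
    return new_rate_bps / old_rate_bps


if __name__ == "__main__":
    per_channel = spectral_efficiency_bps_per_hz(500e6, 500e6)
    print(f"per-channel efficiency: at least {per_channel:.1f} bit/s/Hz")
    print(f"1 Gb/s vs. 10 Mb/s LTE average: {speedup(1e9, 10e6):.0f}x")
    print(f"1 Gb/s vs. 50 Mb/s LTE peak:    {speedup(1e9, 50e6):.0f}x")
```

The per-channel efficiency of about 1 bit per second per hertz is modest, which suggests the prototype's speed comes mainly from the sheer width of the 500-MHz channels.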

When we took our prototype outdoors in Suwon, a city near Seoul, we showed that it could maintain similar data rates even when we moved the mobile stations in random directions at up to 8 kilometers per hour—about the speed of a fast jog. We also tested the system’s range using transmission power comparable to that of current 4G LTE networks. Even in non-line-of-sight conditions, we found that a mobile receiver could reliably connect with a transmitter up to almost 300 meters away, which supports the measurement results from Austin and New York City. When the stations were in sight of one another, their range was expanded to almost 2 km. We believe that even longer distances are possible, but our experimental license didn’t allow us to test them.

Remember, too, that this prototype was just a proof-of-concept system. By using wider bandwidths, narrower beams, or more MIMO channels, real-world networks could achieve even higher data rates and larger coverage ranges. For instance, computer simulations of imagined small-cell networks using three-dimensional city models suggest that operators could reasonably provide data rates upwards of several gigabits per second.

One important limitation, though, will be the space available in handsets and base stations for these sophisticated antenna arrays. At Samsung, we’ve begun exploring arrangements of 28-GHz patch antennas in the Galaxy Note II. So far we’ve found that it’s possible to fit as many as 32 of these little radiators around the smartphone’s top and bottom edges while still providing 360 degrees of coverage. We expect future millimeter-wave base stations to be able to house 100 or more antennas.

These hardware experiments, and the measurement campaigns in Austin and New York City, have convinced us that millimeter-wave cellular communication will be not just feasible but revolutionary. The work of both our groups, however, is only the beginning. Engineering full-scale millimeter-wave networks will require robust statistical models of millimeter-wave channels, streamlined beam-forming algorithms, and new power-efficient air-interface standards, among many other design challenges. Government regulators will also have to take the initiative in making millimeter-wave spectrum available for cellular services.

Meanwhile, as industry groups worldwide begin considering candidates for 5G technologies, including schemes for better interference management and dense small-cell architectures, they’re recognizing that millimeter-wave systems will be a key part of this mix. By 2020, when the first commercial 5G networks will likely start rolling out, millimeter-wave bands will no longer be regarded as the abandoned backwoods lots of radio real estate. They’ll be the most fashionable destinations of all.

This article originally appeared in print as “Mobile’s Millimeter-wave Makeover.”

About the Authors

Theodore S. Rappaport is the founding director of New York University’s wireless research center. With coauthors Wonil Roh and Kyungwhoon Cheun, vice presidents at Samsung Electronics, he is helping to realize future 5G networks. “I haven’t been this excited about cellular technology since I got my Ph.D. in 1987, when the industry was just getting started,” Rappaport says.