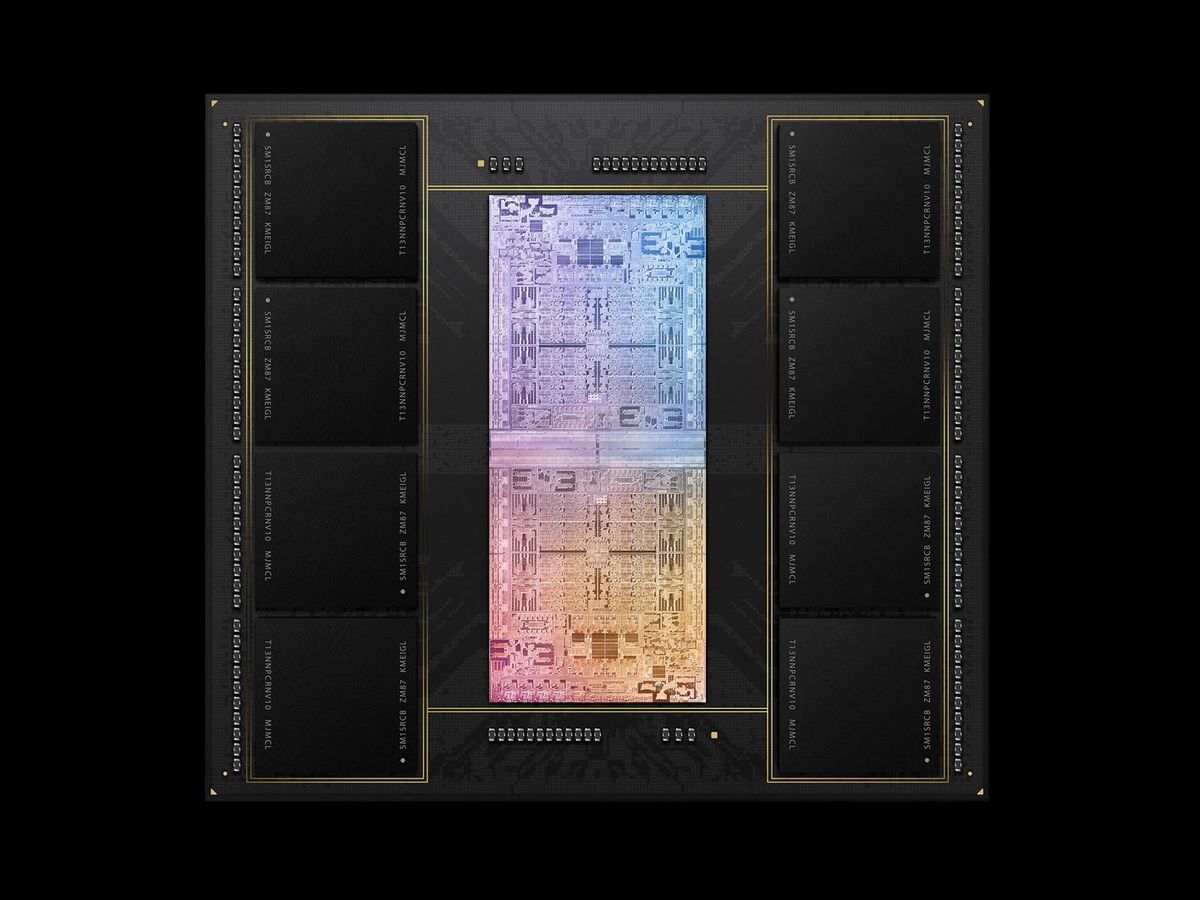

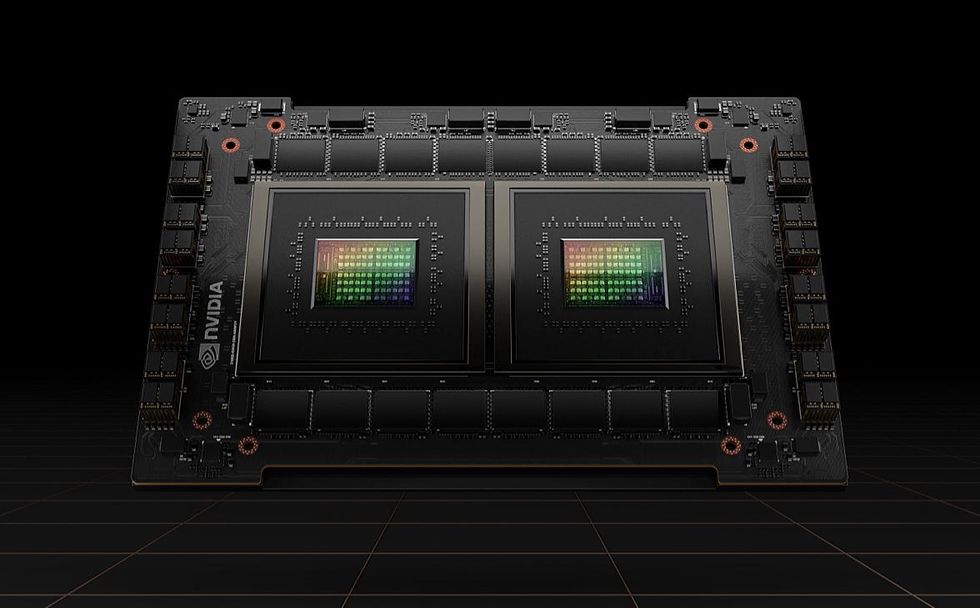

Apple once again surprised enthusiasts and analysts with its announcement of the M1 Ultra, which effectively fuses two M1 Max chips into one. The result is a dual-chip design that software sees as a single piece of silicon. Nvidia delivered similar news at its GPU Technology Conference 2022, where CEO Jensen Huang announced that the company will fuse two of its new Grace CPUs into a single “Superchip.”

These announcements target different markets. Apple has its sights set on the consumer and professional workstation world, while Nvidia intends to compete in high-performance computing. Yet the divergence in purpose only underscores the broad challenges rapidly bringing the era of monolithic chip design to an end.

Multichip design isn’t new, but the idea has surged in popularity in the last five years. AMD, Apple, Intel, and Nvidia have all dabbled in it to varying degrees. AMD has pursued chiplet design with its EPYC and RYZEN processors. Intel plans to follow suit with Sapphire Rapids, an upcoming server architecture built from chiplets that Intel calls “tiles.” Now Apple and Nvidia have joined the party, though with designs that target significantly different markets.

The shift toward multichip design is driven by the challenges of modern chip manufacturing. Transistor miniaturization has slowed, yet transistor counts in leading-edge designs keep climbing, so those designs demand ever-larger dies.

Apple’s M1 Ultra has 114 billion transistors and a die area (the area of the silicon chip itself) of roughly 860 square millimeters. Apple hasn’t published an official figure for the M1 Ultra, but a single M1 Max die measures 432 mm². The transistor count of Nvidia’s Grace CPU is still under wraps, but the Hopper H100 GPU announced alongside it includes 80 billion transistors. For perspective, AMD’s 64-core EPYC Rome processor, released in 2019, has 39.5 billion transistors.

Transistor counts this high push modern chip production to its extremes, making multichip design more attractive. “Multichip module packaging has enabled the chip players to give better power efficiency and performance [with regard] to monolithic designs, as the die size for chips becomes larger and wafer-yield issues become more prominent,” Akshara Bassi, a research analyst at Counterpoint Research, said in an email. Aside from Cerebras, a startup attempting to build chips that span the entirety of a silicon wafer, the chip industry seems in agreement that monolithic design is becoming more trouble than it’s worth.

This shift toward chiplets has gone hand in hand with support from manufacturers. Taiwan Semiconductor Manufacturing Co. (TSMC) is a leader, offering a suite of advanced packaging technologies called 3DFabric. AMD uses 3DFabric technologies in some EPYC and RYZEN processor designs, and Apple almost certainly uses them for the M1 Ultra (Apple has not confirmed this, but the M1 Ultra is produced by TSMC). Intel has its own packaging technologies, such as EMIB and Foveros. Though originally meant for Intel’s own use, these technologies are becoming relevant to the broader industry as Intel Foundry Services opens its doors.

“The ecosystem around the foundational semiconductor design, manufacturing, and packaging has progressed to the point of supporting the design nodes to economically and reliably produce chiplet-based solutions,” Mark Nossokoff, a senior analyst at Hyperion Research, said in an email. “The software design tools to seamlessly integrate the various chiplets’ functionality have also matured to optimize targeted solution performance.”

Chiplets are here to stay, but for the moment, it’s a world of silos. AMD, Apple, Intel, and Nvidia are using their own interconnect designs meant for specific packaging technologies.

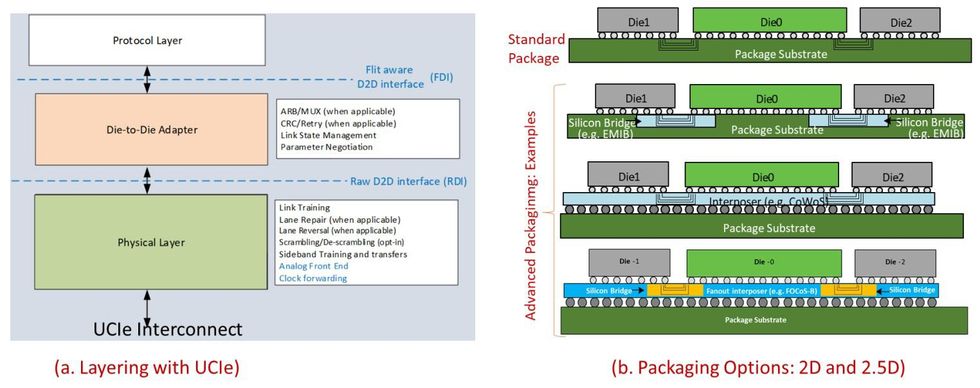

Universal Chiplet Interconnect Express (UCIe) hopes to bring the industry together. Announced on 2 March 2022, this open standard offers a “standard” 2D package option that targets “cost-effective performance” and an “advanced” package option that targets leading-edge designs. UCIe also supports off-package connections through PCIe and CXL, opening up the potential to connect multiple chips across multiple machines in a high-performance computing environment.

UCIe is a start, but the standard’s future remains to be seen. “The founding members of initial UCIe promoters represent an impressive list of contributors across a broad range of technology design and manufacturing areas, including the HPC ecosystem,” said Nossokoff, “but a number of major organizations have not as yet joined, including Apple, AWS, Broadcom, IBM, NVIDIA, other silicon foundries, and memory vendors.”

Bassi points out that Nvidia may be particularly reluctant to participate. The company has opened up its own NVLink-C2C interconnect for custom silicon integration, making NVLink-C2C a potential competitor to UCIe.

But while the fate of interconnects like UCIe and NVLink-C2C will determine the rules of the game, they’re unlikely to change the game being played. Apple’s M1 Ultra could be considered the canary in the coal mine: multichip design is no longer reserved for data centers. It’s coming to a home computer near you.


Matthew S. Smith is a freelance consumer-tech journalist. An avid gamer, he is a former staff editor at Digital Trends and is particularly fond of wearables, e-bikes, all things smartphone, and CES, which he has attended every year since 2009.