There’s an expectation in robotics that to be useful, robots have to be able to adapt to unstructured environments. Unstructured environments are generally places outside of a robotics lab or other controlled or semi-controlled setting, and could include anything from your living room to a tropical rainforest. An enormous amount of effort and creativity goes into designing robots that can reliably operate in places like these, with a focus on developing methods of sensing, locomotion, and manipulation that handle all kinds of different situations. It’s a very hard problem, even for humans, so we do a very human thing: We cheat.

Cheating, in this context, means that instead of adapting to an environment, you adapt the environment itself, modifying it so you can complete different tasks. Humans do this all the time, by using stepping stools to reach high places, adding stairs and ramps to overcome obstacles, attaching handles to objects to make manipulation easier, and so on. A robot that could do similar sorts of things has the potential to be far more capable than a robot that is simply passively adaptable, and at the IEEE International Conference on Robotics and Automation last week, we saw some new research that’s making it happen.

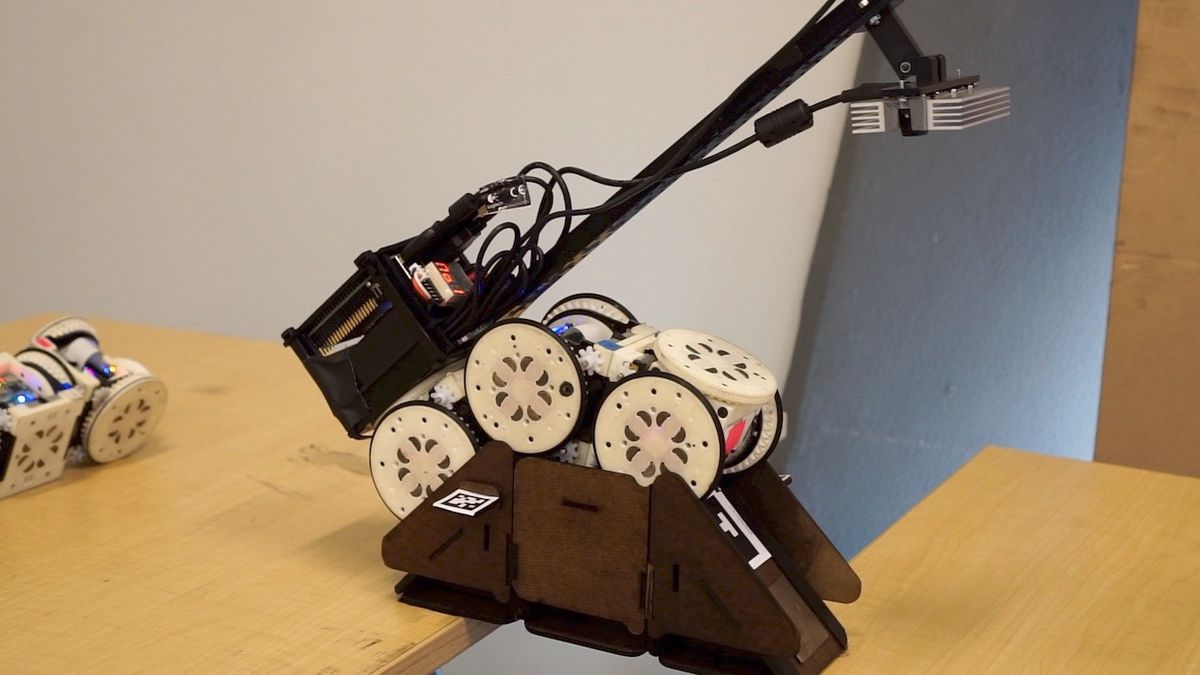

SMORES-EP is a robot from the University of Pennsylvania’s ModLab. It’s made up of an arbitrary number of independent wheeled cubes that can attach to one another magnetically in multiple configurations to form a larger cooperative robot that’s more capable than any single module alone. While robots like these are generally composed of identical modules, it’s not much of a stretch to consider how it might be beneficial to incorporate other objects, whether actively or passively useful, into the system.

For example, modular robots can have trouble crossing gaps or climbing up stairs or ledges, because they don’t scale well beyond a handful of individual modules. Rather than trying to find a way for the robot to handle obstacles like these on its own, researchers from UPenn and Cornell decided to teach the robot to modify its environment by giving it access to blocks and ramps that it could (autonomously) use to make obstacles less obstacle-y.

This behavior is completely autonomous: The system is given a high-level task to accomplish, and the ramps and blocks are placed in the environment for it to use if it decides that they’d come in handy, but it isn’t given explicit instructions about what to do in each situation. The video above shows some example tasks, but the system has no problem generalizing to other tasks in other environments that may require different environment augmentations.

Ramps and blocks are just two examples of objects that robots could use to augment their environment. It’s easy to imagine how a robotic system could carry augmentations with it (or the materials to construct them), or perhaps even scavenge materials locally, building things like ramps out of dirt or rocks. Heterogeneous teams of robots could include construction robots that modify obstacles so that scout robots can traverse them. And mobility is just one example of environmental augmentation: Perception is a challenge for robots, but what if you had a robot with lots of fancy sensors scout out an environment, and then place fiducials or RFID markers all over the place so that other robots with far cheaper sensors could easily navigate around and recognize objects? Of course, doing things like this may have an impact on any humans in the environment as well, which is something that the robots will likely have to consider. There’s a lot of potential here, and we’re excited to see what the researchers make of it.

“Perception-Informed Autonomous Environment Augmentation With Modular Robots,” by Tarik Tosun, Jonathan Daudelin, Gangyuan Jing, Hadas Kress-Gazit, Mark Campbell, and Mark Yim from the University of Pennsylvania and Cornell University, was presented at ICRA 2018 in Brisbane, Australia.

Evan Ackerman is a senior editor at IEEE Spectrum. Since 2007, he has written over 6,000 articles on robotics and technology. He has a degree in Martian geology and is excellent at playing bagpipes.