Usually, if your robot is warm to the touch, it’s symptomatic of some sort of horrific failure of its cooling system. Robots aren’t supposed to be warm; they’re supposed to be steely and cold. Or at least, steely and ambient temperature. Heat is almost always a byproduct that needs to be somehow accounted for and dealt with. Humans and many other non-reptiles expend a lot of energy keeping at a near-constant temperature, and as it turns out, being warmish all the time provides a lot of fringe benefits, including the ability to gather useful information about things that we touch. Now robots can have this ability, too.

Most of the touch sensors used by robots are force detectors. They can tell how hard a surface is, and sometimes what kind of texture it has. You can also add some temperature sensors into the mix to tell you whether the surface is warm or cold. However, most of the time, objects around you aren’t warm or cold, they’re ambient—whatever the temperature is around them is the temperature they are.

When we humans touch ambient temperature things, we often experience them as feeling slightly warmer or colder than they really are. There are two reasons for this: The first reason is that we’re toasty warm, so we’re feeling the difference in temperature between our skin and the thing. The second reason is that we’re also feeling how much the thing is sucking up our toasty warmness. In other words, we’re measuring how quickly the thing is absorbing our body heat, and in even more other words, we’re measuring its thermal conductivity. Try it: Something metal will feel cooler to you than something fabric or wood, even if they’re both the same temperature, because the metal is more thermally conductive and is sucking the heat out of you faster. The upshot of this is that we have the ability to gather additional data about materials that we touch because our fingers are warm.
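To put rough numbers on that, here is a minimal sketch using the textbook model of two large bodies brought into sudden contact. It is an illustration, not anything taken from the Georgia Tech paper. In that model the interface temperature depends on each material's thermal effusivity, which combines thermal conductivity with density and heat capacity. The property values, the 33 °C skin temperature, the 22 °C room temperature, and all function names below are assumptions chosen for the example.

```python
import math


def effusivity(k, rho, c_p):
    """Thermal effusivity sqrt(k * rho * c_p), in W*s^0.5/(m^2*K)."""
    return math.sqrt(k * rho * c_p)


def contact_temperature(t_skin, t_object, e_skin, e_object):
    """Interface temperature when two semi-infinite bodies touch.

    The result is an effusivity-weighted average of the two starting
    temperatures, so the body with the higher effusivity dominates.
    """
    return (e_skin * t_skin + e_object * t_object) / (e_skin + e_object)


# Approximate, illustrative values (assumptions, not measurements):
# (conductivity W/(m*K), density kg/m^3, specific heat J/(kg*K))
MATERIALS = {
    "aluminum": (237.0, 2700.0, 900.0),
    "wood": (0.12, 500.0, 1700.0),
}
SKIN = (0.37, 1100.0, 3400.0)


def felt_temperatures(t_skin=33.0, t_ambient=22.0):
    """Return the modeled contact temperature (deg C) for each material."""
    e_skin = effusivity(*SKIN)
    return {
        name: contact_temperature(t_skin, t_ambient, e_skin, effusivity(*props))
        for name, props in MATERIALS.items()
    }


if __name__ == "__main__":
    for name, temp in felt_temperatures().items():
        print(f"{name}: {temp:.1f} C at the contact surface")
```

Under these assumed values, the aluminum contact surface settles close to room temperature while the wood stays within a few degrees of skin temperature, which matches the everyday experience of metal feeling colder even though both objects start at the same temperature.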

Joshua Wade, Tapomayukh Bhattacharjee, and Professor Charlie Kemp from Georgia Tech presented a paper at an IROS workshop last month introducing a new kind of robotic skin that incorporates active heating. When combined with traditional force sensing, the active heating results in a multimodal touch sensor that helps to identify the composition of objects.

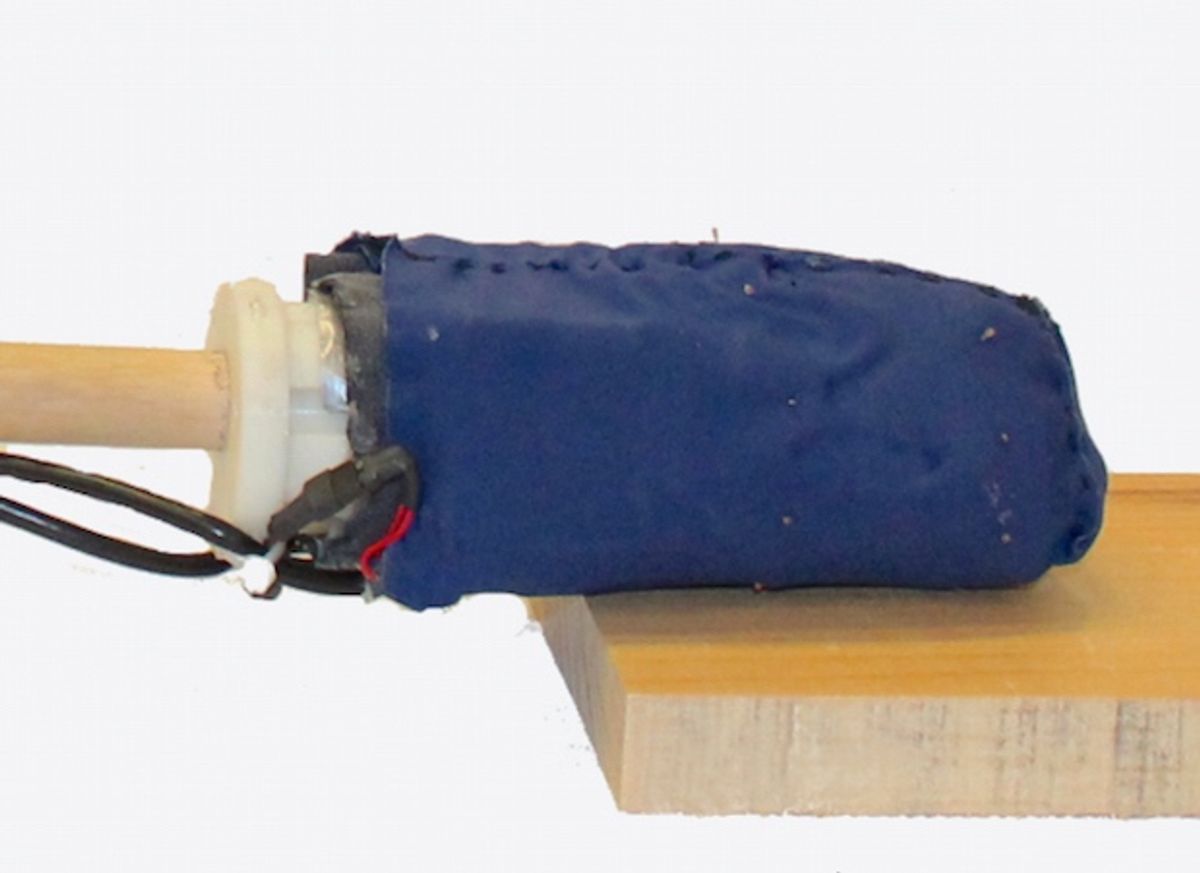

Okay, so it’s not much to look at, but the combination of force and active thermal sensing works significantly better than force sensing alone. The fabric is made of an array of “taxels,” each of which consists of resistive fabric sandwiched between two layers of conductive fabric, two passive thermistors, and two active thermistors placed on top of a carbon fiber resistive heating strip. Using all three of these sensing modalities to validate each other, the researchers were able to identify wood and aluminum by touch up to 96 percent of the time while pressing on the material, or 84 percent of the time with a sliding touch.

We should mention that this isn’t the first active thermal sensor—the BioTac sensor from SynTouch also incorporates a heater, although it’s only a fingertip, as opposed to the whole-arm fabric-based tactile skin that Georgia Tech is working on.

Tapo Bhattacharjee told us that there are plenty of different potential applications for a sensor like this. “A robot could use this skin for manipulation in cluttered or human environments. Knowing the haptic properties of the objects that a robot touches could help in devising intelligent manipulation strategies, [for example] a robot could push a soft object more than say a hard object. Or, if the robot knows it is touching a human, it can be more conservative in terms of applied forces.”

“Force and Thermal Sensing With a Fabric-Based Skin,” by Joshua Wade, Tapomayukh Bhattacharjee, and Charles C. Kemp from Georgia Tech, was presented at the Workshop on Multimodal Sensor-Based Robot Control for HRI and Soft Manipulation at IROS 2016 in Seoul, South Korea.

Evan Ackerman is a senior editor at IEEE Spectrum. Since 2007, he has written over 6,000 articles on robotics and technology. He has a degree in Martian geology and is excellent at playing bagpipes.