Most of the discussion around robots and ethics lately has been about whether autonomous cars will decide to run over the nearest kitten or a slightly farther away basket full of puppies. Or something like that. Whether or not robots can make ethical decisions when presented with novel situations is something that lots and lots of people are still working on, but it’s much easier for robots to be ethical in situations where the rules are a little bit clearer, and also when there is very little chance of running over cute animals.

At ICRA last month, researchers at Georgia Tech presented a paper on “an intervening ethical governor for a robot mediator in patient-caregiver relationship.” The idea is that robots will become part of our daily lives, and they are much, much better than humans at paying close and careful attention to things, without getting distracted or bored, forever. So robots with an understanding of ethical issues would be able to observe interactions between patients and caregivers, and intervene when they notice that something’s not going the way it should. This is important, and we need it.

In the United States, there are about a million people living with Parkinson’s disease. Robotic systems like exoskeletons and robot companions are starting to help people with physical rehabilitation and emotional support, but it’s going to be a while before we have robots that are capable of giving patients with Parkinson’s all the help that they need. In the meantime, patients rely heavily on human caregivers, which can be challenging for both parties at times. Parkinson’s is particularly tricky for human-human interactions: declining muscle control means that patients frequently have trouble conveying emotion through facial expressions, which can lead to misunderstandings or worse.

To test whether a robot mediator could help in such cases, the Georgia Tech researchers (Jaeeun Shim, Ronald Arkin, and Michael Pettinati) developed an intervening ethical governor (IEG). It is basically a set of algorithms that encodes specific ethical rules and determines what to do in different situations. In this case, the IEG uses indicators like voice volume and face tracking to evaluate whether a “human’s dignity becomes threatened due to other’s inappropriate behavior” in a patient-caregiver interaction. If that happens, the IEG specifies how and when the robot should intervene.
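The paper’s actual rules aren’t reproduced here, but the general shape of a rule-based governor like this can be sketched in a few lines of Python. Everything in the sketch is hypothetical: the `Observation` fields, the two rules, the thresholds, and the spoken lines are illustrative assumptions, not the researchers’ IEG. It only shows the idea of turning sensor indicators (voice volume, face tracking) into a decision about whether and how to intervene.

```python
from dataclasses import dataclass
from typing import Optional

# HYPOTHETICAL thresholds, chosen for illustration only (not from the paper).
RAISED_VOICE_DB = 70.0   # caregiver voice level treated as "raised"
MAX_AWAY_SECONDS = 10.0  # how long the patient may be out of the camera's view


@dataclass
class Observation:
    """Hypothetical per-moment summary of what the robot senses."""
    caregiver_volume_db: float   # e.g. estimated from the microphone array
    patient_face_visible: bool   # e.g. from face tracking on the camera feed
    seconds_face_missing: float  # time since the patient's face was last seen


@dataclass
class Intervention:
    rule: str       # which encoded rule fired
    utterance: str  # what the robot would say via speech synthesis


def evaluate(obs: Observation) -> Optional[Intervention]:
    """Check each encoded rule in priority order and return the first that fires."""
    # Rule 1 (assumed highest priority, safety): patient has been gone too long.
    if not obs.patient_face_visible and obs.seconds_face_missing > MAX_AWAY_SECONDS:
        return Intervention("patient_absent", "Is everything all right? How can I help?")
    # Rule 2 (dignity): the caregiver's voice level suggests a heated exchange.
    if obs.caregiver_volume_db > RAISED_VOICE_DB:
        return Intervention("raised_voice", "Let's calm down.")
    # No rule fired, so the robot stays quiet.
    return None
```

For example, `evaluate(Observation(75.0, True, 0.0))` returns an `Intervention` with `rule="raised_voice"`, while a calm exchange with the patient in view returns `None`. Checking rules in a fixed priority order is one simple way to make the robot pick a single response when several rules fire at once; the real system’s decision logic may well differ.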

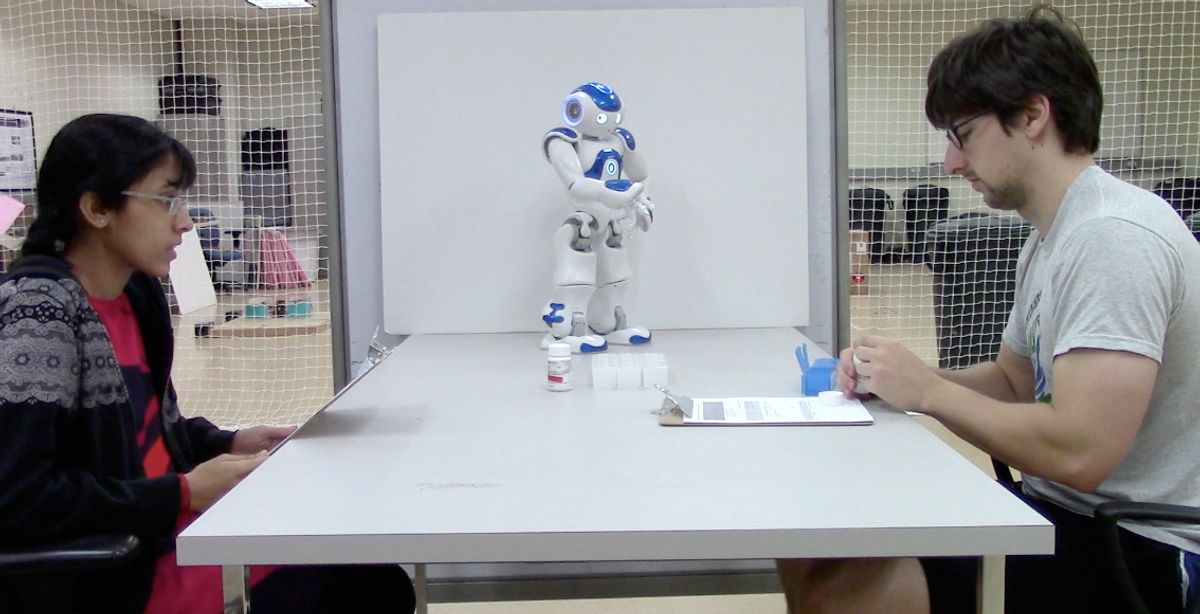

To embody their IEG, the researchers used a Nao humanoid, which has good sensing capabilities (a microphone array and camera) and can do speech synthesis (for the intervention bit). They then staged simulated, scripted interactions between two grad students to see how the robot would react.

In the final part of the project, the researchers recruited a group of people (older adults who could potentially be using the system) to watch these interactions and describe their reactions to them. It was a small number of participants (nine, with an average age of 71), but at this stage the IEG is still a proof-of-concept, so the researchers were mostly interested in qualitative feedback. Based on the responses from the study participants, the researchers were able to highlight some important takeaways, like:

Safety is most important

“I think anything to protect the patient is a good thing.”

“That’s a high value. That’s appropriate there, because it gives real information, not just commanding.”

The robot should not command or judge

“I think that [commanding] puts the robot in the spot of being in a judgment … I think it should be more asking such as ‘how can I help you?’ … But the robot was judging the patient. I don’t think that’s why we would want the robot.”

“He [the patient] should not be criticized for leaving or forgetting to do something by the robot. The caregiver should be more in that position.”

“If the robot stood there and told me to ‘please calm down,’ I’d smack him.”

Ah yes, it wouldn’t be a social robotics study if it didn’t end with someone wanting to smack a robot. The researchers, to their credit, are taking this feedback to heart and working with experts to tweak the language a bit, for example by changing “please calm down” to “let’s calm down,” which is a bit less accusatory. They’re also planning to improve the system by incorporating physiological data to better detect patients’ and caregivers’ emotional states, which could make the robot’s interventions more accurate.

We should stress that there’s no way a robot can replace empathetic interactions between two people, and that’s not what this project is about. Robots, or AI systems in general, can potentially be effective mediators, making sure that caregivers and patients act ethically and respectfully towards each other, helping to improve relationships rather than replace them.

“An Intervening Ethical Governor for a Robot Mediator in Patient-Caregiver Relationship: Implementation and Evaluation,” by Jaeeun Shim, Ronald Arkin, and Michael Pettinati from Georgia Tech, was presented at ICRA 2017.

Evan Ackerman is a senior editor at IEEE Spectrum. Since 2007, he has written over 6,000 articles on robotics and technology. He has a degree in Martian geology and is excellent at playing bagpipes.