This article is part of our exclusive IEEE Journal Watch series in partnership with IEEE Xplore.

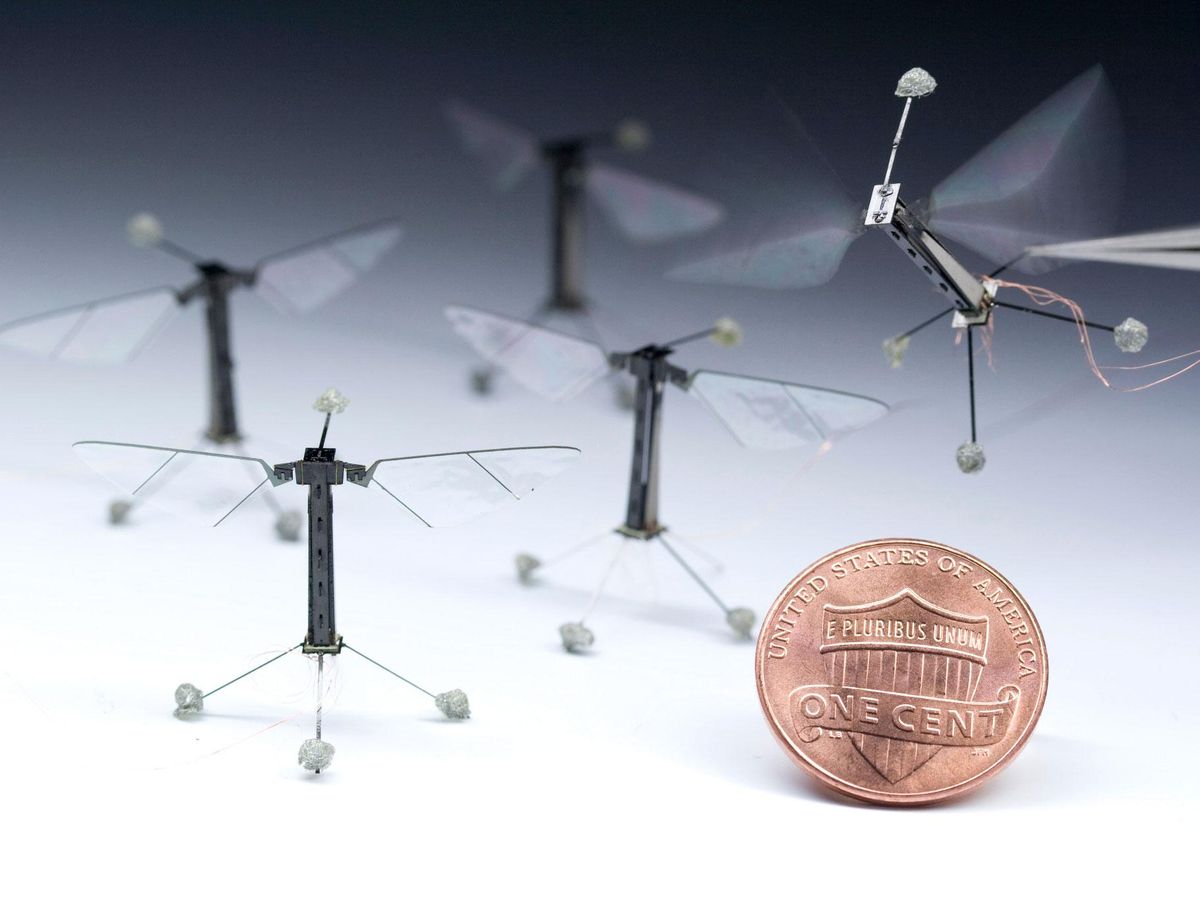

Since becoming the first insect-inspired robot to take flight, Harvard University’s famous little robotic bee, dubbed RoboBee, has achieved novel perching, swimming, and sensing capabilities, among others.

More recently, RoboBee has hit another milestone: precise control over its heading and lateral movement, which makes it much more adept at maneuvering. As a result, RoboBee can hover and pivot in midair more effectively, behaving more like its biological inspiration, the bumblebee. The advance is described in a study published this past December in IEEE Robotics and Automation Letters.

Greater control over flight will be useful in many scenarios that demand precision. For instance, RoboBee could be well suited to exploring sensitive or dangerous areas, or to missions in which a large group of flying robots must navigate together as a swarm.

“One particularly exciting area is in assisted agriculture, as we look ahead towards applying these vehicles in tasks such as pollination, attempting to achieve the feats of biological insects and birds,” explains Rebecca McGill, a Ph.D. candidate in materials science and mechanical engineering at Harvard, who helped codesign the new RoboBee flight model.

But achieving precise control with a flapping-wing robot has proven challenging, and for good reason. Helicopters, drones, and fixed-wing aircraft can tilt their wings and blades, and use rudders and tail rotors, to change their heading and lateral movement. Flapping-wing robots, on the other hand, must flap their wings at different speeds on the upstroke and the downstroke in order to rotate about their vertical axis while upright in midair. The twisting moment that produces this rotation is called yaw torque.

Flapping-wing micro-aerial vehicles (FWMAVs) such as RoboBee must precisely balance the upstroke and downstroke speeds within a single, rapid flapping cycle to generate the yaw torque needed to turn the body. “This makes yaw torque difficult to achieve in FWMAVs,” explains McGill.
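The study's own model is not reproduced here, but a common simplified way to see why speed asymmetry matters is a quasi-steady drag model, in which the drag on a wing scales with its velocity squared and opposes the motion. The sketch below is that textbook idealization, not the RoboBee team's flight model. It assumes a hypothetical "split-cycle" cosine stroke (the function names `split_cycle_velocity`, `mean_drag_proxy`, and `mean_drag_proxy_exact` are illustrative) whose two half-strokes sweep the same angle in different times, and it uses v·|v| as a drag proxy (the sign convention is arbitrary). With a symmetric stroke the proxy averages to zero over a cycle; with an asymmetric one, the faster half-stroke dominates and a net drag remains even though the wing returns to where it started. In a two-winged robot, giving the left and right wings opposite asymmetries would make those net forces point in opposite directions, producing a turning moment.

```python
import math


def split_cycle_velocity(t, period, split, amplitude):
    """Stroke angular velocity of a hypothetical split-cycle cosine waveform.

    The stroke angle sweeps from +amplitude to -amplitude in split * period
    seconds, then back to +amplitude in (1 - split) * period seconds.
    split = 0.5 gives an ordinary symmetric stroke.
    """
    if not 0.0 < split < 1.0:
        raise ValueError("split must be strictly between 0 and 1")
    t = t % period
    t1 = split * period   # duration of the first half-stroke
    t2 = period - t1      # duration of the second half-stroke
    if t < t1:
        # angle = A*cos(pi*t/t1), so velocity = -A*pi/t1 * sin(pi*t/t1)
        return -amplitude * math.pi / t1 * math.sin(math.pi * t / t1)
    tau = t - t1
    # angle = -A*cos(pi*tau/t2), so velocity = A*pi/t2 * sin(pi*tau/t2)
    return amplitude * math.pi / t2 * math.sin(math.pi * tau / t2)


def mean_drag_proxy(split, period=1.0, amplitude=1.0, samples=100_000):
    """Cycle-averaged v*|v| (a quasi-steady drag proxy), via the midpoint rule."""
    dt = period / samples
    total = 0.0
    for i in range(samples):
        v = split_cycle_velocity((i + 0.5) * dt, period, split, amplitude)
        total += v * abs(v) * dt
    return total / period


def mean_drag_proxy_exact(split, period=1.0, amplitude=1.0):
    """Closed form of the same average: (A^2 pi^2 / 2T) * (1/t2 - 1/t1)."""
    t1 = split * period
    t2 = period - t1
    return amplitude**2 * math.pi**2 / (2 * period) * (1 / t2 - 1 / t1)


if __name__ == "__main__":
    for s in (0.5, 0.45, 0.4):
        print(f"split={s}: numeric={mean_drag_proxy(s):+.4f}, "
              f"exact={mean_drag_proxy_exact(s):+.4f}")
```

Running the script should show the numeric and closed-form averages agreeing: zero for the symmetric stroke, and increasingly negative as the first half-stroke is made faster.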

To address this issue, McGill and her team developed a new model that analytically maps how different flapping signals affect the forces and torques on the robot, so that the combination of signals needed to produce a desired yaw torque (along with thrust, roll torque, and pitch torque) can be determined in real time.

“The model improves our understanding of how much yaw torque is produced by different flapping signals, giving better, controllable yaw performance in flight,” explains McGill.

In their study, the researchers tested the new model in 40 different flight scenarios with RoboBee, varying the control inputs and observing the thrust and torque response in each flight. Using the new model, RoboBee was able to fly in a circle while keeping its gaze fixed on the center point, mimicking a scenario in which the vehicle keeps a camera trained on a target while circling it.
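How the team generated the circling trajectory is not described in the article, so the following is only a generic geometric sketch of what such a maneuver demands, with hypothetical function names (`orbit_setpoints`, `yaw_rates`, `wrap_angle`). If a vehicle orbits a target at a constant angular rate while keeping its heading aimed at the center, its heading must rotate at exactly that same rate, so the maneuver requires continuous, controlled yaw rather than translation alone. The code generates position and heading setpoints along the circle and checks the implied yaw rate by finite differences.

```python
import math
from typing import List, Tuple

Setpoint = Tuple[float, float, float, float]  # (t, x, y, yaw)


def wrap_angle(a: float) -> float:
    """Wrap an angle in radians to the interval (-pi, pi]."""
    a = math.fmod(a + math.pi, 2 * math.pi)
    if a <= 0.0:
        a += 2 * math.pi
    return a - math.pi


def orbit_setpoints(cx: float, cy: float, radius: float, omega: float,
                    duration: float, dt: float) -> List[Setpoint]:
    """Hypothetical setpoint generator for a circular orbit around (cx, cy)
    at angular rate omega (rad/s), with the heading always aimed at the center."""
    setpoints = []
    steps = int(round(duration / dt))
    for k in range(steps + 1):
        t = k * dt
        theta = omega * t                      # angular position on the circle
        x = cx + radius * math.cos(theta)
        y = cy + radius * math.sin(theta)
        yaw = math.atan2(cy - y, cx - x)       # aim the "camera" at the center
        setpoints.append((t, x, y, yaw))
    return setpoints


def yaw_rates(setpoints: List[Setpoint]) -> List[float]:
    """Finite-difference yaw rate between consecutive setpoints (rad/s)."""
    rates = []
    for (t0, _, _, yaw0), (t1, _, _, yaw1) in zip(setpoints, setpoints[1:]):
        # wrap the difference so crossing the +/-pi seam is not read as a jump
        rates.append(wrap_angle(yaw1 - yaw0) / (t1 - t0))
    return rates


if __name__ == "__main__":
    sp = orbit_setpoints(cx=0.0, cy=0.0, radius=0.5, omega=0.8,
                         duration=10.0, dt=0.01)
    rates = yaw_rates(sp)
    print(f"{len(sp)} setpoints, yaw rate min/max: "
          f"{min(rates):.4f} / {max(rates):.4f} rad/s")
```

With these arbitrary example values (a 0.5 m radius and a 0.8 rad/s orbit), the computed yaw rate should match the orbit rate at every step.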

“Our experimental results revealed that yaw torque filtering can be mitigated sufficiently…to achieve full control authority in flight,” says Nak-seung Patrick Hyun, a postdoctoral fellow at Harvard who was also involved in the study. “This opens the door to new maneuvers and greater stability, while also allowing utility for onboard sensors.”

McGill and Hyun note that advances like this will not only help robots in the field with tasks such as pollination and emergency response, but also yield new insights into biology. “Flapping-wing robots are exciting because they give us the chance to explore and learn about insect and bird-flight mechanisms through imitation, creating a ‘two-way’ path of discovery towards both robotics and biology,” explains Hyun, noting that the team is interested in studying aggressive aerial maneuvers through their new RoboBee flight model.

Michelle Hampson is a freelance writer based in Halifax. She frequently contributes to Spectrum's Journal Watch coverage, which highlights newsworthy studies published in IEEE journals.