For conventional computers, benchmarks can represent a rite of passage of sorts into a new era of computing. As artificial intelligence and machine learning become more and more ubiquitous, for instance, AI and ML benchmarks help everyone understand and measure precisely how well one neural net performs compared to other systems and to reference architectures. Not surprising, then, that the emerging field of quantum-computer benchmarking will be helping test and improve next-generation quantum processors, researchers say.

A quantum computer with great enough complexity—for instance, enough components known as quantum bits or “qubits”—could theoretically achieve a quantum advantage where it can find the answers to problems no classical computer could ever solve. In principle, a quantum computer with 300 qubits fully devoted to computing (not error correction) could perform more calculations in an instant than there are atoms in the visible universe.
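One common way to read the 300-qubit comparison is that describing n qubits takes 2^n amplitudes. The quick check below assumes the frequently quoted rough estimate of about 10^80 atoms in the observable universe, a figure that does not come from the article itself.

```python
# Rough order-of-magnitude check.
# ATOMS_ESTIMATE is an assumed, commonly quoted figure, not a value from the article.
N_QUBITS = 300
ATOMS_ESTIMATE = 10 ** 80

# Number of amplitudes needed to describe the state of 300 qubits.
basis_states = 2 ** N_QUBITS

print(f"2^{N_QUBITS} has {len(str(basis_states))} decimal digits")
print("Exceeds the atom estimate:", basis_states > ATOMS_ESTIMATE)
```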

However, researchers at Sandia National Laboratories note that it is currently difficult to accurately predict a quantum processor’s capability, that is, the set of quantum programs it can run successfully. This is because the benchmarking programs now used to analyze these devices scale poorly to quantum computers with many qubits. Existing quantum benchmarks are also not flexible enough to give a detailed look at processor capabilities across many different potential applications, they say.

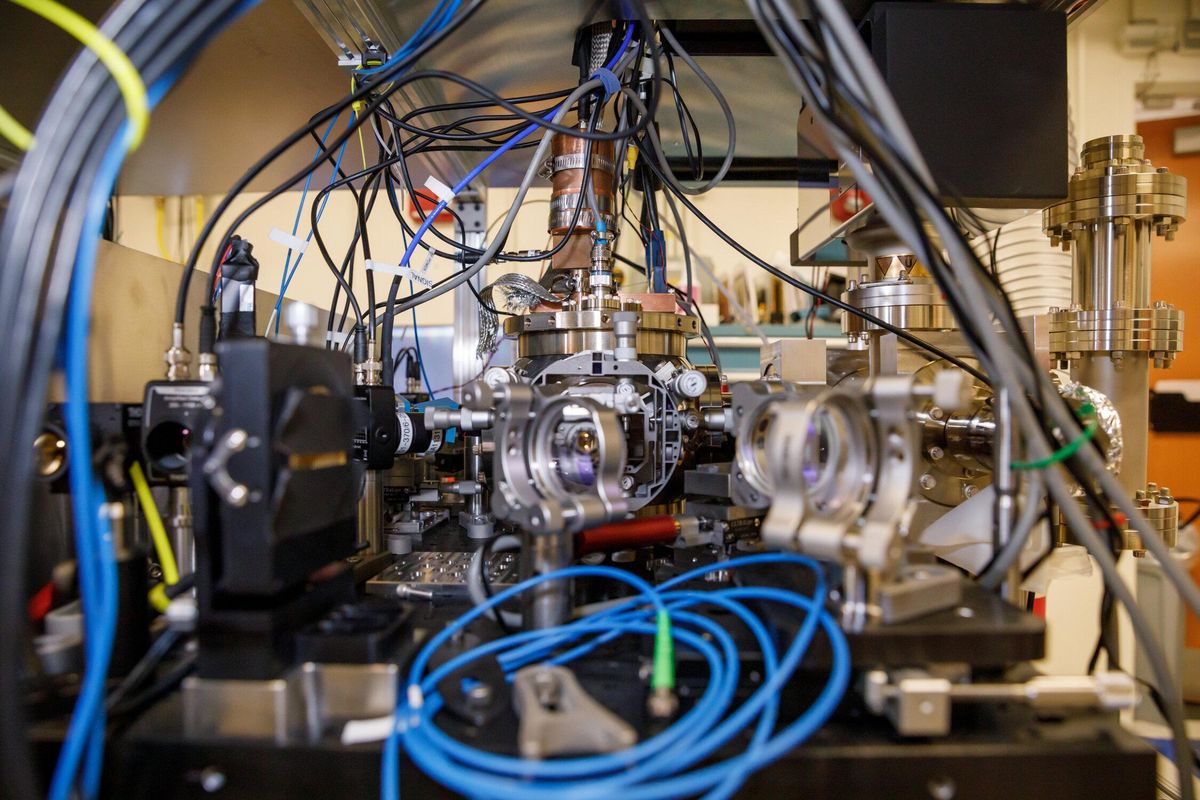

“It’s surprisingly difficult to benchmark state-of-the-art quantum computers, because most benchmarks rely on comparing the results of a quantum computation with the correct output, computed on a conventional computer,” says study lead author Tim Proctor, a physicist at Sandia National Laboratories’ Quantum Performance Laboratory in Albuquerque, New Mexico. “That conventional computation becomes utterly infeasible as the number of qubits increases, which was central to Google’s famous ‘quantum supremacy’ demonstration.”

In a new study, Proctor and his colleagues developed a novel technique for creating benchmarks for quantum computers that they call circuit mirroring. This method transforms any quantum program into an ensemble of closely related benchmark programs dubbed “mirror circuits,” each of which performs a set of calculations and then reverses it.

The mirror circuits are each at least as difficult to execute as the quantum program they are based on. However, unlike many quantum programs, mirror circuits have simple, easy-to-predict outcomes. As such, mirror circuits provide a way to verify a quantum processor’s capabilities using benchmarks similar to quantum programs that the quantum computers may actually run, the researchers say.
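The idea of running a computation and then undoing it can be illustrated with a toy example. The sketch below is not the researchers' implementation: the small state-vector simulator, the gate set, and every helper name are assumptions made for illustration, and it leaves out any additional randomization. It builds a random circuit, appends the inverse of each gate in reverse order, and checks that an error-free run ends back in the all-zeros state.

```python
import math
import random

# Toy state-vector simulator; gate names and helpers are illustrative assumptions.
SQ2 = 1 / math.sqrt(2)
ONE_QUBIT = {
    "H": ((SQ2, SQ2), (SQ2, -SQ2)),
    "X": ((0, 1), (1, 0)),
    "S": ((1, 0), (0, 1j)),
    "SDG": ((1, 0), (0, -1j)),
}
INVERSE = {"H": "H", "X": "X", "S": "SDG", "SDG": "S", "CNOT": "CNOT"}


def apply_gate(state, gate, qubits):
    """Return the state after applying one gate to the given qubits."""
    new = list(state)
    if gate == "CNOT":
        control, target = qubits
        for i in range(len(state)):
            # When the control bit is 1, swap the two amplitudes that
            # differ only in the target bit.
            if (i >> control) & 1 and not (i >> target) & 1:
                j = i | (1 << target)
                new[i], new[j] = state[j], state[i]
        return new
    (a, b), (c, d) = ONE_QUBIT[gate]
    q = qubits[0]
    for i in range(len(state)):
        if not (i >> q) & 1:
            j = i | (1 << q)
            new[i] = a * state[i] + b * state[j]
            new[j] = c * state[i] + d * state[j]
    return new


def mirror(circuit):
    """Append the inverse of every gate, in reverse order."""
    return circuit + [(INVERSE[g], qs) for g, qs in reversed(circuit)]


def run(circuit, n_qubits):
    """Simulate the circuit starting from the all-zeros state."""
    state = [0j] * (2 ** n_qubits)
    state[0] = 1 + 0j
    for gate, qubits in circuit:
        state = apply_gate(state, gate, qubits)
    return state


def random_circuit(n_qubits, depth, rng):
    """Draw a random sequence of one- and two-qubit gates."""
    circuit = []
    for _ in range(depth):
        if n_qubits > 1 and rng.random() < 0.3:
            circuit.append(("CNOT", tuple(rng.sample(range(n_qubits), 2))))
        else:
            circuit.append((rng.choice(["H", "X", "S"]), (rng.randrange(n_qubits),)))
    return circuit


rng = random.Random(0)
base = random_circuit(n_qubits=3, depth=20, rng=rng)
final = run(mirror(base), n_qubits=3)
p_all_zero = abs(final[0]) ** 2
print(f"P(000) after the mirror circuit: {p_all_zero:.6f}")
```

On real hardware, the gap between this ideal probability and the measured frequency of the expected outcome is what such a benchmark would report.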

“Quantum computers are complicated, and quantifying their performance is complicated too. Our methods can be used to design benchmarks for studying all kinds of aspects of a quantum computer’s performance,” Proctor says. “Ultimately, we think that benchmarks built using our method could be used to design a set of comprehensive tests for quantum computers. This would make it possible to accurately compare quantum computers, and to find out which hardware is best for which task.”

Using circuit mirroring, the scientists first designed two families of benchmark programs—one that ran quantum processors through randomized sequences of operations, the other with highly structured procedures. They next executed these benchmarks on 12 publicly accessible quantum computers from IBM and Rigetti Computing to map out their capabilities.

Quantum benchmarking currently relies mostly on randomized programs. However, Proctor notes such randomized benchmarks may not serve as well as tests of the more structured programs that quantum computers employ to implement quantum algorithms.

Indeed, Proctor and his colleagues found that how well quantum computers executed randomized mirror circuits did not predict how well they did with more orderly mirror circuits. The performance of some quantum processors depended heavily on the level of mirror circuit structure, while others showed almost no sign of such a link.

“A particularly nice feature of our benchmarks is they’re designed to provide a lot of detail—they tell us about a quantum computer’s performance on different tasks,” Proctor says. “This contrasts to most existing methods that, by design, describe a quantum computer’s performance with one number.”

One potential flaw of benchmarks that employ the reversal technique used in mirror circuits is that they may fail to detect many key errors. “We ensure that our benchmarks are sensitive to all errors by inserting a random element in between the forward and reverse circuits, the two parts that comprise the bulk of a mirror circuit,” Proctor says. “In the paper, we prove that our benchmarks are sensitive to all errors.” The scientists detailed their findings December 20 in the journal Nature Physics.

Circuit mirroring faces a number of competitors in the arena of quantum benchmarking. For example, theoretical physicist Joseph Emerson at the University of Waterloo, in Canada, notes that cycle benchmarking, a method he and his colleagues developed, has proved an effective benchmarking strategy on the quantum computers at the Advanced Quantum Testbed at Lawrence Berkeley National Laboratory.

“Cycle benchmarking was shown to be preferable in the context of understanding application-specific performance—it is a scalable strategy for benchmarking the performance of the quantum hardware that is tailored to each specific algorithm or application of interest,” says Emerson, head of quantum strategy at Keysight Technologies.

Proctor notes that whereas cycle benchmarking measures error rates for sets of quantum gates—the quantum-computing version of the logic gates that conventional computers use to perform computations—circuit mirroring measures a quantum computer’s performance on complete computations. As such, he suggests they are fundamentally different tools.

Circuit mirroring can theoretically be combined with many other benchmarks, such as quantum volume, to create scalable versions of these yardsticks, “which we think will be really exciting to the community,” Proctor says. “We certainly haven’t thought of all the interesting things you can do with our technique, and we’re excited to see what the research community comes up with.”

Charles Q. Choi is a science reporter who contributes regularly to IEEE Spectrum. He has written for Scientific American, The New York Times, Wired, and Science, among others.