A large universal quantum computer is still an engineering dream, but machines designed to leverage quantum effects to solve specific classes of problems—such as D-Wave Systems’ computers—are alive and well. But an unlikely rival could challenge these specialized machines: computers built from purposely noisy parts.

This week at the IEEE International Electron Devices Meeting (IEDM 2022), engineers unveiled several advances that bring a large-scale probabilistic computer closer to reality than ever before.

Quantum computers are unrivaled for any algorithm that relies on the complex amplitudes of quantum mechanics. “But for problems where the numbers are positive, sometimes called stochastic problems, probabilistic computing could be quite competitive,” says Supriyo Datta, professor of electrical and computer engineering at Purdue University and one of the pioneers of probabilistic computing.

Stochastic problems include a whole slew of relevant practical challenges, like optimizing the path a taxi should take to pick up several passengers or finding the molecular structure of proteins to aid in drug discovery. What these problems have in common is that they benefit from some amount of noise, allowing the computer to widely explore a complex landscape of possibilities to find a good option.

Everyday computers can solve stochastic problems, but implementing probabilistic noise in software quickly gets very costly. “Transistors are deterministic, very precise objects,” says Kerem Camsari, assistant professor of electrical and computer engineering at the University of California, Santa Barbara and Datta’s collaborator. “In order for them to do probability, you have to use a lot of transistors to simulate indeterminism. But instead you could start from something that’s already very noisy and build probabilistic computers out of it.”

Back in 2017, Datta, Camsari, and colleagues first put forth the idea for hardware devices that embrace the noise. They termed the building blocks of these devices p-bits, the probabilistic analogue of qubits. Instead of encoding 0 or 1 deterministically, each p-bit switches between the two randomly, spending about half its time in each.

A single p-bit is pure noise, not carrying any information. The power of probabilistic computers comes from coupling many p-bits together. Through this coupling, complex correlations in switching between 0s and 1s can build up, allowing the machine as a whole to efficiently solve optimization problems, at least in theory.
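To make the coupling idea concrete, here is a minimal Python sketch of two software p-bits. It assumes the update rule commonly used in the p-bit literature, in which a p-bit outputs 1 with probability (1 + tanh(I))/2 for an input signal I. The function names, the coupling values, and the two-p-bit setup are illustrative assumptions, not details of any hardware described in this article.

```python
import math
import random


def pbit_sample(input_signal, rng):
    """Return 1 with probability (1 + tanh(input_signal)) / 2, else 0."""
    return 1 if rng.uniform(-1.0, 1.0) < math.tanh(input_signal) else 0


def agreement_fraction(coupling, sweeps, seed=0):
    """Fraction of sweeps after which two mutually coupled p-bits agree.

    `coupling` is a hypothetical coupling strength; 0 means uncoupled.
    """
    rng = random.Random(seed)
    state = [0, 1]
    agree = 0
    for _ in range(sweeps):
        for i in (0, 1):
            neighbor = state[1 - i]
            # Map the neighbor's 0/1 state to -1/+1 before scaling it.
            state[i] = pbit_sample(coupling * (2 * neighbor - 1), rng)
        agree += state[0] == state[1]
    return agree / sweeps


if __name__ == "__main__":
    print("uncoupled:", agreement_fraction(0.0, 20000))
    print("coupled:  ", agreement_fraction(2.0, 20000))
```

With a coupling of 0, each p-bit behaves like an unbiased coin and the pair agrees about half the time. With a positive coupling, the agreement fraction rises toward 1, a two-p-bit instance of the correlations that larger networks exploit.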

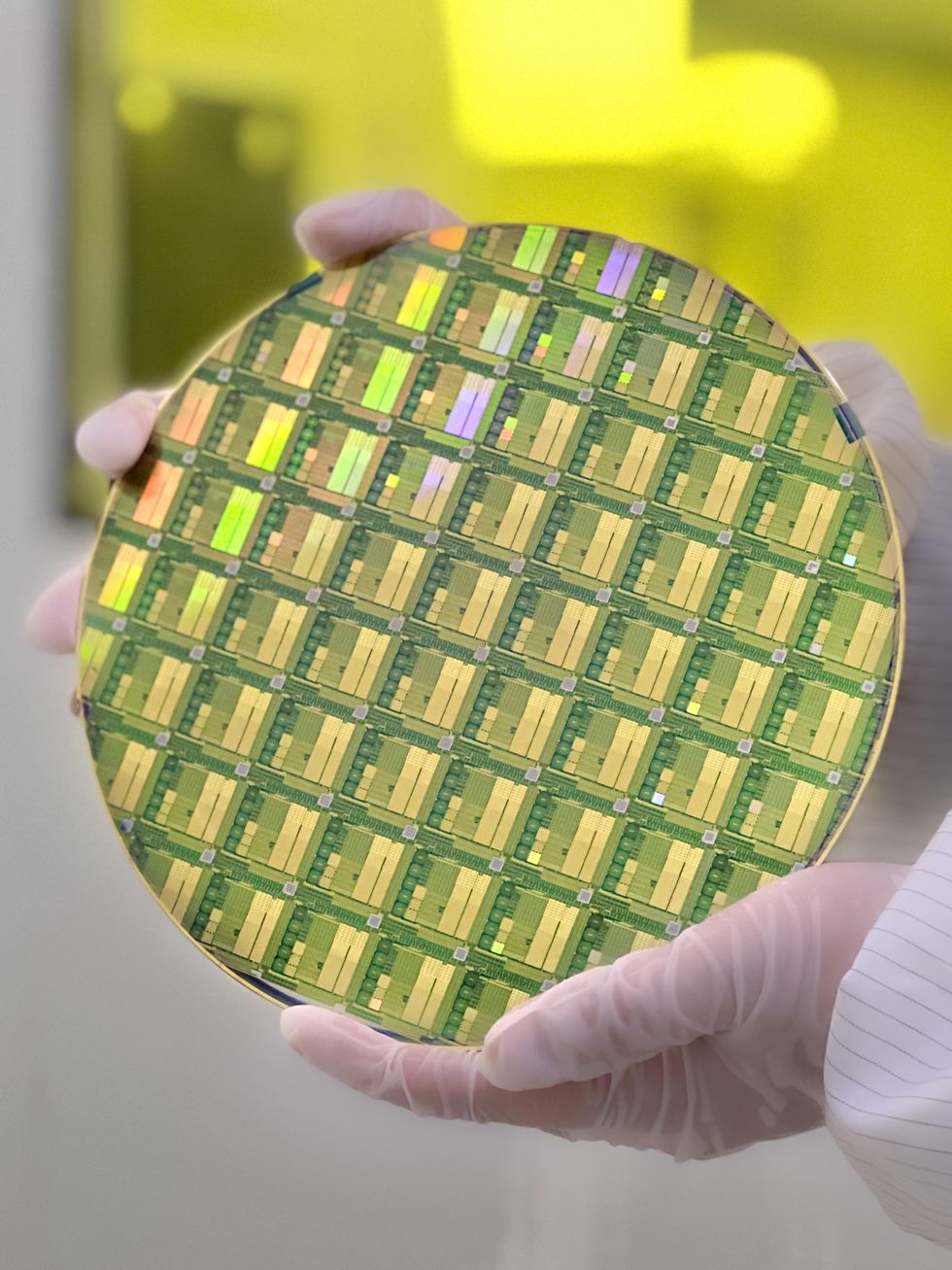

The first and most popular p-bit candidate was a small magnetic tunnel junction (MTJ). These simple memory devices become unstable when they are very small. And instability is exactly what a p-bit needs. “In the beginning, I would often tell my colleagues who are working in magnetic memory, ‘Could you give us your bad, bad devices?’” Datta recalls.

However, building large arrays of coupled p-bits out of MTJs proved challenging.

“We think there are two points to improve the p-bit computer,” says Lang Zeng, a professor in the School of Integrated Circuit Science and Engineering at Beihang University, in China. “The first one is that we need a really good single p-bit. Next, we need a way to build a really large array, and this large array needs to work like a CMOS chip.”

These difficulties are partially addressed by advances reported at this week’s conference.

One p-bit breeds many

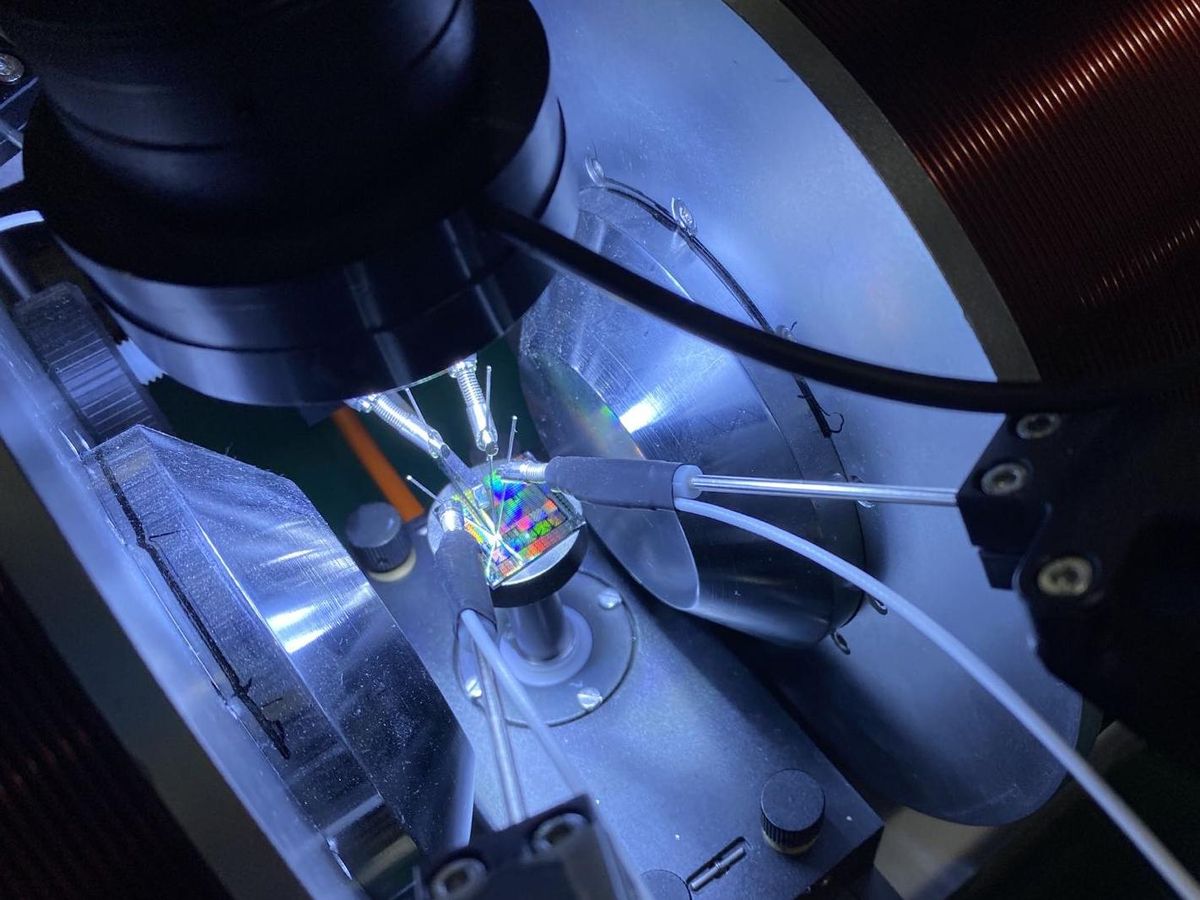

Coupling many p-bits together is tricky. To sidestep this issue, Camsari’s team at UC Santa Barbara, in collaboration with researchers at Tohoku University, in Japan, opted for a hybrid approach. They used a single noisy MTJ as a seed that drives many software p-bits on a field-programmable gate array (FPGA).

“What we thought was, if it is difficult to get randomness with traditional computers, why don’t we get these nanodevices and get the randomness for free from them, and then give it to the classical computer, and endow it with true randomness,” Camsari says.

With their hybrid hardware-software p-bits, the researchers used a quantumlike algorithm to find prime factors of integers up to 26 bits long. This demonstrates that they could solve a class of problems similar to those solved by D-Wave’s computers, potentially at a fraction of the energy cost.

“A detailed cost comparison is very difficult,” Camsari notes. “However, our proof of concept showed that a hybrid probabilistic computer could work at 20 watts with up to millions of p-bits.”

Faster p-bits work together

P-bits need to talk to each other to build up useful computers. Until now, the time it takes one MTJ to sync up with another’s flips between 0 and 1 has been a limitation, at several milliseconds. To avoid this issue, Zeng’s group at Beihang University used a novel mechanism that allowed them to shorten this time to several microseconds, with a path toward getting to the nanosecond level.

This technique uses the spin-orbit torque effect, which allows for fast switching of the junction layer in an MTJ. “We proposed this design in 2017,” Camsari recalls, “but we gave up because it was too difficult.” Zeng’s team was able to overcome these difficulties and manufacture spin-orbit-torque-compatible p-bits.

However, the device-to-device variation remained large. “We found that each device had its own character, just like human beings,” Zeng says. To quash this individuality, they propose a simplified coupling scheme that they call 1-bit quantization. In this scheme, a p-bit “source” still flips between 0 and 1, spending some fraction of its time in each. A target p-bit will flip to 1 if the source p-bit it is coupled to spends more than half of its time in the 1 state, and to 0 if the source spends more than half its time in 0. In other words, the coupling strength between the target and the source is quantized into 0 and 1.
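The thresholding rule just described can be sketched in a few lines of Python. This is only an illustration of the idea as stated here, not the Beihang team's circuit: the function names, the sample counts, and the choice to return 0 when the source sits at exactly one half are assumptions.

```python
import random


def source_stream(p_one, n_samples, rng):
    """Simulate a source p-bit that reads 1 with probability `p_one`."""
    return [1 if rng.random() < p_one else 0 for _ in range(n_samples)]


def one_bit_quantized_target(samples):
    """Return 1 if the source spent more than half its time in 1, else 0.

    A tie at exactly one half maps to 0 here; that choice is an assumption.
    """
    return 1 if sum(samples) / len(samples) > 0.5 else 0


if __name__ == "__main__":
    rng = random.Random(0)
    # Two hypothetical devices with different biases toward 1.
    for p_one in (0.7, 0.9):
        samples = source_stream(p_one, 1000, rng)
        print(p_one, one_bit_quantized_target(samples))
```

Because only the side of one half matters, two source devices with different biases toward 1 drive the target to the same state, which is how the scheme can mask device-to-device variation.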

Using fast coupled p-bits with 1-bit-quantization coupling, the team built a probabilistic computer and used it to factor numbers up to 945. The researchers say building significantly larger p-bit computers capable of solving more complex optimization problems should be straightforward.
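The article does not describe how factoring is mapped onto p-bits. One textbook way to cast factoring as an optimization problem is to search over the bits of two candidate factors p and q for a zero of the cost (N - pq)^2. The Python sketch below does this with ordinary software simulated annealing; the cost function, the temperature schedule, and all names are assumptions chosen for illustration, not the researchers' method.

```python
import math
import random


def cost(n, p, q):
    """Squared mismatch between n and the candidate product p * q."""
    return (n - p * q) ** 2


def anneal_factor(n, n_bits, steps=5000, seed=0):
    """Try to find (p, q) with p * q == n by noisy single-bit flips.

    Returns a pair of factors, or None if this run does not find one.
    """
    rng = random.Random(seed)
    state = [rng.getrandbits(n_bits) | 1, rng.getrandbits(n_bits) | 1]
    energy = cost(n, state[0], state[1])
    for step in range(steps):
        if energy == 0 and min(state) > 1:
            return tuple(state)
        # Hypothetical linear cooling schedule, floored at 1.
        temperature = float(n) ** 2 * (1.0 - step / steps) + 1.0
        trial = list(state)
        trial[rng.randrange(2)] ^= 1 << rng.randrange(n_bits)
        delta = cost(n, trial[0], trial[1]) - energy
        # Metropolis rule: always accept improvements, sometimes accept worse.
        if delta <= 0 or rng.random() < math.exp(-delta / temperature):
            state, energy = trial, energy + delta
    if energy == 0 and min(state) > 1:
        return tuple(state)
    return None


if __name__ == "__main__":
    print(anneal_factor(945, n_bits=6))
```

A single run is not guaranteed to succeed and may return None, in which case it can be restarted with a different seed. The random acceptance of worse moves is the software stand-in for the noise that p-bit hardware supplies natively.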

Noisy flash memory for the win

Instead of magnetic tunnel junctions, a collaboration between Georgia Tech, Intel, and the Korea Advanced Institute of Science and Technology implemented a probabilistic computer inside flash memory.

This approach used the intrinsic temporal noise of FinFETs in a type of flash memory as an analog source of randomness. “Usually really large noise is a bad thing for memory devices,” says Anni Lu, a graduate researcher at Georgia Tech. “But for us, we want to utilize large noise.”

Lu and her collaborators applied their probabilistic FinFET computer to the traveling salesman problem, a classic optimization problem that seeks the shortest route for a salesman visiting every city on a given map. They used a quantum-inspired optimization protocol similar to that of Camsari’s team.

To speed up the calculation, they clustered cities into groups and optimized the salesman’s commute between groups, and then within each group independently. With this trick, as well as their novel hardware implementation, they were able to solve the problem for 150 cities using 1/500 as much energy as any previous technique.
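The divide-and-conquer structure of that trick can be sketched in Python. This is a rough illustration only: it groups cities by grid cell and uses a greedy nearest-neighbor heuristic in place of the team's probabilistic optimizer, and the function names, the grid clustering, and the `cell_size` parameter are all assumptions. It also assumes at least one city.

```python
import math
import random


def nearest_neighbor_order(points, start=0):
    """Greedy visiting order over indices into `points`."""
    unvisited = set(range(len(points)))
    unvisited.remove(start)
    order = [start]
    while unvisited:
        last = points[order[-1]]
        nxt = min(unvisited, key=lambda i: math.dist(last, points[i]))
        order.append(nxt)
        unvisited.remove(nxt)
    return order


def clustered_tour(cities, cell_size):
    """Order the groups first, then the cities inside each group."""
    # Step 1: group cities by the grid cell they fall in.
    clusters = {}
    for idx, (x, y) in enumerate(cities):
        key = (int(x // cell_size), int(y // cell_size))
        clusters.setdefault(key, []).append(idx)
    groups = list(clusters.values())
    # Step 2: choose a visiting order for the groups via their centroids.
    centroids = [
        (sum(cities[i][0] for i in g) / len(g),
         sum(cities[i][1] for i in g) / len(g))
        for g in groups
    ]
    tour = []
    for g_idx in nearest_neighbor_order(centroids):
        # Step 3: order the cities within each group independently.
        members = groups[g_idx]
        local = nearest_neighbor_order([cities[i] for i in members])
        tour.extend(members[k] for k in local)
    return tour


def tour_length(cities, tour):
    """Length of the closed tour that returns to its starting city."""
    return sum(
        math.dist(cities[tour[i]], cities[tour[(i + 1) % len(tour)]])
        for i in range(len(tour))
    )


if __name__ == "__main__":
    rng = random.Random(0)
    cities = [(rng.uniform(0, 100), rng.uniform(0, 100)) for _ in range(150)]
    tour = clustered_tour(cities, cell_size=25)
    print(len(tour), round(tour_length(cities, tour), 1))
```

Splitting the map this way replaces one large search with several small ones, at the price of a tour that is not guaranteed to be the shortest possible.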

Beyond optimization problems, engineers believe probabilistic computers may prove useful for machine learning. Camsari’s team at UC Santa Barbara is also planning to explore deep-learning algorithms with p-bits. Reinforcement learning, for example, requires randomness for the “learner” to explore the environment, and Lu and her collaborators at Georgia Tech are working on implementing a probabilistic solution.

Dina Genkina is an associate editor at IEEE Spectrum focused on computing and hardware. She holds a PhD in atomic physics and lives in Brooklyn.