We’ve all seen this moment in the movies—on board, say, a submarine or a spaceship, the chief engineer will suddenly cock their ear to listen to the background hum and say “something’s wrong.” Bosch is hoping to teach a computer how to do that trick in real life, and is going all the way to the International Space Station to test its technology.

Considering the amount of data that’s communicated through non-speech sound, humans do a remarkably poor job of leveraging sound information. We’re very good at reacting to sounds (especially new or loud sounds) over relatively short timescales, but beyond that, our brains are great at just classifying most ongoing sounds as “background” and ignoring them. Computers, which have the patience we generally lack, seem like they’d be much better at this, but most developers have focused on discrete sound events (like smart home devices detecting smoke alarms or breaking glass) rather than longer-term sound patterns.

Why should those of us who aren’t movie characters care about how patterns of sound change over time? The simple reason is that our everyday lives are full of machines that both make a lot of noise and tend to break expensively from time to time. Right now, I’m listening to my washing machine, which makes some weird noises. I don’t have a very good idea of whether those weird noises are normal weird noises, and more to the point, I have an even worse idea of whether it was making the same weird noises the last time I ran it. Knowing whether a machine is making weirder noises than it used to could clue me in to an emerging problem, one that I could solve through cheap preventative maintenance rather than an expensive repair later on.

Bosch, the German company that almost certainly makes a significant percentage of the parts in your car as well as appliances, power tools, industrial systems, and a whole bunch of other stuff, is trying to figure out how it can use deep learning to identify and track the noises that machines make over time. The idea is to identify subtle changes in sound that warn of problems before they happen. And the astronauts floating around in the orbiting bubble of life that is the ISS are one group of people very interested in getting advance warning of problems.

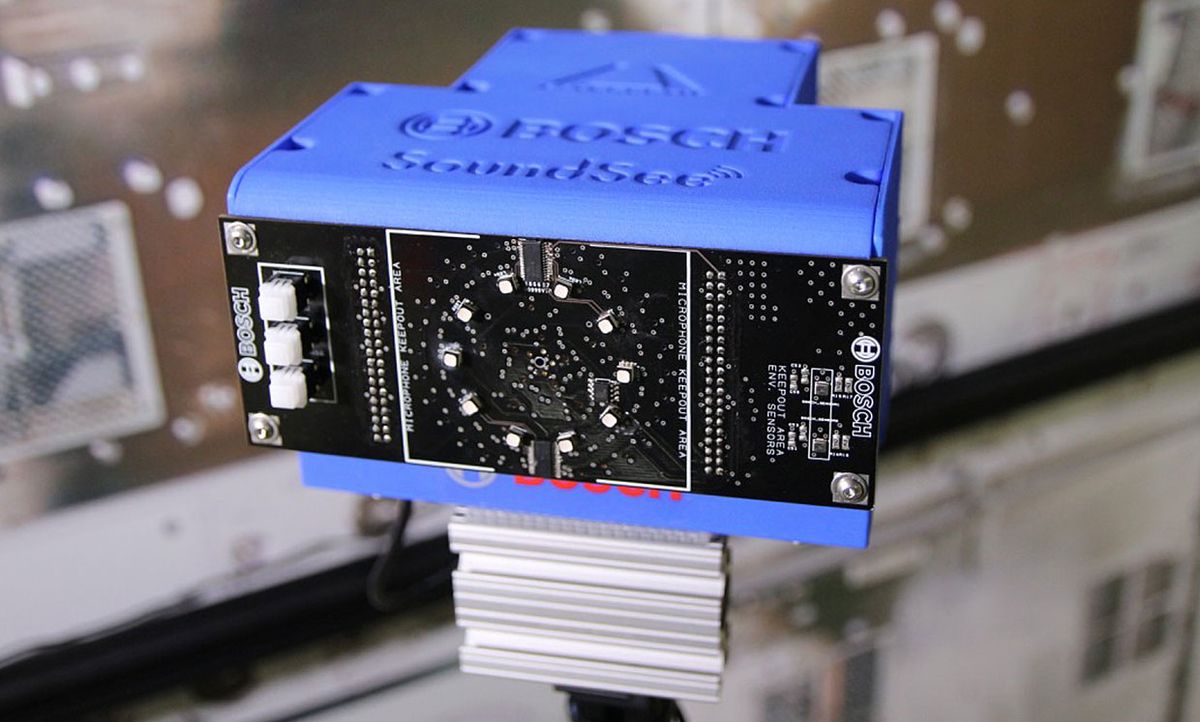

The SoundSee directional microphone array is Bosch’s payload for NASA’s Astrobee robot, which we’ve written about extensively. Astrobee had its first autonomous flight aboard the ISS just last month, and after the robot finishes getting checked out and calibrated, SoundSee will take up residence in one of Astrobee’s modular payload bays. Once installed, it’ll go on a variety of missions, both passively recording audio as Astrobee goes about its business and recording targeted audio of specific systems.

One of SoundSee’s first tasks will be to make sound intensity surveys of the ISS, a fairly dull job that astronauts currently spend about two hours doing by hand every few months. Ideally, SoundSee and Astrobee will be able to automate this task. But the more interesting mission (especially for Earth applications) will be the acoustic monitoring of equipment, listening to the noises made by systems like the Environmental Control and Life Support System (ECLSS) and the Treadmill with Vibration Isolation and Stabilization (TVIS).

The audio that SoundSee records with its microphone array will be sent back down to Bosch, where researchers will use deep audio analytics to filter out background noise as well as the noise of the robot itself, with the goal of isolating the sound made by specific systems. By using deep learning algorithms trained on equivalent systems on Earth, Bosch hopes that SoundSee will be able to provide a sort of “internal snapshot” of how that system is functioning. Or, as the case may be, not functioning, in plenty of time for astronauts to make repairs.

“We’re working on unsupervised anomaly detection algorithms,” explains Sam Das, principal researcher and SoundSee project lead at Bosch, “and we have some deep learning-based approaches that could detect a gradual or sudden change of the machine’s operating characteristics.” SoundSee won’t be able to predict everything, he says, but “it will be a line of defense to track slow deviation from normal dynamical models, and tell us, ‘Hey, you should go check this out.’ It may be a false alarm, but our system will be trained to listen for suspicious behavior. These kinds of subtle, long-term patterns and variations could give us surprisingly rich information about system degradation. That’s the ultimate goal, that we’d be able to identify these things way before any other sensing capability.”

Das says that you can think of SoundSee as analogous to training a vision-based system to analyze someone walking. First, you’d train the system on what a normal walking gait looks like. Then, you’d train it to identify when someone falls. Eventually, the system would be able to identify stumbles, then muscle cramps, and the end goal would be a system that could say, “It looks like one of your muscles might be just starting to cramp up; better take it easy!”
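Bosch hasn’t published the details of its algorithms, and its approach is based on deep learning. As a much simpler illustration of the same structure (learn what “normal” sounds like, then watch for slow deviation from it), here is a minimal Python sketch that swaps in a per-band statistical baseline for a learned model. The function names, the per-band energy features, the smoothing factor, and the alarm threshold are all hypothetical choices made for illustration, not SoundSee’s.

```python
import math
from statistics import mean, pstdev


def fit_baseline(normal_frames):
    """Learn per-band mean and spread from feature frames (hypothetically,
    per-band energies) recorded while the machine is known to be healthy."""
    bands = list(zip(*normal_frames))  # transpose: one tuple per band
    mu = [mean(band) for band in bands]
    # Floor the spread so a perfectly constant band can't cause division by zero.
    sigma = [max(pstdev(band), 1e-9) for band in bands]
    return mu, sigma


def anomaly_score(frame, mu, sigma):
    """Root-mean-square z-score of one frame against the healthy baseline."""
    z_sq = [((x - m) / s) ** 2 for x, m, s in zip(frame, mu, sigma)]
    return math.sqrt(sum(z_sq) / len(z_sq))


def monitor(frames, mu, sigma, alpha=0.1, threshold=3.0):
    """Smooth per-frame scores with an exponentially weighted moving average
    (EWMA) and flag frames whose smoothed score exceeds the threshold.
    alpha and threshold are illustrative values, not tuned ones."""
    results = []
    smoothed = None
    for t, frame in enumerate(frames):
        score = anomaly_score(frame, mu, sigma)
        smoothed = score if smoothed is None else alpha * score + (1 - alpha) * smoothed
        results.append((t, smoothed, smoothed > threshold))
    return results


# Usage: a healthy baseline with small jitter, then one band that slowly drifts upward.
healthy = [[1.0 + 0.1 * ((i % 3) - 1), 2.0 + 0.1 * ((i % 5) - 2)] for i in range(30)]
mu, sigma = fit_baseline(healthy)
drifting = [[1.0 + 0.05 * t, 2.0] for t in range(100)]
first_alarm = next(t for t, _, alarm in monitor(drifting, mu, sigma) if alarm)
print("first alarm at frame", first_alarm)
```

The moving average is what makes this kind of monitor sensitive to gradual drift rather than one-off glitches: a single odd frame barely moves the smoothed score, while a sustained shift pushes it steadily past the threshold. A deep learning system would replace the hand-picked features and z-scores with a learned model of normal operation.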

The reason to put the SoundSee system on a mobile robot, rather than use a distributed array of stationary microphones, is to be able to combine localization information with the audio data, which Das says makes that data much more useful. “A moving platform means that you can localize sources of sound. Now, we can fuse the information from audio we’re getting at different points, aggregate that information along the motion trajectory, and then take that a step further by creating a sound map of the environment.”
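The aggregation step Das describes can be illustrated with a minimal sketch. It assumes the robot tags each sound-level reading with its position along its trajectory, using a hypothetical `(x, y, level_db)` format (SoundSee’s actual data and localization pipeline haven’t been published). Readings are binned into grid cells and averaged in the energy domain, since decibels are logarithmic and shouldn’t be averaged directly. This only maps sound levels; it doesn’t do the direction-finding that a directional microphone array makes possible.

```python
import math
from collections import defaultdict


def build_sound_map(samples, cell_m=0.5):
    """Aggregate (x_m, y_m, level_db) readings taken along a robot's path
    into a grid of cell_m x cell_m cells (hypothetical input format).

    Levels are averaged as acoustic energy, not as raw decibels.
    """
    energy_sum = defaultdict(float)
    count = defaultdict(int)
    for x, y, level_db in samples:
        cell = (math.floor(x / cell_m), math.floor(y / cell_m))
        energy_sum[cell] += 10 ** (level_db / 10)  # dB -> relative energy
        count[cell] += 1
    # Convert each cell's mean energy back to decibels.
    return {cell: 10 * math.log10(energy_sum[cell] / count[cell])
            for cell in energy_sum}


def loudest_cell(sound_map):
    """Return the grid cell with the highest average level."""
    return max(sound_map, key=sound_map.get)


# Usage: two quieter readings near the origin, two louder ones about 1.2 m away.
readings = [(0.1, 0.1, 60.0), (0.2, 0.3, 60.0), (1.2, 0.1, 70.0), (1.3, 0.4, 70.0)]
sound_map = build_sound_map(readings)
print(loudest_cell(sound_map))  # (2, 0)
```

Averaging in energy means one loud reading dominates a cell the way it would physically: a 60 dB and a 70 dB reading in the same cell average to about 67.4 dB, not 65 dB.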

This concept extends to operations on Earth as well, and Das sees one of the first potential applications of the SoundSee technology as warehouse environments full of mobile robots. “There are a lot of features of this experiment that could be immediately applied on a manufacturing floor or warehouse where you have ground robots moving around—think of deploying SoundSee for each machine, and you’d have a virtual inspector for physical infrastructure monitoring.”

Longer term, it’s pretty obvious where this kind of technology is destined, especially coming from Bosch, the world’s largest automotive parts supplier. A SoundSee-like system in your car, already trained on what normal operation sounds like, could predict maintenance needs and precisely identify emerging mechanical issues, almost certainly before they become audible to you, and very likely way before you’d have any other way of knowing.

“Sound can give you so much more information about the environment,” says Das. “From the HVAC system in your house to the engine in your car, the operating state of machines and their functional health can be revealed by audio patterns.” And all we have to do is listen.

Evan Ackerman is a senior editor at IEEE Spectrum. Since 2007, he has written over 6,000 articles on robotics and technology. He has a degree in Martian geology and is excellent at playing bagpipes.