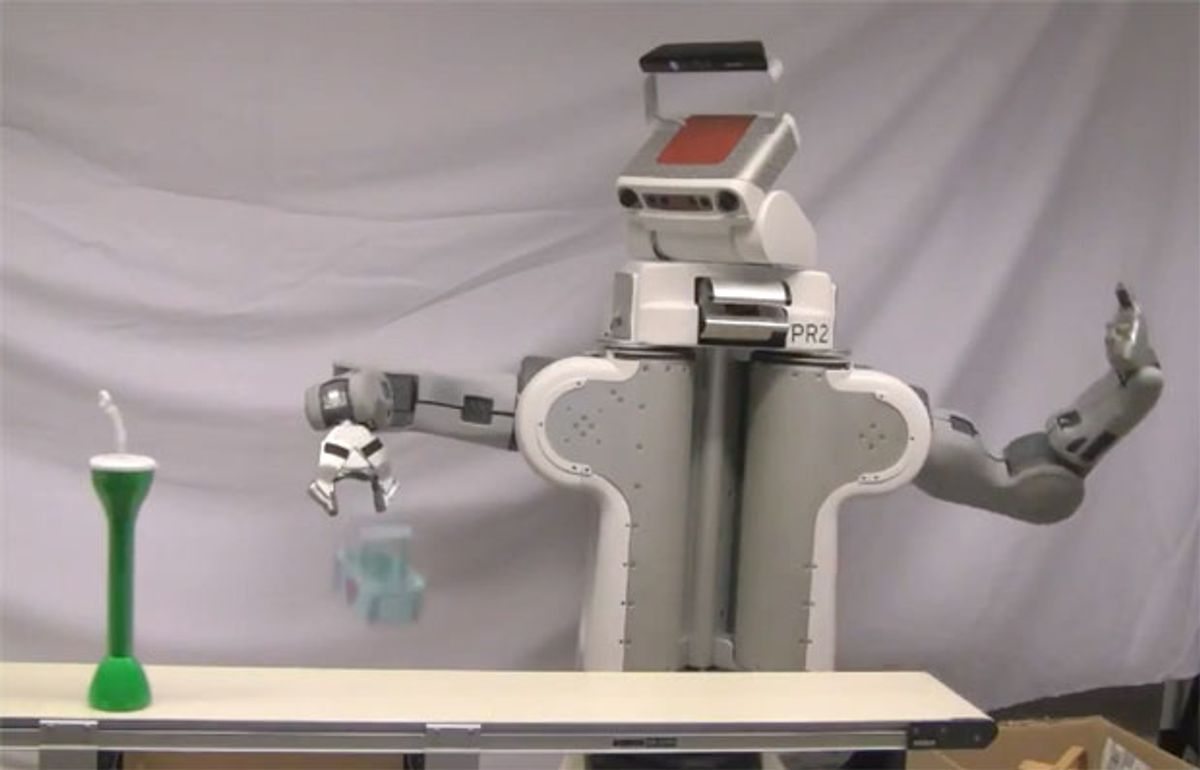

As much as we love the PR2, it's not a robot that anyone would likely describe as "quick." Not that it's trying to be quick or anything, but it does have a tendency towards being absurdly slow, generally because it's doing very complicated things.

However, for a robot like the PR2 to be useful in any sort of versatile industrial setting (which is slowly but surely becoming a huge market for robotics), speed, efficiency, and reliability are very important. Some talented roboticists have been working away at this problem, and they've managed to get a PR2 to pick and place (or at least, pick and drop) objects at a rate of one every seven seconds from a conveyor belt moving at over a foot per second. This is quite possibly the fastest I have ever seen a PR2 move.

For the PR2 to pull this off, it has to combine 3D object recognition, object pose estimation, collision-free arm and gripper trajectory generation, and grasping, which is a lot to do in under seven seconds. The grasping is especially tricky: a delay of just 100 milliseconds in that step means the object will have moved far enough for the robot to miss it. The grasps themselves were learned from humans, with a user teaching the robot several workable ways to pick up each object, while arm movements were planned using CMU's SBPL (Search-Based Planning Library).
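To put that 100-millisecond figure in perspective, here's a quick back-of-the-envelope calculation. It's our own sketch, not anything from the paper, and it assumes the belt runs at a constant one foot per second (the lower bound of the speed quoted above). The function name `drift_m` is just a hypothetical label for illustration.

```python
FT_TO_M = 0.3048  # exact definition of the international foot, in meters

def drift_m(speed_ft_per_s: float, delay_s: float) -> float:
    """Distance in meters that an object on the belt travels during a delay.

    Assumes (hypothetically) a constant belt speed; real conveyors may vary.
    """
    return speed_ft_per_s * FT_TO_M * delay_s

# Belt at 1 ft/s (assumed lower bound), 100 ms of grasping delay
print(f"{drift_m(1.0, 0.1) * 100:.1f} cm")  # prints "3.0 cm"
```

So even at the slowest speed consistent with "over a foot per second," a 100 ms hiccup shifts the object by about 3 cm, and the belt was moving somewhat faster than that.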

Overall, the robot managed a success rate of 87 percent at picking objects off the conveyor, which is really quite good, considering that (as far as the hardware goes) it's not optimized for this sort of work. Better grippers would have helped, and the main limit on pick-and-place speed was simply that the PR2's arms can't move any faster.

Now, if this all reminds you of a certain other two-armed factory robot, well, yeah. It's not like PR2 is going to be stealing jobs from Baxter anytime soon, but teaching mobile manipulation platforms to robustly perform tasks like this may eventually lead to more robots like Baxter doing all kinds of work all over the place. Plus, both robots rely on ROS, so maybe they can even learn something from each other.

"Perception and Motion Planning for Pick-and-Place of Dynamic Objects," by Anthony Cowley, Benjamin Cohen, William Marshall, Camillo J. Taylor, and Maxim Likhachev from UPenn's GRASP Lab, Lehigh University, and CMU, has been submitted to IROS 2013. For the record, Anthony Cowley and Benjamin Cohen are two of the guys behind the (now legendary) POOP SCOOP demo. No word on how much overlap there is between the two, but we have to assume that they've at least cleaned PR2's grippers since then.

[ GRASP Lab ]

Evan Ackerman is a senior editor at IEEE Spectrum. Since 2007, he has written over 6,000 articles on robotics and technology. He has a degree in Martian geology and is excellent at playing bagpipes.