An already-beleaguered Intel is now facing more fire, thanks to Nvidia announcing last Monday that it will start making CPUs for facilities like data centers and supercomputers.

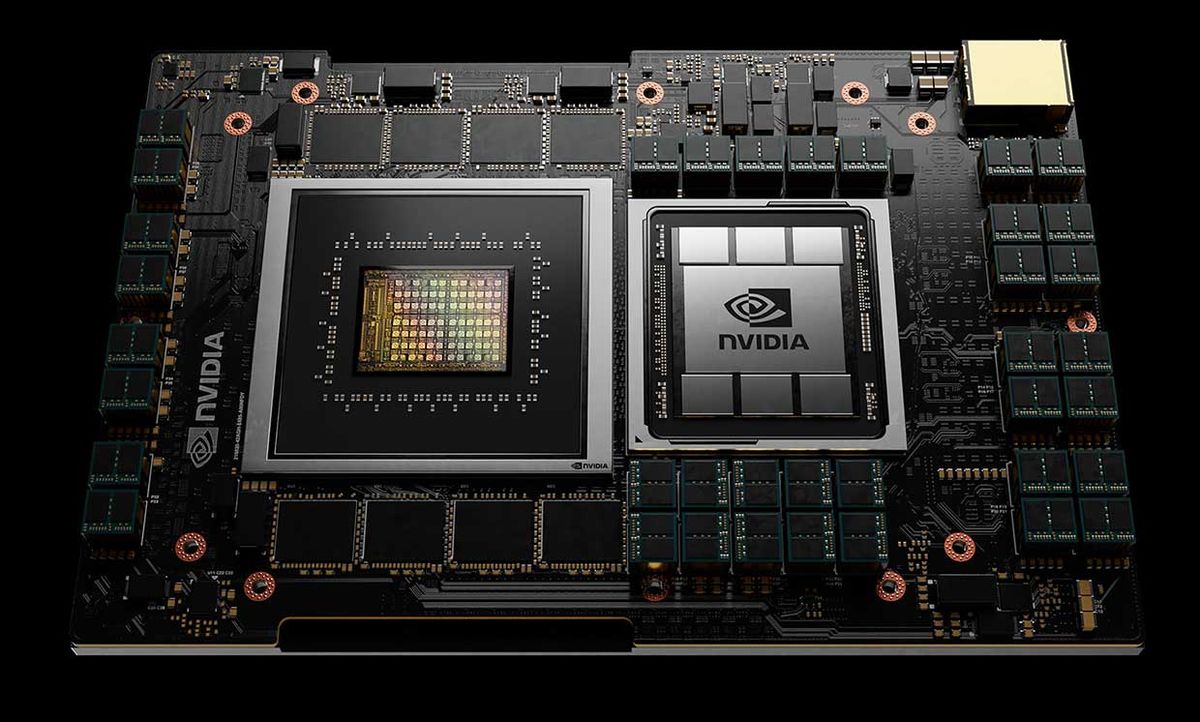

Named Grace (after computer science pioneer Grace Hopper), the CPU is Nvidia’s bid to make a splash in the high-performance computing space. Nvidia says Grace is set to launch in 2023, but Los Alamos National Laboratory in the US and the Swiss National Supercomputing Centre have already announced plans for Grace-based supercomputers.

Nvidia is famed as a maker of graphics cards; certainly, PC gaming and cryptocurrency mining are the company’s bread and butter. But Nvidia technology already powers a whole range of high-performance, GPU-intensive applications, including AI, finance, imaging, genome sequencing, signal processing, and computational fluid dynamics.

“It’s specialty applications, really,” says Douglas Lyon, a professor of computer engineering at Fairfield University in Connecticut. “They’re killer apps, no doubt. They’re important apps, yes indeed. But they’re not Microsoft Word.”

There’s good reason for Nvidia to delve deeper into a space it already has experience with. Many of those applications inhabit a lucrative world. “It’s attracting a lot of research, a lot of investment dollars, a lot of intellectual capital, and it will continue to do so,” says Lyon.

Grace will use architecture by ARM, the British firm that licenses its designs to other manufacturers. An Nvidia-ARM system aimed at the AI and HPC market could prove a potent pairing, too. While Intel continues to dominate the Top500 list of the world’s fastest HPC systems, last year Japan’s ARM-based Fugaku supercomputer (with more than seven million cores, running at 442 petaflops) secured the number one spot on the list. That result points down a higher-efficiency path toward exascale that the famously trim ARM architecture may yet enable.

Nvidia is in the process of attempting to acquire ARM from its current owner SoftBank, but the deal has run into regulatory headwinds and many—including Lyon—feel that it deserves antitrust scrutiny.

That is little comfort for Intel, which might feel that Nvidia’s announcement is but the latest in a series of blows.

Intel has already faced setbacks in home computers, long one of its strongholds. Since AMD launched its Ryzen processors in 2017, it has steadily gained ground at Intel’s expense. Earlier this year, AMD took the lead in desktop processors for the first time since 2006.

And Apple, which already made its own chips for mobile phones, broke with Intel in a high-profile split last year, announcing that it would start making its own processors for its laptops and desktops.

Not long afterwards, Intel announced it was joining forces with IBM to boost semiconductor manufacturing, but it’s not clear what benefit that partnership will bring.

Intel utterly dominates the processor market in data centers, but even there it is being challenged by AMD. It helps that TSMC, which supplies AMD’s chips, has leapt ahead of Intel in fabrication technology. Many analysts expect Nvidia’s move to add to the pressure Intel is facing.

That said, Intel still has some notable advantages. If Nvidia’s ARM acquisition does go through, Lyon thinks, it may punch a hole in the market of firms that license out their designs. That may leave a space for the likes of Intel, which has always designed and manufactured its own chips.

And Intel’s hardware might simply be better suited to some workloads. Today, Nvidia’s technology might have an advantage in tasks like pattern recognition and genomics, according to Lyon, but in others, such as playing board games or creating expert systems, it’s not quite as good.

“Anybody can put a whole pile of CPUs in a box,” says Lyon. “The critical problem has always been to program them.”