Engineers at North Carolina State University and at Intel have come up with a solution to one of the modern microprocessor’s most persistent problems: communication between the processor’s many cores. Their answer is a dedicated set of logic circuits they call the Queue Management Device, or QMD. In simulations, integrating the QMD with the processor’s on-chip network at least doubled core-to-core communication speed, and in some cases boosted it much further. Even better, the speed-up grew more pronounced as the number of cores increased.

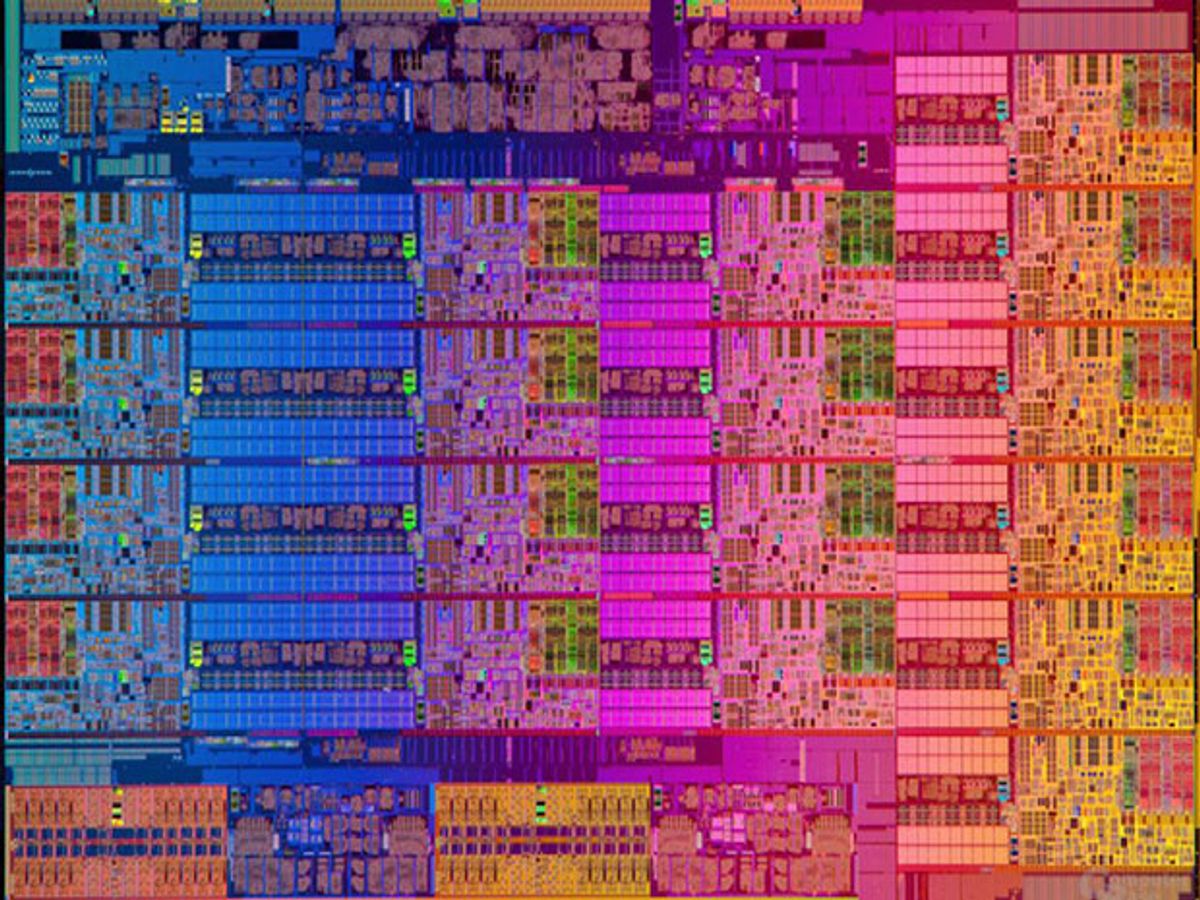

In the last decade, microprocessor designers started putting multiple processor cores on a single die. This let them sustain the rate of performance improvement computer makers had come to expect without creating chip-killing hot spots on the CPU. But that solution comes with complications. For one, software had to be written so that work was divided among the cores. As a result, different cores sometimes needed to work on the same data, or had to coordinate passing data from one core to another.

To prevent the cores from wantonly overwriting each other’s information, processing data out of order, or committing other errors, multicore software relies on lock-protected software queues. These data structures coordinate access to, and movement of, shared data according to software-defined rules. But all that extra software carries significant overhead, which only gets worse as the number of cores increases. “Communications between cores is becoming a bottleneck,” says Yan Solihin, a professor of electrical and computer engineering who led the work at NC State.
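To make that overhead concrete, here is a minimal sketch of a lock-protected software queue in C. It assumes POSIX threads and a fixed-capacity ring buffer; the names `locked_queue`, `queue_push`, and `queue_pop` and the capacity are hypothetical illustrations, not code from the NC State or Intel work. Each operation has to acquire the lock, check the queue’s state, move the data, update the bookkeeping, and release the lock.

```c
#include <pthread.h>
#include <stdbool.h>
#include <stddef.h>

#define QUEUE_CAPACITY 64 /* hypothetical fixed capacity */

/* A bounded FIFO shared by several threads (standing in for cores),
 * guarded by a single mutex. */
typedef struct {
    int items[QUEUE_CAPACITY];
    size_t head;  /* index of the oldest item */
    size_t count; /* number of items currently stored */
    pthread_mutex_t lock;
} locked_queue;

void queue_init(locked_queue *q) {
    q->head = 0;
    q->count = 0;
    pthread_mutex_init(&q->lock, NULL);
}

/* Enqueue: lock, check for space, write the item, update the count, unlock.
 * Returns false if the queue is full. */
bool queue_push(locked_queue *q, int value) {
    bool ok = false;
    pthread_mutex_lock(&q->lock);
    if (q->count < QUEUE_CAPACITY) {
        size_t tail = (q->head + q->count) % QUEUE_CAPACITY;
        q->items[tail] = value;
        q->count++;
        ok = true;
    }
    pthread_mutex_unlock(&q->lock);
    return ok;
}

/* Dequeue: lock, check for data, read the oldest item, advance head, unlock.
 * Returns false if the queue is empty. */
bool queue_pop(locked_queue *q, int *out) {
    bool ok = false;
    pthread_mutex_lock(&q->lock);
    if (q->count > 0) {
        *out = q->items[q->head];
        q->head = (q->head + 1) % QUEUE_CAPACITY;
        q->count--;
        ok = true;
    }
    pthread_mutex_unlock(&q->lock);
    return ok;
}

void queue_destroy(locked_queue *q) {
    pthread_mutex_destroy(&q->lock);
}
```

Because every push and pop serializes on the same mutex, adding more cores that share one queue tends to add contention rather than throughput. That is the kind of software overhead a hardware queue aims to remove.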

The solution, born of a discussion with Intel engineers and carried out by Solihin's student Yipeng Wang at NC State and at Intel, was to turn the software queue into hardware. This effectively replaced three multistep software queue operations with three simple instructions: add data to the queue, take data from the queue, and put data near where it will be needed next. On a sample task, packet processing of the kind network nodes perform on the Internet, the QMD’s advantage over the software-only approach grew as more cores were involved. With 16 cores, the QMD was 20 times as fast as the software.

Once they saw this result, the engineers reasoned that the QMD might be able to do a few other tricks by turning more software into hardware. They added more logic to the QMD and found that it could speed up several other functions that depend on communication between cores, including MapReduce, a programming model Google pioneered for distributing work across many processors and collecting the results.

They aren’t done yet. “The next step is to figure out other types of hardware accelerators that would be useful,” says Solihin. “We have to improve performance by improving energy efficiency. The only way to do that is to move some software to hardware. The challenge is to figure out which software is used frequently enough that we could justify implementing it in hardware. There is a sweet spot,” he says.

Intel engineer Ren Wang is presenting the QMD speed-up results at the 25th Annual Conference on Parallel Architectures and Compilation Techniques, in Haifa, Israel, this week.

Samuel K. Moore is the senior editor at IEEE Spectrum in charge of semiconductors coverage. An IEEE member, he has a bachelor's degree in biomedical engineering from Brown University and a master's degree in journalism from New York University.