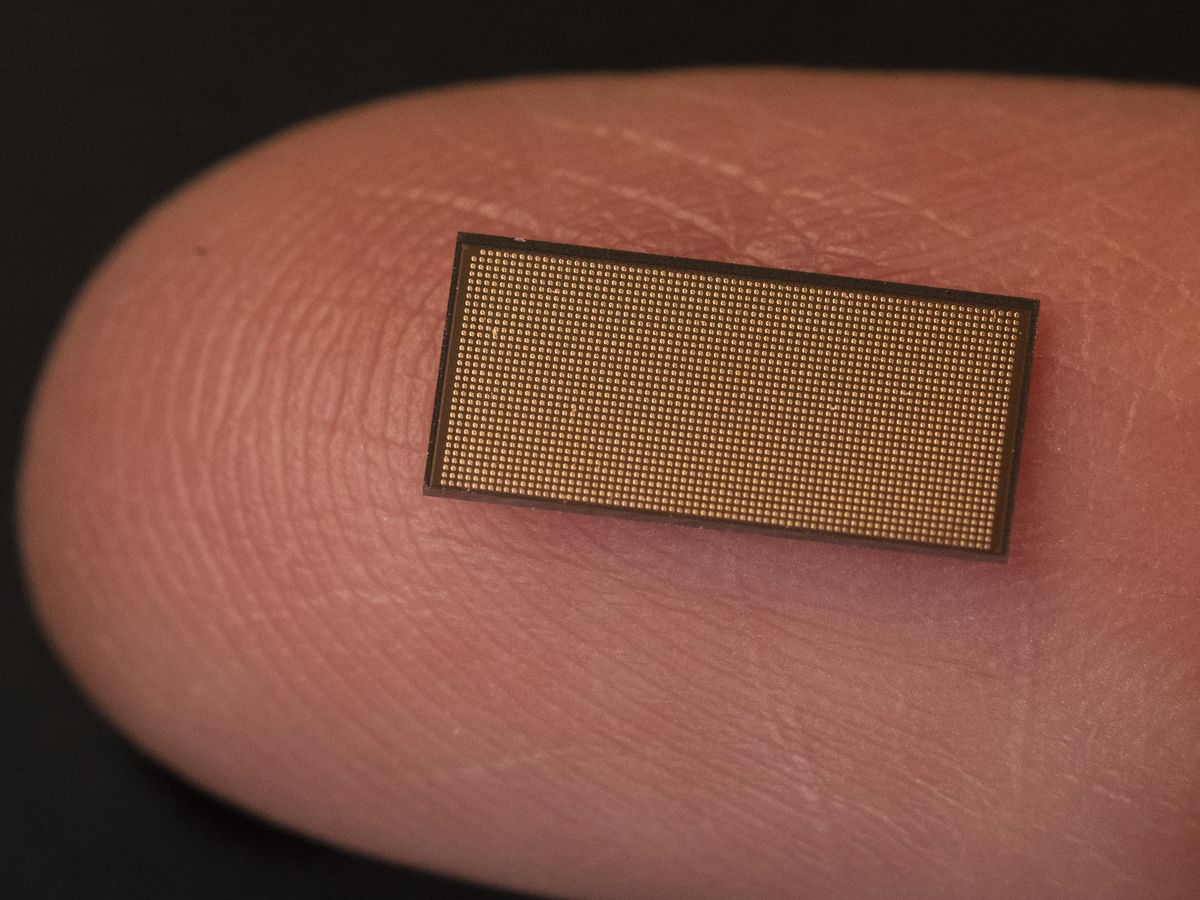

Many AIs may depend on things called neural networks, but there's very little about them that works the way human and animal brains do. Intel has been experimenting for several years now with computers that think more like a brain does, racking up some impressive if quirky results with its Loihi neuromorphic chip. Now Loihi is getting its first upgrade, and it's a pretty big one. Using a manufacturing process called Intel 4 that's not yet available for commercial chips, the company packed up to eight times as many artificial neurons into a chip that's half the area of Loihi. That, and a host of changes motivated by the past few years of experiments, make Loihi 2 faster and more flexible, says Mike Davies, director of Intel's neuromorphic computing lab.

Unlike the artificial neurons in conventional AI, which store information as weights that measure the strength of the connections between neurons, Loihi's neurons carry information in the timing of digitally represented spikes, which is more analogous to what goes on in your brain. Neural computation is triggered by these spikes, so there's no need for a central clock to keep things synchronous. And much of the chip sits idle when there is no event to observe, saving power.
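To make the idea of event-driven, timing-based computation concrete, here is a minimal sketch of a textbook leaky integrate-and-fire neuron in Python. It is not Loihi's actual neuron model; the function name `run_lif` and the parameters `weight`, `threshold`, and `tau` are assumptions chosen for illustration. The neuron's state is touched only when an input spike arrives, and whether it fires depends on how closely the inputs are spaced in time.

```python
import math


def run_lif(spike_times, weight=0.6, threshold=1.0, tau=10.0):
    """Simulate one leaky integrate-and-fire neuron, event by event.

    spike_times: arrival times of input spikes (arbitrary time units).
    Returns the times at which the neuron emits an output spike.
    All parameter values are illustrative assumptions.
    """
    potential = 0.0
    last_time = 0.0
    output_spikes = []
    for t in sorted(spike_times):
        # No clock ticks: the decay since the previous event is applied lazily.
        potential *= math.exp(-(t - last_time) / tau)
        # Each input spike adds a fixed amount to the membrane potential.
        potential += weight
        last_time = t
        if potential >= threshold:
            output_spikes.append(t)
            potential = 0.0  # reset after firing
    return output_spikes


if __name__ == "__main__":
    print(run_lif([1.0, 2.0]))   # two spikes close together
    print(run_lif([1.0, 50.0]))  # the same two spikes, far apart
```

With these assumed parameters, two input spikes that arrive close together push the neuron over its threshold, while the same two spikes spaced far apart do not, because the first contribution has almost entirely leaked away. Nothing is computed between events, which is the property the paragraph above credits with saving power.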

Some 250 research partners have been using Loihi systems for things like controlling drones or robot arms, optimizing train schedules, searching databases, and learning to identify different odors. "The results have been quite encouraging," says Davies. Some energy efficiency gains were "orders of magnitude," and there were also gains in the amount of data needed for the system to learn. (The results are tabulated in the May 2021 issue of Proceedings of the IEEE.)

But these experiments also pointed to a set of limitations that Davies' team wanted to address in the next generation. For one, Davies says, the neural network model Loihi used wasn't flexible enough to do all the things Intel and its partners wanted. They also found that modeling neural activity as a binary spike, where only timing information is conveyed from one neuron to another, limited the precision of Loihi's calculations. Other hindrances included congestion between multiple Loihi chips and challenges in integrating the system with conventional computers.

Loihi 2 tackles these issues using the same basic architecture, but with a set of circuits that were "redesigned from the ground up," according to Davies. Here's a summary of what's new:

New spike messages to improve precision: The spike signals in original-flavor Loihi contained only timing information. This there-or-not signal is called a binary-value spike message. Loihi 2 allows spikes that have both timing and magnitude parameters, without much of an energy or performance penalty. "We can solve the same problems with fewer resources if we have this magnitude that can be sent alongside" the timing information, says Davies.
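One way to picture the difference between the two message types is the Python sketch below. It is an illustration only, not Intel's message format: the `Spike` class, the encoder functions, and the simple one-spike-per-time-step scheme used for the binary case are all assumptions. It shows how a small positive integer could be conveyed either as a train of binary spikes or as a single spike carrying a magnitude.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Spike:
    """A hypothetical spike message: a time stamp plus an optional magnitude."""
    time: int
    magnitude: int = 1  # a binary-value spike always carries magnitude 1


def encode_binary(value, start=0):
    """Convey a positive integer with binary spikes: one unit spike per time step."""
    return [Spike(time=start + i) for i in range(value)]


def encode_graded(value, start=0):
    """Convey the same integer with a single spike that carries a magnitude."""
    return [Spike(time=start, magnitude=value)]


def decode(spikes):
    """Recover the value by summing the magnitudes of the received spikes."""
    return sum(s.magnitude for s in spikes)


if __name__ == "__main__":
    binary = encode_binary(5)
    graded = encode_graded(5)
    print(len(binary), decode(binary))  # messages sent, value recovered
    print(len(graded), decode(graded))
```

Under this assumed encoding, both versions deliver the value 5, but the binary version needs five messages spread over five time steps while the graded version needs one. That is the kind of resource saving Davies's comment points to, though real spiking networks use a variety of coding schemes.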

Enhanced programmability: The previous Loihi was designed for a specific spiking-neural-network (SNN) model. Loihi 2 neuromorphic cores can now also do arithmetic, comparison, program control flow, and other operations that allow the chip to run an expanded set of SNN models.

Faster learning: Loihi basically supported one set of brain-inspired learning rules. Loihi 2 changes this in a way that lets it perform some of the latest learning algorithms, as well as an approximation of the backpropagation algorithm used in deep learning. The change means algorithms that could only be run as a proof of concept on Loihi can be scaled up on Loihi 2, allowing it to learn more quickly.

More of everything: As mentioned above, Loihi 2 is built using a manufacturing process so advanced that the company doesn't yet use it even for its own commercial chips. That means a million neurons per chip versus Loihi's 125,000. What's more, the circuits and memory that implement those neurons were optimized, leading to between 2x and 160x more resources for neuromorphic computing, depending on what kind of network is running.

Speedier circuits: Loihi 2's redesigned circuits mean a doubling of processing speeds when updating the state of neurons, five-fold faster synaptic operations, and as much as a 10-fold bump in the speed of spike generation. All told, the chip can now process neuromorphic networks up to 5,000 times faster than biological neurons.

New chip interfaces: Loihi 2 chips support 4x faster asynchronous chip-to-chip signaling, as well as a feature that reduces interchip bandwidth needs 10-fold. It's also all set to do communications in a 3D chip-stacking arrangement and has an Ethernet interface and one for emerging event-based sensors, such as Prophesee's camera chips.

As is a common theme with chips that have new architectures, software is the key to getting any use out of them. "Software continues to hold back the field," says Davies. "There hasn't been the emergence of a single software framework as you see in the deep learning world."

"Because the emergence of a single framework hasn't happened, we're now offering something ourselves," he says. The new software framework, Lava, takes the lessons of the previous three-and-a-half years of research projects and attempts to offer a common platform that supports them all. Lava is an open-source framework that supports any system that does event-based, asynchronous message passing, not just Loihi or Loihi 2.

There are no specific plans to commercialize Loihi 2, says Davies. "This is still a research chip that we are going to be offering to research partners." Davies says that the technology behind Loihi is likely to first appear as an acceleration core on a system-on-chip that is performing a specific algorithm rather than as a general purpose chip.

Intel may not be ready to make a business out of neuromorphic chips, but that doesn't mean others aren't. Sydney-based Brainchip received its first shipment of finished event-based neural processor chips in August and is hoping to help customers develop low-power systems where things like incremental and one-shot learning would be helpful.

Samuel K. Moore is the senior editor at IEEE Spectrum in charge of semiconductors coverage. An IEEE member, he has a bachelor's degree in biomedical engineering from Brown University and a master's degree in journalism from New York University.