Three of the biggest companies making quantum computers today—Google, Intel, and Microsoft—are betting on supercooled devices operating at close to absolute zero. But there’s a problem: These cold cathedrals of quantum computing cannot tolerate the extra heat given off by the conventional computing chips that control them.

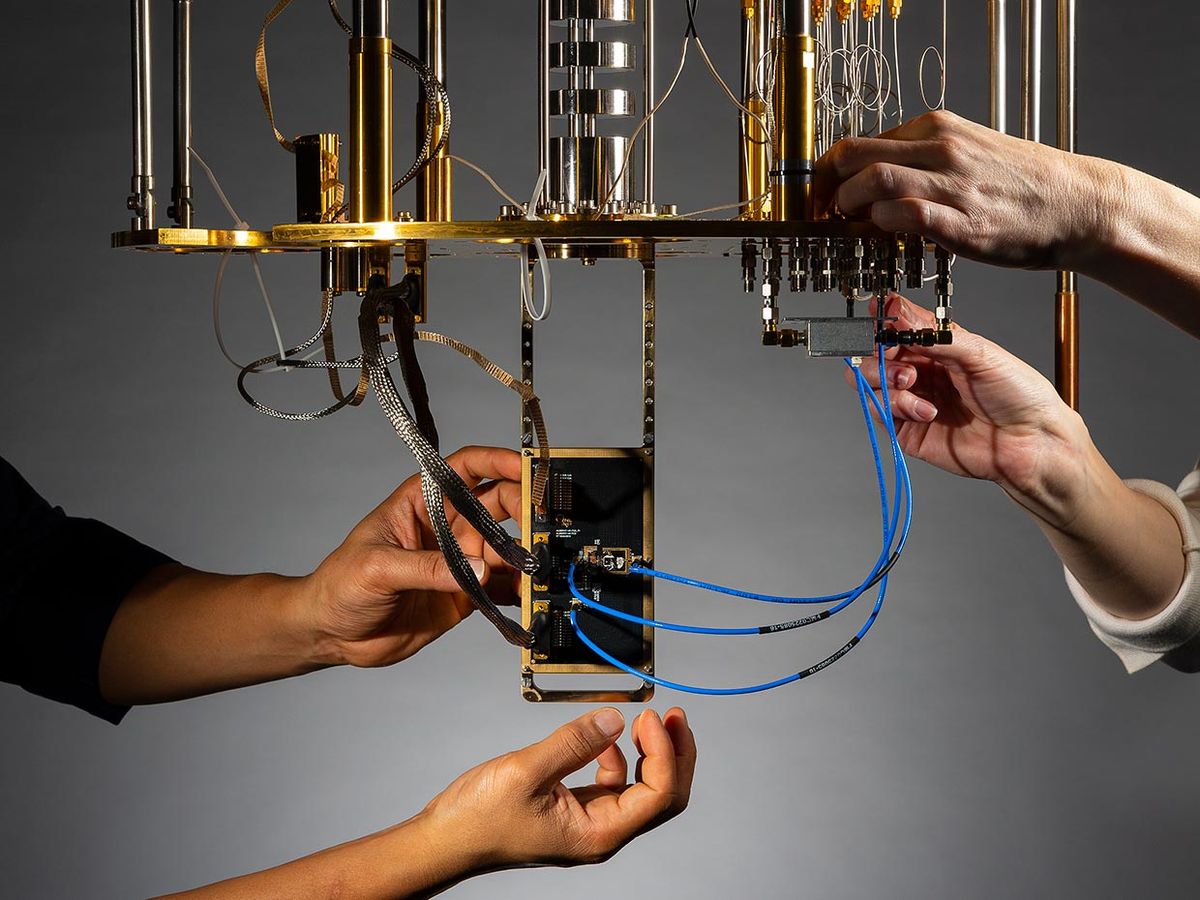

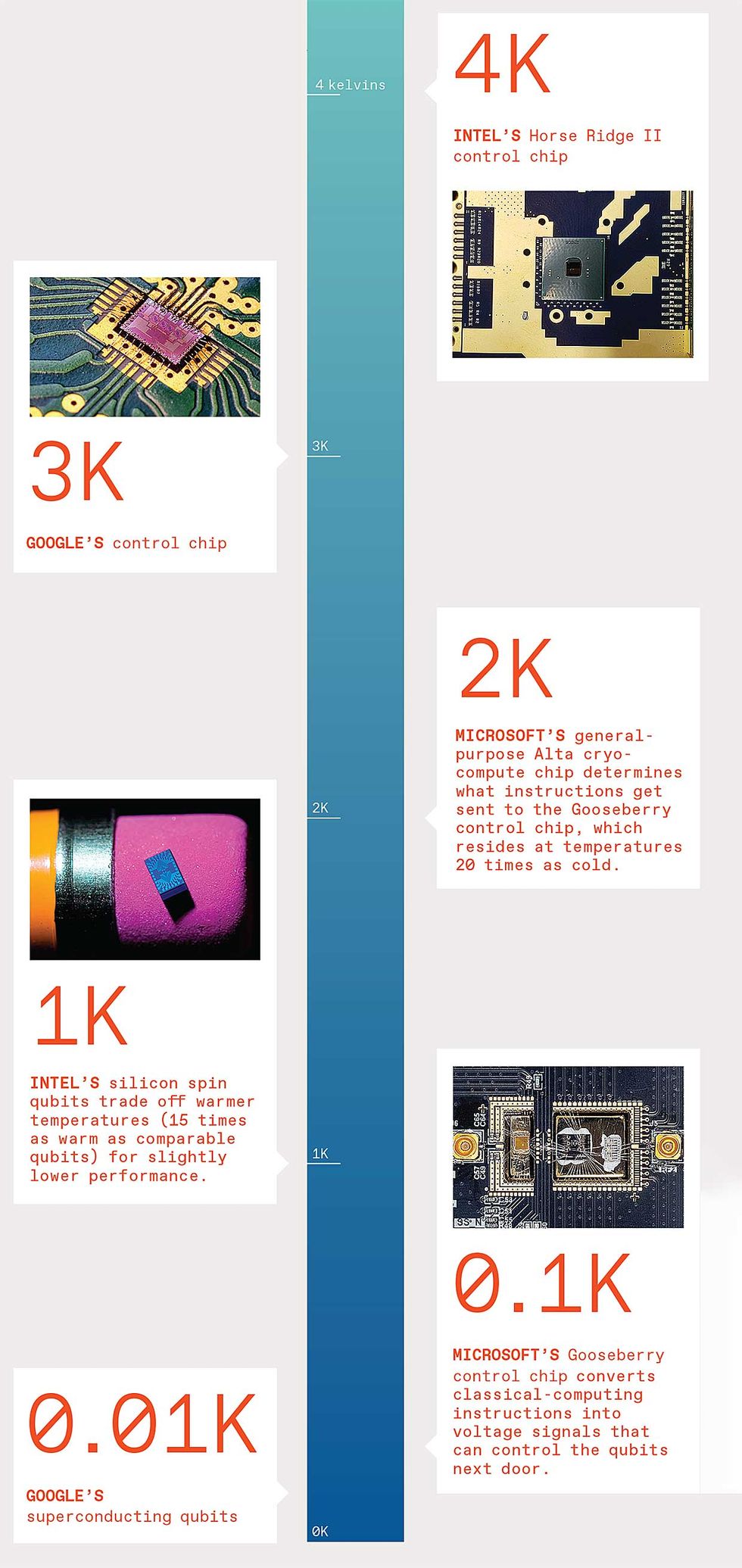

This means the classical and quantum-computing components must be kept apart, even though they are designed to work together. The control chips usually sit at room temperature at the top of the quantum-computing stack, while the quantum bits (qubits) remain in the coldest depths of dilution refrigerators. These fridges use a mixture of the isotopes helium-3 and helium-4 to supercool their interiors, lowering temperatures from a baseline of 4 kelvins (–269.15 °C) at the top to about 10 millikelvins at the bottom.

Cables running up and down the hardware stack connect each qubit with its control chip and with other conventional computing components higher up. Such setups are already unwieldy with just dozens of qubits, and they would become an “engineering nightmare” if scaled up to the number of qubits needed for practical quantum computing, says Fabio Sebastiano, research lead for the quantum-computing division at QuTech, in Delft, Netherlands. He compares the approach to trying to connect each of the 10 million pixels in a smartphone camera to its readout electronics with a 1-meter cable.

That is why these three tech giants have been developing either qubits that operate at warmer temperatures or control chips that operate at colder temperatures, while keeping the heat from power dissipation to a minimum. The companies hope to narrow the temperature gap between the two and possibly unite classical and quantum-computing components in the same integrated chips or packages.

This article appears in the May 2021 print issue as “Fast, Cold, and Under Control.”

Jeremy Hsu has been working as a science and technology journalist in New York City since 2008. He has written on subjects as diverse as supercomputing and wearable electronics for IEEE Spectrum. When he’s not trying to wrap his head around the latest quantum computing news for Spectrum, he also contributes to a variety of publications, including Scientific American, Discover, and Popular Science. He is a graduate of New York University’s Science, Health & Environmental Reporting Program.