Moore’s Law Might Be Slowing Down, But Not Energy Efficiency

Miniaturization may be tough, but there's still room to drive down power consumption in modern computers

No one can say exactly when the era of Moore’s Law will come to a close. Nevertheless, semiconductor experts like us can’t resist speculating about that day because it will mark the end of an extraordinary period of history, with uncertain implications for one of the world’s most important industries.

Here’s what we do know. The last 15 years have seen a big falloff in how much performance improves with each new generation of cutting-edge chips. So is the end nigh? Not exactly, because even though the fundamental physics is working against us, it appears we’ll have a reprieve when it comes to energy efficiency.

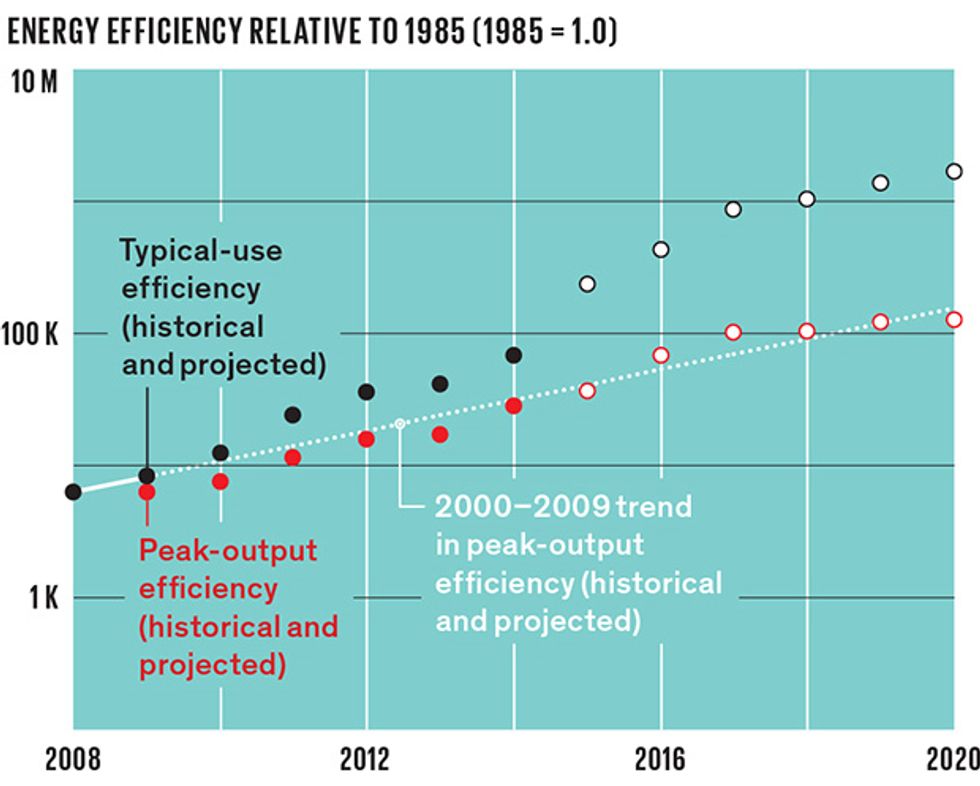

There are many ways to gauge a computer’s efficiency, but one of the most easily calculated metrics is peak-output efficiency, which measures the efficiency of a processor when it’s running at its fastest.

Peak-output efficiency is typically quoted as the number of computations that can be performed per kilowatt-hour of electricity consumed. And according to a peer-reviewed paper published in 2011 in the IEEE Annals of the History of Computing, it doubled like clockwork every year and a half or so for more than five decades.

This trend started well before the first microprocessor, way back in the mid-1940s. But it began to come to an end around 2000. Growth in both peak-output efficiency and performance started to slow, weighed down by the physical limitations of shrinking transistors. Chipmakers turned to architectural changes—such as putting multiple computing cores in a single microprocessor—but they weren’t able to maintain historical growth rates.

These days, we’ve found, it takes about 2.7 years for peak-output efficiency to double. That’s a substantial slowdown. Historically, a decade of doubling every year and a half boosted efficiency by a factor of about a hundred; at the current rate, the same hundredfold gain would take roughly 18 years.
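The arithmetic behind those two figures can be checked with a short sketch. It assumes smooth exponential growth with a fixed doubling time, and the function names are ours, chosen only for illustration.

```python
import math


def growth_factor(years, doubling_time_years):
    """Gain after `years` if efficiency doubles every `doubling_time_years`."""
    return 2 ** (years / doubling_time_years)


def years_to_reach(factor, doubling_time_years):
    """Years needed for a `factor`-fold gain at a fixed doubling time."""
    return doubling_time_years * math.log2(factor)


# Historical pace: doubling about every 1.5 years, sustained for a decade.
historical_gain = growth_factor(10, 1.5)

# Current pace: doubling about every 2.7 years, time to a hundredfold gain.
years_for_hundredfold = years_to_reach(100, 2.7)

print(f"Decade at 1.5-year doubling: about {historical_gain:.0f}x")
print(f"Hundredfold gain at 2.7-year doubling: about {years_for_hundredfold:.1f} years")
```

A decade at the historical pace works out to roughly a hundredfold gain, and the same gain at the current pace takes just under 18 years.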

Fortunately, the news isn’t all bad. Our computing needs have changed. For years after Moore’s landmark 1965 paper, computers were expensive, relatively rare, and regularly pushed to their computing peak. Now that they’re ubiquitous and cheap, the emphasis in chip design has shifted from fast CPUs in stationary machines to ultralow-power processing in mobile appliances, such as laptops, cellphones, and tablets.

Today, most computers run at peak output only a small fraction of the time (a couple of exceptions being high-performance supercomputers and Bitcoin miners). According to common industry measurements, mobile devices such as smartphones and notebook computers generally operate at their computational peak less than 1 percent of the time. Enterprise data servers spend less than 10 percent of the year operating at their peak. Even computers used to provide cloud-based Internet services operate at full blast less than half the time.

In this new regime, a good power-management design is one that minimizes how much energy a device consumes when it’s idle or off. And the better indicator of energy efficiency is how much electricity a computer consumes on average—not when it’s operating at full blast.

We’ve recently defined a measure of efficiency that’s more in sync with how chips are used nowadays, which we call “typical-use efficiency.” Like peak-output efficiency, it’s measured in computations per kilowatt-hour. This time, however, it’s calculated by dividing the number of computations performed over the course of a year by the total electricity consumed—a weighted sum of the energy a processor and its supporting circuitry use in different modes over that same period. For example, a laptop might operate at peak power when its user is playing a game, but this only happens a tiny fraction of the time. Other common activities, such as word processing or video playback, might consume a tenth as much electricity, since only a fraction of the chip is needed for these functions, and smart power management can actively shut off circuitry between keystrokes and video frames.
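To make the definition concrete, here is a minimal sketch of the calculation. The mode names, time shares, wattages, and computation rates are hypothetical placeholders rather than measurements, and the helper names are ours. Only the structure follows the description above: a year's computations divided by the time-weighted energy used across operating modes.

```python
from dataclasses import dataclass

HOURS_PER_YEAR = 8760
SECONDS_PER_HOUR = 3600


@dataclass
class Mode:
    name: str
    time_fraction: float   # share of the year spent in this mode
    power_watts: float     # average power drawn in this mode (hypothetical)
    ops_per_second: float  # computations per second in this mode (hypothetical)


def typical_use_efficiency(modes):
    """Computations per kilowatt-hour, averaged over a year of mixed use."""
    if abs(sum(m.time_fraction for m in modes) - 1.0) > 1e-9:
        raise ValueError("time fractions must sum to 1")
    seconds_per_year = HOURS_PER_YEAR * SECONDS_PER_HOUR
    computations = sum(
        m.time_fraction * seconds_per_year * m.ops_per_second for m in modes
    )
    # Weighted sum of the energy used in each mode, in kilowatt-hours.
    energy_kwh = sum(
        m.time_fraction * HOURS_PER_YEAR * m.power_watts / 1000 for m in modes
    )
    return computations / energy_kwh


def peak_output_efficiency(mode):
    """Computations per kilowatt-hour while running flat out in one mode."""
    return mode.ops_per_second * SECONDS_PER_HOUR / (mode.power_watts / 1000)


# Hypothetical laptop: rarely at peak, mostly idle between light tasks.
peak = Mode("peak (gaming)", 0.01, 30.0, 1e10)
light = Mode("light use (video, typing)", 0.09, 3.0, 1e9)
idle = Mode("idle", 0.90, 0.5, 0.0)

baseline = typical_use_efficiency([peak, light, idle])

# Halving idle power improves typical-use efficiency but leaves peak untouched.
better_idle = Mode("idle", 0.90, 0.25, 0.0)
improved = typical_use_efficiency([peak, light, better_idle])

print(f"Peak-output efficiency: {peak_output_efficiency(peak):.3e} computations/kWh")
print(f"Typical-use efficiency: {baseline:.3e} computations/kWh")
print(f"With idle power halved: {improved:.3e} computations/kWh")
```

With these made-up numbers, idle time accounts for a large share of annual energy even though no computation happens there, which is why trimming idle power moves the typical-use figure while the peak-output figure stays put.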

Encouragingly, typical-use efficiency seems to be going strong, based on tests performed since 2008 on Advanced Micro Devices’ chip line. Through 2020, by our calculations for an AMD initiative, typical-use efficiency will double every 1.5 years or so, putting it back to the same rate seen during the heyday of Moore’s Law.

These gains come from aggressive improvements to circuit design, component integration, and software, as well as power-management schemes that put unused circuits into low-power states whenever possible. The integration of specialized accelerators, such as graphics processing units and signal processors that can perform certain computations more efficiently, has also helped keep average power consumption down.

Of course, as with any exponential trend, this one will eventually end, and circuit designers will have become victims of their own success. As idle power approaches zero, it will constitute a smaller and smaller fraction of the energy consumed by a computer. In a decade or so, energy use will once again be dominated by the power consumed when a computer is active. And that active power will still be hostage to the physics behind the slowdown in Moore’s Law.

Over the next few decades, we’ll have to rethink the fundamental design of computers if we want to keep computing moving forward at historical rates. In the meantime, steady improvements in everyday energy efficiency will give us a bit more time to find our way.

This article originally appeared in print as “Efficiency’s Brief Reprieve.”

About the Authors

Jonathan Koomey is a research fellow at the Steyer-Taylor Center for Energy Policy and Finance at Stanford University. IEEE Fellow Samuel Naffziger is an Advanced Micro Devices corporate fellow. They began collaborating on computing efficiency in 2014, as part of 25x20, an AMD energy-efficiency initiative that is targeting a 25X improvement in PC efficiency by 2020.