Scientists have demonstrated that monkeys using a brain-machine interface can not only control a computer with their minds but also "feel" the texture of virtual objects in a computer.

This is the first-ever demonstration of bidirectional interaction between a primate brain and a virtual object.

In the experiment, described in a paper published today in the journal Nature, Duke University scientists equipped monkeys with brain implants that allowed the animals to control a virtual arm, shown on a computer screen, using only their thoughts. This part of the experiment was not a new result -- scientists, including the Duke team, have previously demonstrated that the brain can control advanced robotic devices and even learn to operate them effortlessly.

What's new is that, this time, the scientists are using the brain-machine interface not only to extract brain signals but also to send signals to the brain. The device is actually a brain-machine-brain interface. The monkeys were able to interpret the signals fed to their brains as a kind of artificial tactile sensation that allowed them to identify the "texture" of virtual objects.

"Someday in the near future, quadriplegic patients will take advantage of this technology not only to move their arms and hands and to walk again, but also to sense the texture of objects placed in their hands, or experience the nuances of the terrain on which they stroll with the help of a wearable robotic exoskeleton," study leader Miguel Nicolelis, a professor of neurobiology at Duke, in Durham, N.C., said in a statement.

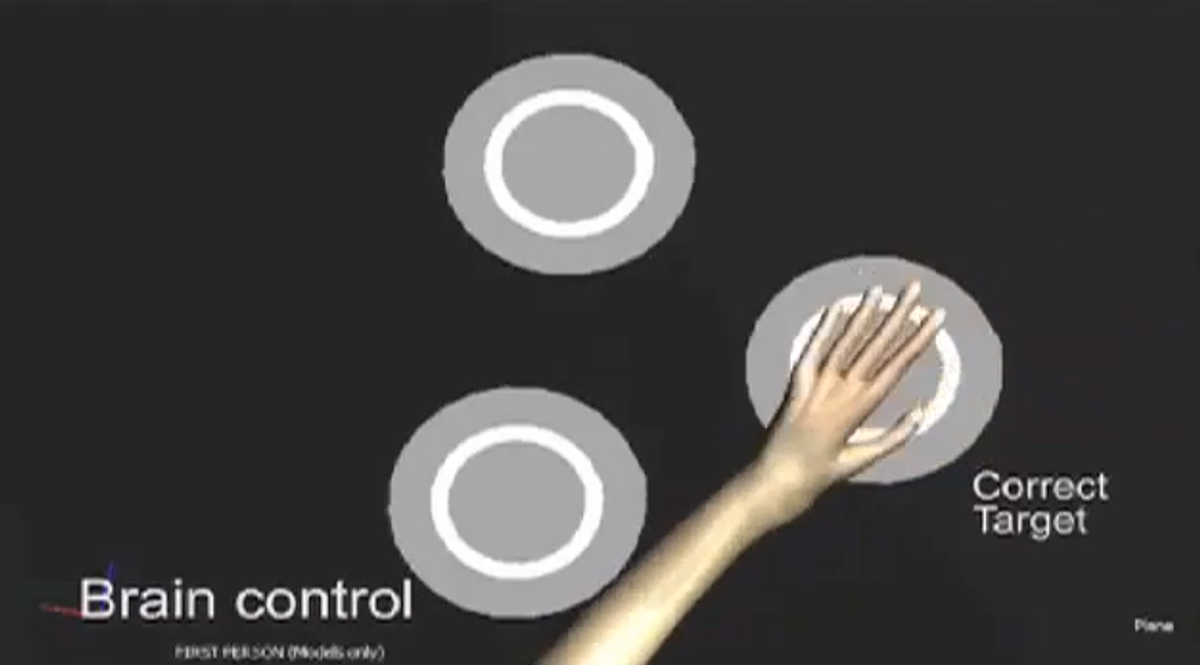

Initially, the monkeys used their real hands to operate a controller and move their virtual limbs on the screen. During this part of the experiment, the researchers recorded brain signals to learn how to correlate the brain activity to the movement of the virtual arm [see illustration above]. Next, the researchers switched from hand control to brain control, using the brain signals to directly control the virtual arm; after a while, the animals stopped moving their limbs altogether, using only their brains to move the virtual hand on the screen.
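
The two phases described above, calibrating against hand movement and then switching to brain control, can be illustrated with a toy decoder. This is only a sketch under loud assumptions: the article does not say what model the Duke team used, so the linear least-squares fit here is a stand-in, and the function names (`fit_decoder`, `decode`), the two "neurons," and all the numbers are made up for illustration.

```python
def solve_linear(a, b):
    """Solve the square system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def fit_decoder(rates, velocity):
    """Hand-control phase: least-squares weights mapping firing rates to arm velocity.

    Assumed model (not from the article): velocity[t] ~ sum_i w[i] * rates[t][i].
    """
    n = len(rates[0])
    xtx = [[sum(r[i] * r[j] for r in rates) for j in range(n)] for i in range(n)]
    xty = [sum(r[i] * v for r, v in zip(rates, velocity)) for i in range(n)]
    return solve_linear(xtx, xty)  # normal equations: (X^T X) w = X^T y


def decode(weights, rate):
    """Brain-control phase: predict arm velocity from firing rates alone."""
    return sum(w * x for w, x in zip(weights, rate))


# Made-up calibration data: firing rates of two hypothetical neurons per time step,
# paired with the hand-driven arm velocity recorded at the same moments.
rates = [[10.0, 2.0], [4.0, 8.0], [6.0, 6.0], [1.0, 9.0]]
velocity = [0.5 * a - 0.25 * b for a, b in rates]

weights = fit_decoder(rates, velocity)
print(weights)                       # close to [0.5, -0.25]
print(decode(weights, [8.0, 4.0]))   # close to 3.0
```

Once the weights are fitted, the hand controller is no longer needed: `decode` drives the virtual arm from neural activity alone, which mirrors the switch from hand control to brain control.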

The monkeys used their virtual hand to explore three objects that appear visually identical but have different "textures" -- each texture corresponding to different electrical signals sent to the brain of the animals. The researchers selected one of the objects as the "target," and whenever the monkeys were able to locate it they would receive a sip of juice as a reward. After a small number of trials, the monkeys learned to quickly explore the virtual environment, feeling the textures of the objects to find the target.

One of the monkeys got the task right more than 85 percent of the time; another got it right about 60 percent of the time.

To allow the monkeys to control the virtual arm, the scientists implanted electrodes to record electrical activity of populations of 50 to 200 neurons in the motor cortex. At the same time, another set of electrodes provided continuous electrical feedback to thousands of neurons in the primary tactile cortex, allowing the monkeys to discriminate between objects based on their texture alone.

"It's almost like creating a new sensory channel through which the brain can resume processing information that cannot reach it anymore through the real body and peripheral nerves," Nicolelis said.

A major challenge was to "keep the sensory input and the motor output from interfering with each other, because the recording and stimulating electrodes were placed in connected brain tissue," according to a news report in Nature:

"The researchers solved the problem by alternating between a situation in which the brain-machine-brain interface was stimulating the brain and one in which motor cortex activity was recorded; half of every 100 milliseconds was devoted to each process."

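The alternation described in the Nature report amounts to time-division multiplexing, which a few lines of code can make concrete. This is a minimal sketch, not the researchers' implementation: the 100-millisecond cycle and the even split come from the quote, but which half comes first, the names `phase_at` and `schedule`, and the 10-millisecond sampling step are assumptions.

```python
CYCLE_MS = 100            # full cycle length, per the Nature news report
STIM_MS = CYCLE_MS // 2   # half of each cycle goes to stimulation


def phase_at(t_ms):
    """Return which process owns the interface at time t_ms (milliseconds).

    Assumption: stimulation takes the first half of each cycle. The report
    only says the time is split evenly, not which process goes first.
    """
    return "stimulate" if (t_ms % CYCLE_MS) < STIM_MS else "record"


def schedule(duration_ms, step_ms=10):
    """List (time, phase) pairs sampled every step_ms over duration_ms."""
    return [(t, phase_at(t)) for t in range(0, duration_ms, step_ms)]


for t, phase in schedule(200, step_ms=25):
    print(f"{t:3d} ms: {phase}")
```

Because the two phases never overlap, the recording electrodes do not pick up stimulation pulses, at the cost of each process running only half the time.
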
Nicolelis is leading an international consortium called the Walk Again Project, whose goal is to restore full body mobility to quadriplegic patients using brain-machine interfaces and robotic exoskeletons.

An avid fan of soccer, Nicolelis hopes to have a demonstration ready for 2014, with a quadriplegic child performing the kickoff for the FIFA World Cup in Brazil, his home country.

Images and video: Duke University

Erico Guizzo is the Director of Digital Innovation at IEEE Spectrum, and cofounder of the IEEE Robots Guide, an award-winning interactive site about robotics. He oversees the operation, integration, and new feature development for all digital properties and platforms, including the Spectrum website, newsletters, CMS, editorial workflow systems, and analytics and AI tools. An IEEE Member, he is an electrical engineer by training and has a master’s degree in science writing from MIT.