Imagine commanding a robotic arm to perform a task while your two human hands stay busy with a completely different job. Now imagine that you give that command just by thinking it.

Researchers today announced they have successfully built such a device. The user can be thinking about two tasks at once, and the robot can decipher which of those thoughts is directed at itself, then perform that task. And what’s special about it (as if a mind-controlled, multitasking, supernumerary limb isn’t cool enough by itself) is that using it might actually improve the user’s own multitasking skills.

“Multitasking tends to reflect a general ability for switching attention. If we can make people do that using brain-machine interface, we might be able to enhance human capability,” says Shuichi Nishio, a principal researcher at Advanced Telecommunications Research Institute International in Kyoto, Japan, who codeveloped the technology with his colleague Christian Penaloza, a research scientist at the same institute.

Nishio and Penaloza achieved the feat by developing algorithms that read the brain's electrical activity associated with different actions. When a person thinks about performing a physical task, say, picking up a glass of water, neurons in particular regions of the brain fire, generating a pattern of electrical activity that is characteristic of that type of task. Thinking about a different type of task, like balancing a precarious tray of dishes, generates a different pattern.

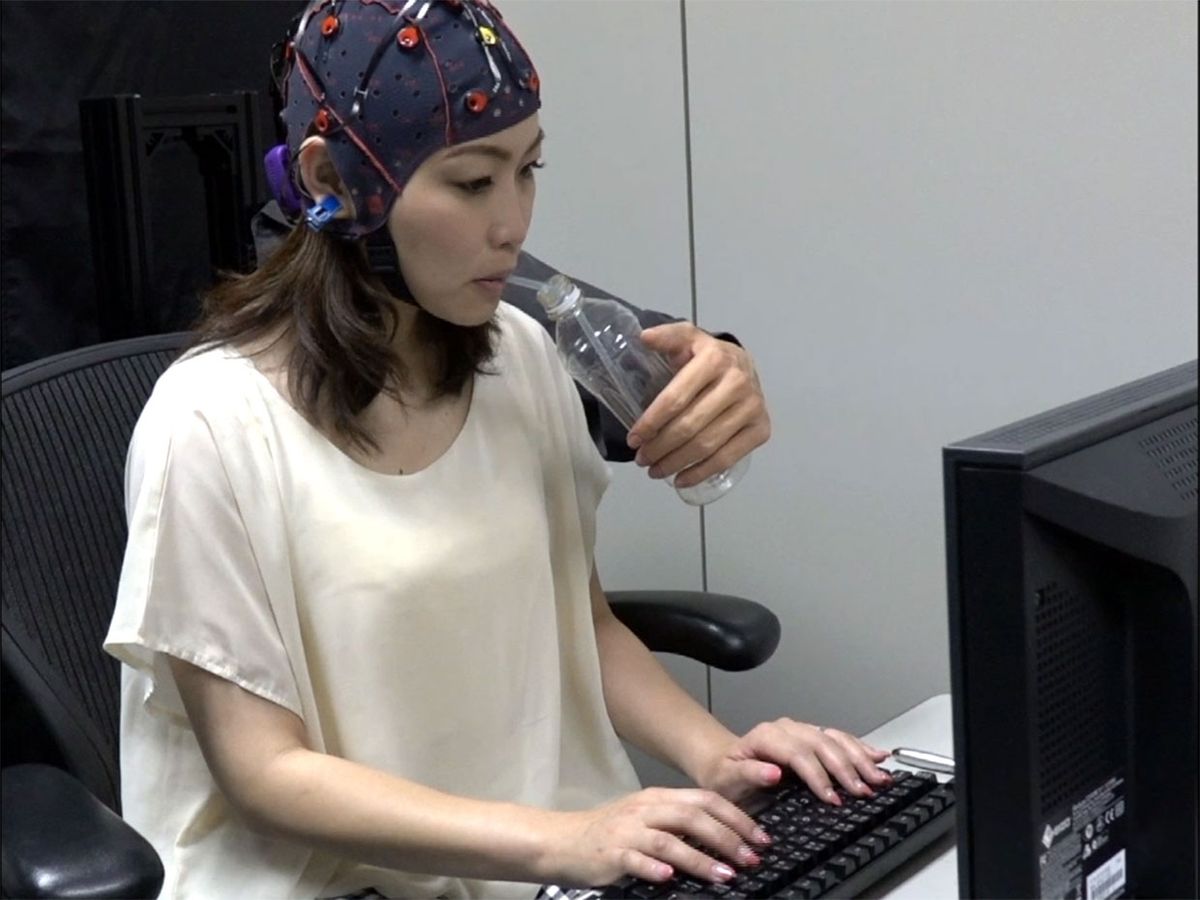

The brain activity associated with those tasks can be recorded noninvasively, using electrodes placed on the scalp. A trained algorithm then interprets the recordings, distinguishing the activity patterns linked to one task from those linked to another, and commands the robotic arm to move according to the user's thoughts. Such systems are generally known as brain-machine interfaces (BMIs).
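For readers curious what "distinguishing one pattern from another" can look like in software, here is a toy sketch. It is not the researchers' algorithm, which the report doesn't detail here; it swaps in a simple nearest-centroid classifier. The feature vectors, labels, and the `arm_command` helper are all hypothetical stand-ins, and real EEG decoders add filtering and much richer feature extraction.

```python
import math
from statistics import mean

# Hypothetical feature vectors: each number stands in for one feature (for
# example, signal power at one scalp electrode) taken from a short window of
# recordings. These values are invented for illustration, not study data.
TRAINING = {
    "balance_only": [[1.0, 0.2, 0.1], [0.9, 0.3, 0.2], [1.1, 0.1, 0.2]],
    "balance_and_grasp": [[0.4, 0.9, 0.8], [0.5, 1.0, 0.7], [0.3, 0.8, 0.9]],
}


def centroid(samples):
    """Average each feature across all training windows for one class."""
    return [mean(column) for column in zip(*samples)]


def train(training):
    """'Training' here just stores one average pattern per class."""
    return {label: centroid(samples) for label, samples in training.items()}


def classify(model, features):
    """Return the label whose average pattern is closest (Euclidean distance)."""
    return min(model, key=lambda label: math.dist(model[label], features))


def arm_command(model, features):
    """Hypothetical arm interface: grasp only when the grasp pattern is detected."""
    return "grasp" if classify(model, features) == "balance_and_grasp" else "idle"


model = train(TRAINING)
print(arm_command(model, [0.45, 0.95, 0.75]))  # grasp
print(arm_command(model, [1.0, 0.15, 0.15]))   # idle
```

The key idea the sketch illustrates is that the arm only acts when the recorded pattern looks more like the "balancing while intending to grasp" pattern than the "balancing alone" pattern, so the user's hands can stay busy with the other task.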

To test the system, Nishio and Penaloza recruited 15 healthy volunteers to have their minds read while they multitasked. Wearing an electrode cap, each participant sat in a chair and used his or her two human hands to balance a ball on a board while the computer recorded their brain activity.

Sitting in the same chair, this time with a connected robotic arm turned on, participants visualized the robotic arm grasping a nearby bottle. The computer recorded their brain activity, detected the intention to grasp the bottle, and had the arm carry out the command.

Then the participants were asked to simultaneously perform both tasks: balancing the ball on the board and mind-commanding the robotic arm. With the computer’s help, the participants successfully performed both tasks about three-quarters of the time, according to today’s report.

Some of the participants were much better at the multitasking portion of the experiment than others. “People were clearly separated, and the good performers were able to multitask 85 percent of the time,” while the poor performers could multitask only about 52 percent of the time, says Penaloza. The lower scores didn’t reflect the accuracy of the BMI system, but rather the performer's skill in switching attention from one task to the other, he says.

It was interesting how quickly the participants learned to perform the two tasks simultaneously, says Nishio; normally that would take many training sessions. He and Penaloza believe that brain-machine interface systems like this one may provide just the right biofeedback to help people learn to multitask better. They are continuing to study the phenomenon in the hope that it can be used therapeutically.

We’ve seen supernumerary limbs and other robotic augmentations before, such as mind-controlled hand exoskeletons for quadriplegic individuals, inertia-controlled dual robotic arms, pain-sensing prosthetics, cyborg athletes, and even a music-mediated drumming arm.

Penaloza and Nishio say theirs is the first mind-controlled robot that can read a multitasking mind. “Usually when you’re controlling something with BMI, the user really needs to concentrate so they can do one single task,” says Penaloza. “In our case it’s two completely different tasks, and that’s what makes it special.”

There’s a clear need to develop these technologies for people with disabilities, but the utility of such systems for able-bodied people isn’t yet clear. Still, the cool factor has researchers and at least one philosopher-artist brainstorming the question: If we can have a third arm, how would we use it?

Emily Waltz is a features editor at Spectrum covering power and energy. Prior to joining the staff in January 2024, Emily spent 18 years as a freelance journalist covering biotechnology, primarily for the Nature research journals and Spectrum. Her work has also appeared in Scientific American, Discover, Outside, and the New York Times. Emily has a master's degree from Columbia University Graduate School of Journalism and an undergraduate degree from Vanderbilt University. With every word she writes, Emily strives to say something true and useful. She posts on Twitter/X @EmWaltz and her portfolio can be found on her website.