In a recent TED talk, Swiss magician Marco Tempest, who's performed tricks using robots, said that "besides the faces and bodies we give our robots, we cannot read their intentions, and that makes us nervous. When someone hands an object to you, you can read intention in their eyes, their face, their body language. That's not true of the robot."

Now Japanese researchers want to change that. They say that a big problem with today's robots is that we don’t know what’s going on inside their heads. Robots that have facial expressions and are capable of gesticulating can help us feel more at ease interacting with them, but the researchers want to go one step further: They want to build robots with some of the same involuntary physiological reactions that we humans have, such as sweating when we feel anxious or getting goosebumps when we're scared.

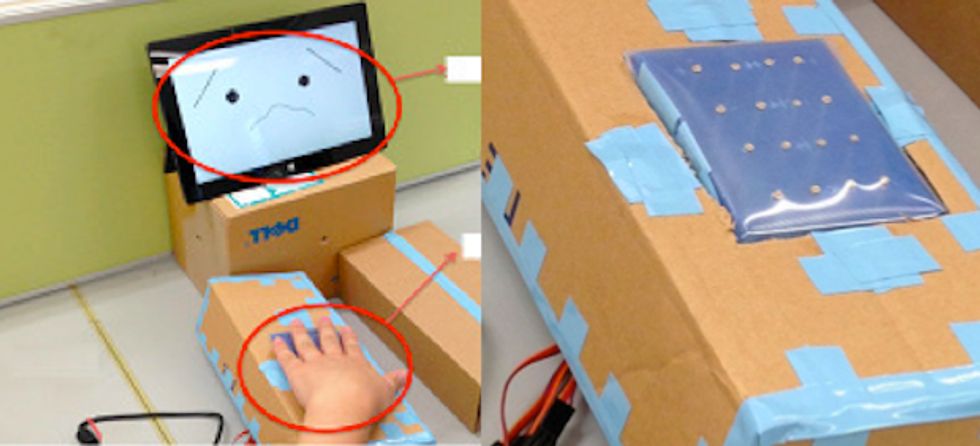

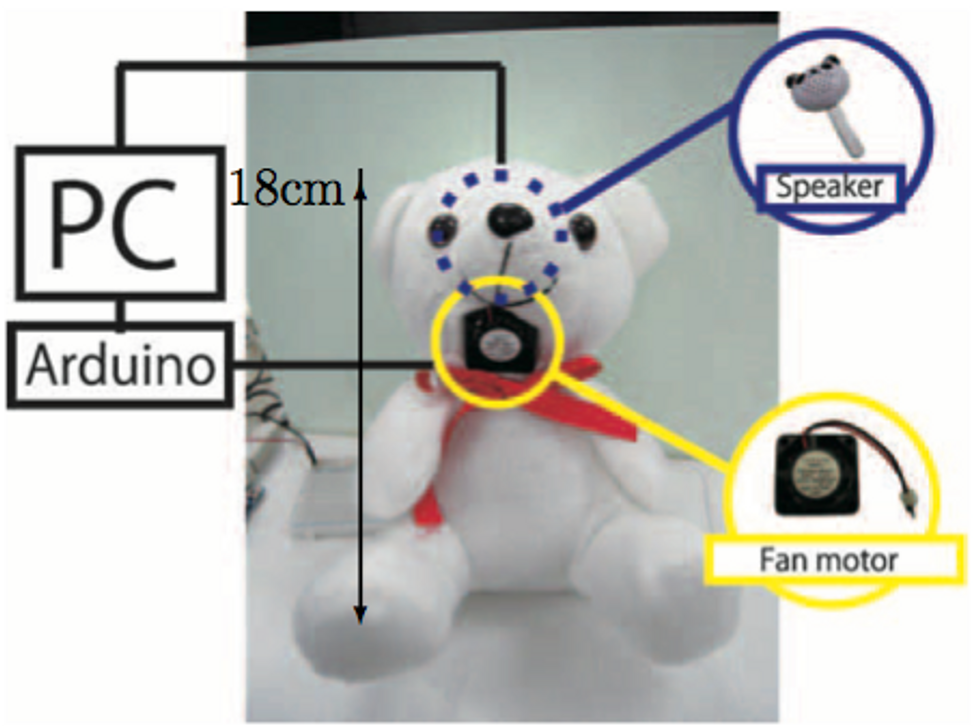

Dr. Tomoko Yonezawa and her group at Kansai University, in Japan, have just started exploring this idea, and at the Human-Robot Interaction conference early this year, they presented some proof-of-concept devices. One prototype [pictured above] gets "goosebumps" when it is exposed to a cool breeze or told a scary story. Another device is a robotic head that can "sweat."

While these prototypes are still in their early stages, the idea of giving robots capabilities that mimic our physical behaviors has long intrigued researchers. Other groups have used sounds and facial expressions to make social robots more expressive, but physiological reactions have been little explored.

You might ask, "Couldn’t a robot just state its intentions and 'feelings' instead of needing this subtle and complex cue system?" According to research at Georgia Tech, we sometimes feel uneasy when a robot explicitly states its intent before an action. It's just... awkward.

What's more, Yonezawa says that "sometimes we make facial expressions that are different from what’s going on in our mind." In contrast, involuntary behaviors reveal our "true feelings," and by giving robots these capabilities, we could feel more at ease with them, because we would be able to read their intentions. Her lab is currently improving its prototypes, including a more integrated and powerful breath generator that she plans to demonstrate next month.

"Breatter: A Simulation of Living Presence with Breath that Corresponds to Utterances," by Yukari Nakatani and Tomoko Yonezawa of Kansai University, and "Involuntary Expression of Embodied Robot Adopting Goose Bumps," by Tomoko Yonezawa, Xiaoshun Meng, Naoto Yoshida, and Yukari Nakatani of Kansai University, were presented as Late-Breaking Reports at the Human-Robot Interaction Conference this past March.