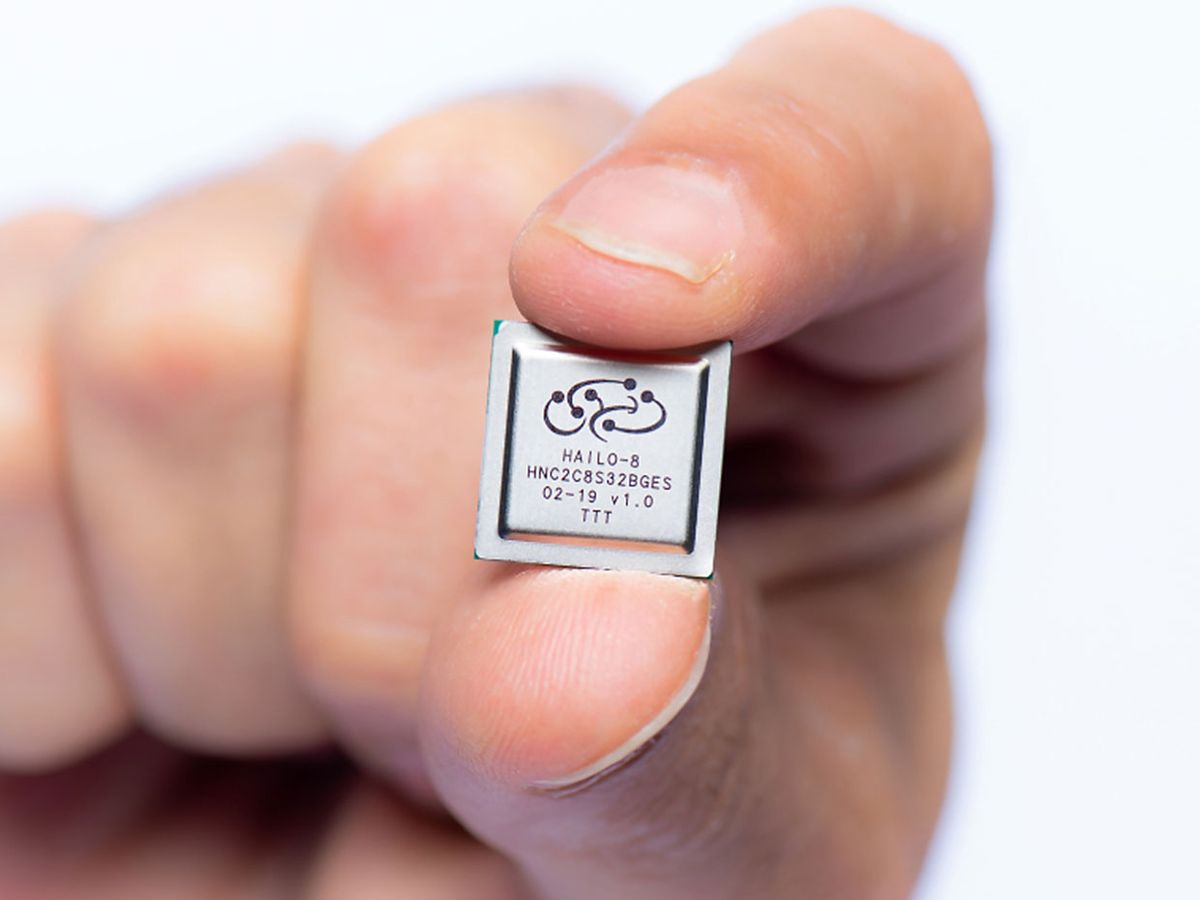

Israeli startup Hailo says it has raised US $60 million in a second round of funding, which it will use to mass-produce its Hailo-8 chip. The Hailo-8 is designed to run deep learning in cars, robots, and other “edge” machines. Such edge chips are meant to reduce the cost, size, and power consumption of using AI to process high-resolution data from sensors such as HD cameras.

The three-year-old company’s technology stemmed from a cold, hard look at what AI needed and what contemporary chips could deliver. “When you do a bottom up analysis of what should be the cost—[in terms of] power to run these applications—we saw an order of magnitude gap between what is possible and what is actually being done,” says Hailo CEO Orr Danon. “The problem lies in the architecture.”

In a typical GPU or CPU, there is a relatively narrow access pathway to memory but a very deep memory structure, Danon explains. But for AI, “what you need is very wide access but shallow address space.” Another problem is that the broad programmability of CPUs and GPUs means that elements devoted to controlling data dominate those devoted to moving it. These architectures are “very flexible and very robust, but that’s irrelevant to running neural networks,” he says.

Hailo calls its solution a structure-defined data flow architecture. Deep learning is called “deep” because its neural networks stack many layers between the input and the output. Hailo’s software tools take a trained neural net and examine how much memory, computing capability, and data flow control each layer needs. The software then maps each layer onto the processor’s resources so that each layer is contiguous with its neighbors.

“You’re not only controlling how the data moves, but also the structure,” says Danon. “Being able to do so keeps the data movement on the device very local.”
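To make the layer-mapping idea concrete, here is a toy sketch in Python. It is not Hailo’s software, whose internals the article does not describe. The `Layer` and `Placement` types, the `map_layers` function, the one-dimensional row of identical compute units, and the rule of sharing spare units in proportion to each layer’s multiply-accumulate count are all hypothetical assumptions chosen for illustration. The sketch shows only the core idea: walk the network in layer order and give each layer its own contiguous block of resources, sized by its memory and compute needs, so that each layer sits next to the layer that feeds it.

```python
from dataclasses import dataclass


@dataclass
class Layer:
    """Hypothetical per-layer requirements produced by analyzing a trained net."""
    name: str
    macs: int        # multiply-accumulates per inference (compute need)
    weight_kb: int   # on-chip memory needed to keep this layer's weights local


@dataclass
class Placement:
    name: str
    first_unit: int  # index of the first compute unit assigned to the layer
    num_units: int   # size of the contiguous block


def map_layers(layers, total_units, kb_per_unit):
    """Give each layer a contiguous block of units, in network order.

    Each layer first gets enough units to hold its weights; any spare units
    are shared out in proportion to each layer's compute need.
    """
    # Ceiling division: minimum units whose local memory fits the weights.
    need = [max(1, -(-layer.weight_kb // kb_per_unit)) for layer in layers]
    spare = total_units - sum(need)
    if spare < 0:
        raise ValueError("network does not fit on this hypothetical device")
    total_macs = sum(layer.macs for layer in layers) or 1  # avoid dividing by zero
    # Floor division keeps the total at or below the spare budget.
    extra = [spare * layer.macs // total_macs for layer in layers]

    placements, cursor = [], 0
    for layer, base, bonus in zip(layers, need, extra):
        count = base + bonus
        placements.append(Placement(layer.name, cursor, count))
        cursor += count  # the next layer starts right where this one ends
    return placements


# Made-up layer sizes for a tiny three-layer network.
net = [
    Layer("conv1", macs=118_000_000, weight_kb=10),
    Layer("conv2", macs=231_000_000, weight_kb=74),
    Layer("fc", macs=2_000_000, weight_kb=2_000),
]
for p in map_layers(net, total_units=64, kb_per_unit=64):
    print(f"{p.name}: units {p.first_unit}-{p.first_unit + p.num_units - 1}")
```

With these made-up sizes, the three layers land on units 0-9, 10-30, and 31-62, each block starting where the previous one ends. A real compiler would also have to weigh data flow control, bandwidth between blocks, and a two-dimensional chip layout, all of which this sketch ignores.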

The result is a chip that can perform 26 trillion operations per second and reach 672 frames per second on a ResNet-50 image classification benchmark, with an efficiency of 3.1 trillion operations per second per watt. (The Hailo-8 can be compared with other AI accelerators on the MLPerf benchmarks.)

The chip is targeted at edge systems, including partially autonomous vehicles, smart cameras, smartphones, drones, and AR/VR gadgets, that need sophisticated deep learning.

The new cash infusion was led by existing investors, but Hailo also won new investment from the venture capital arms of ABB and NEC, and from London VC firm Latitude Ventures.

Samuel K. Moore is the senior editor at IEEE Spectrum in charge of semiconductors coverage. An IEEE member, he has a bachelor's degree in biomedical engineering from Brown University and a master's degree in journalism from New York University.