This article is part of our exclusive IEEE Journal Watch series in partnership with IEEE Xplore.

Humanoid robots are a lot more capable than they used to be, but for most of them, falling over is still borderline catastrophic. Understandably, the focus has been on getting humanoid robots to succeed at things as opposed to getting robots to tolerate (or recover from) failing at things, but sometimes, failure is inevitable because stuff happens that’s outside your control. Earthquakes, accidentally clumsy grad students, tornadoes, deliberately malicious grad students—the list goes on.

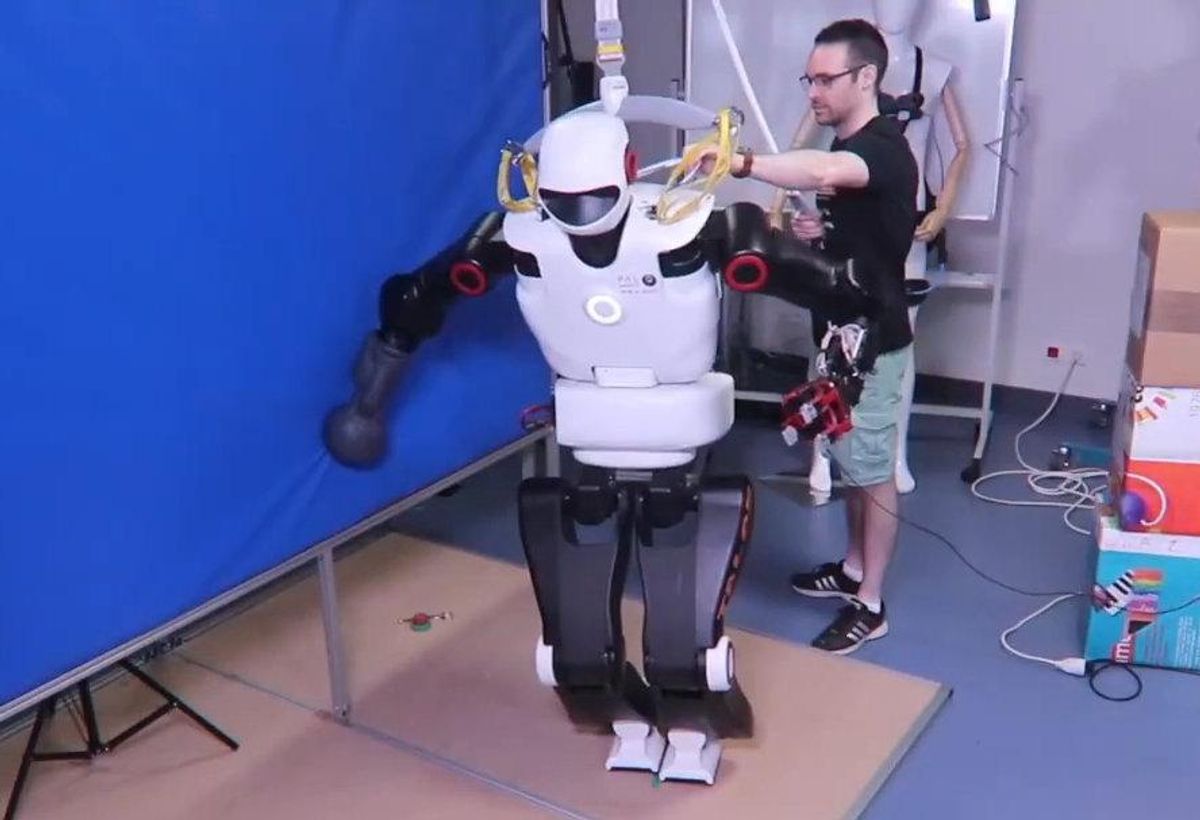

When humans lose their balance, the go-to strategy is a highly effective one: Use whatever happens to be nearby to keep from falling over. While for humans this approach is instinctive, it’s a hard problem for robots, involving perception, semantic understanding, motion planning, and careful force control, all executed under aggressive time constraints. In a paper published earlier this year in IEEE Robotics and Automation Letters, researchers at Inria in France show some early work getting a TALOS humanoid robot to use a nearby wall to successfully keep itself from taking a tumble.

The tricky thing about this technique is how little time a robot has to understand that it’s going to fall, sense its surroundings, make a plan to save itself, and execute that plan before it hits the ground. In this paper, the researchers address most of these challenges. The biggest caveat is probably that they assume the location of the nearby wall is already known, but that’s a relatively straightforward problem to solve if your robot has the right sensors on it.

Once the robot detects that something in its leg has given out, its Damage Reflex (D-Reflex) kicks in. D-Reflex is built around a neural network that was trained in simulation (over a mere 882,000 simulated trials). Given the posture of the robot and the location of the wall as inputs, the network predicts, within just a few milliseconds, how likely a potential wall contact is to stabilize the robot. The system doesn’t need to know anything specific about the robot’s injury, and it works whether the actuator is locked up, moving freely but not controllably, or completely absent (the “amputation” case). Of course, reality rarely matches simulation, and it turns out that a damaged, tipping-over robot doesn’t reliably make contact with the wall exactly where it should. So the researchers had to tweak things to make sure that the robot stops its hand as soon as it touches the wall, whether it’s in the right spot or not. This method worked pretty well: Using D-Reflex, the TALOS robot avoided falling in three out of four trials where it would otherwise have fallen. Considering how expensive robots like TALOS are, this is a pretty great result, if you ask me.
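To make that two-step idea a little more concrete, here is a minimal Python sketch of the general pattern: score each candidate wall contact with a success predictor, keep the best one only if it clears a threshold, then move the hand toward it and stop the moment a contact force is sensed. Everything here is an assumption for illustration. `stand_in_model` is a hypothetical hand-written logistic score, not the trained D-Reflex network, and the assumption that the robot compares a discrete set of candidate contacts, the helper names `choose_contact` and `reach_until_contact`, the 0.5 probability cutoff, and the 20 N force threshold are all made up rather than taken from the paper.

```python
import math
from typing import Callable, Optional, Sequence, Tuple

Contact = Tuple[float, float]        # hypothetical (y, z) hand target on the wall plane, meters
Point3 = Tuple[float, float, float]  # (x, y, z) hand position, meters; the wall lies along +x


def stand_in_model(posture: Sequence[float], wall_distance: float, contact: Contact) -> float:
    """HYPOTHETICAL stand-in for the trained network (NOT the D-Reflex model).

    Returns a logistic "probability of staying upright" that simply prefers
    contacts near an assumed shoulder height of 1.2 m and closer walls.
    This toy version ignores the posture argument entirely.
    """
    _, z = contact
    score = 2.0 - 3.0 * abs(z - 1.2) - 2.0 * wall_distance
    return 1.0 / (1.0 + math.exp(-score))


def choose_contact(
    posture: Sequence[float],
    wall_distance: float,
    candidates: Sequence[Contact],
    model: Callable[[Sequence[float], float, Contact], float],
    min_probability: float = 0.5,  # hypothetical cutoff
) -> Tuple[Optional[Contact], float]:
    """Score every candidate contact; return the best one, or None if none looks promising."""
    best_contact: Optional[Contact] = None
    best_p = 0.0
    for contact in candidates:
        p = model(posture, wall_distance, contact)
        if p > best_p:
            best_contact, best_p = contact, p
    if best_p < min_probability:
        return None, best_p  # no contact looks likely to prevent the fall
    return best_contact, best_p


def reach_until_contact(
    start: Point3,
    target: Point3,
    measured_force: Callable[[Point3], float],  # hypothetical force-sensor reading
    force_threshold: float = 20.0,              # hypothetical threshold, newtons
    steps: int = 50,
) -> Tuple[Point3, bool]:
    """Move the hand along a straight line and stop as soon as contact force is sensed.

    Returns the stopping point and whether contact happened, even if the wall
    turns out to be somewhere other than the planned target.
    """
    for i in range(1, steps + 1):
        alpha = i / steps
        point = tuple(s + alpha * (t - s) for s, t in zip(start, target))
        if measured_force(point) >= force_threshold:
            return point, True  # stop immediately, wherever the wall actually is
    return target, False


if __name__ == "__main__":
    posture = [0.0] * 32  # hypothetical joint angles; ignored by the stand-in model
    candidates = [(0.3, 0.8), (0.3, 1.2), (0.3, 1.6)]
    contact, p = choose_contact(posture, 0.5, candidates, stand_in_model)
    print(contact, round(p, 3))  # (0.3, 1.2) 0.731

    # Simulated mismatch: the planned target is at x = 0.6 m, but the wall is really at 0.55 m.
    def fake_force(q: Point3) -> float:
        return 50.0 if q[0] >= 0.55 else 0.0

    stop, touched = reach_until_contact((0.0, 0.3, 1.2), (0.6, 0.3, 1.2), fake_force)
    print(touched, round(stop[0], 3))  # True 0.552
```

The second function mirrors the fix described above in the simplest possible way: rather than trusting the planned contact point, the hand stops on the first sensed contact, so hitting the wall early still counts as a success.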

The obvious question at this point is, “Okay, now what?” Well, that’s beyond the scope of this research, but generally “now what” consists of one of two things. Either the robot falls anyway, which can definitely happen even with this method because some configurations of robot and wall are simply not avoidable, or the robot doesn’t fall and you end up with a slightly busted robot leaning precariously against a wall. In either case, though, there are options. We’ve seen a bunch of complementary work on surviving falls with humanoid robots in one way or another. And in fact one of the authors of this paper, Jean-Baptiste Mouret, has already published some very cool research on injury adaptation for legged robots.

In the future, the researchers hope to extend this approach to robots that are moving dynamically, which is going to be a lot more challenging but potentially a lot more useful.

First, do not fall: learning to exploit a wall with a damaged humanoid robot, by Timothee Anne, Eloïse Dalin, Ivan Bergonzani, Serena Ivaldi, and Jean-Baptiste Mouret from Inria, is published in IEEE Robotics and Automation Letters.


Evan Ackerman is a senior editor at IEEE Spectrum. Since 2007, he has written over 6,000 articles on robotics and technology. He has a degree in Martian geology and is excellent at playing bagpipes.