Humans may not use all of the brain's computing capacity, but they do use most of the cranium for computing, according to biophysicist Bruno Michel at IBM's Zurich research laboratory. Co-located cortices and capillaries keep our neurons powered and cooled with minimal fuss. Yet today's computers dedicate around 60 percent of their volume to getting electricity in and heat out compared to perhaps 1 percent in a human brain, Michel estimates. Last week in Zurich, he told journalists about IBM's long-term plan to help computers achieve human-like space and energy efficiency. The tool: a kind of electronic blood.

Liquid cooling has been on the market since Cray tried it on for size in 1985. The technique hit consumer computers in the 1990s, though it remains a custom, quirky, or high-end option. During the era of Moore's Law, the easiest way to bring down the price of computing has been to fit more transistors on a chip. But a lesser-known limit lurked in the background: Rent's Rule, which describes a power-law relationship between the number of logic gates in a block of circuitry and the number of external connections that block needs to communicate with the rest of the system. In other words, packing in more logic requires packing in more communication, and more communication means more energy in and more heat out.
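
Rent's Rule is usually written as T = t · g^p, where g is the number of gates in a block, T is the number of external terminals (connections) the block needs, t is the average number of terminals per gate, and p is a design-dependent Rent exponent between 0 and 1. The minimal Python sketch below shows how the required connections grow as logic is added; the values t = 4 and p = 0.6 are illustrative assumptions, not measurements of any IBM chip.

```python
def rent_terminals(gates: float, t: float = 4.0, p: float = 0.6) -> float:
    """Estimate external terminals T for a block of `gates` logic gates
    using Rent's Rule, T = t * g**p.

    t (average terminals per gate) and p (Rent exponent) are illustrative
    assumptions; real values depend on the design.
    """
    return t * gates ** p


# Doubling the amount of logic multiplies the required connections by 2**p.
small = rent_terminals(1_000_000)
large = rent_terminals(2_000_000)
ratio = large / small
print(f"{small:,.0f} -> {large:,.0f} terminals (x{ratio:.2f})")
```

Because the growth factor depends only on p, every doubling of logic under these assumptions demands roughly 50 percent more connections, each of which costs energy and produces heat.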

Michel describes that as the bottleneck for supercomputing: "In the future we are worried about the energy bill and not about the purchase cost of a computer. So we want to squeeze as much as we can out of a watt or joule and not out of a silicon chip," he says.

Today's computer chips get their electric current from tiny copper wires and dissipate heat into surrounding air that bulky and energy-hungry air conditioners must whisk away. The need for continuous air flow means chip designers have stuck to a more or less flat design. Water's much higher heat-carrying capacity makes it possible to shunt heat away from chip components that are much, much closer together. The upshot: they could be stacked on top of one another in a three-dimensional arrangement much like the brain's. That would give the chip shorter communication distances, too, further reducing the amount of heat generated.
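
To put "much higher heat-carrying capacity" in numbers, the rough Python sketch below compares how much heat a cubic metre of water and of air absorbs per kelvin of temperature rise, then estimates the flow each would need to carry away 1 kilowatt with a 10-kelvin coolant temperature rise. The property values are approximate textbook figures near room temperature, and the 1 kW load and 10 K rise are assumptions chosen only for illustration.

```python
# Approximate textbook properties near room temperature (assumed values).
WATER_CP = 4186.0   # specific heat of water, J/(kg*K)
WATER_RHO = 998.0   # density of water, kg/m^3
AIR_CP = 1005.0     # specific heat of air, J/(kg*K)
AIR_RHO = 1.2       # density of air, kg/m^3


def volumetric_heat_capacity(cp: float, rho: float) -> float:
    """Heat absorbed per cubic metre per kelvin of temperature rise, J/(m^3*K)."""
    return cp * rho


def flow_to_remove(power_w: float, delta_t_k: float, vol_heat_cap: float) -> float:
    """Volume flow (m^3/s) needed to carry away power_w watts
    when the coolant warms by delta_t_k kelvin."""
    return power_w / (vol_heat_cap * delta_t_k)


water = volumetric_heat_capacity(WATER_CP, WATER_RHO)
air = volumetric_heat_capacity(AIR_CP, AIR_RHO)
ratio = water / air
print(f"water carries ~{ratio:,.0f}x more heat per unit volume than air")

# Illustrative load: 1 kW removed with a 10 K coolant temperature rise.
water_flow = flow_to_remove(1000.0, 10.0, water)  # about 0.024 litres per second
air_flow = flow_to_remove(1000.0, 10.0, air)      # about 83 litres per second
```

Under these assumptions a trickle of water does the work of a large stream of air, which is why fluid channels can sit between tightly packed, stacked chip layers where air ducts could not.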

Cool. But not that cool. IBM's SuperMUC cools its chips with water at 45 degrees Celsius. You wouldn't want to spill any on your hand, but it's still cool enough to bring down a computer chip's temperature. Michel says that the hot water from a water-cooled 10-megawatt data center could heat 700 homes via district heating, which is common in Europe. IBM set up a first working example of a heat-recycling liquid-cooled supercomputer, called Aquasar, at the Swiss Federal Institute of Technology Zurich (ETH). If it works on a larger scale, it would save energy in two places: less energy would be needed to cool the computer in the first place, and some of the energy spent could be reused to heat homes.
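
Michel's district-heating figure implies a rough heat supply per home. The Python sketch below divides the data center's power by the number of homes; the recovery fraction is a hypothetical parameter, since the article does not say how much of the 10 megawatts would actually reach the heating network.

```python
def heat_per_home_kw(power_w: float, homes: int, recovery_fraction: float = 1.0) -> float:
    """Average heat delivered per home in kW, assuming a (hypothetical)
    fraction of the data center's power is recovered as usable hot water."""
    if not 0.0 <= recovery_fraction <= 1.0:
        raise ValueError("recovery_fraction must be between 0 and 1")
    return power_w * recovery_fraction / homes / 1e3


DATA_CENTER_W = 10e6  # 10-megawatt data center, from the article
HOMES = 700           # homes heated, from the article

best_case = heat_per_home_kw(DATA_CENTER_W, HOMES)            # all heat recovered
partial = heat_per_home_kw(DATA_CENTER_W, HOMES, 0.8)         # assumed 80 percent
print(f"{best_case:.1f} kW per home if all heat is recovered, {partial:.1f} kW at 80%")
```

Taking the quoted figures at face value, that works out to roughly 14 kW of heat per home if every watt were recovered, and proportionally less for any lower recovery fraction.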

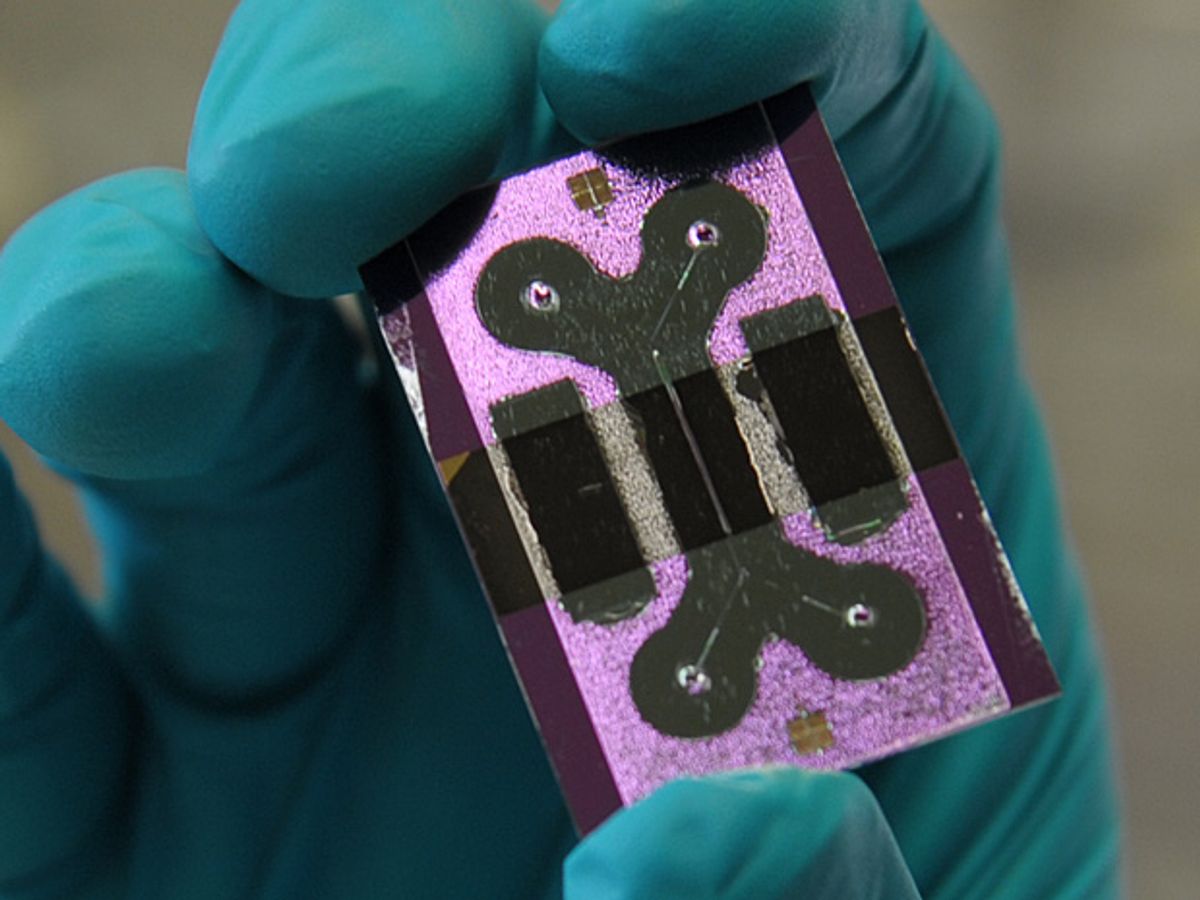

Getting electricity in via a liquid is more complicated. Patrick Ruch, a colleague of Michel's at IBM in Zurich, and others are examining vanadium as a potential electrolyte for a redox flow battery. Redox flow batteries are more often used to store energy from variable power sources such as solar panels and wind turbines, thanks to their low cost and scalability, but they have a low energy density compared with some other batteries. Ruch, who began the project this year, has his work cut out for him. He says, "The goal is to be able to provide a substantial amount of the power that the microprocessor needs by this electrochemical solution."

Photo: IBM Research

Lucas Laursen is a journalist covering global development by way of science and technology with special interest in energy and agriculture. He has lived in and reported from the United States, United Kingdom, Switzerland, and Mexico.