How to Catch Brain Waves in a Net

A mesh of electrodes draped over the cortex could be the future of brain-machine interfaces

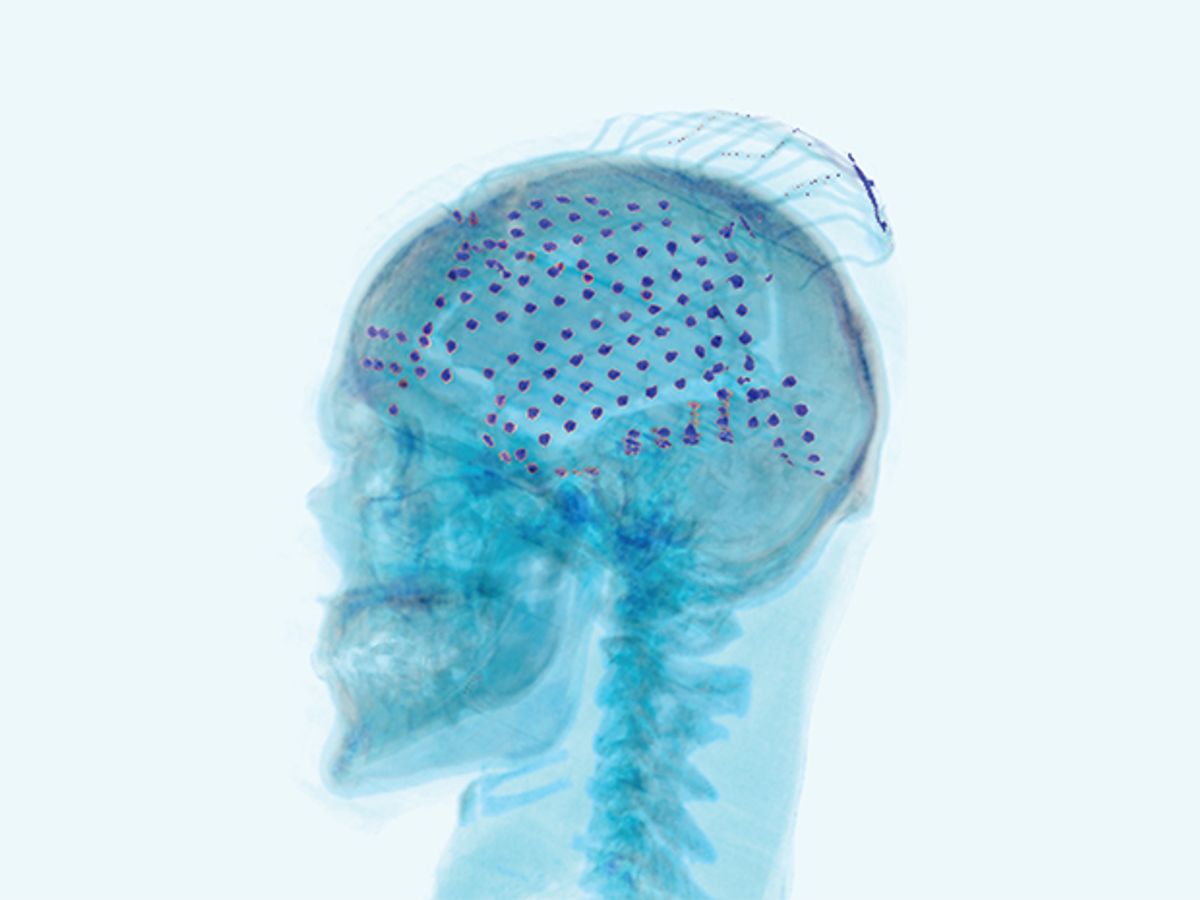

This photo-illustration shows the electrodes placed over the surface of a patient’s brain to record an electrocorticogram (ECoG).

Last year, an epilepsy patient awaiting brain surgery at the renowned Johns Hopkins Hospital occupied her time with an unusual activity. While doctors and neuroscientists clustered around, she repeatedly reached toward a video screen, which showed a small orange ball on a table. As she extended her hand, a robotic arm across the room also reached forward and grasped the actual orange ball on the actual table. In terms of robotics, this was nothing fancy. What made the accomplishment remarkable was that the woman was controlling the mechanical limb with her brain waves.

The experiment in that Baltimore hospital room demonstrated a new approach in brain-machine interfaces (BMIs), which measure electrical activity from the brain and use the signal to control something. BMIs come in many shapes and sizes, but they all work fundamentally the same way: They detect the tiny voltage changes in the brain that occur when neurons fire to trigger a thought or an action, and they translate those signals into digital information that is conveyed to the machine.

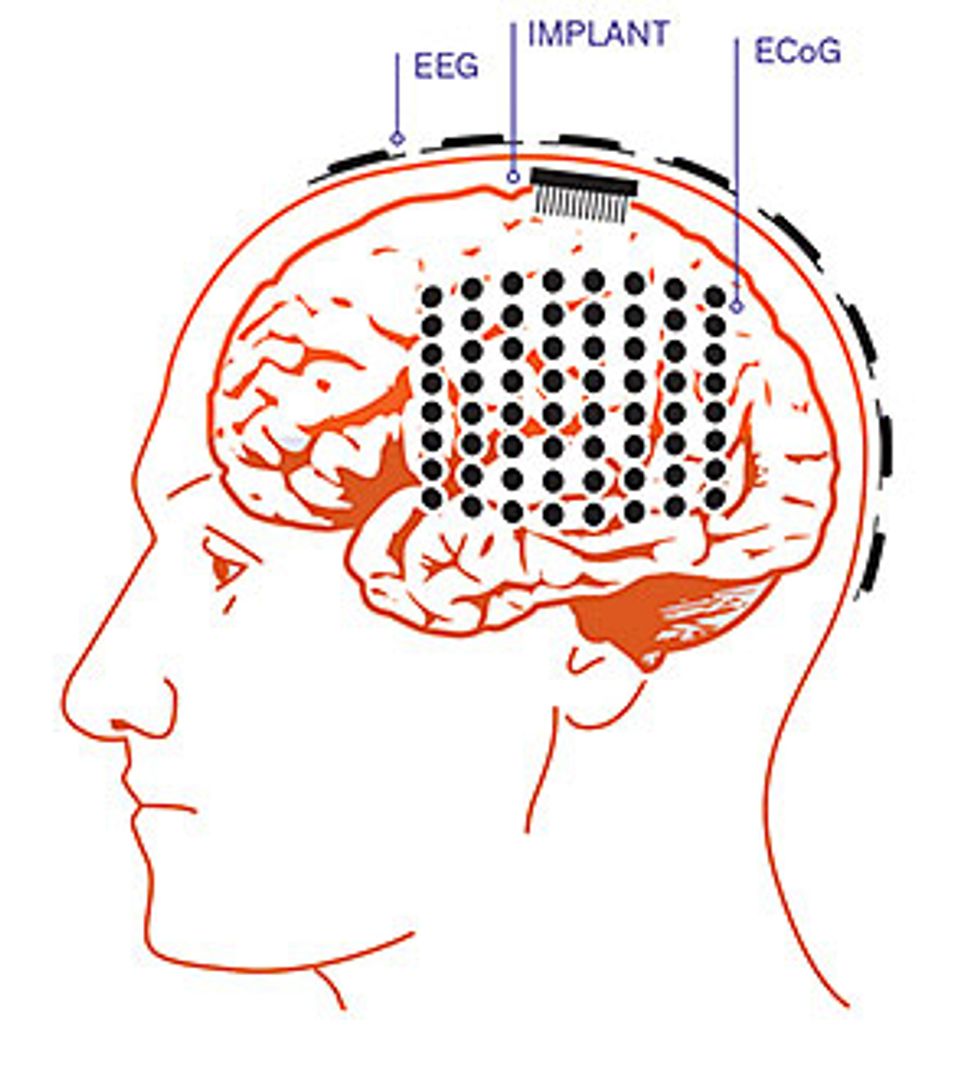

To sense what’s going on in the brain, some systems use electrodes that are simply attached to the scalp to record the electroencephalographic signal. These EEG systems record from broad swaths of the brain, and the signal is hard to decipher. Other BMIs require surgically implanted electrodes that penetrate the cerebral cortex to capture the activity of individual neurons. These invasive systems provide much clearer signals, but they are obviously warranted only in extreme situations where doctors need precise information. The patient in the hospital room that day was demonstrating a third strategy that offers a compromise between those two methods. The gear in her head provided good signal quality at a lower risk by contacting—but not penetrating—the brain tissue.

The patient had a mesh of electrodes inserted beneath her skull and draped over the surface of her brain. These electrodes produced an electrocorticogram (ECoG), a record of her brain’s activity. The doctors hadn’t placed those electrodes over her cerebral cortex just to experiment with robotic arms and balls, of course. They were trying to address her recurrent epileptic seizures, which hadn’t been quelled by medication. Her physicians were preparing for a last-resort treatment: surgically removing the patch of brain tissue that was causing her seizures.

Seizures result from abnormal patterns of activity in a faulty part of the brain. If doctors can precisely locate the place where those patterns originate, they can remove the responsible brain tissue and bring the seizures under control. To prepare for this woman’s surgery, doctors had cut through her scalp, her skull, and the tough membrane called the dura mater to insert a flexible grid of electrodes on the surface of her brain. By recording the electrical activity those electrodes registered over several days, the neurologists would identify the trouble spot.

The woman went on to have a successful surgery. But before the procedure, science received a valuable bonus: the opportunity to record neural activity while the patient was conscious and under observation. Working with my collaborator at the Johns Hopkins University School of Medicine, Nathan Crone, my team of biomedical engineers has done this about a dozen times in the past few years. These recordings are increasingly being used to probe human brain function and are producing some of the most exciting data in neuroscience.

As a patient moves or speaks under carefully controlled conditions, we record the ECoG signals and learn how the brain encodes intentions and thoughts. Now we are beginning to use those signals to control computers, robots, and prostheses. The woman in the hospital room didn’t need any mind-controlled mechanical devices herself, but she was helping us develop technology that could one day allow paralyzed patients to control robotic limbs of their own.

The machine at the end of a brain-machine interface could be anything: Over the past few decades, researchers have experimented with using neural signals to control a computer cursor, a wheelchair, and even a car. The dream of building a brain-controlled prosthetic limb, however, has received particular attention.

In 2006 the U.S. Defense Advanced Research Projects Agency (DARPA) bankrolled a massive effort to build a cutting-edge prosthetic arm and to control it with brain signals. In the first phase of this Revolutionizing Prosthetics program, the Johns Hopkins Applied Physics Laboratory developed a remarkable piece of machinery called the Modular Prosthetic Limb, which boasts 26 degrees of freedom through its versatile shoulder, elbow, wrist, and fingers.

To give amputees control of the mechanical arm, researchers first tried out existing systems that register the electrical activity in the muscles of the limb stump and transmit those signals to the prosthesis. But such systems offer very limited control, and amputees don’t find them intuitive to use. So DARPA issued its next Revolutionizing Prosthetics challenge in 2009, asking researchers to control the state-of-the-art prosthetic arm directly with signals from the brain.

Several investigators answered that call by using brain implants with penetrating electrodes. At Duke University, in Durham, N.C., and the University of Pittsburgh, researchers had already placed microelectrodes in the brains of monkeys, using the resulting signals to make a robotic arm reach and grasp. Neuroscientists at Brown University, in Providence, R.I., had implanted similar microelectrodes in the cortex of a paralyzed man and showed that he could control a computer cursor using neural signals. Another paralyzed patient who underwent this procedure at Brown recently controlled a robotic arm: She used it to raise a bottle to her lips to take a drink, performing her first independent action in 14 years.

That work certainly demonstrated the feasibility of building a “neural prosthesis.” But using penetrating electrodes poses significant challenges. Scar tissue builds up around the electrodes and can reduce signal quality over time. Also, the hardware, including electrode arrays and low-power transmitters that send the signal out through the skull, must operate reliably for many years. Finally, these first demonstrations did not produce smooth, quick, or dexterous movements. Some neuroscientists suggested that many more electrodes should be implanted—but doing so would heighten the risk of damaging brain tissue.

In light of those concerns, the United States’ National Institutes of Health challenged researchers to build a neural prosthesis with a less invasive control mechanism. The ideal would be a system based on EEG signals, simply using electrodes attached to the scalp. Unfortunately, the brain signals that external electrodes pick up are blurred and attenuated by their passage through the skull and scalp. This led our team to investigate the middle road: the use of ECoG signals.

ECoG systems provide a better signal-to-noise ratio than EEG, and the data includes high-frequency components that EEG can’t easily capture. ECoG systems also do a better job of extracting the most useful information from the brain, as an electrode placed over the motor cortex can specifically listen in on the electrical activity most relevant for controlling a prosthetic arm. Similarly, electrodes draped over the brain areas associated with speech can capture signals associated with verbal communication.

Raw ECoG signals appear to be a confused mess of squiggly lines with little discernible pattern. To make sense of the data, our team performs a spectral analysis to deconstruct the signal and find oscillations at certain specific frequencies. These are the brain waves you may have heard about. Neuroscientists have learned that different oscillation frequencies are associated with specific mental states, such as deep sleep, focused attention, or meditative contemplation.
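To make the idea of spectral analysis concrete, here is a minimal sketch that measures how much power a signal carries in a chosen frequency band. It is an illustration under stated assumptions, not the team's actual pipeline: the `band_power` helper, the 500-Hz sampling rate, the 20-to-40-Hz control band, and the synthetic two-tone "recording" are all hypothetical, and a direct Fourier transform stands in for whatever spectral estimator a real lab would use. The two bands of interest (10 to 13 Hz and 70 to 150 Hz) are the ones named elsewhere in this article.

```python
import cmath
import math


def band_power(samples, fs, f_lo, f_hi):
    """Power of `samples` inside [f_lo, f_hi] Hz, from a direct DFT.

    Assumes the band lies strictly between 0 Hz and the Nyquist frequency.
    """
    n = len(samples)
    mean = sum(samples) / n
    centered = [s - mean for s in samples]   # remove any DC offset
    k_lo = math.ceil(f_lo * n / fs)          # first DFT bin inside the band
    k_hi = math.floor(f_hi * n / fs)         # last DFT bin inside the band
    total = 0.0
    for k in range(k_lo, k_hi + 1):
        coeff = sum(x * cmath.exp(-2j * math.pi * k * i / n)
                    for i, x in enumerate(centered))
        total += abs(coeff) ** 2
    # Factor of 2 folds in the mirrored negative-frequency bins.
    return 2.0 * total / (n * n)


FS = 500   # hypothetical sampling rate in Hz
N = 500    # one second of data, so each DFT bin is 1 Hz wide

# Synthetic stand-in for a recording: a strong 10-Hz rhythm plus a weak 100-Hz one.
signal = [2.0 * math.sin(2 * math.pi * 10 * i / FS)
          + 0.5 * math.sin(2 * math.pi * 100 * i / FS)
          for i in range(N)]

mu_power = band_power(signal, FS, 10, 13)
control_power = band_power(signal, FS, 20, 40)
high_gamma_power = band_power(signal, FS, 70, 150)

print("mu band power:        ", round(mu_power, 4))
print("control band power:   ", round(control_power, 4))
print("high gamma band power:", round(high_gamma_power, 4))
```

A sine wave of amplitude A carries power A²/2, so the 10-Hz component should show up as a power of about 2.0 in the mu band and the 100-Hz component as about 0.125 in the high gamma band, while the control band stays near zero. Real recordings are far noisier, and practical systems typically average over many short windows.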

Just imagine what neural prostheses could do for people who are severely paralyzed or for patients in the late stages of amyotrophic lateral sclerosis (also known as Lou Gehrig’s disease). These patients are essentially “locked in,” with intact brains but no ability to control their bodies, or even to speak. Could their intentions, reflected in ECoG signals, be captured and relayed to robotic limbs?

Here’s a simple example of how an ECoG-based neural prosthesis could work. Researchers have previously shown that an imagined movement modulates the brain’s electrical activity in the mu band, which spans frequencies of about 10 to 13 hertz. Thus, the paralyzed subject would imagine moving a limb, electrodes would capture the mu-band activity, and the BMI would use the signal to trigger an action, such as closing a robotic hand. Since we can use up to 64 electrodes placed about a centimeter apart and spread over a wide area of the brain, we have a lot of data to work with. As we develop better algorithms that can identify the key signals that code for movement, we can build systems that don’t just trigger an action but offer more fine-tuned control.
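The trigger logic in that example can be sketched in a few lines. The version below is a hypothetical illustration, not the method used in our experiments. It assumes that the mu-band power has already been computed for a series of short time windows, that imagined movement shows up as a drop in that power relative to a resting baseline, and that a drop must persist for several windows before it counts. The function name `detect_triggers`, the 50 percent drop ratio, and the three-window requirement are all invented for the example.

```python
def detect_triggers(mu_power, baseline, drop_ratio=0.5, min_windows=3):
    """Return the window indices at which a trigger would fire.

    A trigger fires once mu-band power has stayed below
    drop_ratio * baseline for min_windows consecutive windows.
    The detector re-arms only after power rises back above that level,
    so one sustained drop produces exactly one trigger.
    """
    threshold = drop_ratio * baseline
    triggers = []
    run = 0          # consecutive windows below the threshold
    armed = True     # False while waiting for power to recover
    for i, power in enumerate(mu_power):
        if power < threshold:
            run += 1
            if armed and run >= min_windows:
                triggers.append(i)
                armed = False
        else:
            run = 0
            armed = True
    return triggers


# Made-up per-window mu power, with a resting baseline of 1.0.
windows = [1.0, 0.9, 0.4, 1.1, 0.4, 0.3, 0.2, 0.3, 1.0, 0.4, 0.4, 0.4]
fired_at = detect_triggers(windows, baseline=1.0)
print(fired_at)  # → [6, 11]
```

The brief dip at window 2 is ignored because it does not last, which is the point of requiring several consecutive windows: a single noisy reading should not close a robotic hand.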

Our team took the first step toward building such a system in 2011, when we painstakingly matched up brain signals with particular movements. Our subject was a 12-year-old boy awaiting surgery for his seizure disorder. In the experiment, the boy reached forward and grasped one of the wooden blocks set before him, then released it and withdrew his hand. When we looked at the data from just a few electrodes that had been placed over his brain’s sensorimotor cortex, we discovered that oscillations in the high gamma band, with frequencies between 70 and 150 Hz, correlated well with the boy’s actions. We also found that the signal in a lower frequency band changed in predictable ways when he wiggled his fingers.
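One simple way to quantify "correlated well" is a Pearson correlation between the high gamma power in each time window and a label marking whether the subject was moving in that window. The sketch below shows the calculation on invented numbers. The data, the variable names, and the choice of Pearson correlation are assumptions made for illustration and do not reproduce the analysis from the 2011 study.

```python
import math


def pearson(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    spread_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    spread_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (spread_x * spread_y)


# Invented per-window high gamma power from one electrode (arbitrary units).
high_gamma = [1.0, 1.1, 0.9, 3.0, 3.2, 2.9, 1.0, 1.2]
# 1 where the subject was reaching or grasping in that window, 0 at rest.
moving = [0, 0, 0, 1, 1, 1, 0, 0]

r = pearson(high_gamma, moving)
print("correlation:", round(r, 3))
```

A value near 1 means the power rises whenever the hand moves. An electrode far from the sensorimotor cortex would be expected to give a value much closer to zero, which is one way to pick out the few electrodes that matter.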

The next step was to couple the electrical signal to the machinery. We demonstrated that under carefully controlled conditions, epileptic patients with ECoG electrodes placed on their brains could indeed command the Modular Prosthetic Limb to perform simple actions, like reaching and grasping. While this was a considerable accomplishment, we struggled to decode the neural signals reliably and to get the prosthetic limb to move smoothly.

In the end, we decided that it just may not be realistic to expect an ECoG-controlled prosthesis to perform an entirely natural limb movement, such as picking up a pot of coffee and pouring some into a cup. After all, a typical person uses a combination of vision, touch, motor control, and cognitive processing to perform this mundane action. So last year our team began exploring another strategy. We built a hybrid BMI that combines brain signals with input from other sensors to help accomplish the task at hand.

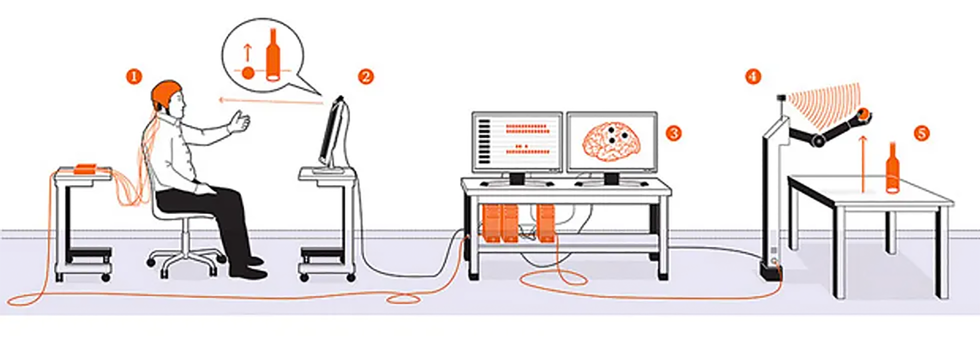

Several epileptic volunteers with ECoG arrays tested our novel system. The first, the woman described at the beginning of this article, focused her eyes on the image of a ball on a computer screen; the computer was streaming video from a setup across the room. An eye-tracking system recorded the direction of her gaze to locate the object she wanted to manipulate. Then, as she reached toward the screen, her ECoG electrodes recorded neural signals associated with that action. All this information was relayed to a robotic arm across the room, which was equipped with a Microsoft Kinect to help it recognize objects in three-dimensional space. When the arm received the signal to reach for the ball, its path-planning software calculated the necessary movements, orientations, and grasp configuration to smoothly pick up the ball and drop it in a trash can. The results were encouraging: In 20 out of 28 trials, this woman’s brain signals successfully triggered the robotic arm, which then completed the entire task.
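With only 28 trials, the success rate carries a fair amount of statistical uncertainty. As a rough check, the sketch below computes the rate and a 95 percent Wilson score interval for it. The interval is an addition made here for illustration and was not part of the reported results; it also assumes the trials were independent, which may not hold for a single subject in a single session.

```python
import math


def wilson_interval(successes, trials, z=1.96):
    """Wilson score interval for a binomial proportion (z=1.96 gives ~95%)."""
    p = successes / trials
    denom = 1.0 + z * z / trials
    center = (p + z * z / (2 * trials)) / denom
    half_width = (z / denom) * math.sqrt(
        p * (1 - p) / trials + z * z / (4 * trials * trials)
    )
    return center - half_width, center + half_width


rate = 20 / 28
low, high = wilson_interval(20, 28)
print(f"success rate: {rate:.1%}")
print(f"95% interval: {low:.1%} to {high:.1%}")
```

The rate works out to about 71 percent, with an interval running from roughly 53 percent to 85 percent. That is well above what one would expect from a system that fails most of the time, but it leaves plenty of room for improvement.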

Would this patient have done even better if we’d implanted electrodes in her brain rather than just draping the electrodes over the surface? Perhaps, but at a greater risk of brain trauma. Also, penetrating electrodes register only the local activity of individual cells or small clusters of neurons, whereas ECoG electrodes pick up activity across broader zones. ECoG systems may therefore be able to capture a richer picture of the brain activity taking place during the planning and execution of an action.

ECoG systems also hold the promise of being able to convey both motor and sensory signals. If a prosthetic limb has sensors that register when it touches an object, it could in principle send that sensory feedback to a patient by stimulating the brain through the ECoG electrodes. Similar stimulation is already done routinely in patients prior to epilepsy surgery in order to map the brain regions responsible for sensation. However, the sensations elicited have typically been very crude. In the future, more refined brain stimulation, using smaller electrodes and more precise activation patterns, may be able to better simulate tactile feedback. The goal is to use two-way communication between brain and prosthesis to help a user deftly control the limb.

While it might be tempting to test ECoG systems with severely paralyzed patients—the intended beneficiaries of this neuroprosthetic research—it is imperative to demonstrate that such systems can reliably restore meaningful function before exposing patients to the risks of surgery. For this reason, the clinical circumstances of patients preparing for epilepsy surgery represent an important opportunity to develop technology that will benefit a very different group of patients. We’ve found that many epilepsy patients are glad they can help others while they’re hospital-bound and under observation for the seizures that will provide guidance to their surgeons. Our hope is that these experiments will lead to a technology so clearly useful that we will feel well justified in trying it in paralyzed patients.

Thinking about the cost-benefit concerns gives a sense of déjà vu. I came to Johns Hopkins in the early 1980s, when doctors there had just implanted the first heart defibrillator in a patient. All the same doubts were aired. Was the technology too invasive? Would it be reliable? Would it provide enough benefit to justify the expense? But defibrillators rapidly proved their worth, and today more than 100,000 are implanted every year in the United States alone. The medical community may be nearing the same juncture with brain-machine interfaces, which might well be an accepted part of clinical medicine in just a couple of decades.

So far I’ve discussed the possibility of using ECoG signals to control prosthetic limbs, but there’s another fascinating possibility: Capturing these signals could also help people who have lost the ability to speak. For some people who have suffered a stroke or injury, the brain can still conceive words and generate speech commands, but the signals don’t make it to the mouth. When ECoG electrodes are placed over the language areas of the brain, including the regions that govern the muscles of speech articulation, the resulting signals presumably carry information pertaining to both language generation and the physical production of words. A speech prosthesis could decode those signals and send commands to a device that would give voice to the patient’s intended sentences.

Early research shows progress in understanding the brain’s commands to the mouth muscles. In one study, University of California researchers in San Francisco and Berkeley used an ECoG system to record activity in the motor cortex as their subjects patiently recited syllables such as “ba,” “da,” and “ga.” The resulting measurements showed distinct patterns for different consonants. For example, certain electrodes showed activity during the production of the “b” sound, which requires closure of the lips. Other electrodes registered activity during the “d” sound, in which the tongue hits the roof of the mouth. Still others saw action during the “g” sound, which involves the back of the mouth.

What would it take to build a speech prosthesis? First, we would need to improve our recording hardware. Today’s ECoG systems use only a few dozen electrodes on the cortex; clearly, a much higher density of electrodes would produce a better signal. We have already tried out new microelectrode ECoG systems in human patients that can pack 16 electrodes onto a 9-by-9-millimeter array.
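A quick calculation shows how large the jump in density is. The sketch below compares the microelectrode array mentioned above with a conventional grid. It assumes that "about a centimeter apart" means roughly one electrode per square centimeter, and that the 16 electrodes are spread evenly over the 9-by-9-millimeter area. Both are simplifications, and the function and variable names are made up for the example.

```python
import math


def density_per_cm2(n_electrodes, width_mm, height_mm):
    """Electrodes per square centimeter for a rectangular array."""
    area_cm2 = (width_mm / 10.0) * (height_mm / 10.0)
    return n_electrodes / area_cm2


# Microelectrode array from the article: 16 electrodes on 9 mm x 9 mm.
micro_density = density_per_cm2(16, 9, 9)

# Conventional grid, assuming one electrode per 10 mm x 10 mm cell.
standard_density = density_per_cm2(1, 10, 10)

ratio = micro_density / standard_density

# Average spacing if each electrode sits at the center of an equal square cell.
micro_pitch_mm = math.sqrt(9 * 9 / 16)

print(f"micro array: {micro_density:.1f} electrodes per cm^2")
print(f"standard:    {standard_density:.1f} electrodes per cm^2")
print(f"ratio:       about {ratio:.0f}x")
print(f"pitch:       {micro_pitch_mm} mm")
```

Under these assumptions the microelectrode array is roughly 20 times as dense as a conventional grid, with electrodes a little over 2 millimeters apart instead of a centimeter.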

Because speech production surely involves many brain regions, we’ll also have to improve our signal analysis to decipher neural activity, not just in one area but across large regions of the brain. We’ll need better spatial and temporal resolution to determine the exact sequence in which groups of neurons across the cortex fire to produce, say, the simple sound “ba.” Once we’ve managed to map individual phonemes or syllables, we can work toward understanding fluid speech by decoding a succession of brain commands.

Controlling a robot with a thought, speaking without making a sound: With ECoG systems, these magical feats now appear well within the realm of feasibility. By casting a net of electrodes over the surface of the brain, it’s possible to capture echoes of the ideas and commands that swirl below, in the currents of the mind.

This article originally appeared in the September 2014 print issue as “Catching Brain Waves in a Net.”