One of the sneakiest ways to spill the secrets of a computer system involves studying its pattern of power usage while it performs operations. That’s why researchers have begun developing ways to shield the power signatures of AI systems from prying eyes.

Among the AI systems most vulnerable to such attacks are machine-learning algorithms that help smart home devices or smart cars automatically recognize different types of images or sounds such as words or music. Such algorithms consist of neural networks designed to run on specialized computer chips embedded directly within smart devices, instead of inside a cloud computing server located in a data center miles away.

This physical proximity enables such neural networks to perform computations quickly and with minimal delay, but it also makes it easier for hackers to reverse-engineer the chip’s inner workings using a method known as differential power analysis.

“This is more of a threat for edge devices or Internet of Things devices, because an adversary can have physical access to them,” says Aydin Aysu, an assistant professor of electrical and computer engineering at North Carolina State University in Raleigh. “With physical access, you can then measure the power or you can look at the electromagnetic radiation.”

The North Carolina State University researchers have demonstrated what they describe as the first countermeasure for protecting neural networks against such differential-power-analysis attacks. They describe their methods in a preprint paper to be presented at the 2020 IEEE International Symposium on Hardware Oriented Security and Trust in San Jose, Calif., in early December.

Differential-power-analysis attacks have already proven effective against a wide variety of targets such as the cryptographic algorithms that safeguard digital information and the smart chips found in ATM cards or credit cards. But Aysu and his colleagues see neural networks as equally likely targets with possibly even more lucrative payoffs for hackers or commercial competitors at a time when companies are embedding AI systems in seemingly everything.

In their latest research, they focused on binarized neural networks, which have become popular as lean, simplified versions of neural networks that can perform computations with fewer computing resources.
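For readers unfamiliar with binarized networks, here is a minimal Python sketch of a single binarized neuron. It assumes a common convention that the article does not spell out: weights and activations are restricted to +1 and -1 and packed as bits, so a dot product reduces to an XNOR followed by a bit count. The function names are hypothetical and do not come from the researchers’ work.

```python
# Minimal sketch of one binarized neuron. Assumption: values are +1/-1,
# encoded as bits (1 -> +1, 0 -> -1); real designs may differ.

def to_bits(values):
    """Encode a list of +1/-1 values as an integer (bit i set if values[i] == +1)."""
    word = 0
    for i, v in enumerate(values):
        if v == 1:
            word |= 1 << i
    return word

def dot_pm1(x, w):
    """Reference dot product computed directly on +1/-1 values."""
    return sum(xi * wi for xi, wi in zip(x, w))

def dot_xnor_popcount(x_bits, w_bits, n):
    """Same dot product via XNOR and popcount: matches minus mismatches."""
    mask = (1 << n) - 1
    matches = bin(~(x_bits ^ w_bits) & mask).count("1")
    return 2 * matches - n

def sign_activation(s):
    """Binarize the neuron output back to +1/-1 (a zero sum maps to +1 here)."""
    return 1 if s >= 0 else -1

x = [1, -1, -1, 1, 1, -1, 1, 1]
w = [1, 1, -1, -1, 1, -1, -1, 1]
s_ref = dot_pm1(x, w)
s_fast = dot_xnor_popcount(to_bits(x), to_bits(w), len(x))
print(s_ref, s_fast, sign_activation(s_fast))  # prints: 2 2 1
```

Replacing multiplications with bitwise XNOR and a bit count is what makes such networks cheap enough for small embedded chips.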

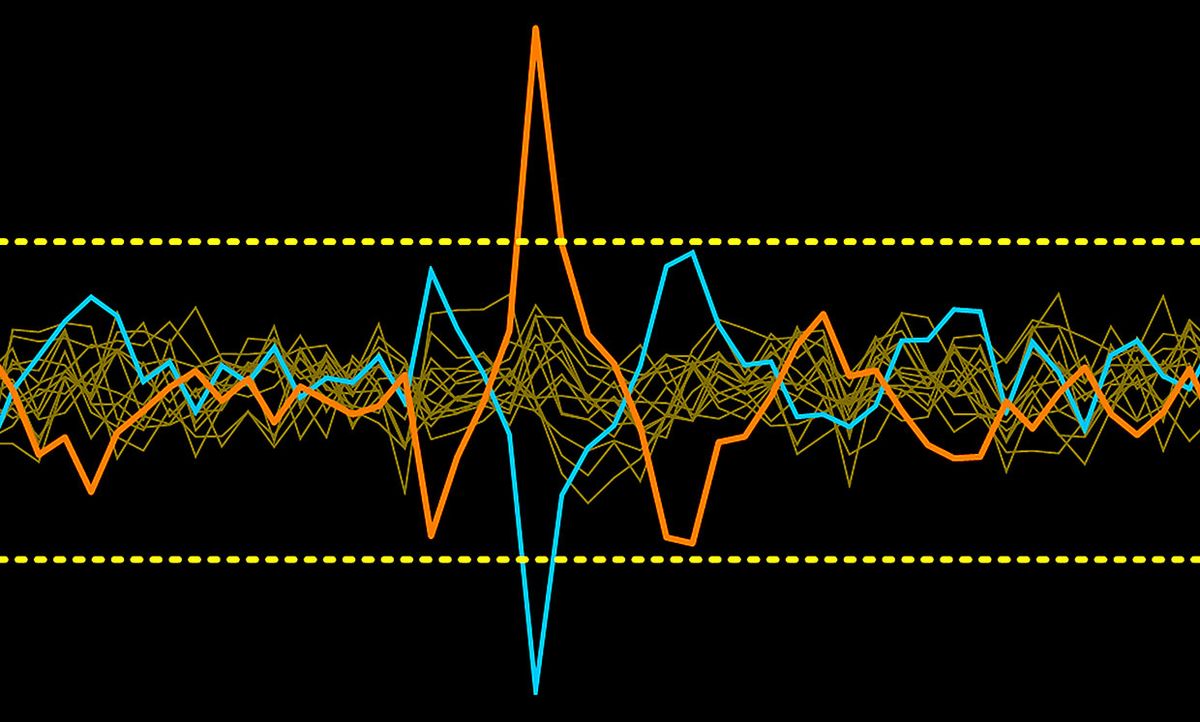

The researchers started out by showing how an adversary can use power-consumption measurements to reveal the secret weight values that help determine a neural network’s computations. By repeatedly having the neural network run specific computational tasks with known input data, an adversary can eventually figure out the power patterns associated with the secret weight values. For example, this method revealed the secret weights of an unprotected binarized neural network by running just 200 sets of power-consumption measurements.
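The attack can be illustrated with a toy simulation. The Python sketch below does not reproduce the researchers’ setup. It assumes a hypothetical leakage model in which each update of an 8-bit accumulator leaks the Hamming weight (number of 1 bits) of its value plus Gaussian noise. It also uses correlation power analysis, a widely used variant of differential power analysis. Knowing the inputs, the attacker recovers the weights one at a time by testing both possible values of each weight against the measured samples. The trace count and noise level are arbitrary illustrative choices, and all names are hypothetical.

```python
import random

BITS = 8  # assumed accumulator width

def hw(value):
    """Hamming weight of value stored as a BITS-bit two's-complement word."""
    return bin(value & ((1 << BITS) - 1)).count("1")

def capture_traces(weights, n_traces, noise, rng):
    """Simulate traces: one noisy leakage sample per accumulation step."""
    inputs, traces = [], []
    for _ in range(n_traces):
        x = [rng.choice((-1, 1)) for _ in weights]  # inputs known to the attacker
        acc, trace = 0, []
        for xi, wi in zip(x, weights):
            acc += xi * wi
            trace.append(hw(acc) + rng.gauss(0.0, noise))
        inputs.append(x)
        traces.append(trace)
    return inputs, traces

def pearson(a, b):
    """Sample Pearson correlation; returns 0.0 if either list is constant."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((p - ma) * (q - mb) for p, q in zip(a, b))
    va = sum((p - ma) ** 2 for p in a)
    vb = sum((q - mb) ** 2 for q in b)
    return cov / (va * vb) ** 0.5 if va > 0 and vb > 0 else 0.0

def recover_weights(inputs, traces, n_weights):
    """Recover weights in order, reusing the weights already recovered."""
    recovered = []
    for j in range(n_weights):
        samples = [t[j] for t in traces]
        best_guess, best_corr = 1, float("-inf")
        for guess in (-1, 1):
            candidate = recovered + [guess]
            # Predicted leakage at step j if this guess is correct.
            predicted = [hw(sum(xi * wi for xi, wi in zip(x, candidate)))
                         for x in inputs]
            corr = pearson(predicted, samples)
            if corr > best_corr:
                best_guess, best_corr = guess, corr
        recovered.append(best_guess)
    return recovered

rng = random.Random(1)
secret = [rng.choice((-1, 1)) for _ in range(16)]
inputs, traces = capture_traces(secret, n_traces=500, noise=1.0, rng=rng)
recovered = recover_weights(inputs, traces, len(secret))
print("all weights recovered:", recovered == secret)
```

Because each binarized weight has only two possible values, the attacker never has to search a large key space. The only question is which of the two guesses better explains the measured power.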

Next, Aysu and his colleagues developed a countermeasure to defend the neural network against such an attack. They adapted a technique known as masking, which splits intermediate computations into two randomized shares that differ each time the neural network runs the same intermediate computation. Those randomized shares get processed independently within the neural network and recombine only at the final step before producing a result.
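Here is a minimal sketch of two-share masking for a binarized neuron’s weighted sum. It assumes first-order arithmetic (additive) masking modulo 2^16. This is a generic textbook construction, not the hardware design described in the paper, and names such as `masked_dot` are hypothetical. Each secret weight is split into two fresh random shares on every run. Each share path is accumulated separately, and the two partial sums are added together only at the end.

```python
import secrets

K = 16          # assumed accumulator width in bits
MOD = 1 << K

def to_signed(u):
    """Interpret a K-bit unsigned value as a two's-complement integer."""
    return u - MOD if u >= MOD // 2 else u

def masked_dot(x, w):
    """Weighted sum of public +/-1 inputs x and secret +/-1 weights w on two shares."""
    # Fresh shares for every run, so w[i] == (w0[i] + w1[i]) mod MOD.
    w0 = [secrets.randbelow(MOD) for _ in w]
    w1 = [(wi - r) % MOD for wi, r in zip(w, w0)]
    # Multiplying a share by a public input is linear, so each path
    # accumulates a share of the true sum; alone, each looks uniformly random.
    acc0 = sum(xi * a for xi, a in zip(x, w0)) % MOD
    acc1 = sum(xi * b for xi, b in zip(x, w1)) % MOD
    # Recombination is the only step where the true sum reappears.
    return to_signed((acc0 + acc1) % MOD), (acc0, acc1)

x = [1, -1, -1, 1, 1, -1, 1, 1]
w = [1, 1, -1, -1, 1, -1, -1, 1]
for _ in range(3):
    result, shares = masked_dot(x, w)
    print(result, shares)  # result is 2 every time; the shares vary from run to run
```

The sketch covers only the linear accumulation. The nonlinear sign activation that follows is harder to mask and is not shown here.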

The masking defense effectively prevents an adversary from using the power consumption of any single intermediate computation to infer the secret weights. A binarized neural network protected by masking required the hypothetical adversary to perform 100,000 sets of power-consumption measurements instead of just 200.
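A short simulation shows why a single masked intermediate value stops being useful to an attacker. It uses a hypothetical Hamming-weight leakage model on a 16-bit accumulator. The attacker’s model is given the correct weights. Even so, the leakage from one masked share is essentially uncorrelated with the predicted value, while the unprotected accumulator correlates strongly with it. This idealized toy model does not attempt to reproduce the measurement counts the researchers observed on real hardware, and all names in it are hypothetical.

```python
import random

MOD = 1 << 16  # assumed 16-bit accumulator

def hw(value):
    """Hamming weight of a value stored as a 16-bit two's-complement word."""
    return bin(value % MOD).count("1")

def pearson(a, b):
    """Sample Pearson correlation coefficient of two equal-length lists."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((p - ma) * (q - mb) for p, q in zip(a, b))
    va = sum((p - ma) ** 2 for p in a)
    vb = sum((q - mb) ** 2 for q in b)
    return cov / (va * vb) ** 0.5

rng = random.Random(7)  # simulation only; not a cryptographic RNG
secret = [rng.choice((-1, 1)) for _ in range(8)]
predicted, leak_plain, leak_share = [], [], []
for _ in range(5000):
    x = [rng.choice((-1, 1)) for _ in secret]
    true_sum = sum(xi * wi for xi, wi in zip(x, secret))
    # Fresh random first shares of the weights for this run.
    w0 = [rng.randrange(MOD) for _ in secret]
    acc0 = sum(xi * r for xi, r in zip(x, w0)) % MOD
    acc1 = (true_sum - acc0) % MOD  # value the second share path would hold
    predicted.append(hw(true_sum))                     # attacker's model, correct key
    leak_plain.append(hw(true_sum) + rng.gauss(0, 1))  # unprotected accumulator
    leak_share.append(hw(acc1) + rng.gauss(0, 1))      # one masked share
corr_plain = pearson(predicted, leak_plain)
corr_share = pearson(predicted, leak_share)
print(f"unmasked: {corr_plain:.2f}  single masked share: {corr_share:.2f}")
```

Because the first share is uniformly random and independent of the inputs, the second share is also uniformly random. As a result, no first-order statistic of either share alone depends on the secret weights.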

“The defense is a concept that we borrowed from work on cryptography research and we augmented for securing neural networks,” Aysu says. “We use secure multiparty computation and randomize all intermediate computations to mitigate the attack.”

Such a defense is important because an adversary could steal a company’s intellectual property by figuring out the secret weight values of a neural network that forms the foundation of a particular machine-learning algorithm. Knowledge of a neural network’s inner workings could also enable adversaries to more easily launch adversarial machine-learning attacks that can confuse the neural network.

The masking defense can be implemented on any type of computer chip—such as field-programmable gate arrays (FPGAs) and application-specific integrated circuits (ASICs)—that can run a neural network. But the masking defense does need to be tuned for each specific machine-learning model that requires protection.

Furthermore, adversaries can sidestep the basic masking defense by analyzing multiple intermediate computations instead of a single computation. That can lead to a computational arms race if the masking defense counters by splitting the computations into even more shares. “Each time, you add some more overhead to the attacks as well as the defenses as they evolve,” Aysu says.
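The same additive construction extends from two shares to any number of shares. In the masking literature, d + 1 shares are generally meant to resist attacks that combine up to d intermediate values. The Python sketch below applies this to a binarized neuron’s weighted sum, modulo an assumed 2^16, with hypothetical names. Every additional share adds another accumulation path, which hints at the overhead Aysu describes.

```python
import secrets

MOD = 1 << 16  # assumed 16-bit arithmetic for the accumulator

def split(value, n_shares):
    """Split value into n_shares additive shares modulo MOD.

    The first n_shares - 1 shares are fresh random values, so any
    n_shares - 1 of the shares are uniformly random on their own."""
    shares = [secrets.randbelow(MOD) for _ in range(n_shares - 1)]
    shares.append((value - sum(shares)) % MOD)
    return shares

def recombine(shares):
    """Add all shares back together modulo MOD."""
    return sum(shares) % MOD

def masked_dot(x, w, n_shares):
    """Weighted sum of public +/-1 inputs x and secret +/-1 weights w,
    computed on n_shares independent paths (one per share)."""
    weight_shares = [split(wi % MOD, n_shares) for wi in w]  # fresh per call
    paths = [sum(xi * ws[k] for xi, ws in zip(x, weight_shares)) % MOD
             for k in range(n_shares)]
    total = recombine(paths)  # the only step that sees the true sum
    return total - MOD if total >= MOD // 2 else total  # back to a signed value

x = [1, -1, -1, 1, 1, -1, 1, 1]
w = [1, 1, -1, -1, 1, -1, -1, 1]
for n in (2, 3, 4):
    print(n, masked_dot(x, w, n))  # the same sum, 2, for every share count
```

Only the linear accumulation is shown. Nonlinear steps such as the sign activation require dedicated masked circuits, and their cost typically grows faster than the number of shares.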

There’s already a trade-off between security and computing performance when implementing even the basic masking defense, Aysu explains. The initial masking defense slowed down the neural network’s performance by 50 percent and required approximately double the computing area on the FPGA chip.

Still, the need for such active countermeasures against differential-power-analysis attacks remains. Two decades after the first demonstration of such an attack, researchers are still developing ideal masking solutions even for standard cryptographic algorithms. The challenge becomes even harder for neural networks, which is why Aysu and his colleagues started out with the relatively simpler binarized neural networks that may also be among the most vulnerable.

“It’s very difficult, and we by no means argue that the research is done,” Aysu says. “This is the proof of concept, so we need more efficient and more robust masking for many other neural network algorithms.”

Their research has received support from both the U.S. National Science Foundation and the Semiconductor Research Corp.’s Global Research Collaboration. Aysu expects to have funding to continue this work for another five years, and hopes to enlist more Ph.D. students interested in the effort. He sees his group’s cybersecurity research on hardware as increasingly important in defending against attacks that could exploit power-usage patterns and other baseline information from computer system operations.

“Interest in hardware security is increasing because at the end of the day, hardware is the root of trust,” Aysu says. “And if the root of trust is gone, then all the security defenses at other abstraction levels will fail.”

Jeremy Hsu has been working as a science and technology journalist in New York City since 2008. He has written on subjects as diverse as supercomputing and wearable electronics for IEEE Spectrum. When he’s not trying to wrap his head around the latest quantum computing news for Spectrum, he also contributes to a variety of publications such as Scientific American, Discover, Popular Science, and others. He is a graduate of New York University’s Science, Health & Environmental Reporting Program.