To be able to drive safely and reliably, autonomous cars need to have a comprehensive understanding of what’s going on around them. They need to recognize other cars, trucks, motorcycles, bikes, humans, traffic lights, street signs, and everything else that may end up on or near a road. They also have to do this in all kinds of weather and lighting conditions, which is why most (if not all) companies developing autonomous cars are spending a ludicrous (but necessary) amount of time and resources collecting data in an attempt to gain experience with every possible situation.

In most cases, this technique depends on humans annotating enormous datasets in order to train machine learning algorithms: hundreds or thousands of people looking at snapshots or videos taken by cars driving down streets, drawing boxes around vehicles and road signs, and labeling them, over and over. Researchers from the University of Michigan think there's a better way: doing the whole thing in simulation instead. They've shown that it can actually be more effective than using real data annotated by humans.

Roboticists tend to be wary of doing too much in simulation because, more often than not, things succeed there. That is, because simulations simplify the real world, getting something to work in simulation offers very few guarantees that it will still work once you try it outside the simulation. This gets particularly bad for anything that involves physics, but photorealistic graphics are much easier to convincingly fake, largely thanks to the gaming industry. At the IEEE International Conference on Robotics and Automation in Singapore last week, researchers from the University of Michigan presented work testing whether the graphics in Grand Theft Auto V can be used to train a deep learning system to recognize objects.

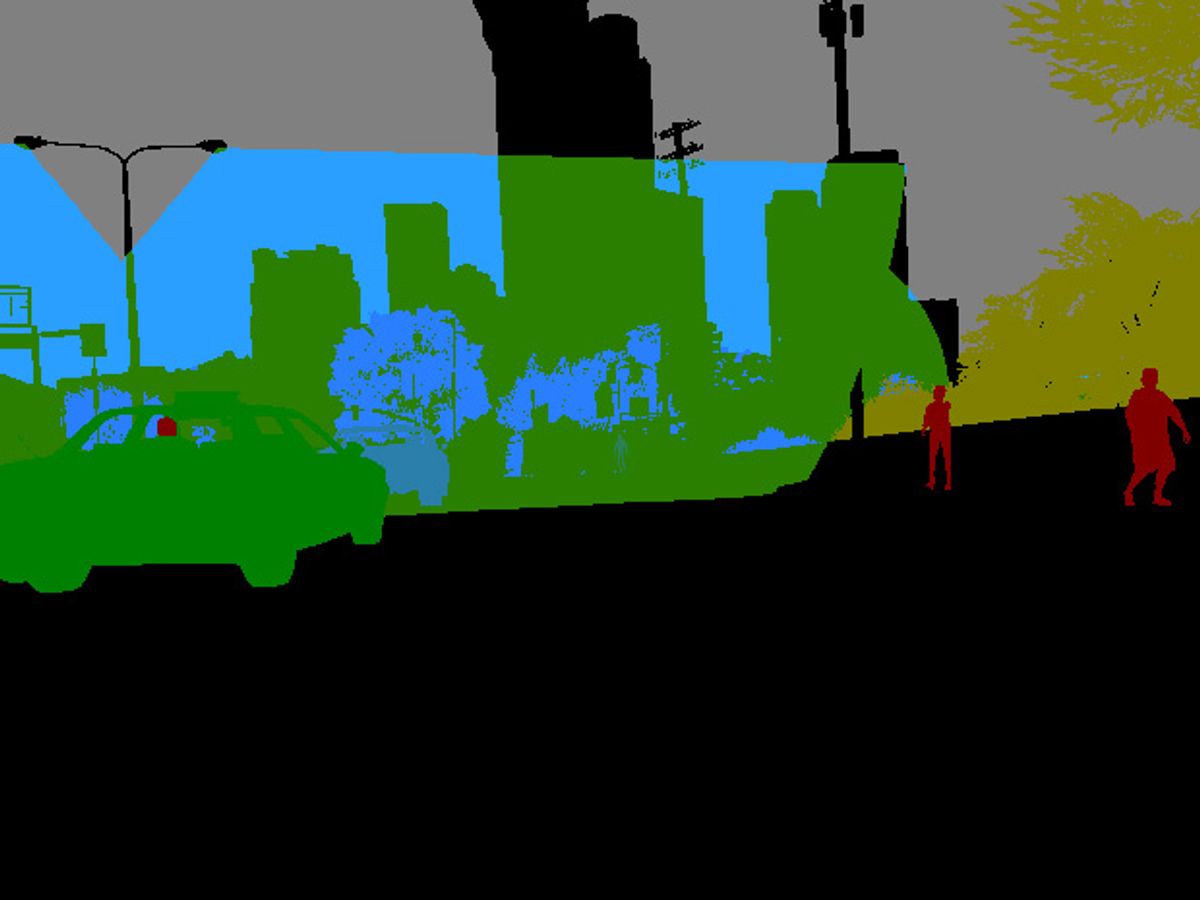

The appeal of training in simulation is threefold. First, you can do it much faster and far less expensively than running real cars on real roads taking real video. Second, in simulation the annotation is (mostly) already done for you, since the game knows exactly what objects it's creating. And third, simulation lets you generate whatever kinds of conditions and situations you like, as often as you like. Training on the road in California, for example, is frustrating because the weather is almost always good. In simulation, you can make it rain or snow or anything else, and you can even use the exact same scene for training under lots of different conditions.
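To make the "annotation is already done for you" point concrete: a game engine knows where every object sits in 3D, so a 2D bounding-box label can be computed rather than drawn by hand. The sketch below assumes a simple pinhole camera with a known intrinsic matrix `K` and an axis-aligned box in camera coordinates; `box_corners` and `project_box_to_2d` are hypothetical names, and this is a generic illustration, not the labeling pipeline the Michigan researchers used.

```python
import numpy as np

def box_corners(center, size):
    """Return the 8 corners (8 x 3) of an axis-aligned 3D box in camera coordinates.

    center: (x, y, z), with z pointing forward from the camera.
    size:   extents along the x, y, and z axes.
    """
    signs = np.array([[sx, sy, sz]
                      for sx in (-0.5, 0.5)
                      for sy in (-0.5, 0.5)
                      for sz in (-0.5, 0.5)])
    return np.asarray(center, dtype=float) + signs * np.asarray(size, dtype=float)

def project_box_to_2d(corners, K, width, height):
    """Project 3D corners through intrinsics K and return a clipped 2D box.

    Returns (x_min, y_min, x_max, y_max) in pixels, or None if not visible.
    """
    # Simplification (assumption): corners behind the camera are dropped rather
    # than clipped against a near plane, so partially visible boxes are approximate.
    in_front = corners[corners[:, 2] > 0]
    if len(in_front) == 0:
        return None
    proj = in_front @ K.T            # each row becomes (u*z, v*z, z)
    uv = proj[:, :2] / proj[:, 2:3]  # perspective divide gives pixel coordinates
    x_min, y_min = uv.min(axis=0)
    x_max, y_max = uv.max(axis=0)
    # Clip the box to the image so labels never extend past the frame.
    x_min, x_max = np.clip([x_min, x_max], 0, width - 1)
    y_min, y_max = np.clip([y_min, y_max], 0, height - 1)
    if x_max <= x_min or y_max <= y_min:
        return None
    return (float(x_min), float(y_min), float(x_max), float(y_max))

# Hypothetical 1920x1080 camera with a 1000-pixel focal length.
K = np.array([[1000.0, 0.0, 960.0],
              [0.0, 1000.0, 540.0],
              [0.0, 0.0, 1.0]])
car = box_corners(center=(0.0, 0.0, 10.0), size=(2.0, 1.5, 4.0))
print(project_box_to_2d(car, K, 1920, 1080))  # (835.0, 446.25, 1085.0, 633.75)
```

Because the label falls out of geometry the engine already tracks, the same function can be run on every frame and every weather variant of a scene at no extra human cost.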

To see how well training in simulation worked, the researchers generated three simulated datasets in Grand Theft Auto V, consisting of 10,000, 50,000, and 200,000 distinct in-game images. A state-of-the-art deep-learning object-detection network was trained to identify objects using these data, and the same network was also trained on Cityscapes, a separate, real-world, hand-annotated dataset of 3,000 images captured by camera-equipped cars driving around roads in Germany. The performance of the trained networks was then assessed by seeing how well they could identify objects in 7,500 images from KITTI, another real-world, hand-annotated benchmark dataset, which was also acquired in Germany but is distinct from Cityscapes.
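Assessing "how well they could identify objects" on a benchmark like KITTI generally means matching each predicted box to a hand-annotated one by intersection over union (IoU) and then counting hits, misses, and false alarms. The sketch below is a simplified illustration, not the paper's evaluation code: the greedy highest-score-first matching and the default 0.7 IoU threshold (a value commonly used for cars on KITTI) are assumptions, and `iou` and `precision_recall` are hypothetical helper names.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x_min, y_min, x_max, y_max)."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

def precision_recall(detections, ground_truth, iou_threshold=0.7):
    """Greedily match (box, score) detections to ground-truth boxes.

    Detections are visited from highest to lowest score; each one claims the
    unmatched ground-truth box it overlaps most, if that IoU meets the threshold.
    """
    matched = set()
    true_pos = 0
    for box, _score in sorted(detections, key=lambda d: d[1], reverse=True):
        best_j, best_iou = None, iou_threshold
        for j, gt in enumerate(ground_truth):
            if j in matched:
                continue
            overlap = iou(box, gt)
            if overlap >= best_iou:
                best_j, best_iou = j, overlap
        if best_j is not None:
            matched.add(best_j)
            true_pos += 1
    false_pos = len(detections) - true_pos    # detections that matched nothing
    false_neg = len(ground_truth) - true_pos  # annotated objects that were missed
    precision = true_pos / (true_pos + false_pos) if detections else 0.0
    recall = true_pos / (true_pos + false_neg) if ground_truth else 0.0
    return precision, recall

ground_truth = [(0, 0, 10, 10), (20, 20, 30, 30)]
detections = [((0, 0, 10, 10), 0.9), ((21, 21, 31, 31), 0.8), ((50, 50, 60, 60), 0.3)]
print(precision_recall(detections, ground_truth))                     # strict threshold
print(precision_recall(detections, ground_truth, iou_threshold=0.5))  # looser threshold
```

The slightly offset second detection overlaps its target with an IoU of about 0.68, so it counts as a miss plus a false positive at 0.7 but as a hit at 0.5. This is one reason small or distant objects, whose boxes are only a few pixels wide, are hard to score well on.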

On the KITTI benchmark, which none of the networks had seen during training, the networks trained in simulation did in fact perform better than the network trained on real, annotated data, given enough simulated images: the network trained on the 50,000 simulated images did better than the network trained on the 3,000 real images annotated by real live humans, and the network trained on the 200,000 simulated images did better still. As the researchers explain, their results suggest that you don't get quite as much value out of each individual simulated image, but since you can make up for that with volume, it doesn't matter:

> It appears the variation and training value of a single simulation image is lower than that of a single real image. The lighting, color, and texture variation in the real world is greater than that of our simulation and as such many more simulation images are required to achieve reasonable performance. However, the generation of simulation images requires only computational resources and most importantly does not require human labeling effort. Once the cloud infrastructure is in place, an arbitrary volume of images can be generated.

Additional analysis suggests that the simulation-trained networks are actually better at picking out cars that are farther away or otherwise difficult to see, and also better at avoiding false positives. This may be because simulations can generate a much wider variety of data than you tend to get by just driving a car around a city over and over, providing more diverse input for training. Having said that, the problem of simulations being simulations remains: they're not quite the same as the real world, and it's very difficult to simulate situations that are hard to predict, which is one of the fundamental problems with autonomous cars. We may not be able to solve everything with Grand Theft Auto V, but the fact that virtual worlds offer tangible training value on par with more resource-intensive methods will hopefully lead to a more efficient compromise and better, cheaper self-driving cars.

“Driving in the Matrix: Can Virtual Worlds Replace Human-Generated Annotations for Real World Tasks?”, by Matthew Johnson-Roberson, Charles Barto, Rounak Mehta, Sharath Nittur Sridhar, Karl Rosaen, and Ram Vasudevan from the University of Michigan, was presented last week at the 2017 IEEE International Conference on Robotics and Automation in Singapore.

Evan Ackerman is a senior editor at IEEE Spectrum. Since 2007, he has written over 6,000 articles on robotics and technology. He has a degree in Martian geology and is excellent at playing bagpipes.