The popularity of the face recognition function in the latest high-end smartphones has put the spotlight on the 3D optical sensing technology that enables it. Three 3D sensing techniques can be used to implement face recognition, and all are supported by advanced optical components and technologies supplied by ams.

Depth map: the basis of 3D face recognition

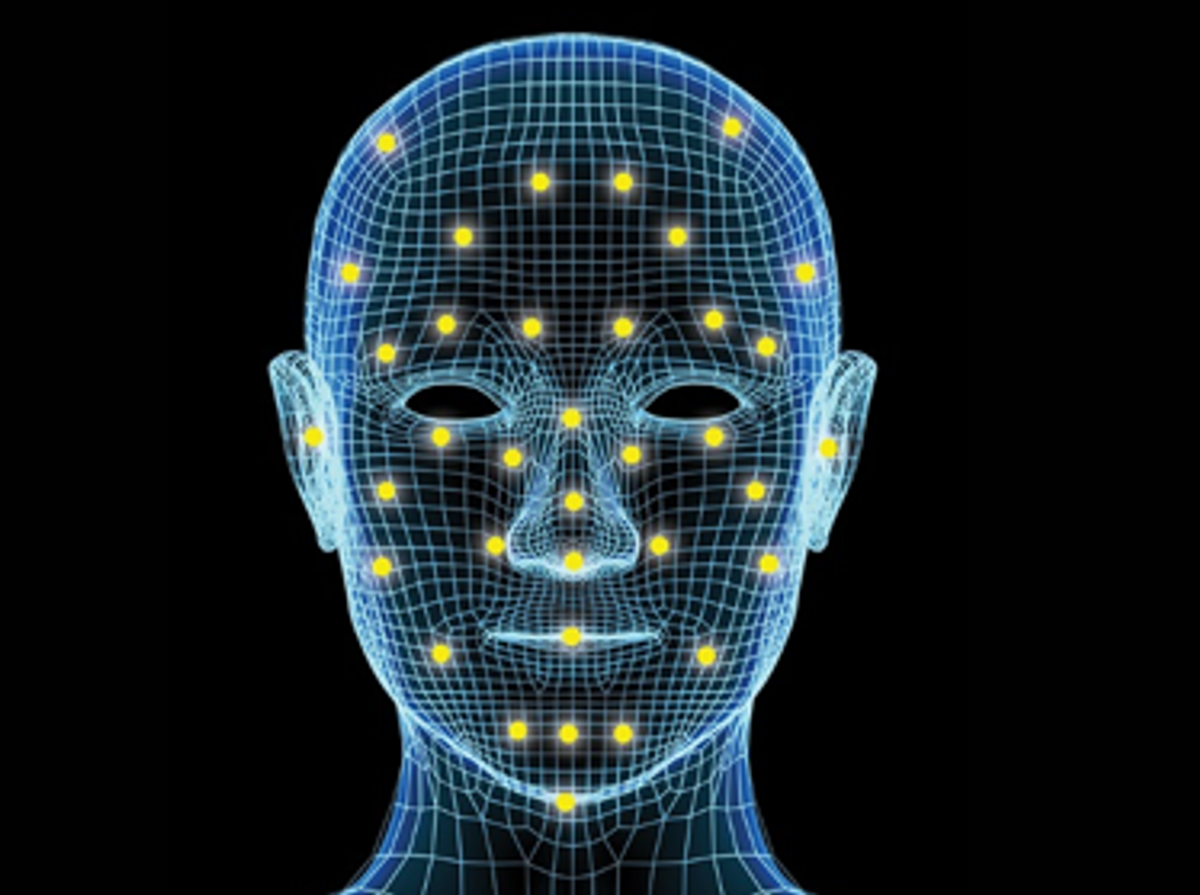

In mobile face recognition, a depth map captured by the phone’s 3D sensors is compared to a reference 3D image of the user. This 3D depth map contains more data about the face than a conventional 2D camera image. Secure 3D authentication enables the use of face recognition in critical applications such as mobile payments.

The techniques for generating a facial depth map include:

- Time-of-flight sensing – measures distance by timing the flight of infrared light from the emitter to the user’s face and back to a photosensor.
- Stereo imaging – as in human vision, two spaced photosensors create perspective and depth. Infrared light projectors enable an Active Stereo Vision system to work with no ambient light.
- Structured light – algorithms generate depth maps by analyzing the distortions in random patterns of dots projected on the user’s face.
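The geometry behind the first two techniques can be sketched in a few lines of Python. This is a minimal illustration of the textbook relations only, not the ams implementation: the function names, parameter names, and sample values are assumptions chosen for the example. Time-of-flight converts a round-trip time into distance using the speed of light, and stereo imaging converts the pixel disparity between two sensors into depth using an idealized pinhole camera model. Structured light also relies on triangulation, but its pattern-matching step is omitted here.

```python
# Illustrative sketch: names and sample values are hypothetical,
# not taken from any ams product or API.

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def tof_distance_m(round_trip_time_s: float) -> float:
    """Distance to the target from the round-trip time of a light pulse.

    The light travels to the face and back, so the one-way
    distance is half of the total path length.
    """
    return SPEED_OF_LIGHT_M_PER_S * round_trip_time_s / 2.0


def stereo_depth_m(focal_length_px: float, baseline_m: float,
                   disparity_px: float) -> float:
    """Depth of a point seen by two parallel, rectified cameras.

    Assumes an idealized pinhole model: depth = f * B / d, where f is the
    focal length in pixels, B the spacing between the two sensors, and d
    the horizontal shift of the point between the two images.
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a finite depth")
    return focal_length_px * baseline_m / disparity_px


if __name__ == "__main__":
    # A 2 ns round trip corresponds to a target roughly 0.3 m away.
    print(f"ToF distance: {tof_distance_m(2e-9):.3f} m")
    # Assumed optics: 500 px focal length, 20 mm baseline, 25 px disparity.
    print(f"Stereo depth: {stereo_depth_m(500.0, 0.02, 25.0):.3f} m")
```

The time-of-flight example shows why this technique needs very fast photosensors: at arm's length the round trip takes only about two nanoseconds, so small timing errors translate into centimeters of depth error.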

Learn more about the ams innovations in 3D sensing which are enabling smartphone manufacturers’ face recognition design programs, including eye-safe VCSEL laser emitter arrays, time-of-flight sensors, and system software developed in partnership with technology developers such as Face++.