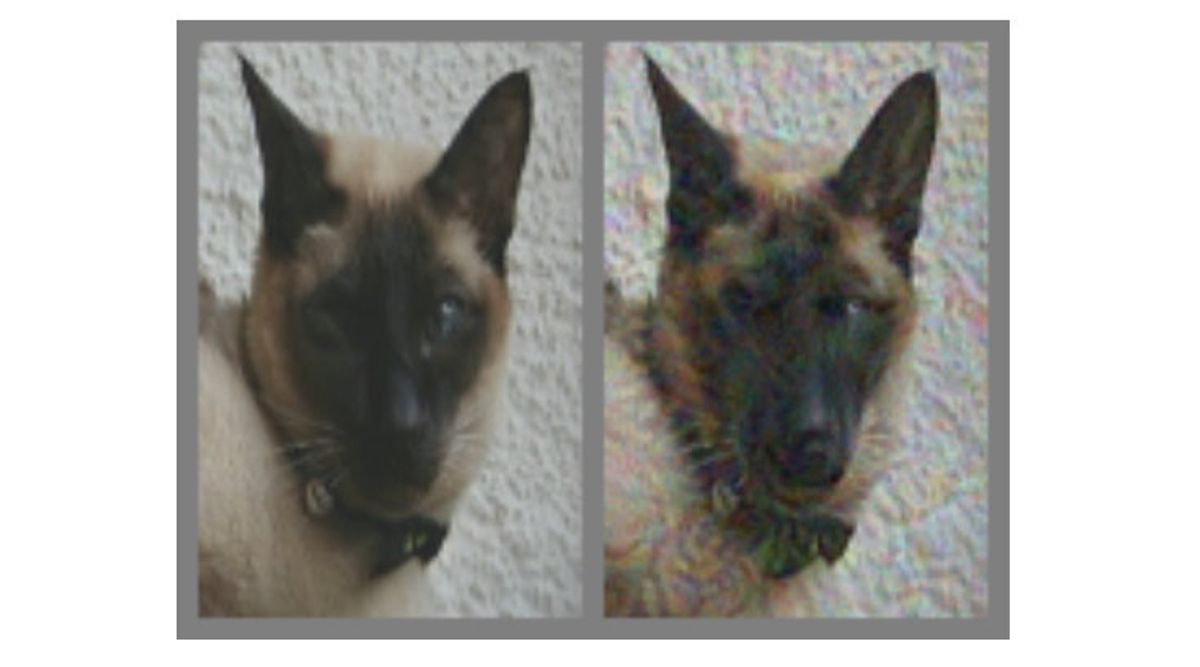

In the image above, there’s a picture of a cat on the left. On the right, can you tell whether it’s a picture of the same cat, or a picture of a similar looking dog? The difference between the two pictures is that the one on the right has been tweaked a bit by an algorithm to make it difficult for a type of computer model called a convolutional neural network (CNN) to tell what it really is. In this case, the CNN thinks it’s looking at a dog rather than a cat, but what’s remarkable is that most people think the same thing.

This is an example of what’s called an adversarial image: an image specifically designed to fool neural networks into making an incorrect determination about what they’re looking at. Researchers at Google Brain decided to try and figure out whether the same techniques that fool artificial neural networks can also fool the biological neural networks inside of our heads, by developing adversarial images capable of making both computers and humans think that they’re looking at something they aren’t.

What are Adversarial Images?

Visual classification algorithms powered by convolutional neural networks are commonly used to recognize objects in images. You train these algorithms to recognize something like a panda by showing them lots of different panda pictures, and letting the CNN compare the pictures to figure out what features they share with each other. Once the CNN (commonly called a “classifier”) has identified enough panda-like features in its training pictures, it’ll be able to reliably recognize pandas in new pictures that you show it.

Humans recognize pandas in pictures by looking for abstract features: little black ears, big white heads, black eyes, fur, and so forth. The features that CNNs recognize aren’t like this at all, and don’t necessarily make any sense to humans, because we interpret the world much differently than a CNN does. It’s possible to leverage this to design “adversarial images,” which are images that have been altered with a carefully calculated input of what looks to us like noise, such that the image looks almost the same to a human but totally different to a classifier, and the classifier makes a mistake when it tries to identify them. Here’s a panda example:

A CNN-based classifier is about 60 percent sure that the picture on the left is a panda. But if you slightly change (“perturb”) the image by adding what looks to us like a bunch of random noise (highly exaggerated in the middle image), that same classifier becomes 99.3 percent sure that it’s looking at a gibbon instead. This kind of attack can succeed with a nearly imperceptible change to the image because it’s targeted at one specific computer model; it likely won’t fool other models that may have been trained differently.
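To make the idea of a “carefully calculated” perturbation a bit more concrete, here’s a minimal sketch in Python. It assumes a toy linear classifier rather than a real CNN, and it uses a sign-of-the-gradient step (usually called the fast gradient sign method), which is one common way to build this kind of perturbation. The weights, the eight “pixels,” and the epsilon value are all invented for illustration.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights, bias, pixels):
    """Probability that the toy linear classifier assigns to class 1."""
    return sigmoid(sum(w * x for w, x in zip(weights, pixels)) + bias)


def sign(v):
    """Return -1, 0, or 1 depending on the sign of v."""
    return (v > 0) - (v < 0)


def fgsm_perturb(weights, pixels, epsilon):
    """Shift every pixel by at most epsilon in the direction that raises
    the class-1 score. For a linear model, the gradient of the score with
    respect to the input is simply the weight vector."""
    return [x + epsilon * sign(w) for w, x in zip(weights, pixels)]


# Hypothetical classifier weights and an 8-"pixel" image (made up).
weights = [0.9, -1.2, 0.4, -0.7, 1.1, -0.3, 0.8, -1.0]
bias = 0.0
image = [0.5, 0.6, 0.4, 0.5, 0.3, 0.5, 0.4, 0.6]

adversarial = fgsm_perturb(weights, image, epsilon=0.1)
p_clean = predict(weights, bias, image)
p_adv = predict(weights, bias, adversarial)

print(f"class-1 probability, clean image:     {p_clean:.3f}")
print(f"class-1 probability, perturbed image: {p_adv:.3f}")
```

No pixel moves by more than epsilon, yet the toy classifier’s decision crosses the 50 percent line, because every small nudge pushes the score in the same direction. Since the nudges are computed from this one model’s weights, there’s no reason to expect them to work on a model with different weights.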

Adversarial images that can cause multiple different classifiers to make the same mistake need to be more robust—tiny changes that work for one model aren’t going to cut it. “Robust” adversarial images tend to involve more than just slight tweaks to the structure of an image. In other words, if you want your adversarial images to be effective from different angles and distances, the tweaks need to be more significant, or as a human might say, more obvious.

Targeting Humans

Here are two examples of robust adversarial images that make a little more sense to us humans:

The image of the cat on the left, which models classify as a computer, is robust to geometric transformations. If you look closely, or maybe even not that closely, you can see the image has been perturbed by introducing some angles and boxiness that we’d recognize as a characteristic of computers. And the image of the banana on the right, which models classify as a toaster, is robust to different viewpoints. We humans recognize the banana immediately, of course, but the weird perturbation next to it definitely has some recognizable toaster-like features.

When you generate a very robust adversarial image to be able to fool a whole bunch of different models, the adversarial image often starts to show the “development of human-meaningful features,” as in the examples above. In other words, a single adversarial image that can fool one model might not look any different to a human, but by the time you come up with one image that can fool five or 10 models at the same time, your image is likely relying on visual features that a human has the potential to notice.

By itself, this doesn’t necessarily mean that a human is likely to think that a boxy image of a cat is really a computer, or that a banana with a weird graphic next to it is a toaster. What it suggests, though, is that it might be possible to target the development of an adversarial image at humans by choosing models that match the human visual system as closely as possible.

Fooling the Eye (and the Brain)

There are some similarities between deep convolutional neural networks and the human visual system, but in general, CNNs look at things more like computers than like humans. That is, when a CNN is presented with an image, it’s looking at a static grid of rectangular pixels. Because of how our eyes work, humans see lots of detail within about five degrees of where we’re looking, but outside of that area, the detail we can perceive drops off linearly.

So, unlike with a CNN, it’s not very useful to (say) adversarially blur the sides of an image for a human, because that’s not something our eyes will detect. The researchers were able to model this by adding a “retinal layer” that modified the image fed into the CNN to simulate the characteristics of the human eye, with the goal of “[limiting] the CNN to information which is also available to the human visual system.”
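As a rough illustration of what a “retinal layer” might do, here’s a small Python sketch that blurs a single row of pixels more heavily the farther each pixel sits from the point of fixation. This is a simplified one-dimensional stand-in under our own assumptions, not the researchers’ implementation; the function name `retinal_blur`, the box-blur window, and the `pixels_per_step` parameter are hypothetical.

```python
def retinal_blur(row, fixation, pixels_per_step=4):
    """Blur a 1-D row of pixel values more strongly the farther each pixel
    is from the fixation index. The blur radius grows in steps with
    distance from fixation; a radius of 0 leaves the pixel untouched."""
    blurred = []
    for i in range(len(row)):
        radius = abs(i - fixation) // pixels_per_step
        lo = max(0, i - radius)
        hi = min(len(row), i + radius + 1)
        window = row[lo:hi]
        # Simple box blur: average the pixel with its neighbors in the window.
        blurred.append(sum(window) / len(window))
    return blurred


# Fine alternating detail across 16 pixels, with the "eye" fixed on index 8.
row = [0, 1] * 8
out = retinal_blur(row, fixation=8)
print(out)
```

Near the fixation point the alternating pattern survives intact, while toward the edges it gets averaged away, so a perturbation hidden in the periphery simply never reaches the model.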

We should note that humans make up for this kind of thing by moving our eyes around a lot, but the researchers compensated for this in the way they ran their experiment, in order to keep the comparison between humans and CNNs useful. We’ll get into that in a minute.

Using this retinal layer was the extent of the human-specific tweaking that the researchers did to their machine learning models. To generate the adversarial images for the experiment, they tested candidate images across 10 different machine learning models, each of which would reliably misclassify an image of (say) a cat as actually being an image of (say) a dog. If all 10 of the models were fooled, that candidate moved on to the human experiment.
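The selection step described above boils down to an “all models must be fooled” filter, which can be sketched in a few lines of Python. The classifiers here are hypothetical stand-ins that just threshold average brightness, and the candidate images are made up; the only part taken from the article is the rule that a candidate moves on only if every one of the 10 models gets it wrong.

```python
from typing import Callable, List, Sequence

Image = Sequence[float]
Classifier = Callable[[Image], str]


def make_threshold_model(threshold: float) -> Classifier:
    """Hypothetical stand-in classifier: answers 'dog' when the mean
    brightness of the image exceeds its threshold, otherwise 'cat'."""
    def classify(image: Image) -> str:
        return "dog" if sum(image) / len(image) > threshold else "cat"
    return classify


def fools_every_model(models: List[Classifier], image: Image, wrong_label: str) -> bool:
    """True only if all models in the ensemble output the wrong label."""
    return all(model(image) == wrong_label for model in models)


# Ten toy models with slightly different decision thresholds.
models = [make_threshold_model(0.40 + 0.01 * k) for k in range(10)]

# Made-up candidate perturbations of a cat image.
candidates = {
    "weak": [0.45, 0.45, 0.45, 0.45],    # fools only some of the models
    "strong": [0.60, 0.60, 0.60, 0.60],  # fools all ten
}

selected = [name for name, img in candidates.items()
            if fools_every_model(models, img, wrong_label="dog")]
print(selected)
```

Requiring agreement across the whole ensemble is what pushes the surviving images toward features that aren’t quirks of any single model.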

Does it Work?

The experiment involved three groups of images: pets (cats and dogs), vegetables (cabbages and broccoli), and “hazards” (spiders and snakes, although as a snake owner I take exception to the group name). For each group, a successful adversarial image was able to fool people into choosing the wrong member of the group, by identifying it as a dog when it’s actually a cat, or vice versa. Subjects sat in front of a computer screen and were shown an image from a specific group for between 60 and 70 milliseconds, after which they could push one of two buttons to identify which image they thought they were looking at. The short amount of time that the image was shown mitigated the difference between how CNNs perceive the world and how humans do; the image at the top of this article, the researchers say, is unusual in that the effect persists.

The images shown to the subjects during the experiment could be an unmodified image, an adversarial image, an image where the perturbation layer was flipped upside down before being applied, or an image where the perturbation layer was applied to a different image entirely. The last two cases made sure to control for the perturbation layer itself (does the structure of the perturbation layer make a difference as opposed to just whether or not it’s there?) and to determine whether the perturbation can really fool people into choosing one thing over another, as opposed to just making them less accurate overall.
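Here’s a small Python sketch of how those four kinds of stimuli relate to each other, following the description above. Images are tiny nested lists of made-up pixel values, and the condition names (`unmodified`, `adversarial`, `flipped`, `other_image`) are our own labels, not necessarily the ones the researchers used.

```python
def add_perturbation(image, perturbation):
    """Add a perturbation layer to an image, clipping pixels to [0, 1]."""
    return [[min(1.0, max(0.0, p + d)) for p, d in zip(img_row, pert_row)]
            for img_row, pert_row in zip(image, perturbation)]


def flip_vertical(perturbation):
    """Turn the perturbation layer upside down (reverse the row order)."""
    return perturbation[::-1]


def build_conditions(image, perturbation, other_image):
    """Return the four kinds of stimuli described in the text."""
    return {
        "unmodified": image,
        "adversarial": add_perturbation(image, perturbation),
        "flipped": add_perturbation(image, flip_vertical(perturbation)),
        "other_image": add_perturbation(other_image, perturbation),
    }


# Made-up 2x2 "images" and a made-up perturbation layer.
cat = [[0.5, 0.5], [0.5, 0.5]]
broccoli = [[0.25, 0.25], [0.75, 0.75]]
perturbation = [[0.125, -0.125], [0.0, 0.0]]

conditions = build_conditions(cat, perturbation, broccoli)
for name, pixels in conditions.items():
    print(name, pixels)
```

The flipped version contains exactly the same amount of change as the adversarial one, just in the wrong places, which is what makes it a useful control.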

Here’s an example showing the percentage of people who could accurately identify an image of a dog, along with the perturbation layer that was used to alter the image. Remember, people only had between 60 ms and 70 ms to look at each image and make a decision:

And here are the overall results:

This graph shows the accuracy of choosing the correct image. If you chose cat and it’s really an image of a cat, your accuracy is good. If you chose cat and it’s really an image of a dog perturbed to look like a cat, your accuracy is bad.

As you can see, people are significantly more likely to be accurate when identifying images that are unmodified, or images with flipped perturbation layers, than when identifying adversarial images. This suggests that adversarial image attacks can in fact transfer from CNNs to humans.

While these attacks are effective, they’re also more subtle than one might expect—no boxy cats or toaster graphics or anything of that sort. Since we can see the perturbation layers themselves and examine the images both before and after they’ve been futzed with, it’s tempting to try and figure out exactly what is screwing us up. However, the researchers point out that “our adversarial examples are designed to fool human perception, so we should be careful using subjective human perception to understand how they work.”

They are willing to make some generalizations about a few different categories of modifications, including “disrupting object edges, especially by mid-frequency modulations perpendicular to the edge; enhancing edges both by increasing contrast and creating texture boundaries; modifying texture; and taking advantage of dark regions in the image, where the perceptual magnitude of small perturbations can be larger.” You can see examples of these in the images below, with the red boxes highlighting where the effects are most visible.

What it Means

There’s much, much more going on here than just a neat trick. The researchers were able to show that their technique is effective, but they’re not entirely sure why, on a level that’s so abstract, it’s almost existential:

Our study raises fundamental questions how adversarial examples work, how CNN models work, and how the brain works. Do adversarial attacks transfer from CNNs to humans because the semantic representation in a CNN is similar to that in the human brain? Do they instead transfer because both the representation in the CNN and the human brain are similar to some inherent semantic representation which naturally corresponds to reality?

And if you really want your noodle baked, the researchers are happy to oblige, by pointing out how with “visual object recognition… it is difficult to define objectively correct answers. Is Figure 1 objectively a dog or is it objectively a cat but fools people into thinking it is a dog?” In other words, at what point does an adversarial image actually become the thing that it’s trying to fool you into thinking that it is?

The scary thing here (and I do mean scary) is that it might be possible to leverage the overlap between the perceptual manipulation of CNNs and the manipulation of humans. It means that machine learning techniques could potentially be used to subtly alter things like pictures or videos in a way that could change our perception of (and reaction to) them without us ever realizing what was going on. From the paper:

For instance, an ensemble of deep models might be trained on human ratings of face trustworthiness. It might then be possible to generate adversarial perturbations which enhance or reduce human impressions of trustworthiness, and those perturbed images might be used in news reports or political advertising.

More speculative risks involve the possibility of crafting sensory stimuli that hack the brain in a more diverse set of ways, and with larger effect. As one example, many animals have been observed to be susceptible to supernormal stimuli. For instance, cuckoo chicks generate begging calls and an associated visual display that causes birds of other species to prefer to feed the cuckoo chick over their own offspring. Adversarial examples can be seen as a form of supernormal stimuli for neural networks. A worrying possibility is that supernormal stimuli designed to influence human behavior or emotions, rather than merely the perceived class label of an image, might also transfer from machines to humans.

These techniques could also be used in positive ways, of course, and the researchers do suggest a few, like using image perturbations to “improve saliency, or attentiveness, when performing tasks like air traffic control or examination of radiology images, which are potentially tedious, but where the consequences of inattention are dire.” Also, “user interface designers could use image perturbations to create more naturally intuitive designs.” Hmm. That’s great, but I’m much more worried about the whole hacking of how my brain perceives whether people are trustworthy or not, you know?

Some of these questions could be addressed in future research—it may be possible to determine what exactly makes certain adversarial examples more likely to be transferable to humans, which might provide clues about how our brains work. And that, in turn, could help us understand and improve the neural networks that are being increasingly used to help computers learn faster and more effectively. But we’ll have to be careful, and keep in mind that just like those computers, sometimes we’re far too easy to fool.

Adversarial Examples that Fool both Human and Computer Vision, by Gamaleldin F. Elsayed, Shreya Shankar, Brian Cheung, Nicolas Papernot, Alex Kurakin, Ian Goodfellow, and Jascha Sohl-Dickstein, from Google Brain, is available on arXiv. And if you want to see more adversarial images used in the human experiments, the supplemental material is here.

Evan Ackerman is a senior editor at IEEE Spectrum. Since 2007, he has written over 6,000 articles on robotics and technology. He has a degree in Martian geology and is excellent at playing bagpipes.