A new AI learning scheme combined with a spray-on smart skin can decipher the movements of human hands to recognize typing, sign language, and even the shape of simple familiar objects. The technology quickly recognizes and interprets hand motion with limited data and minimal training and should work for all users, its developers say.

Besides finding use in gaming and virtual reality, the new hand-task-cognition technology could allow people to communicate with others and with machines using gestures. Other applications the technologists envision include surgeons remotely controlling medical devices, as well as a new modality for robots and prosthetics to achieve object and motion recognition.

The gesture-recognition technology developed so far has relied on bulky wrist bands that measure electrical signals produced by muscles or on wearable gloves with strain sensors at each joint. Other approaches have involved cameras that track human motion and interpret it using machine learning. Those motion-capturing camera systems require images taken from multiple surrounding angles, which means multiple cameras are needed for a single gesture-recognition system. These multicam systems also suffer from the inherent limitations of vision-based sensors, says Sungho Jo, a professor in the school of computing at the Korea Advanced Institute of Science and Technology. Such limitations include regions of a workspace that are not covered by multiple cameras, as well as errors that inevitably occur when a hand or other object is occluded from view.

The software used so far has also been cumbersome. Researchers have typically relied on machine-learning models based on supervised learning algorithms that are computationally intense. These models require a large amount of data to be collected for each new user and task, all of which humans must then label.

To make a more streamlined motion-recognition system, Jo and colleagues at Seoul National University and Stanford University focused on making both the sensors and algorithms more efficient. “We tried to create a gesture-recognition system that is both lean enough in form and adaptable enough to work for essentially any user and tasks with limited data,” he says.

There are two key parts of the new system, which the team reported in the journal Nature Electronics. One is a mesh made of millions of nanowires of silver coated with gold that are embedded in a polyurethane plastic coating. The mesh, he says, is both durable and stretchy and helps the sensor stick to skin. “It conforms intimately to the wrinkles and folds of each human finger that wears it,” Jo says.

The mesh can be printed directly on skin using a portable machine, and it is so thin and lightweight that it is almost imperceptible, he says. He adds that it is also biocompatible and breathable, and that it endures a few days of daily use, including handwashing, unless it is rubbed off with soap and water.

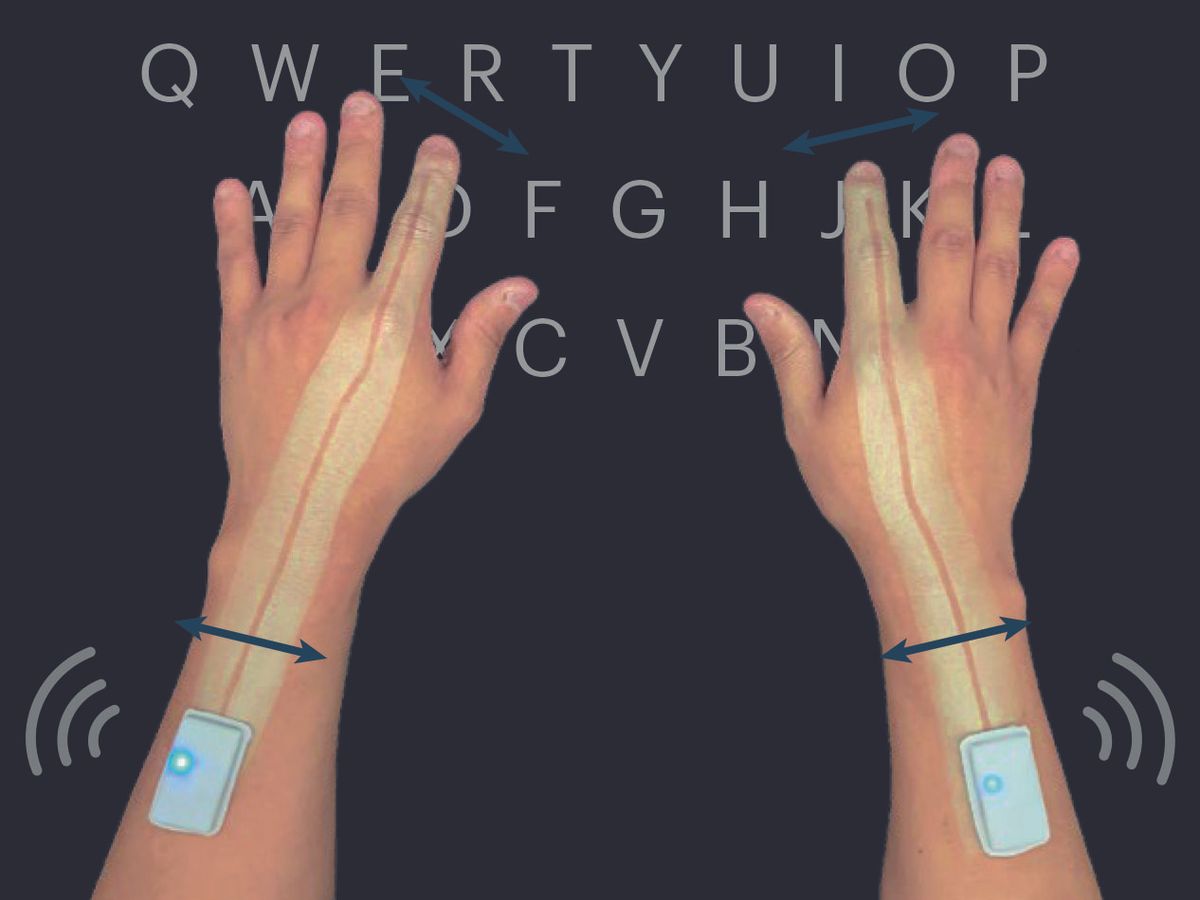

The team directly printed the mesh onto the back of a user’s hand going down the index finger. The nanowire network senses tiny changes to electrical resistance as the skin underneath stretches. As the hand moves, the nanomesh creates unique signal patterns that it wirelessly sends via a lightweight Bluetooth unit to a computer for processing.
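As a rough illustration of the sensing step, the sketch below turns raw resistance readings into a relative change, ΔR/R0, a common way to express a strain sensor's signal. It is only a sketch under stated assumptions: the function name, the choice of the first few samples as the resting baseline, and the readings themselves are hypothetical and do not come from the researchers' system.

```python
def relative_resistance_change(samples_ohms, baseline_count=3):
    """Return (R - R0) / R0 for each sample.

    R0 is the mean of the first `baseline_count` samples, which this
    sketch ASSUMES were taken with the hand at rest (hypothetical choice).
    """
    if baseline_count < 1 or len(samples_ohms) < baseline_count:
        raise ValueError("need at least baseline_count samples")
    r0 = sum(samples_ohms[:baseline_count]) / baseline_count
    return [(r - r0) / r0 for r in samples_ohms]


# Made-up readings in ohms: three at rest, then a finger bend and release.
readings = [100.0, 100.0, 100.0, 101.0, 105.0, 102.0]
signal = relative_resistance_change(readings)
```

Normalizing against a resting baseline keeps the signal comparable between wearers whose printed meshes start at different absolute resistances, which is one plausible reason to work with relative rather than raw values.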

This is where the AI kicks in. A machine-learning system maps the changing patterns in electrical conductivity to specific physical tasks and gestures. The researchers first use random hand and finger motions from three different users to help the AI learn the general correlation between motions.

Then, based on this prior knowledge, the researchers train the system to distinguish between the signal patterns generated from specific tasks such as typing on a phone, two-hand typing on a keyboard, and holding and interacting with six objects of different shapes. Each user performed individual gestures related to the tasks five times to generate a small data set, with which the researchers trained the model. The algorithm learns to recognize when the user is typing a specific letter on the keyboard, for instance, or tracing the sloped surface of a pyramid. In tests, the system was then able to recognize objects held by a new user and sentences that the new user typed on a virtual keyboard.
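To make the idea of learning from five demonstrations concrete, here is a minimal sketch of few-shot classification using a nearest-prototype rule. This is a simple stand-in technique, not the researchers' learning scheme: it assumes each gesture has already been reduced to a short feature vector (in the real system, that role would fall to the model trained on random motions), and the labels and numbers are invented for illustration.

```python
import math


def centroid(vectors):
    """Average a list of equal-length feature vectors, column by column."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]


def fit_prototypes(demos):
    """Build one prototype (mean vector) per gesture label."""
    return {label: centroid(vectors) for label, vectors in demos.items()}


def classify(prototypes, features):
    """Return the label whose prototype is closest in Euclidean distance."""
    return min(prototypes, key=lambda label: math.dist(prototypes[label], features))


# Five hypothetical demonstrations per gesture, two made-up features each.
demos = {
    "key_a": [[0.10, 0.02], [0.11, 0.03], [0.09, 0.02], [0.10, 0.01], [0.12, 0.02]],
    "key_b": [[0.30, 0.20], [0.31, 0.19], [0.29, 0.21], [0.30, 0.22], [0.28, 0.20]],
}
prototypes = fit_prototypes(demos)
prediction = classify(prototypes, [0.29, 0.19])  # closest to the "key_b" prototype
```

A prototype rule needs no gradient updates, so adding a new gesture only means averaging a handful of new demonstrations. The trade-off is that it relies entirely on the quality of the feature vectors it is given.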

“Our learning scheme is not only far more computationally efficient but also versatile, as it can rapidly adapt to different users and tasks with few demonstrations,” Jo says.

Jo adds that they now plan to try placing nanomesh devices on multiple fingers to capture a larger range of hand motions. More sensors will result in a greater amount of data to be analyzed, he says, so the researchers will need to carefully consider the balance between accuracy and reasonable computational workloads for the AI system.

This article appears in the March 2023 print issue as “Spray-on Smart Skin Translates Your Hand Motions.”

Prachi Patel is a freelance journalist based in Pittsburgh. She writes about energy, biotechnology, materials science, nanotechnology, and computing.