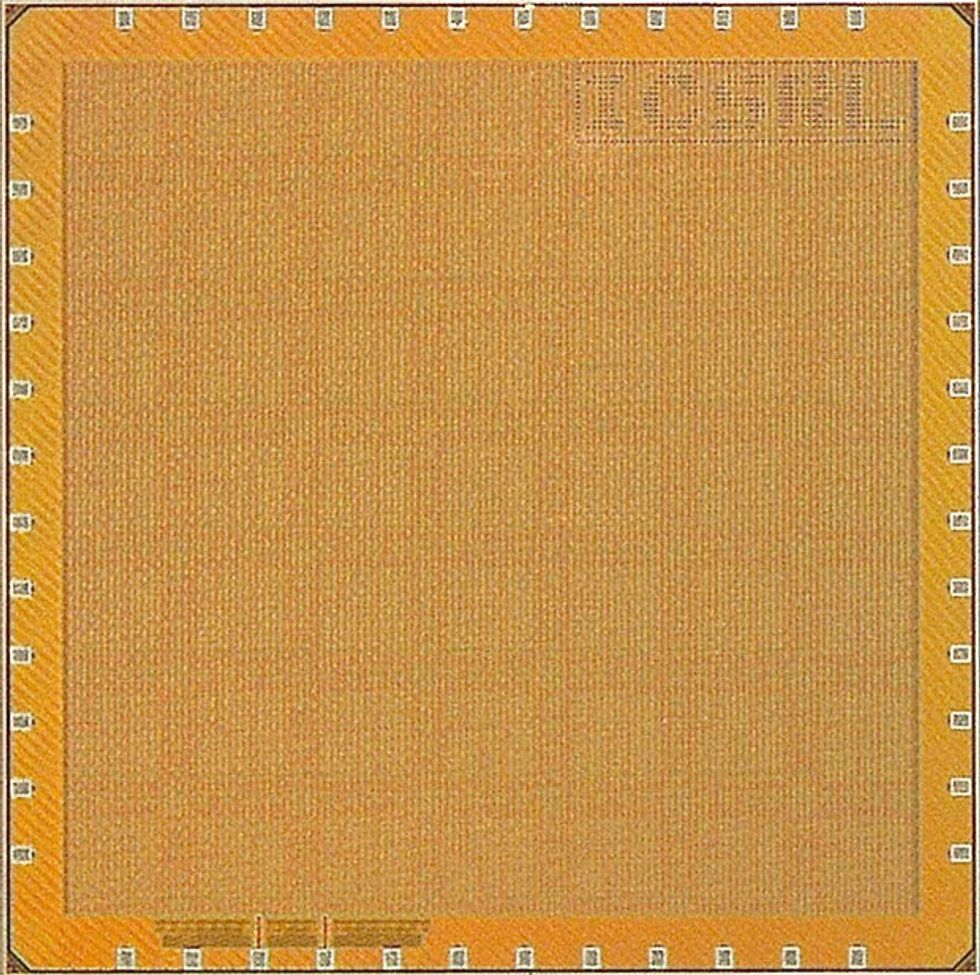

Engineers at Georgia Tech say they’ve come up with a programmable prototype chip that efficiently solves a huge class of optimization problems, including those needed for neural network training, 5G network routing, and MRI image reconstruction. The chip’s architecture embodies a particular algorithm that breaks up one huge problem into many small problems, works on the subproblems, and shares the results. It does this over and over until it comes up with the best answer. Compared to a GPU running the algorithm, the prototype chip—called OPTIMO—is 4.77 times as power efficient and 4.18 times as fast.

The training of machine learning systems and a wide variety of other data-intensive work can be cast as a kind of mathematical problem called constrained optimization. In it, you’re trying to minimize the value of a function under some constraints, explains Georgia Tech professor Arijit Raychowdhury. For example, training a neural net could involve seeking the lowest error rate under the constraint of the size of the neural network.
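In textbook notation, a constrained optimization problem is usually written along the following lines. The symbols here ($f$, $g_i$, $h_j$, $E$, $\theta$, $S_{\max}$) are standard or illustrative choices, not notation taken from the Georgia Tech work:

$$
\min_{x} \; f(x) \quad \text{subject to} \quad g_i(x) \le 0, \quad h_j(x) = 0 .
$$

Read this way, the neural-net example might look roughly like

$$
\min_{\theta} \; E(\theta) \quad \text{subject to} \quad \operatorname{size}(\theta) \le S_{\max},
$$

where $E(\theta)$ stands for the error rate of a network with parameters $\theta$ and $S_{\max}$ is an assumed size budget.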

“If you can accelerate [constrained optimization] using smart architecture and energy-efficient design, you will be able to accelerate a large class of signal processing and machine learning problems,” says Raychowdhury. A 1980s-era algorithm called the alternating direction method of multipliers, or ADMM, turned out to be the solution. The algorithm solves enormous optimization problems by breaking them up into smaller ones and then converging on a solution over several iterations.

“If you want to solve a large problem with a lot of data—say one million data points with one million variables—ADMM allows you to break it up into smaller subproblems,” he says. “You can cut it down into 1,000 variables with 1,000 data points.” Each subproblem is solved and the results incorporated in a “consensus” step with the other subproblems to reach an interim solution. With that interim solution now incorporated in the subproblems, the process is repeated over and over until the algorithm arrives at the optimal solution.
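The split, consensus, and repeat loop described above can be sketched in a few lines of Python. This is a toy illustration of the textbook "consensus" form of ADMM applied to a one-variable least-squares fit, not a description of how OPTIMO implements it. The function name `consensus_admm_slope`, the sample data, the penalty parameter `rho`, and the iteration count are all assumptions made for the example.

```python
def consensus_admm_slope(blocks, rho=100.0, iterations=200):
    """Fit b ~ x * a by consensus ADMM over data blocks; return the shared x.

    blocks: list of lists of (a, b) pairs, one list per subproblem.
    rho and iterations are illustrative choices, not tuned values.
    """
    # Each subproblem only ever needs these two sums of its own data.
    s_aa = [sum(a * a for a, _ in blk) for blk in blocks]
    s_ab = [sum(a * b for a, b in blk) for blk in blocks]

    z = 0.0                    # shared (consensus) estimate
    u = [0.0] * len(blocks)    # one scaled dual variable per subproblem

    for _ in range(iterations):
        # 1. Local step: each block minimizes its own squared error plus a
        #    penalty for straying from the current consensus value.
        x = [(s_ab[i] + rho * (z - u[i])) / (s_aa[i] + rho)
             for i in range(len(blocks))]
        # 2. Consensus step: gather the local answers and average them.
        z = sum(xi + ui for xi, ui in zip(x, u)) / len(blocks)
        # 3. Dual step: record how far each block still is from consensus.
        u = [ui + xi - z for ui, xi in zip(u, x)]
    return z


# Twelve made-up data points near the line b = 2a, split into three blocks.
points = [(float(a), 2.0 * a + (0.1 if a % 2 else -0.1)) for a in range(1, 13)]
blocks = [points[i:i + 4] for i in range(0, len(points), 4)]

x_admm = consensus_admm_slope(blocks)
# Direct least-squares answer on the full data set, for comparison.
x_direct = sum(a * b for a, b in points) / sum(a * a for a, _ in points)
print(abs(x_admm - x_direct))
```

The local step touches only one block's data, which is why the work can be spread across many cores; only the small consensus value and the local answers have to travel between them.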

On a typical CPU or GPU, ADMM is limited because it requires moving a lot of data. So instead, the Georgia Tech group developed a system with a “near-memory” architecture.

“The ADMM framework as a method of solving optimization problems maps nicely to a many-core architecture where you have memory and logic in close proximity with some communications channels in between these cores,” says Raychowdhury.

The test chip was made up of a grid of 49 “optimization processing units,” cores designed to perform ADMM and containing their own high-bandwidth memory. The units were connected to each other in a way that speeds ADMM. Portions of data are distributed to each unit, and they set about solving their individual subproblems. Their results are then gathered, and the data is adjusted and resent to the optimization units to perform the next iteration. The network that connects the 49 units is specifically designed to speed this gather and scatter process.

The Georgia Tech team, which included graduate student Muya Chang and professor Justin Romberg, unveiled OPTIMO at the IEEE Custom Integrated Circuits Conference last month in Austin, Tex.

The chip could be scaled up to do its work in the cloud—adding more cores—or shrunk down to solve problems closer to the edge of the Internet, Raychowdhury says. The main constraint in optimizing the number of cores in the prototype, he jokes, was his graduate students’ time.

A version of this post appears in the July 2019 print issue as “New Silicon Revives An Old Algorithm.”

Samuel K. Moore is the senior editor at IEEE Spectrum in charge of semiconductors coverage. An IEEE member, he has a bachelor's degree in biomedical engineering from Brown University and a master's degree in journalism from New York University.